
Developers

- 1: Architecture

- 2: Microservices

- 2.1: Console

- 2.2: Identity

- 2.3: Inventory

- 2.4: Monitoring

- 2.5: Notification

- 2.6: Statistics

- 2.7: Billing

- 2.8: Plugin

- 2.9: Supervisor

- 2.10: Repository

- 2.11: Secret

- 2.12: Config

- 3: Frontend

- 4: Design System

- 4.1: Getting Started

- 5: Backend

- 6: Plugins

- 6.1: About Plugin

- 6.2: Developer Guide

- 6.2.1: Plugin Interface

- 6.2.2: Plugin Register

- 6.2.3: Plugin Deployment

- 6.2.4: Plugin Debugging

- 6.3: Plugin Designs

- 6.4: Collector

- 7: API & SDK

- 7.1: gRPC API

- 8: CICD

- 8.1: Frontend Microservice CI

- 8.2: Backend Microservice CI

- 8.3: Frontend Core Microservice CI

- 8.4: Backend Core Microservice CI

- 8.5: Plugin CI

- 8.6: Tools CI

- 9: Contribute

- 9.1: Documentation

- 9.1.1: Content Guide

- 9.1.2: Style Guide (shortcodes)

1 - Architecture

1.1 - Micro Service Framework

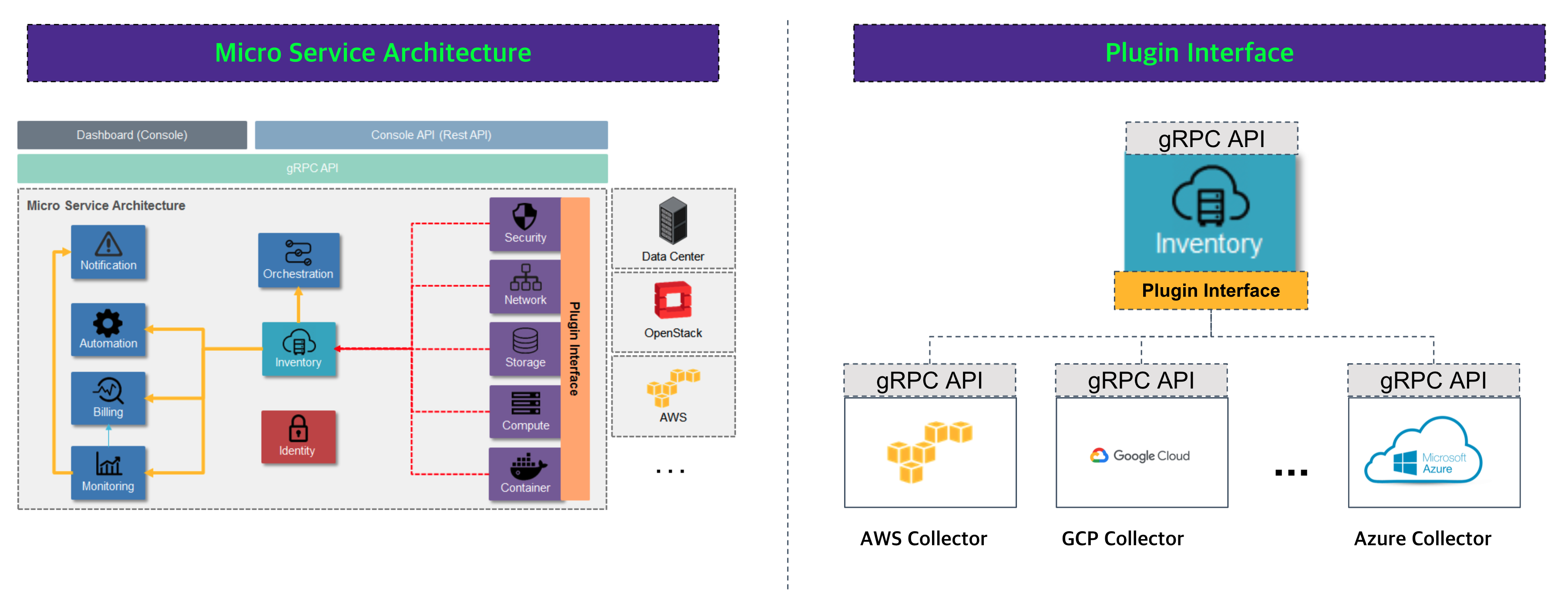

Cloudforet Architecture

Cloudforet is built on a micro service architecture centered on the identity and inventory services. Each micro service provides a plugin interface, so its implementation can be extended flexibly.

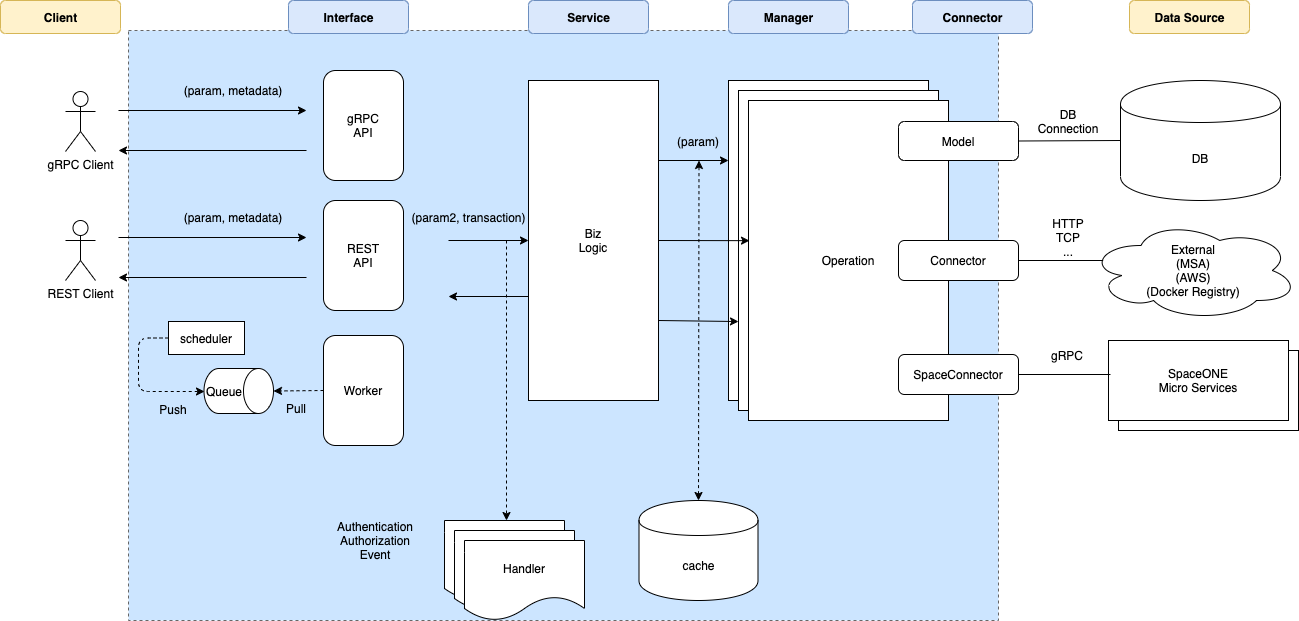

Cloudforet Backend Software Framework

The Cloudforet development team has created its own software framework, similar in spirit to Python Django or Java Spring.

The Cloudforet software framework provides the structure for implementing business logic. Each piece of business logic can expose its service in various ways, such as a gRPC interface, a REST interface, or a periodic task. The framework is organized into the following layers:

| Layer | Description | Base Class | Implementation Directory |
|---|---|---|---|
| Interface | Entry point of service requests | core/api.py | project/interface/{interface type}/ |
| Handler | Pre- and post-processing around the service call | | |
| Service | Business logic of the service | core/service.py | project/service/ |
| Cache | Caching for manager functions (optional) | core/cache/ | |
| Manager | Unit operation for each service function | core/manager.py | project/manager/ |
| Connector | Interface to data sources (e.g., DB, other micro services) | | |
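To illustrate how a request moves through these layers, here is a minimal sketch that does not depend on any framework. The class and function names (HelloConnector, HelloManager, HelloService, GrpcInterface, validation_handler) are hypothetical. They do not reproduce the spaceone-core base classes in core/api.py, core/service.py, or core/manager.py. The sketch only shows each layer delegating to the next one.

```python
from typing import Any, Callable, Dict

Params = Dict[str, Any]


class HelloConnector:
    """Connector layer: talks to a data source (an in-memory dict here)."""

    def __init__(self) -> None:
        self._db = {"alice": "Hello, alice"}

    def get(self, key: str) -> str:
        return self._db.get(key, f"Hello, {key}")


class HelloManager:
    """Manager layer: one unit operation built on top of a connector."""

    def __init__(self, connector: HelloConnector) -> None:
        self.connector = connector
        self._cache: Dict[str, str] = {}  # stands in for the optional Cache layer

    def greet(self, name: str) -> str:
        if name not in self._cache:
            self._cache[name] = self.connector.get(name)
        return self._cache[name]


class HelloService:
    """Service layer: business logic that combines managers."""

    def __init__(self, manager: HelloManager) -> None:
        self.manager = manager

    def say_hello(self, params: Params) -> Params:
        return {"message": self.manager.greet(params["name"])}


def validation_handler(func: Callable[[Params], Params]) -> Callable[[Params], Params]:
    """Handler layer: pre- and post-processing around a service call."""

    def wrapper(params: Params) -> Params:
        if "name" not in params:  # pre-processing: validate the request
            raise ValueError("name is required")
        result = func(params)
        result["handled"] = True  # post-processing: annotate the response
        return result

    return wrapper


class GrpcInterface:
    """Interface layer: entry point that turns a request into a service call."""

    def __init__(self, service: HelloService) -> None:
        self.call = validation_handler(service.say_hello)

    def handle(self, request: Params) -> Params:
        return self.call(request)


api = GrpcInterface(HelloService(HelloManager(HelloConnector())))
print(api.handle({"name": "alice"}))  # {'message': 'Hello, alice', 'handled': True}
```

Under this layering, only the connector knows where data comes from. Swapping the data source therefore leaves the service and interface code untouched.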

Backend Server Type

Depending on its interface type, each micro service runs as one of the following server types:

| Interface type | Description |
|---|---|
| gRPC server | gRPC-based API server that receives requests from the console or the spacectl client |
| rest server | HTTP-based API server, usually receiving requests from external clients such as Grafana |
| scheduler server | Periodic task creation server, e.g., one that creates a task to collect inventory resources every hour |
| worker server | Periodic task execution server that processes the requests coming from the scheduler server |
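The split between scheduler and worker servers is essentially a producer/consumer pattern over a queue. The sketch below uses Python's standard queue.Queue and a thread in place of the Redis queue and separate worker deployment that are configured later in values.yaml. The function names, collector IDs, and task fields are hypothetical.

```python
import queue
import threading
from typing import Dict, List, Optional


def scheduler_tick(q: queue.Queue, collectors: List[str]) -> None:
    """Scheduler server: create one task per collector and push it to the queue."""
    for collector_id in collectors:
        q.put({"name": "collect", "collector_id": collector_id})


def worker_loop(q: queue.Queue, done: List[str]) -> None:
    """Worker server: pull tasks from the queue and run them until a None sentinel arrives."""
    while True:
        task: Optional[Dict[str, str]] = q.get()
        if task is None:
            q.task_done()
            break
        done.append(f"{task['name']}:{task['collector_id']}")  # stand-in for real work
        q.task_done()


q: queue.Queue = queue.Queue()
results: List[str] = []
worker = threading.Thread(target=worker_loop, args=(q, results))
worker.start()

scheduler_tick(q, ["collector-aws", "collector-gcp"])  # e.g. triggered every hour
q.put(None)  # tell the worker to stop after the queued tasks
worker.join()
print(results)  # ['collect:collector-aws', 'collect:collector-gcp']
```

Because the scheduler only enqueues work, you can scale worker servers independently of the scheduler.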

1.2 - Micro Service Deployment

Cloudforet Deployment

Cloudforet is deployed with Helm charts. Each micro service has its own Helm chart, and the top-level chart, spaceone/spaceone, contains all sub-charts such as console, identity, inventory, and plugin.

Cloudforet provides its own Helm chart repository. The repository URL is https://cloudforet-io.github.io/charts.

helm repo add spaceone https://cloudforet-io.github.io/charts

helm repo list

helm repo update

helm search repo -r spaceone

NAME                           CHART VERSION  APP VERSION  DESCRIPTION
spaceone/spaceone              1.8.6          1.8.6        A Helm chart for Cloudforet
spaceone/spaceone-initializer  1.2.8          1.x.y        Cloudforet domain initializer Helm chart for Kube...
spaceone/billing               1.3.6          1.x.y        Cloudforet billing Helm chart for Kubernetes
spaceone/billing-v2            1.3.6          1.x.y        Cloudforet billing v2 Helm chart for Kubernetes
spaceone/config                1.3.6          1.x.y        Cloudforet config Helm chart for Kubernetes
spaceone/console               1.2.5          1.x.y        Cloudforet console Helm chart for Kubernetes
spaceone/console-api           1.1.8          1.x.y        Cloudforet console-api Helm chart for Kubernetes
spaceone/cost-analysis         1.3.7          1.x.y        Cloudforet Cost Analysis Helm chart for Kubernetes
spaceone/cost-saving           1.3.6          1.x.y        Cloudforet cost_saving Helm chart for Kubernetes
spaceone/docs                  2.0.0          1.0.0        Cloudforet Open-Source Project Site Helm chart fo...
spaceone/identity              1.3.7          1.x.y        Cloudforet identity Helm chart for Kubernetes
spaceone/inventory             1.3.7          1.x.y        Cloudforet inventory Helm chart for Kubernetes
spaceone/marketplace-assets    1.1.3          1.x.y        Cloudforet marketplace-assets Helm chart for Kube...
spaceone/monitoring            1.3.15         1.x.y        Cloudforet monitoring Helm chart for Kubernetes
spaceone/notification          1.3.8          1.x.y        Cloudforet notification Helm chart for Kubernetes
spaceone/plugin                1.3.6          1.x.y        Cloudforet plugin Helm chart for Kubernetes
spaceone/power-scheduler       1.3.6          1.x.y        Cloudforet power_scheduler Helm chart for Kubernetes
spaceone/project-site          1.0.0          0.1.0        Cloudforet Open-Source Project Site Helm chart fo...
spaceone/repository            1.3.6          1.x.y        Cloudforet repository Helm chart for Kubernetes
spaceone/secret                1.3.9          1.x.y        Cloudforet secret Helm chart for Kubernetes
spaceone/spot-automation       1.3.6          1.x.y        Cloudforet spot_automation Helm chart for Kubernetes
spaceone/spot-automation-proxy 1.0.0          1.x.y        Cloudforet Spot Automation Proxy Helm chart for K...
spaceone/statistics            1.3.6          1.x.y        Cloudforet statistics Helm chart for Kubernetes
spaceone/supervisor            1.1.4          1.x.y        Cloudforet supervisor Helm chart for Kubernetes

Installation

Cloudforet can be deployed easily with a single Helm chart, spaceone/spaceone.

See https://cloudforet.io/docs/setup_operation/

Helm Chart Code

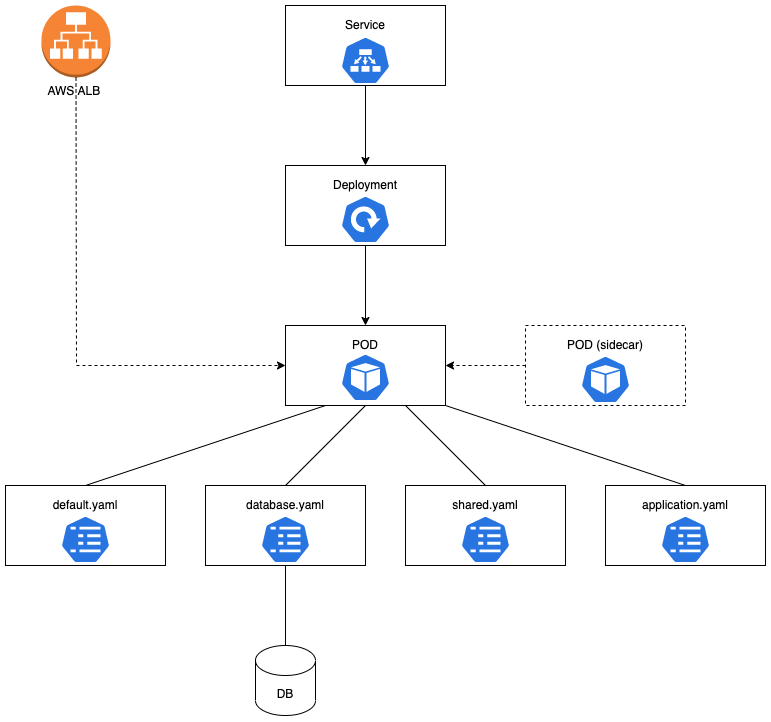

Each repository should provide its own Helm chart.

The code should be located at {repository}/deploy/helm.

Every Helm chart consists of four components:

| File or Directory | Description |
|---|---|
| Chart.yaml | Information about this Helm chart |
| values.yaml | Default values of this Helm chart |
| config (directory) | Default configuration of this micro service |
| templates (directory) | Helm template files |

The directory layout looks like this:

deploy
└── helm
    ├── Chart.yaml
    ├── config
    │   └── config.yaml
    ├── templates
    │   ├── NOTES.txt
    │   ├── _helpers.tpl
    │   ├── application-grpc-conf.yaml
    │   ├── application-rest-conf.yaml
    │   ├── application-scheduler-conf.yaml
    │   ├── application-worker-conf.yaml
    │   ├── database-conf.yaml
    │   ├── default-conf.yaml
    │   ├── deployment-grpc.yaml
    │   ├── deployment-rest.yaml
    │   ├── deployment-scheduler.yaml
    │   ├── deployment-worker.yaml
    │   ├── ingress-rest.yaml
    │   ├── rest-nginx-conf.yaml
    │   ├── rest-nginx-proxy-conf.yaml
    │   ├── service-grpc.yaml
    │   ├── service-rest.yaml
    │   └── shared-conf.yaml
    └── values.yaml

3 directories, 21 files
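Every chart follows the same layout, so a repository can be checked against it automatically. The following sketch assumes the {repository}/deploy/helm path and the four components listed above, and it reports which required entries are missing. The function name is hypothetical and is not part of any Cloudforet tooling.

```python
import tempfile
from pathlib import Path
from typing import List

# The four components every chart under deploy/helm is expected to contain.
REQUIRED_FILES = ["Chart.yaml", "values.yaml"]
REQUIRED_DIRS = ["config", "templates"]


def missing_chart_components(repository: Path) -> List[str]:
    """Return the required chart entries that are missing from {repository}/deploy/helm."""
    chart_dir = repository / "deploy" / "helm"
    missing = [name for name in REQUIRED_FILES if not (chart_dir / name).is_file()]
    missing += [name for name in REQUIRED_DIRS if not (chart_dir / name).is_dir()]
    return missing


# Usage: build a partial chart in a temporary directory and check it.
with tempfile.TemporaryDirectory() as tmp:
    repo = Path(tmp)
    chart = repo / "deploy" / "helm"
    (chart / "templates").mkdir(parents=True)
    (chart / "Chart.yaml").write_text("name: identity\n")
    print(missing_chart_components(repo))  # ['values.yaml', 'config']
```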

The contents of the templates directory differ depending on the micro service type, such as frontend, backend, or supervisor.

Template Samples

Since every backend service uses the same template files, the spaceone repository provides a sample templates directory.

Template Sample URL:

https://github.com/cloudforet-io/spaceone/tree/master/helm_templates

Implementation

values.yaml

The values.yaml file defines the default values used by the templates.

Basic information

###############################
# DEFAULT
###############################
enabled: true
developer: false
grpc: true
scheduler: false
worker: false
rest: false
name: identity
image:
  name: spaceone/identity
  version: latest
imagePullPolicy: IfNotPresent

database: {}

- enabled: true | false; whether to deploy this Helm chart

- developer: true | false; developer mode (recommended: false)

- grpc: true to deploy the gRPC server

- rest: true to deploy the rest server

- scheduler: true to deploy the scheduler server

- worker: true to deploy the worker server

- name: micro service name

- image: Docker image name and version for this micro service

- imagePullPolicy: IfNotPresent | Always

- database: set this to override the default database configuration
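The four boolean flags decide which server types the chart deploys. The Python sketch below assumes two things. First, each enabled type maps to the matching templates/deployment-{server type}.yaml file from the directory layout. Second, only the gRPC and rest servers also get a Service (service-grpc.yaml, service-rest.yaml). It mirrors the intent of the values only; it is not the chart's actual template conditions.

```python
from typing import Any, Dict, List

SERVER_TYPES = ["grpc", "rest", "scheduler", "worker"]


def rendered_templates(values: Dict[str, Any]) -> List[str]:
    """List the deployment/service templates that the given values would enable."""
    if not values.get("enabled", True):
        return []  # the whole chart is skipped
    templates: List[str] = []
    for server_type in SERVER_TYPES:
        if values.get(server_type, False):
            templates.append(f"deployment-{server_type}.yaml")
            if server_type in ("grpc", "rest"):  # only API servers expose a Service
                templates.append(f"service-{server_type}.yaml")
    return templates


defaults = {"enabled": True, "grpc": True, "rest": False, "scheduler": False, "worker": False}
print(rendered_templates(defaults))  # ['deployment-grpc.yaml', 'service-grpc.yaml']
print(rendered_templates({**defaults, "scheduler": True, "worker": True}))
# ['deployment-grpc.yaml', 'service-grpc.yaml', 'deployment-scheduler.yaml', 'deployment-worker.yaml']
```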

Application Configuration

Each server type (gRPC, rest, scheduler, or worker) has its own specific configuration.

application_grpc: {}
application_rest: {}
application_scheduler: {}
application_worker: {}

This section is rendered by templates/application-{server type}-conf.yaml and saved as a ConfigMap.

The Deployment uses this ConfigMap as a volume and mounts it at /opt/spaceone/{service name}/config/application.yaml.

For example, the inventory scheduler server needs QUEUES and SCHEDULERS configuration.

You can configure these easily by adding them under application_scheduler:

application_scheduler:
  QUEUES:
    collector_q:
      backend: spaceone.core.queue.redis_queue.RedisQueue
      host: redis
      port: 6379
      channel: collector
  SCHEDULERS:
    hourly_scheduler:
      backend: spaceone.inventory.scheduler.inventory_scheduler.InventoryHourlyScheduler
      queue: collector_q
      interval: 1
      minute: ':00'
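Values you supply, for example with helm install -f, are merged into the chart's values.yaml. Nested maps are merged key by key, while other values such as lists and scalars are replaced. The sketch below approximates that behavior in Python to show why overriding one nested key, such as image.version, keeps the rest of the defaults. It is a simplified model, not Helm's implementation. For instance, it ignores Helm's rule that setting a key to null deletes the default. The image tag used is hypothetical.

```python
import copy
from typing import Any, Dict


def merge_values(defaults: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively merge overrides into a copy of defaults (maps merge, other values replace)."""
    merged = copy.deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


chart_defaults = {
    "scheduler": False,
    "image": {"name": "spaceone/inventory", "version": "latest"},
    "application_scheduler": {},
}
user_values = {
    "scheduler": True,
    "image": {"version": "1.0.0"},  # hypothetical tag
    "application_scheduler": {
        "QUEUES": {
            "collector_q": {
                "backend": "spaceone.core.queue.redis_queue.RedisQueue",
                "host": "redis",
                "port": 6379,
                "channel": "collector",
            }
        }
    },
}
merged = merge_values(chart_defaults, user_values)
print(merged["image"])  # {'name': 'spaceone/inventory', 'version': '1.0.0'}
print(merged["application_scheduler"]["QUEUES"]["collector_q"]["host"])  # redis
```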

Local sidecar

To add a specific sidecar to this micro service, define it in the sidecar section, which is commented out by default:

# local sidecar
##########################
#sidecar:

Local volumes

Every micro service needs a default timezone and a log directory.

##########################
# Local volumes
##########################
volumes:
  - name: timezone
    hostPath:
      path: /usr/share/zoneinfo/Asia/Seoul
  - name: log-volume
    emptyDir: {}

Global variables

Every micro service needs some of the same configuration or the same sidecars, which are defined as global variables.

#######################
# global variable
#######################
global:
  shared: {}
  sidecar: []

Service

A gRPC or rest server needs a Service.

# Service
service:
  grpc:
    type: ClusterIP
    annotations:
      nil: nil
    ports:
      - name: grpc
        port: 50051
        targetPort: 50051
        protocol: TCP
  rest:
    type: ClusterIP
    annotations:
      nil: nil
    ports:
      - name: rest
        port: 80
        targetPort: 80
        protocol: TCP

volumeMounts

Some micro services may need additional files or configuration. In this case, use volumeMounts, which can attach anything to each deployment.

################################
# volumeMount per deployment
################################
volumeMounts:
  application_grpc: []
  application_rest: []
  application_scheduler: []
  application_worker: []

POD Spec

You can set specific values in the pod spec. For example, use nodeSelector to deploy the pod on a specific Kubernetes worker node.

####################################
# pod spec (append more pod spec)
# example nodeSelect
#
# pod:
#   spec:
#     nodeSelector:
#       application: my-node-group
####################################
pod:
  spec: {}

CI (github action)

To build a Helm chart for this micro service, trigger the Make Helm Chart GitHub Action.

Make Helm Chart

Usually you don't need to build a Helm chart for each micro service, since the spaceone/spaceone top-level chart performs all these steps.

2 - Microservices

2.1 - Console

2.2 - Identity

2.3 - Inventory

2.4 - Monitoring

2.5 - Notification

2.6 - Statistics

2.7 - Billing

2.8 - Plugin

2.9 - Supervisor

2.10 - Repository

2.11 - Secret

2.12 - Config

3 - Frontend

4 - Design System

Overview

In the hyper-competitive software market, design systems have become a big part of a product's success. So we built our design system on the principles below.

A design system increases collaboration and accelerates design and development cycles. Also, a design system is a single source of truth that helps us speak with one voice and vary our tone depending on the situational context.

Principle

User-centered

Design is the "touch point" through which users communicate with the product, and communication between a user and a product is our key concern. We prioritize accessibility, simplicity, and perceivability. We enable familiar interactions that make complex products simple and straightforward to use.

Clarity

Users need to accomplish complex tasks on our multi-cloud platform. We shorten the thinking process by eliminating confusion, for a better user experience. We aim to help users accomplish tasks more simply and to keep them motivated to solve them.

Consistency

Language development is supported by a variety of sensory experiences. We aim for a fully consistent design system and keep improving it through usability checks.

Click the links below to open the resources for Mirinae's development.

Resources

GitHub: Design system repository

Storybook: Component Library

Figma: Preparing For Release

4.1 - Getting Started

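Setting Up the Development Environment

Fork

SpaceONE's console is currently operated as open source.

To contribute, first fork the Design System repository to your personal GitHub account.

Clone

Then clone the forked repository to your local machine.

The assets and translation repositories are used as submodules, so initialize them at the same time.

git clone --recurse-submodules https://github.com/[github username]/spaceone-design-system

cd spaceone-design-system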

Run Storybook

To run Storybook, install the dependencies with npm and then run the script.

npm install --no-save

npm run storybook

Build

To create a deployable zip, run the script below.

npm run build

Storybook

The SpaceONE Design System provides a Storybook.

When you create a component, document its functionality in Storybook.

By default, each component is organized as follows:

- component-name

  - [component-name].stories.mdx

  - [component-name].vue

  - story-helper.ts

  - type.ts

[component-name].stories.mdx and story-helper.ts

These provide the component's description, usage examples, and Playground.

They use the MDX format; refer to its documentation for usage.

For readability, we prefer to write the attributes shown in the Playground, such as props, slots, and events, separately in story-helper.

Chart License

The SpaceONE design system uses amCharts internally for dynamic charts.

Check the amCharts license before using the design system.

To purchase an amCharts license that suits you and use it in your application, see the license FAQ.

Style

For style definitions, SpaceONE Console uses Tailwind CSS and PostCSS.

SpaceONE's color palette is applied through Tailwind customization. (See Storybook for details.)

5 - Backend

6 - Plugins

6.1 - About Plugin

About Plugin

A plugin is a software add-on that is installed on a program, enhancing its capabilities.

The plugin interface pattern consists of two types of architectural components: a core system and plug-in modules. Application logic is divided between independent plug-in modules and the basic core system, which provides extensibility, flexibility, and isolation of application features and custom processing logic.

Why Cloudforet Uses a Plugin Interface

- Cloudforet aims to accommodate various clouds on one platform: multi-cloud, hybrid cloud, or anything else.

- We want Cloudforet to work not only with clouds but also with various IT solutions.

- We want to become a platform that can contain various infrastructure technologies.

- It is difficult to predict the future direction of technology, but we want to be a flexible platform that can adapt to any direction.

Integration Endpoints

| Micro Service | Resource | Description |
|---|---|---|
| Identity | Auth | Single Sign-On support for each specific domain, e.g., OAuth2, Active Directory, Okta, OneLogin |
| Inventory | Collector | Any resource objects for Inventory, e.g., AWS inventory collector |
| Monitoring | DataSource | Metric or log information related to Inventory objects, e.g., CloudWatch, Stackdriver |
| Monitoring | Webhook | Any event from monitoring solutions, e.g., CPU or memory alerts |
| Notification | Protocol | Specific event notifications, e.g., Slack, Email, Jira |

6.2 - Developer Guide

Plugins can be developed in any language that supports Protobuf, because communication between micro services and plugins uses Protobuf by default. The basic structure is the same as developing a server with a gRPC interface.

Although plugins can be written in any language that gRPC supports, development is easier with the Python framework we provide.

All currently provided plugins are built on this in-house Python framework.

For basic usage of the framework, refer to the following.

The following are the basic requirements to check when developing a plugin; each page covers the details step by step.

6.2.1 - Plugin Interface

First, check the interface between the plugin you are developing and the core service. The interface structure differs for each service; you can find the gRPC interface information in the API documentation (SpaceONE API).

For example, suppose we are developing an Auth Plugin for Identity authentication.

The interface information of the Auth Plugin is as follows (SpaceONE API - Identity Auth).

To develop an Identity Auth Plugin, a total of four API interfaces must be implemented.

Of these, init and verify are interfaces that every plugin needs;

the rest depend on the characteristics of each plugin.

Let's take a closer look at init and verify, which every plugin must implement.

1. init

Initializes the plugin.

In the case of Identity, you decide which authentication method to use when creating a domain, and the corresponding Auth Plugin is deployed.

When a plugin is first deployed (or its version is updated), the core service calls the plugin's init API once the plugin container has been created.

The plugin responds with the metadata that the core service needs to communicate with it.

The metadata content differs for each core service.

Below is an example of Python code for the init implementation of an Auth plugin.

The metadata is returned as the return value, populated with the various information that Identity requires.

@transaction
@check_required(['options'])
def init(self, params):
    """ verify options

    Args:
        params
          - options

    Returns:
        - metadata

    Raises:
        ERROR_NOT_FOUND:
    """
    manager = self.locator.get_manager('AuthManager')
    options = params['options']
    options['auth_type'] = 'keycloak'
    endpoints = manager.get_endpoint(options)
    capability = endpoints
    return {'metadata': capability}

2. verify

Checks that the plugin operates normally.

After the plugin is deployed and its init API has been called, the core service runs a check to see whether the plugin is ready to run; the API called at this point is verify.

In the verify step, the plugin checks whether it is ready to operate normally.

Below is an example of Python code for the verify implementation of the Google oAuth2 plugin.

It performs the check using the values required for Google oAuth2 operation.

verify should contain only verification-level code that confirms the plugin is ready before the actual logic runs.

import requests
from spaceone.core.error import ERROR_NOT_FOUND

def verify(self, options):
    # This is a connection check for the Google Authorization Server
    # URL: https://www.googleapis.com/oauth2/v4/token
    # A request without parameters should return 404
    r = requests.get(self.auth_server)
    if r.status_code == 404:
        return "ACTIVE"
    else:
        raise ERROR_NOT_FOUND(key='auth_server', value=self.auth_server)
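The init/verify sequence described above can be summarized from the core service's side. In this sketch, FakeAuthPlugin, PluginNotReady, and deploy_plugin are hypothetical stand-ins. A real core service reaches the plugin over gRPC, and the metadata contents differ for each service.

```python
from typing import Any, Dict


class PluginNotReady(Exception):
    """Raised when a plugin fails its verify check (hypothetical error type)."""


class FakeAuthPlugin:
    """In-process stand-in for a deployed plugin container."""

    def __init__(self, reachable: bool) -> None:
        self.reachable = reachable

    def init(self, params: Dict[str, Any]) -> Dict[str, Any]:
        # Return the metadata the core service needs to talk to this plugin.
        return {"metadata": {"auth_type": params["options"].get("auth_type", "oauth2")}}

    def verify(self, options: Dict[str, Any]) -> str:
        # Confirm the plugin is ready before any real logic runs.
        if not self.reachable:
            raise PluginNotReady("auth server is unreachable")
        return "ACTIVE"


def deploy_plugin(plugin: FakeAuthPlugin, options: Dict[str, Any]) -> Dict[str, Any]:
    """Core-service side: call init after the container starts, then verify."""
    metadata = plugin.init({"options": options})["metadata"]
    state = plugin.verify(options)
    return {"state": state, "metadata": metadata}


print(deploy_plugin(FakeAuthPlugin(reachable=True), {"auth_type": "keycloak"}))
# {'state': 'ACTIVE', 'metadata': {'auth_type': 'keycloak'}}
```

Keeping verify separate from init lets the core service detect a misconfigured plugin, for example an unreachable auth server, before any user request depends on it.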

6.2.2 - Plugin Register

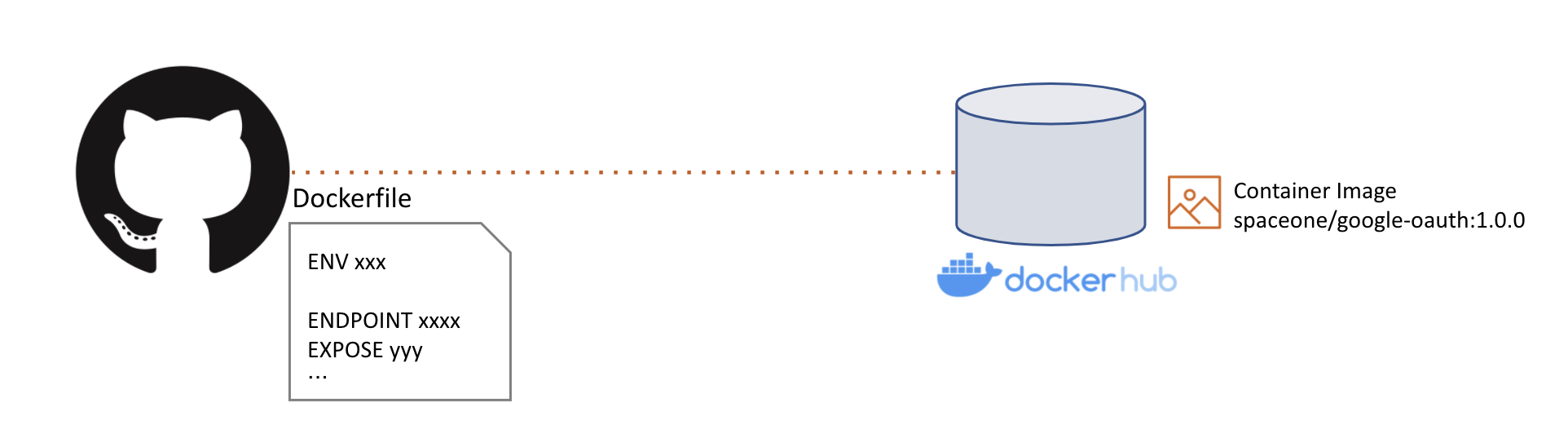

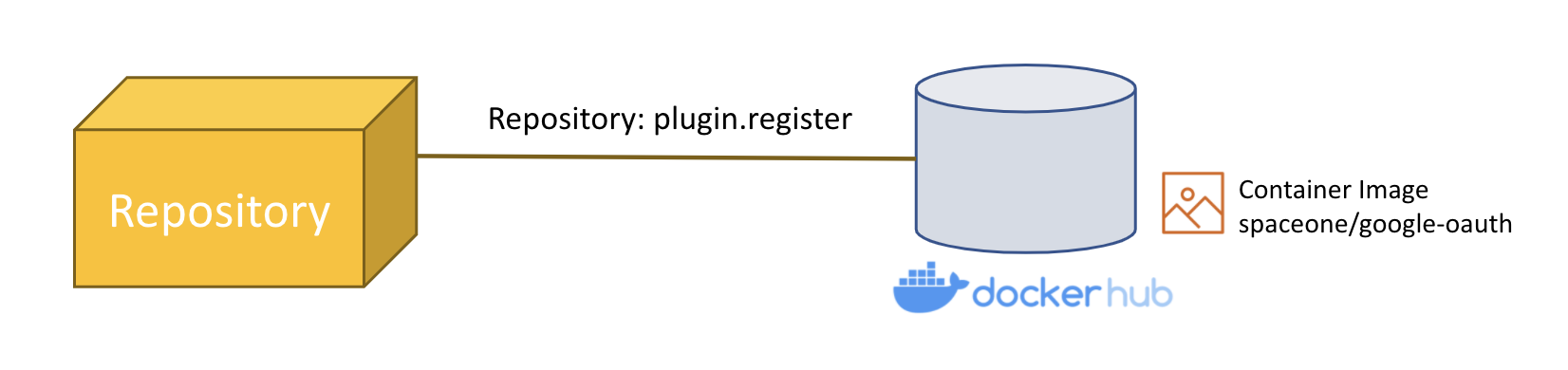

Once plugin development is complete, you need to prepare the plugin for distribution. Since all SpaceONE plugins are distributed as containers, the plugin code must be built as a container image. Build the image with docker build using a Dockerfile, then upload the resulting image to an image repository such as Docker Hub. The image must be uploaded to the image storage managed by the Repository service, one of SpaceONE's micro services.

After uploading the image, register it with the Repository micro service. Registration uses the Repository.plugin.register API (SpaceONE API - (Repository) Plugin.Register).

The example below shows the parameters sent when registering a Notification Protocol Plugin. The image value contains the address of the image built earlier.

name: Slack Notification Protocol
service_type: notification.Protocol
image: pyengine/plugin-slack-notification-protocol_settings
capability:
  supported_schema:
    - slack_webhook
  data_type: SECRET
tags:
  description: Slack
  "spaceone:plugin_name": Slack
  icon: 'https://spaceone-custom-assets.s3.ap-northeast-2.amazonaws.com/console-assets/icons/slack.svg'
provider: slack
template: {}

Image registration is not yet supported in the Web Console, so use the gRPC API directly or use spacectl. After creating a YAML file like the one above, register the image with the spacectl command below.

> spacectl exec register repository.Plugin -f plugin_slack_notification_protocol.yml
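The build, upload, and registration steps can be chained in a small script. This sketch assumes docker and spacectl are on PATH, that you are already logged in to the image registry, and that spacectl is configured. The image name, tag, and YAML file name are placeholders. With dry_run=True (the default), the script only returns the commands it would run.

```python
import subprocess
from typing import List


def release_plugin(image: str, tag: str, register_file: str, dry_run: bool = True) -> List[List[str]]:
    """Build and push a plugin image, then register it with the Repository service."""
    commands = [
        ["docker", "build", "-t", f"{image}:{tag}", "."],  # uses ./Dockerfile
        ["docker", "push", f"{image}:{tag}"],
        ["spacectl", "exec", "register", "repository.Plugin", "-f", register_file],
    ]
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)  # stop at the first failing step
    return commands


for cmd in release_plugin("my-org/plugin-example", "1.0", "plugin_example.yml"):
    print(" ".join(cmd))
```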

When the image is registered in the Repository, you can check it as follows.

> spacectl list repository.Plugin -p repository_id=<REPOSITORY_ID> -c plugin_id,name
plugin_id                                       | name
------------------------------------------------+--------------------------------
plugin-aws-sns-monitoring-webhook               | AWS SNS Webhook
plugin-amorepacific-monitoring-webhook          | Amore Pacific Webhook
plugin-email-notification-protocol_settings     | Email Notification Protocol
plugin-grafana-monitoring-webhook               | Grafana Webhook
plugin-keycloak-oidc                            | Keycloak OIDC Auth Plugin
plugin-sms-notification-protocol_settings       | SMS Notification Protocol
plugin-voicecall-notification-protocol_settings | Voicecall Notification Protocol
plugin-slack-notification-protocol_settings     | Slack Notification Protocol
plugin-telegram-notification-protocol_settings  | Telegram Notification Protocol

Count: 9 / 9

Detailed usage of spacectl is described in Spacectl CLI Tool.

6.2.3 - Plugin Deployment

To actually use a registered plugin, a pod based on the plugin image must be deployed in the Kubernetes environment.

This deployment is performed automatically by the service that uses the plugin.

For example, Notification uses an object called Protocol to deliver generated alerts to users.

The Protocol.create action triggers the installation of the plugin automatically.

The example below shows the Protocol.create parameters for creating a Slack Protocol that sends alerts to Slack from Notification.

---
name: Slack Protocol
plugin_info:
  plugin_id: plugin-slack-notification-protocol_settings
  version: "1.0"
  options: {}
  schema: slack_webhook
tags:
  description: Slack Protocol

In plugin_id, put the ID value of the plugin registered in the Repository,

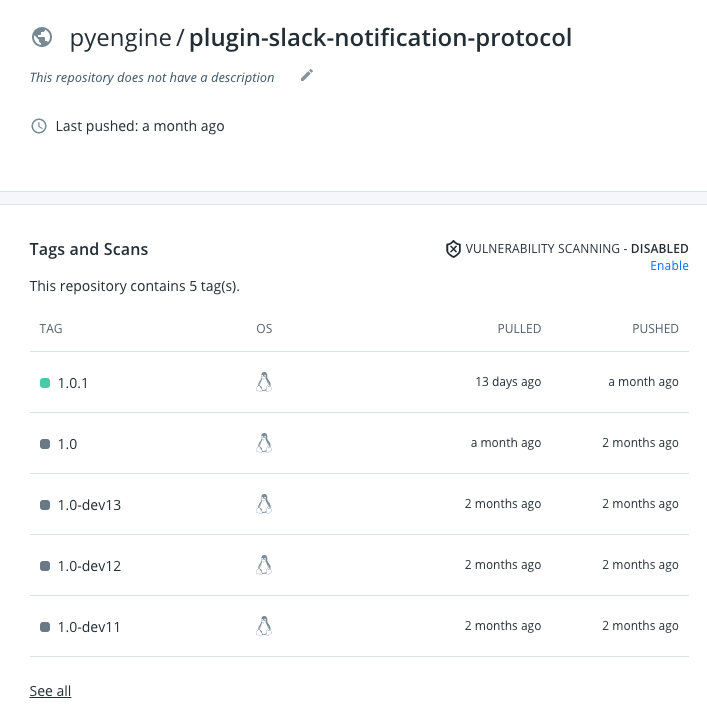

In version, put the image tag information written when uploading the actual image to an image repository such as Dockerhub.

If there are multiple tags in the image repository, the plugin is distributed with the image of the specified tag version.

In the above case, because the version was specified as "1.0"

It is distributed as a "1.0" tag image among the tag information below.
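In other words, the deployed image is the registered image name combined with the requested tag. The helper below sketches that lookup. The in-memory REGISTERED_PLUGINS dict stands in for the Repository service and is hypothetical.

```python
from typing import Dict

# Stand-in for plugins registered in the Repository service (plugin_id -> image).
REGISTERED_PLUGINS: Dict[str, str] = {
    "plugin-slack-notification-protocol_settings": "pyengine/plugin-slack-notification-protocol_settings",
}


def image_reference(plugin_info: Dict[str, str]) -> str:
    """Resolve plugin_info.plugin_id and plugin_info.version to an image:tag reference."""
    image = REGISTERED_PLUGINS[plugin_info["plugin_id"]]  # KeyError if not registered
    return f"{image}:{plugin_info['version']}"


print(image_reference({"plugin_id": "plugin-slack-notification-protocol_settings", "version": "1.0"}))
# pyengine/plugin-slack-notification-protocol_settings:1.0
```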

The API takes some time to respond because it creates and deploys a Service and a Pod in the Kubernetes environment.

You can check the pod deployment in the Kubernetes environment as follows (k is a shell alias for kubectl):

> k get po
NAME READY STATUS RESTARTS AGE
plugin-slack-notification-protocol_settings-zljrhvigwujiqfmn-bf6kgtqz 1/1 Running 0 1m

6.2.4 - Plugin Debugging

Using Pycharm

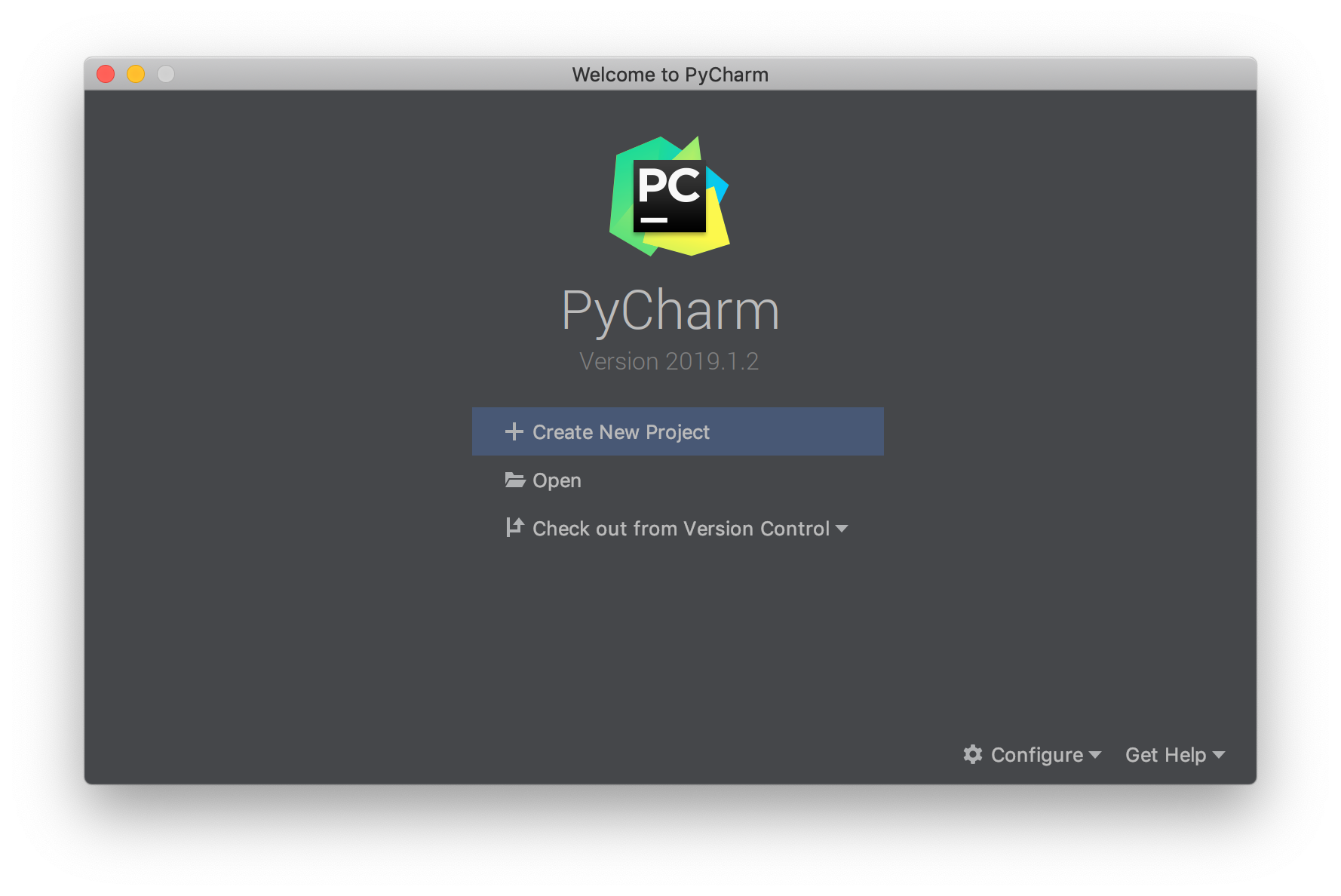

It is recommand to using pycharm(The most popular python IDE) to develop & testing plugins. Overall setting processes are as below.

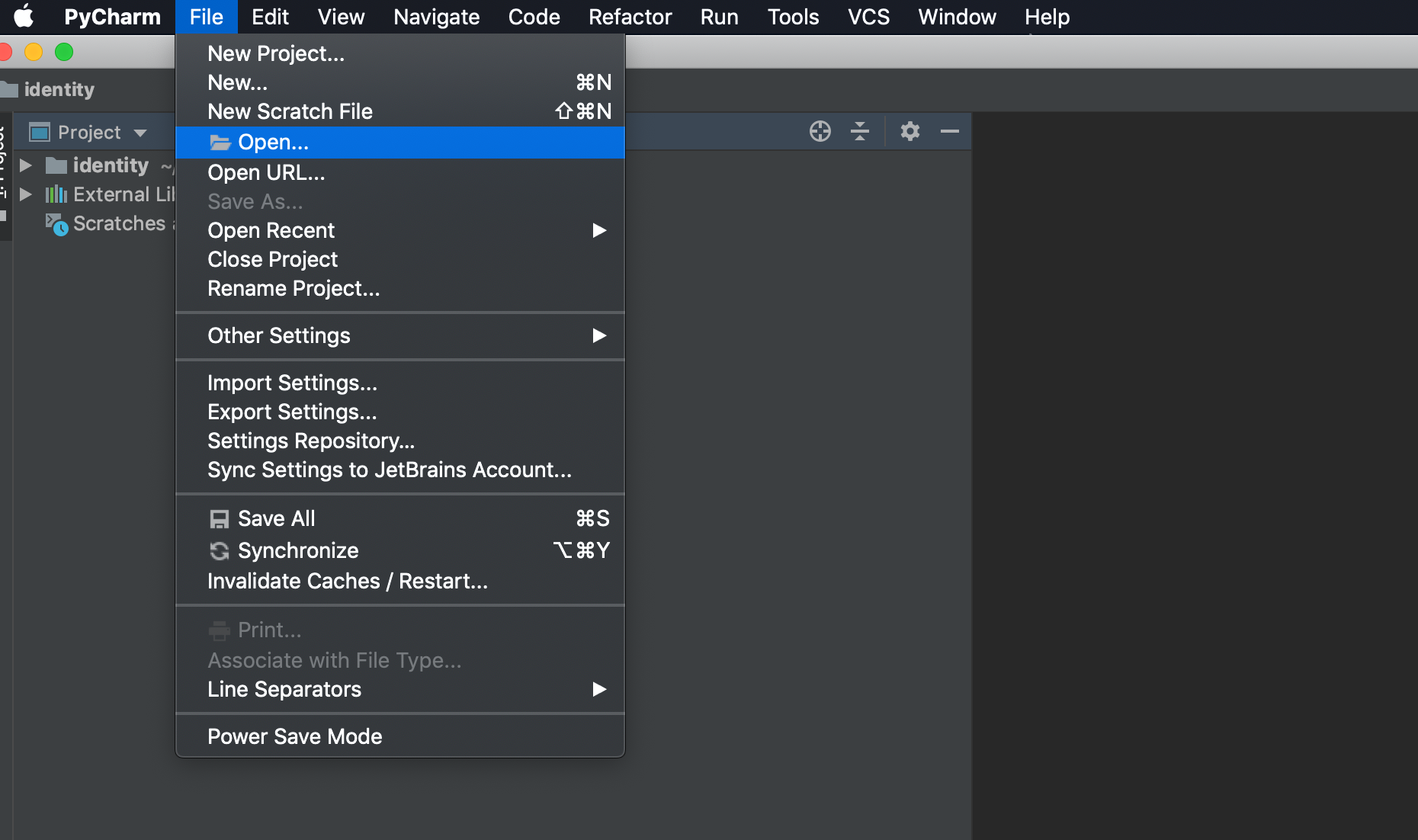

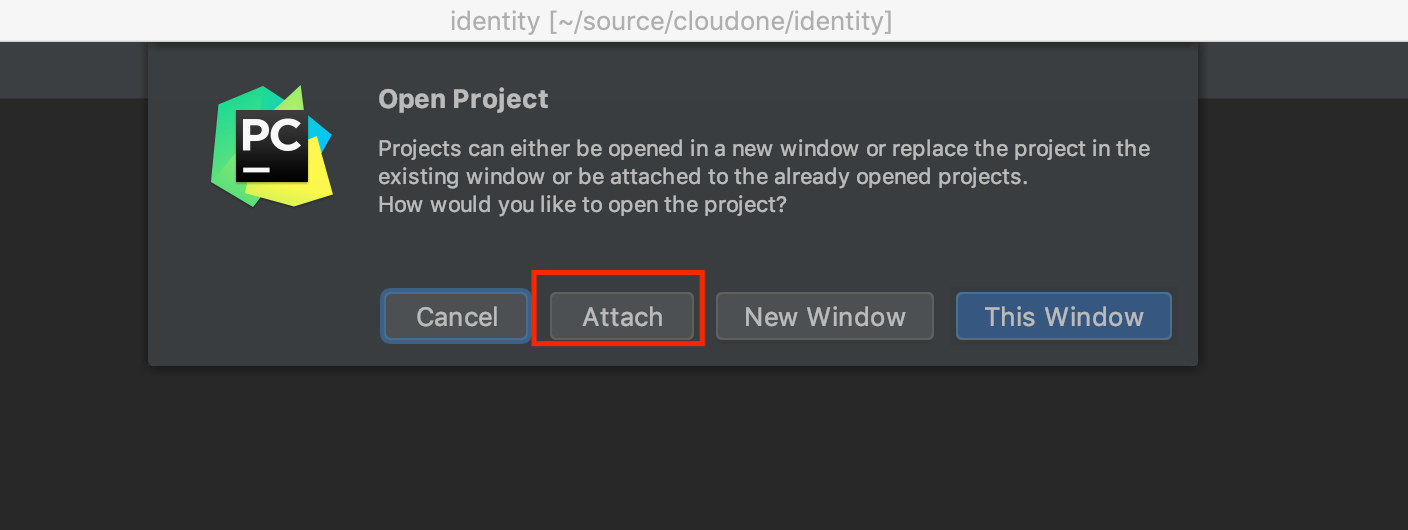

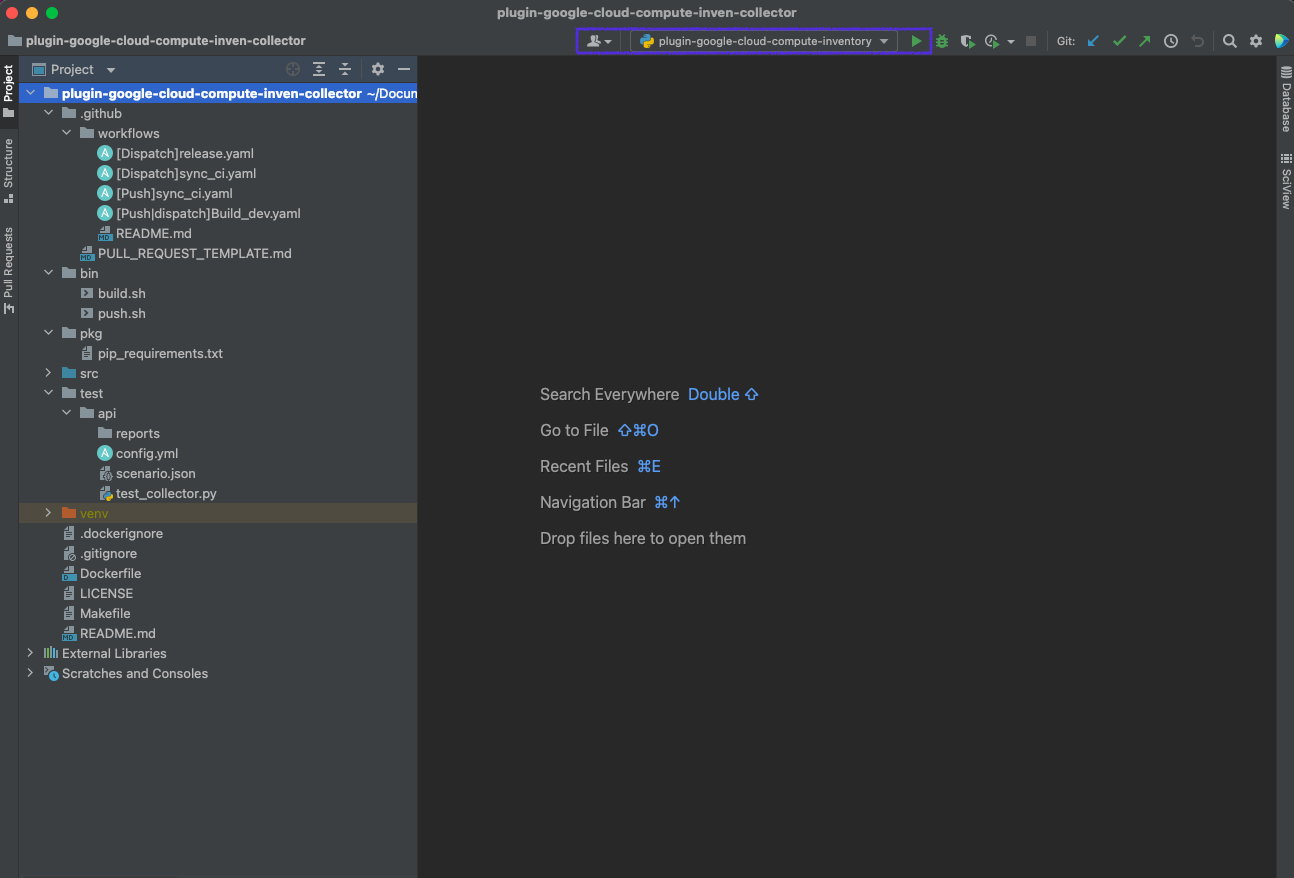

1. Open projects and dependencies

First, open the identity, python-core, and api projects one by one.

Click Open

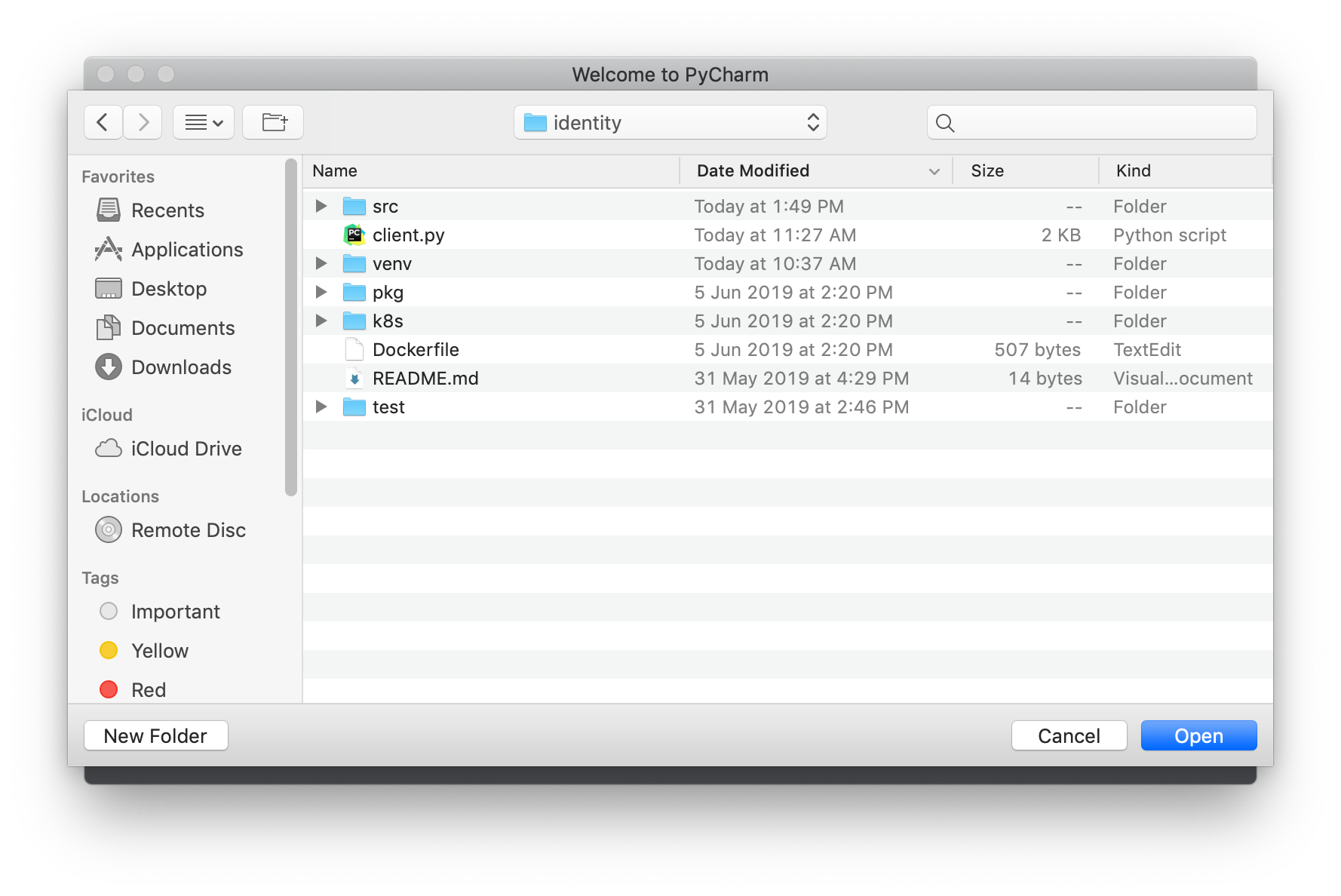

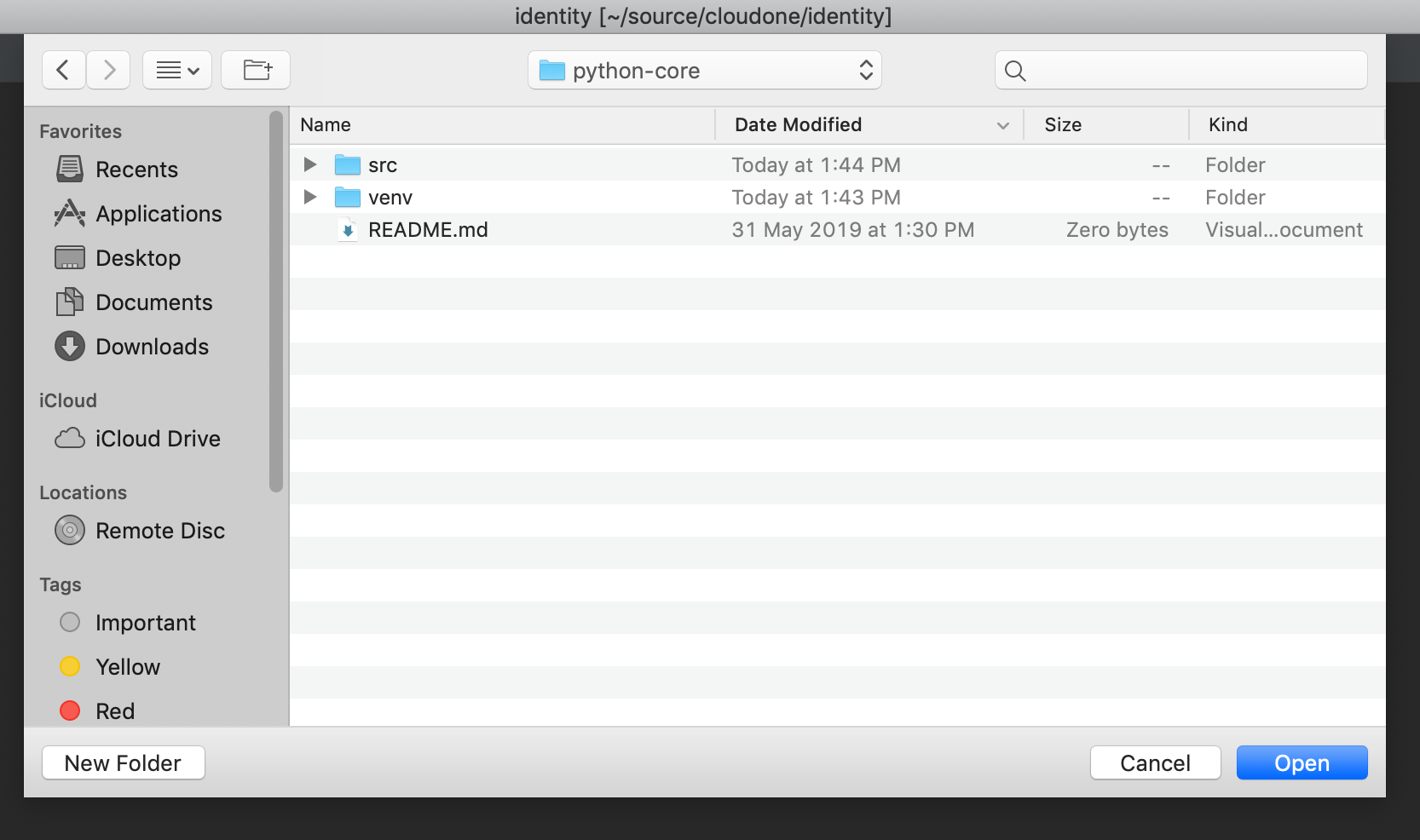

Select your project directory. In this example, '~/source/cloudone/identity'.

Click File > Open, then select each related project one by one. In this example, '~/source/cloudone/python-core'.

Select New Window for each additional project. You may need to repeat this several times if you have multiple projects, e.g., python-core and api.

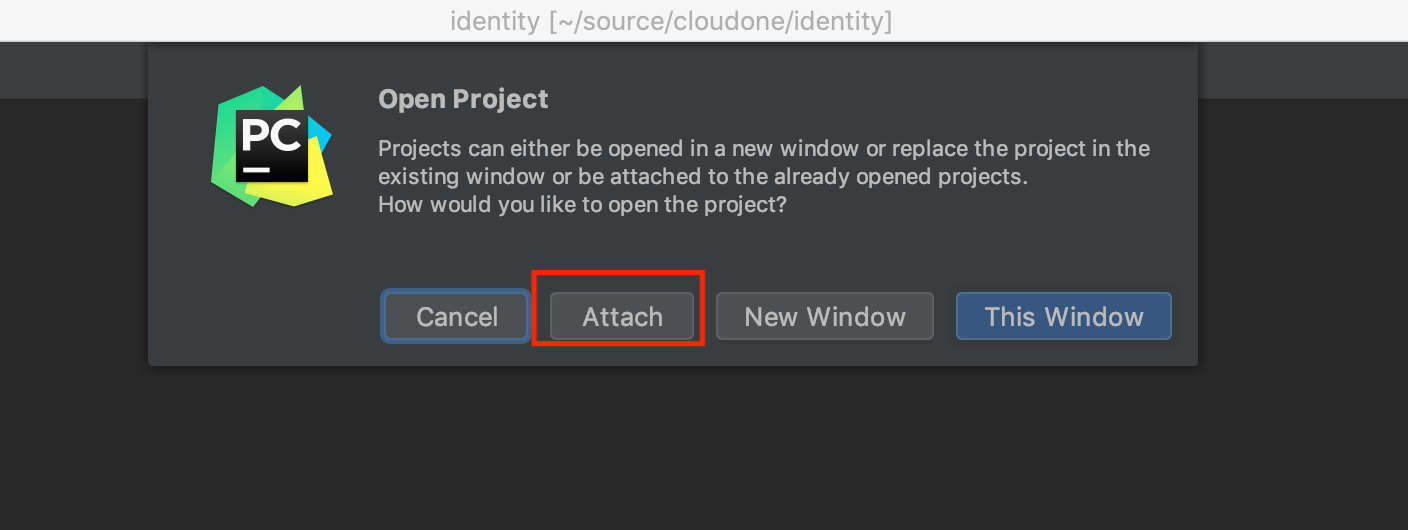

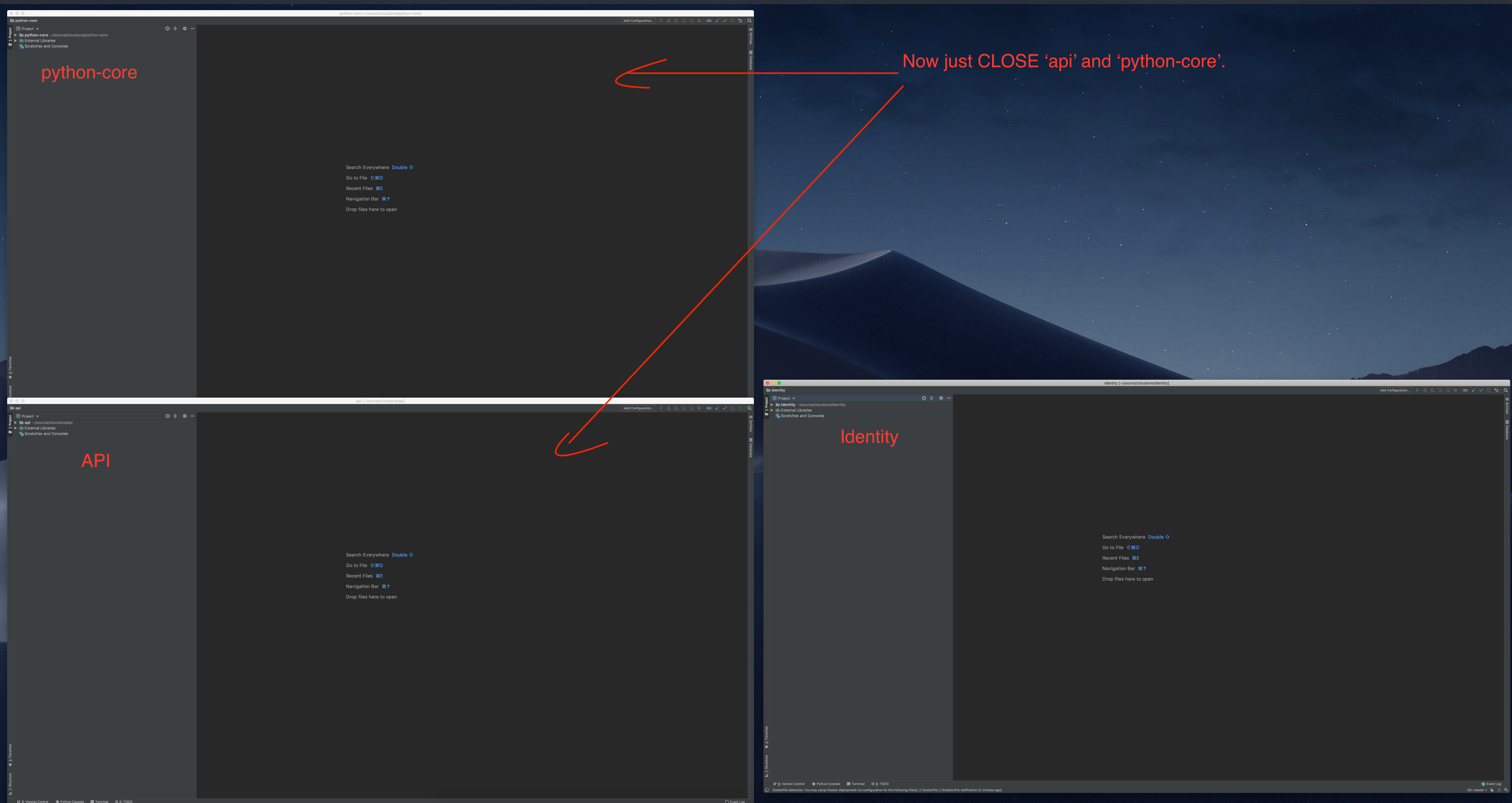

Now there are three windows. Close the python-core and api project windows.

Once a project has been opened at least once, you can attach projects to each other. Let's do this on the identity project: choose Open again and select another project directory, in this example python-core and api.

This time, choose ATTACH to attach it to the identity project.

You can attach a project as a module only if it has been opened at least once.

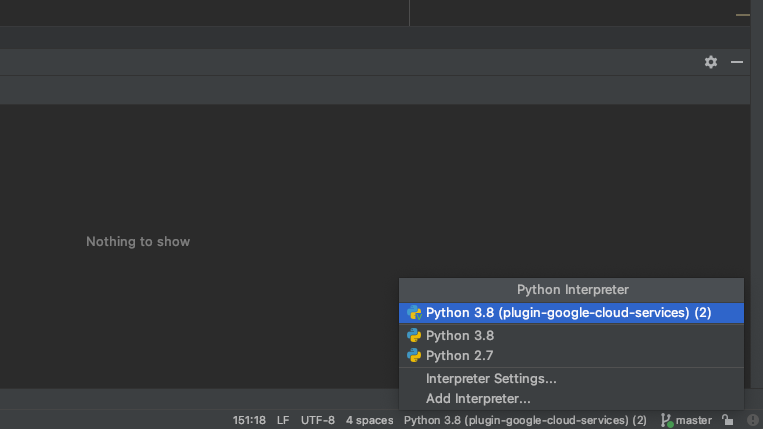

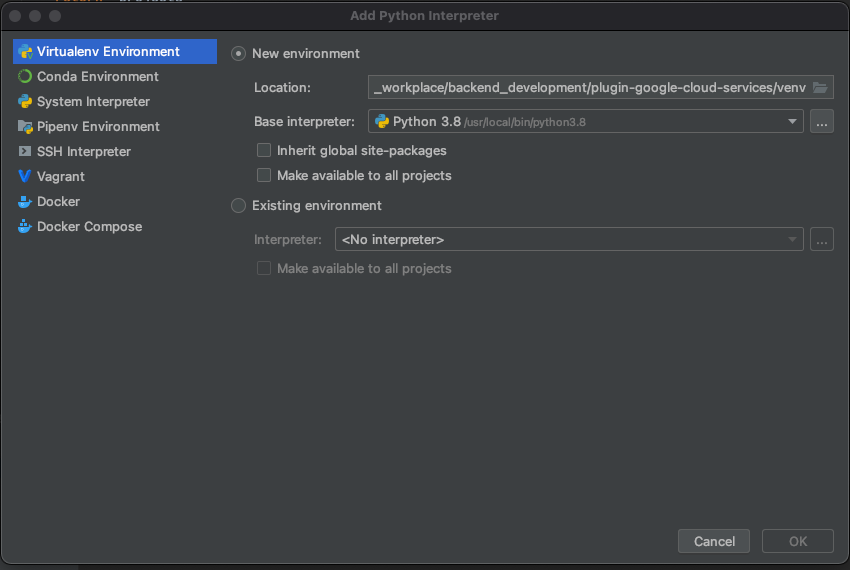

2. Configure Virtual Environment

Add an additional Python interpreter.

Click the virtual environment section.

Set the base interpreter to 'Python 3.8' (Python 3 must be installed beforehand).

Then click 'OK'.

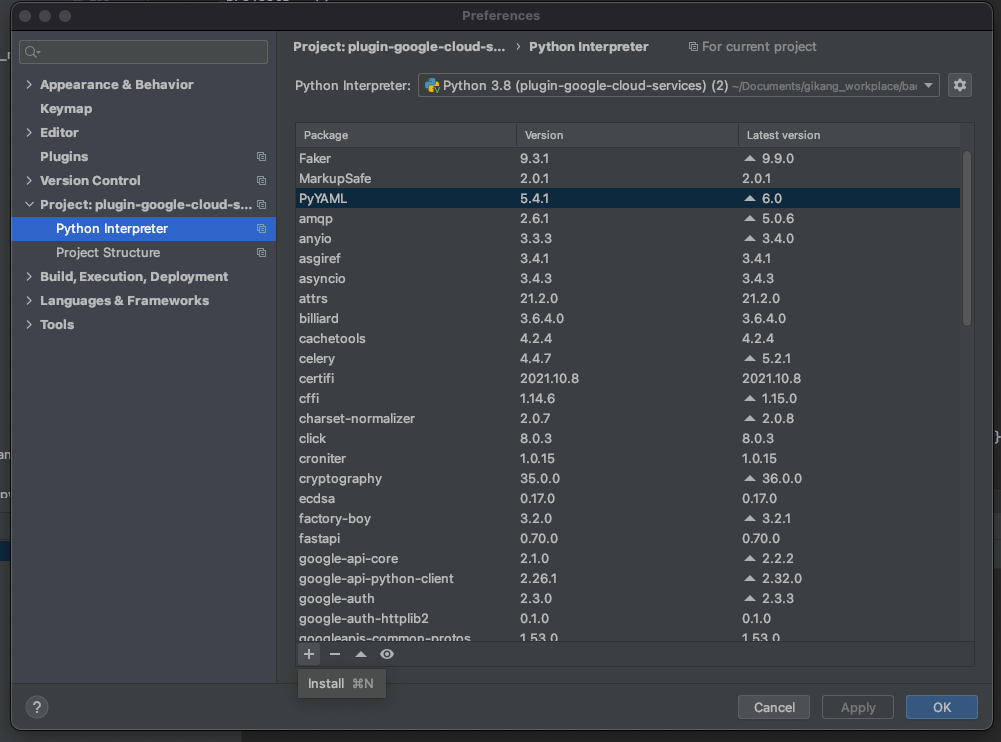

Return to 'Python interpreter > Interpreter Settings..'

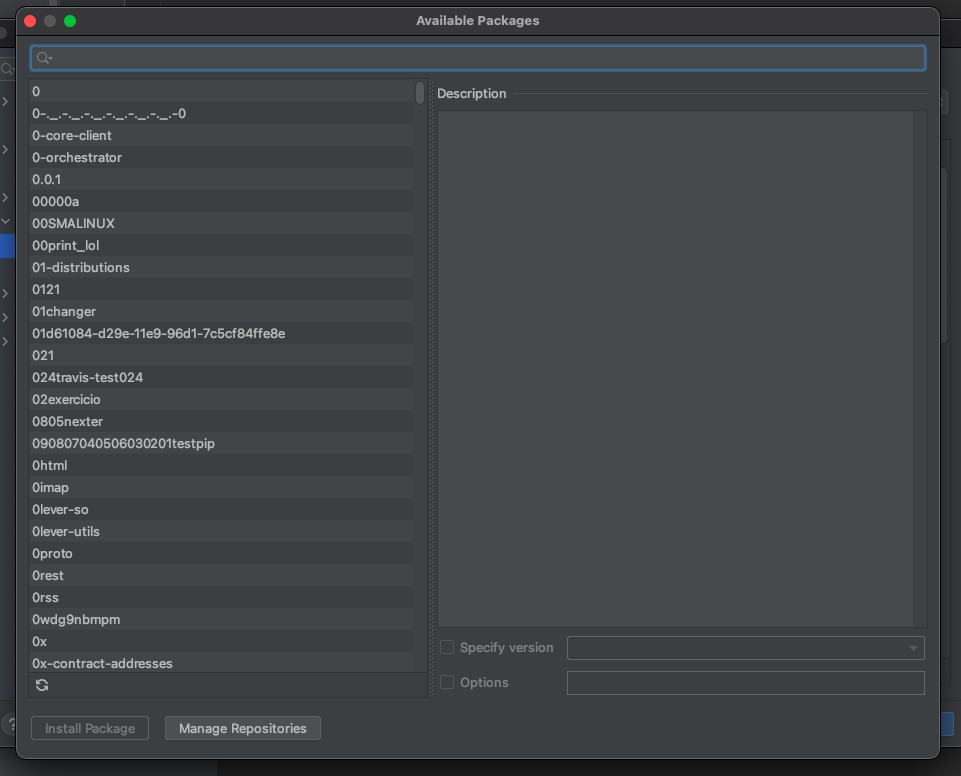

List of installed python package on virtual environment will be displayed

Click '+' button, Then search & click 'Install Package' below

'spaceone-core'

'spaceone-api'

'spaceone-tester'

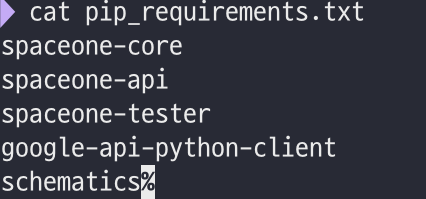

Each repository lists additional libraries in 'pkg/pip_requirements.txt'; you need to install them as well.

Repeat the process above, or install them from the command line:

$> pip3 install -r pip_requirements.txt

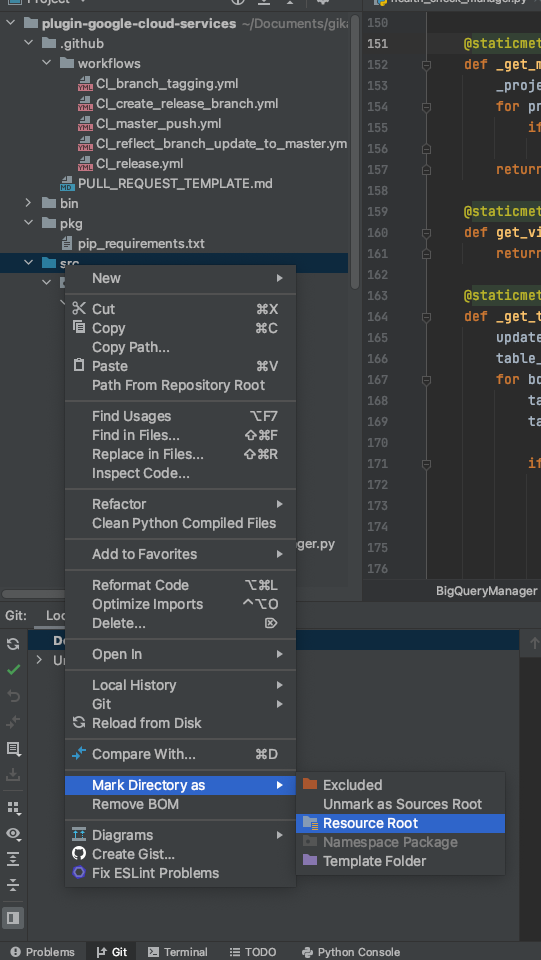

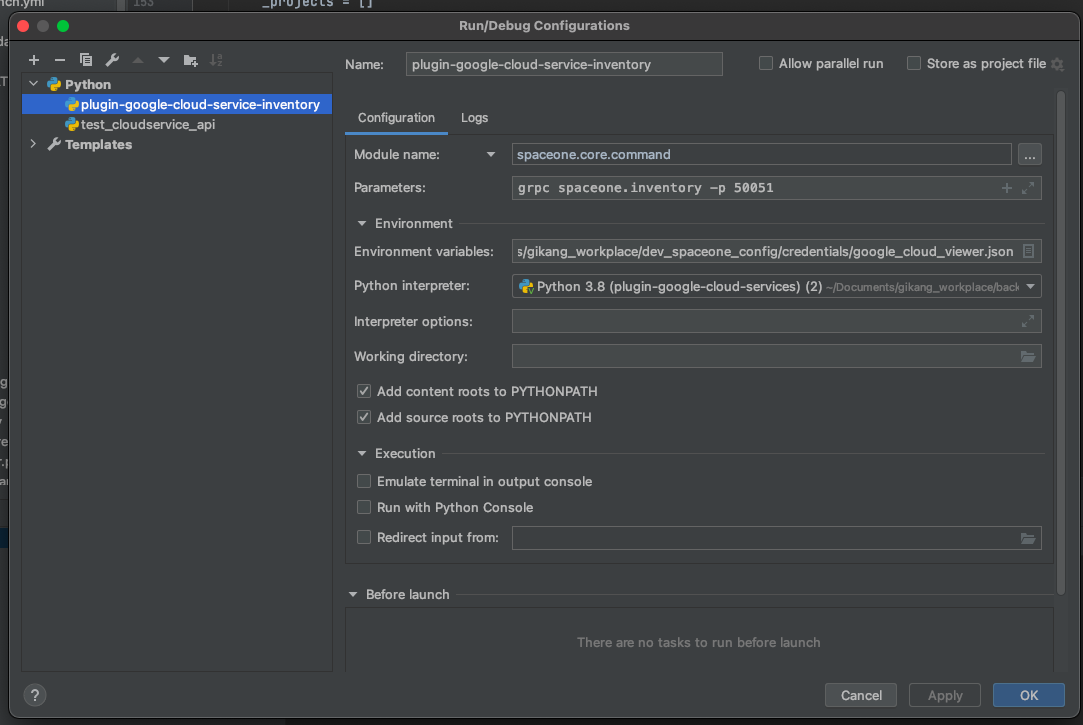

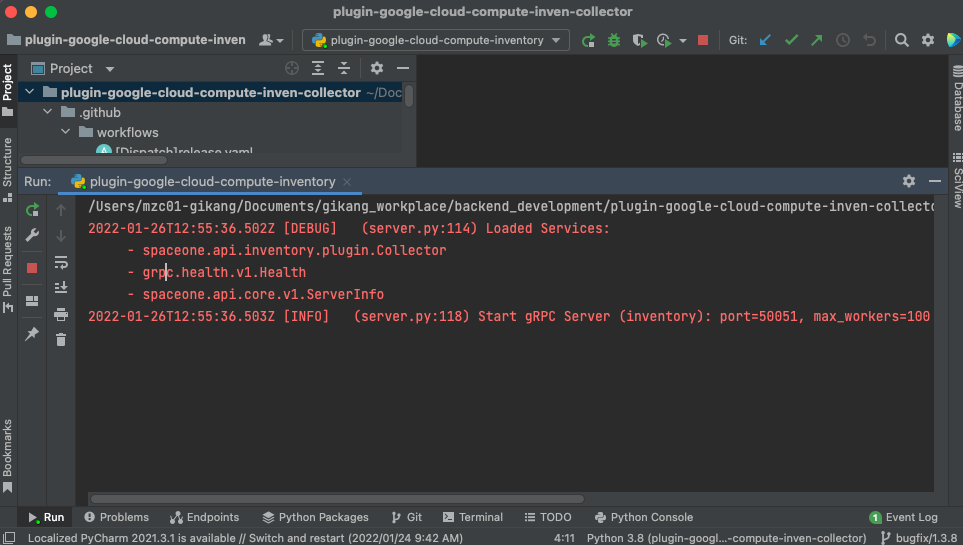

3. Run Server

- Set the source root directory

  - Right-click the 'src' directory and select 'Mark Directory as > Sources Root'

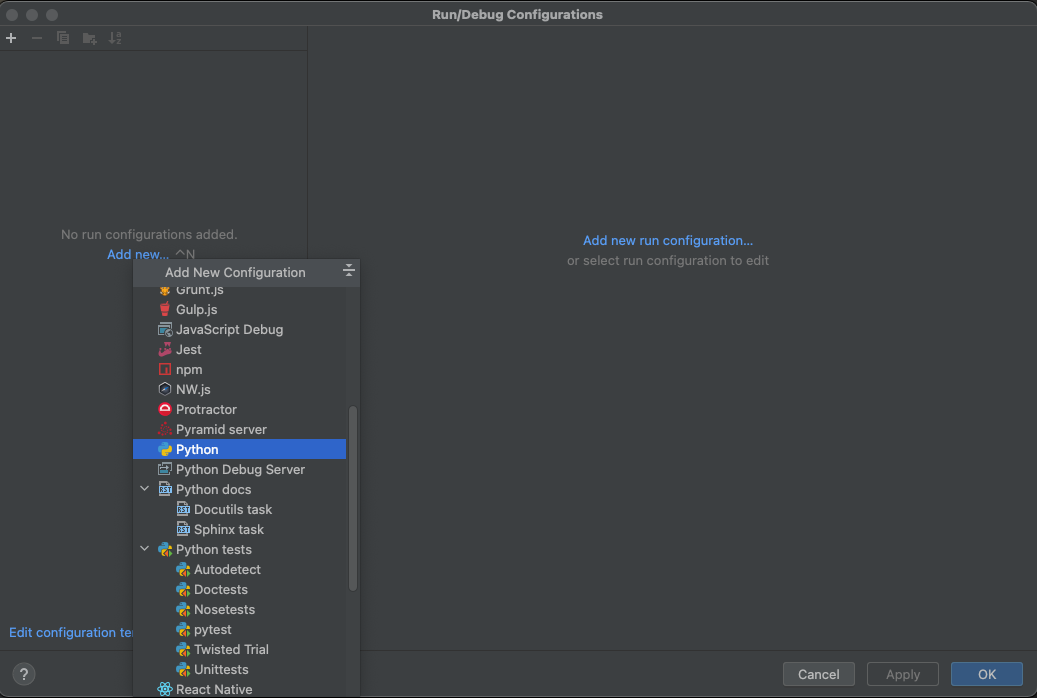

- Set the test server configuration

  - Fill in the test server configuration as below, then click 'OK'

| Item | Configuration | Etc |
|---|---|---|
| Module name | spaceone.core.command | |
| Parameters | grpc spaceone.inventory -p 50051 | The -p option sets the port number (can be changed) |

- Run the test server with the 'play' button in the upper right of the IDE

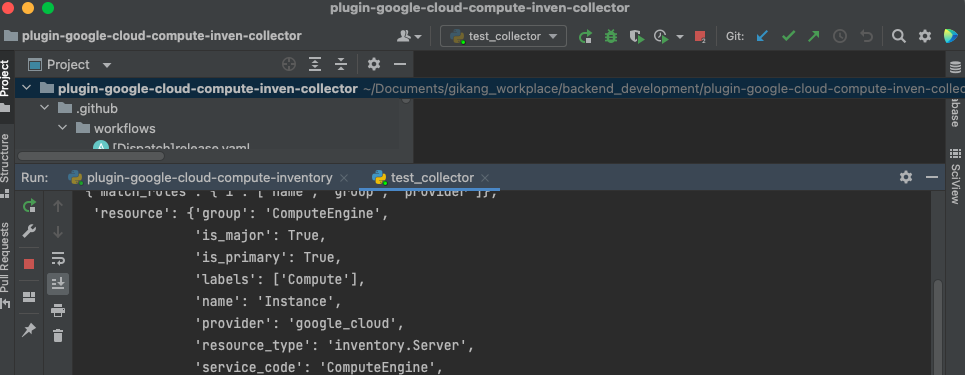

4. Execute Test Code

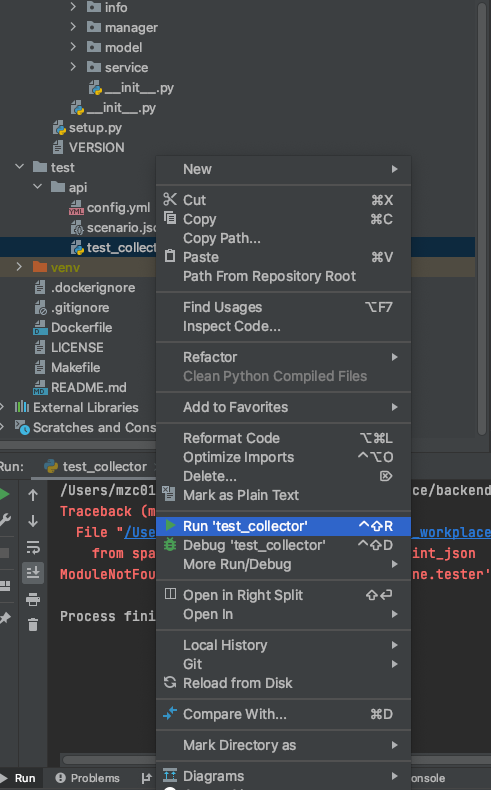

Every plugin repository has its own unit test files in the 'test/api' directory.

- Right-click the 'test_collector.py' file

- Click 'Run 'test_collector''

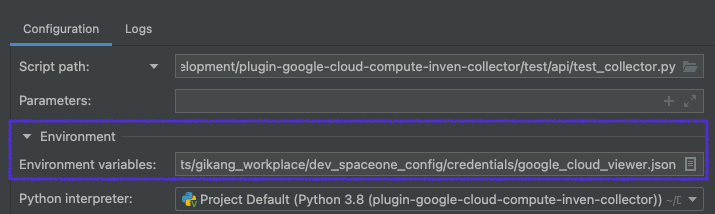

Some plugins need credentials to interface with other services. Create a credential file and set it as an environment variable before running.

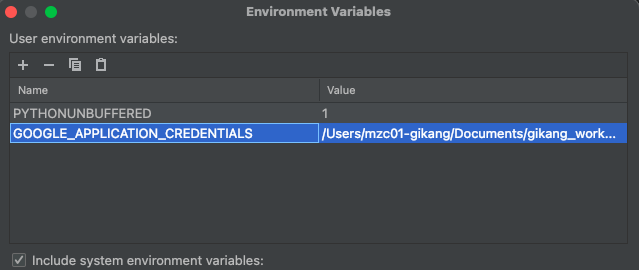

Go to test server configuration > test_server > Edit Configurations

Click Edit variables

Add the environment variables below:

| Item | Configuration | Etc |
|---|---|---|
| PYTHONUNBUFFERED | 1 | |
| GOOGLE_APPLICATION_CREDENTIALS | Full path of your credential file | |

Finally, you can test your server:

1. Run the test server locally.

2. Run the unit tests.
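You can also run the same two steps outside the IDE. The sketch below starts the server with the module and parameters from the run configuration above and sets the same environment variables. It then waits until the gRPC port accepts connections, runs a test command you supply, and stops the server. The test command and the credential path are placeholders; adjust them to how your repository runs its tests.

```python
import os
import socket
import subprocess
import sys
import time
from typing import List


def wait_for_port(host: str, port: int, timeout: float = 30.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            time.sleep(0.2)
    return False


def run_server_and_tests(test_command: List[str], credentials: str, port: int = 50051) -> int:
    """Start the test server, run the given test command against it, and return its exit code."""
    # Same environment variables as the IDE run configuration.
    env = dict(os.environ, PYTHONUNBUFFERED="1", GOOGLE_APPLICATION_CREDENTIALS=credentials)
    # Equivalent of Module name = spaceone.core.command, Parameters = grpc spaceone.inventory -p 50051.
    server = subprocess.Popen(
        [sys.executable, "-m", "spaceone.core.command", "grpc", "spaceone.inventory", "-p", str(port)],
        env=env,
    )
    try:
        if not wait_for_port("localhost", port):
            raise RuntimeError("test server did not start listening")
        return subprocess.run(test_command, env=env).returncode
    finally:
        server.terminate()  # stop the server even if the tests fail
        server.wait()
```

For example, call run_server_and_tests([sys.executable, "test/api/test_collector.py"], "/path/to/credentials.json"). This assumes the test file runs its test cases when it is executed directly.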

Using Terminal

6.3 - Plugin Designs

Inventory Collector

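With the Inventory Collector plugin, anyone from system engineers without specialized development knowledge to professional cloud developers can conveniently collect the cloud asset information they want and manage it systematically. The collected asset information can also be displayed easily in the user UI.

Inventory Collector plugins can be developed on top of the basic modules of SpaceONE's gRPC framework (spaceone-core, spaceone-api). The documents below describe the detailed specifications for each cloud provider.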

AWS

Azure

Google Cloud

Google Cloud External IP Address

Collecting Google Cloud Instance Template

Collecting Google Cloud Load Balancing

Collecting Google Cloud Machine Image

Collecting Google Cloud Snapshot

Collecting Google Cloud Storage Bucket

Collecting Google Cloud VPC Network

Identity Authentication

Monitoring DataSources

Alert Manager Webhook

Notifications

Billing

6.4 - Collector

Add new Cloud Service Type

To add a new Cloud Service Type, implement the following components:

| Component | Source Directory | Description |
|---|---|---|
| model | src/spaceone/inventory/model/skeleton | Data schema |
| manager | src/spaceone/inventory/manager/skeleton | Data merge |
| connector | src/spaceone/inventory/connector/skeleton | Data collection |
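The three components divide the work as the table describes. The connector fetches raw data from the cloud provider, the manager merges it into the data schema defined by the model, and the collector returns the result. The sketch below uses plain dataclasses and hard-coded data. The names SkeletonResource, SkeletonConnector, and SkeletonManager are hypothetical, and they do not reproduce the base classes that real inventory plugins inherit from.

```python
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, Iterator, List


@dataclass
class SkeletonResource:
    """model: data schema of one collected cloud resource."""
    name: str
    region_code: str
    data: Dict[str, Any] = field(default_factory=dict)


class SkeletonConnector:
    """connector: data collection from the provider API (hard-coded here)."""

    def list_resources(self, region: str) -> List[Dict[str, Any]]:
        # A real connector would call the cloud provider's SDK for this region.
        return [{"Name": "bucket-a", "Size": 10}, {"Name": "bucket-b", "Size": 20}]


class SkeletonManager:
    """manager: merge raw connector output into model instances."""

    def __init__(self, connector: SkeletonConnector) -> None:
        self.connector = connector

    def collect(self, regions: List[str]) -> Iterator[SkeletonResource]:
        for region in regions:
            for raw in self.connector.list_resources(region):
                yield SkeletonResource(name=raw["Name"], region_code=region, data={"size": raw["Size"]})


resources = [asdict(r) for r in SkeletonManager(SkeletonConnector()).collect(["us-east-1"])]
print(resources[0])  # {'name': 'bucket-a', 'region_code': 'us-east-1', 'data': {'size': 10}}
```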

Add model

7.1 - gRPC API

Developer Guide

This guide explains how to define a new SpaceONE API specification that extends spaceone-api. Start by cloning the API repository:

git clone https://github.com/cloudforet-io/api.git

Create new API spec file

Create a new API spec file for the new micro service. The file must be located at

proto/spaceone/api/<new service name>/<version>/<API spec file>

For example, the APIs for the inventory service are defined at

proto
└── spaceone
    └── api
        ├── core
        │   └── v1
        │       ├── handler.proto
        │       ├── plugin.proto
        │       ├── query.proto
        │       └── server_info.proto
        ├── inventory
        │   ├── plugin
        │   │   └── collector.proto
        │   └── v1
        │       ├── cloud_service.proto
        │       ├── cloud_service_type.proto
        │       ├── collector.proto
        │       ├── job.proto
        │       ├── job_task.proto
        │       ├── region.proto
        │       ├── server.proto
        │       └── task_item.proto
        └── sample
            └── v1
                └── helloworld.proto

If you create a new micro service called sample, create the directory proto/spaceone/api/sample/v1.

Define API

After creating the API spec file, write the gRPC protobuf definition.

The content consists of two sections:

- service

- messages

service defines the RPC methods, and message defines the request and response data structures.

syntax = "proto3";

package spaceone.api.sample.v1;

// desc: The greeting service definition.
service HelloWorld {
    // desc: Sends a greeting
    rpc say_hello (HelloRequest) returns (HelloReply) {}
}

// desc: The request message containing the user's name.
message HelloRequest {
    // is_required: true
    string name = 1;
}

// desc: The response message containing the greetings
message HelloReply {
    string message = 1;
}
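After the spec is compiled to Python as described in the next part of this guide, a client can call say_hello through the generated stub. This sketch makes three assumptions: the generated package from dist/python is installed together with grpcio, a sample server is listening on localhost:50051, and the stub class is named HelloWorldStub (protoc's Python gRPC plugin derives the name from the HelloWorld service). It uses plain gRPC rather than any SpaceONE client library.

```python
def say_hello(name: str, target: str = "localhost:50051") -> str:
    """Call HelloWorld.say_hello on a running sample server and return the reply message."""
    # Imported lazily so this module loads even before the generated package is installed.
    import grpc
    from spaceone.api.sample.v1 import helloworld_pb2, helloworld_pb2_grpc

    with grpc.insecure_channel(target) as channel:
        stub = helloworld_pb2_grpc.HelloWorldStub(channel)
        reply = stub.say_hello(helloworld_pb2.HelloRequest(name=name), timeout=5)
    return reply.message
```

For example, print(say_hello("Cloudforet")) prints whatever message the sample server puts in HelloReply.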

Build the API spec for a specific language.

Protobuf cannot be used directly; it must be compiled to a target language such as Python or Go.

If you created a new micro service directory, update the Makefile by appending the directory name to TARGET:

TARGET = core identity repository plugin secret inventory monitoring statistics config report sample

Currently, the API supports Python output.

make python

The generated Python output is located in the dist/python directory.

dist
└── python
    ├── setup.py
    └── spaceone
        ├── __init__.py
        └── api
            ├── __init__.py
            ├── core
            │   ├── __init__.py
            │   └── v1
            │       ├── __init__.py
            │       ├── handler_pb2.py
            │       ├── handler_pb2_grpc.py
            │       ├── plugin_pb2.py
            │       ├── plugin_pb2_grpc.py
            │       ├── query_pb2.py
            │       ├── query_pb2_grpc.py
            │       ├── server_info_pb2.py
            │       └── server_info_pb2_grpc.py
            ├── inventory
            │   ├── __init__.py
            │   ├── plugin
            │   │   ├── __init__.py
            │   │   ├── collector_pb2.py
            │   │   └── collector_pb2_grpc.py
            │   └── v1
            │       ├── __init__.py
            │       ├── cloud_service_pb2.py
            │       ├── cloud_service_pb2_grpc.py
            │       ├── cloud_service_type_pb2.py
            │       ├── cloud_service_type_pb2_grpc.py
            │       ├── collector_pb2.py
            │       ├── collector_pb2_grpc.py
            │       ├── job_pb2.py
            │       ├── job_pb2_grpc.py
            │       ├── job_task_pb2.py
            │       ├── job_task_pb2_grpc.py
            │       ├── region_pb2.py
            │       ├── region_pb2_grpc.py
            │       ├── server_pb2.py
            │       ├── server_pb2_grpc.py
            │       ├── task_item_pb2.py
            │       └── task_item_pb2_grpc.py
            └── sample
                ├── __init__.py
                └── v1
                    ├── __init__.py
                    ├── helloworld_pb2.py
                    └── helloworld_pb2_grpc.py
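The two trees follow a simple rule. Each proto/spaceone/api/<service>/<version>/<name>.proto yields <name>_pb2.py (messages) and <name>_pb2_grpc.py (service stubs) under dist/python/spaceone/api/<service>/<version>/. The helper below encodes that rule, which is inferred from the trees above, so you can predict the import path of a new spec. The function name is hypothetical.

```python
from pathlib import PurePosixPath
from typing import Tuple


def generated_modules(proto_path: str) -> Tuple[str, str]:
    """Map a proto spec path to the dotted names of its generated Python modules."""
    path = PurePosixPath(proto_path)
    if path.parts[0] != "proto" or path.suffix != ".proto":
        raise ValueError(f"not a spec under proto/: {proto_path}")
    package = ".".join(path.parent.parts[1:])  # drop the leading 'proto'
    return f"{package}.{path.stem}_pb2", f"{package}.{path.stem}_pb2_grpc"


print(generated_modules("proto/spaceone/api/sample/v1/helloworld.proto"))
# ('spaceone.api.sample.v1.helloworld_pb2', 'spaceone.api.sample.v1.helloworld_pb2_grpc')
```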

References

[Google protobuf] https://developers.google.com/protocol-buffers/docs/proto3

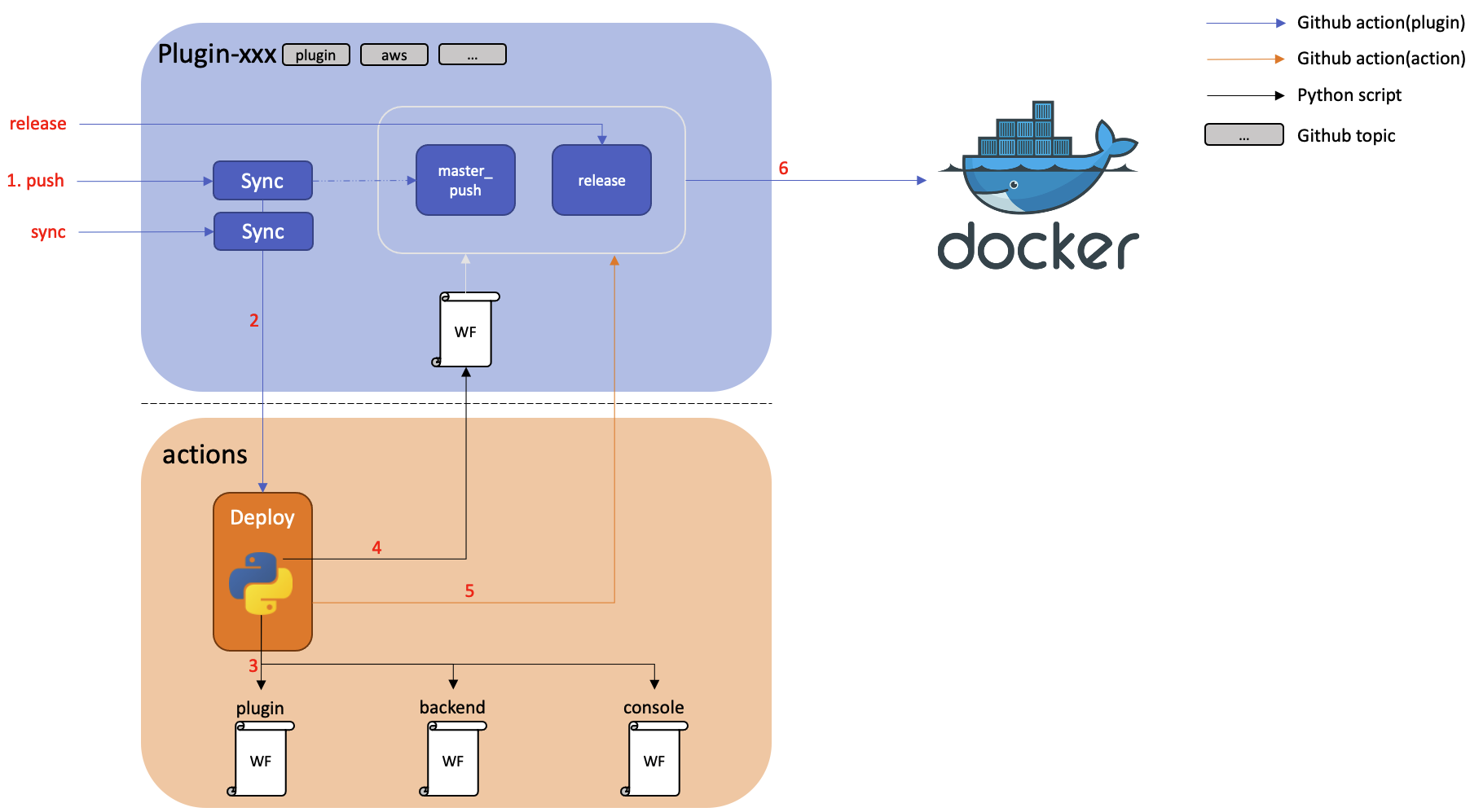

8 - CICD

Actions

Cloudforet has more than 100 repositories, 70 of which are for applications, and these repositories have GitHub Actions workflows.

As a result, it is very difficult to update or run workflows one repository at a time.

To solve this problem, we created Actions.

The diagram below shows the relationship between Actions and repositories.

What does Actions actually do?

Actions is a control tower that manages and deploys github action workflows for Cloudforet's core services.

It can also bulk trigger these workflows when a new version of Cloudforet's core services needs to be released.

1. Manage and deploy GitHub Actions workflows for Cloudforet's core services.

All workflows for Cloudforet's core services are managed in and deployed from this repository.

We write each workflow according to our workflow policy and put it in the workflows directory of Actions.

These workflows can then be deployed to the repositories of Cloudforet's core services.

Our DevOps engineers can modify workflows according to our policy and deploy them in batches using this feature.

The diagram below shows the process for this feature.

*) If you want to see the Actions script that appears in the diagram, see here.

2. Trigger workflows when a new version of Cloudforet's core services needs to be released.

When a new version of Cloudforet's core services is released, we need to trigger the workflow of each repository.

To do this, we created a workflow in Actions that triggers the workflows of each repository.
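As a rough sketch of bulk triggering, GitHub's REST API offers a workflow_dispatch endpoint (POST /repos/{owner}/{repo}/actions/workflows/{workflow_id}/dispatches) that starts any workflow declaring the workflow_dispatch trigger. The Python below assumes only that endpoint. The repository list, the workflow file name release.yaml, and the version input are hypothetical placeholders, not the actual contents of Actions.

```python
import json
import urllib.request

API_ROOT = "https://api.github.com"


def build_dispatch_requests(owner, repos, workflow_file, ref, inputs=None):
    """Return one (url, json_body) pair per repository for a workflow_dispatch call."""
    # workflow_file may be the workflow's file name; input values must be strings.
    body = json.dumps({"ref": ref, "inputs": inputs or {}})
    return [
        (f"{API_ROOT}/repos/{owner}/{repo}/actions/workflows/{workflow_file}/dispatches", body)
        for repo in repos
    ]


def send_dispatch(url, body, token):
    """POST one dispatch request; GitHub answers 204 No Content on success."""
    request = urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
    )
    with urllib.request.urlopen(request) as response:
        return response.status


if __name__ == "__main__":
    # Hypothetical repositories, workflow file, and input, for illustration only.
    for url, body in build_dispatch_requests(
        "cloudforet-io", ["identity", "inventory"], "release.yaml", "master", {"version": "1.10.0"}
    ):
        print(url)  # call send_dispatch(url, body, token) to actually trigger it
```

Building the requests separately from sending them makes it easy to dry-run the whole batch before a real release.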

Reference

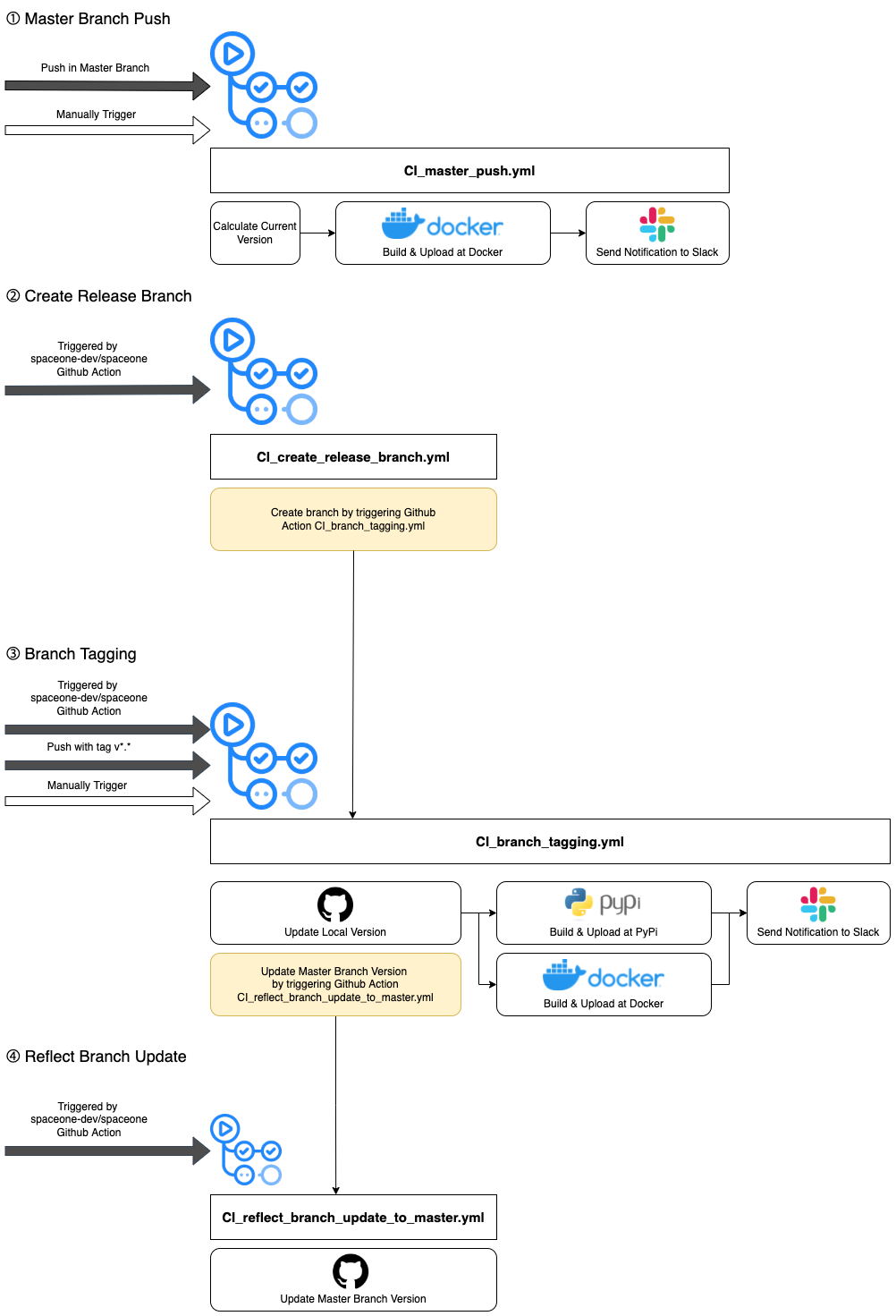

8.1 - Frontend Microservice CI

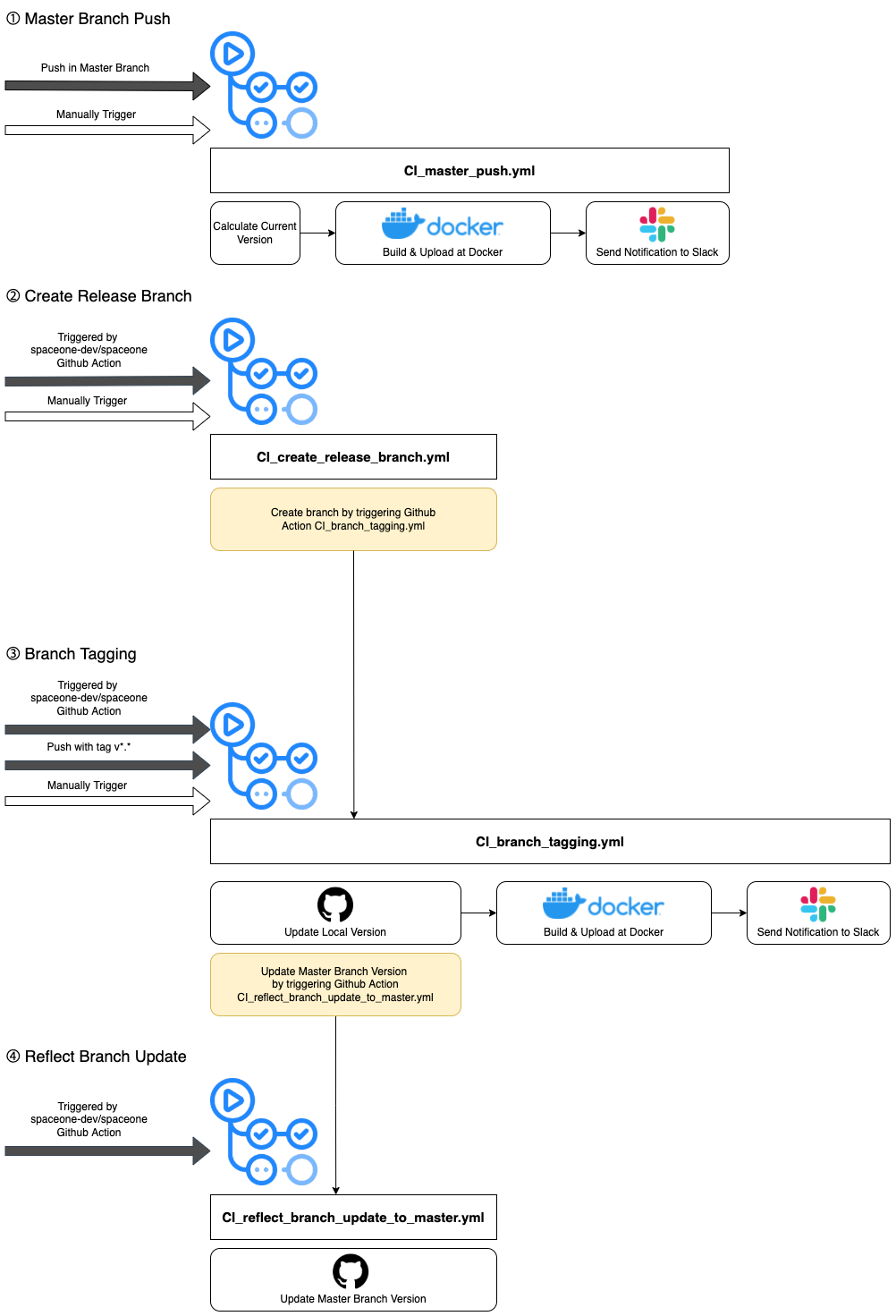

Frontend Microservice CI process details

The flowchart above describes the four .yml GitHub Actions files that make up the CI process of frontend microservices. Unlike backend microservices, frontend microservices are not released as packages, so the branch tagging job does not build or upload an NPM package. Frontend microservices are only built and uploaded to Docker, not to NPM or PyPI.

To check the details, go to the .github/workflows directory of each repository. The link below points to the workflow directory of an example frontend microservice.

- console repository : cloudforet-io/console GitHub workflow file link

8.2 - Backend Microservice CI

Backend Microservice CI process details

The flowchart above describes the four .yml GitHub Actions files that make up the CI process of backend microservices. Most of the workflow is similar to the CI of frontend microservices. However, unlike frontend microservices, backend microservices are released as packages, so the process includes building and uploading a PyPI package.

To check the details, go to the .github/workflows directory of each repository. The link below points to the workflow directory of an example backend microservice.

- identity repository : cloudforet-io/identity GitHub workflow file link

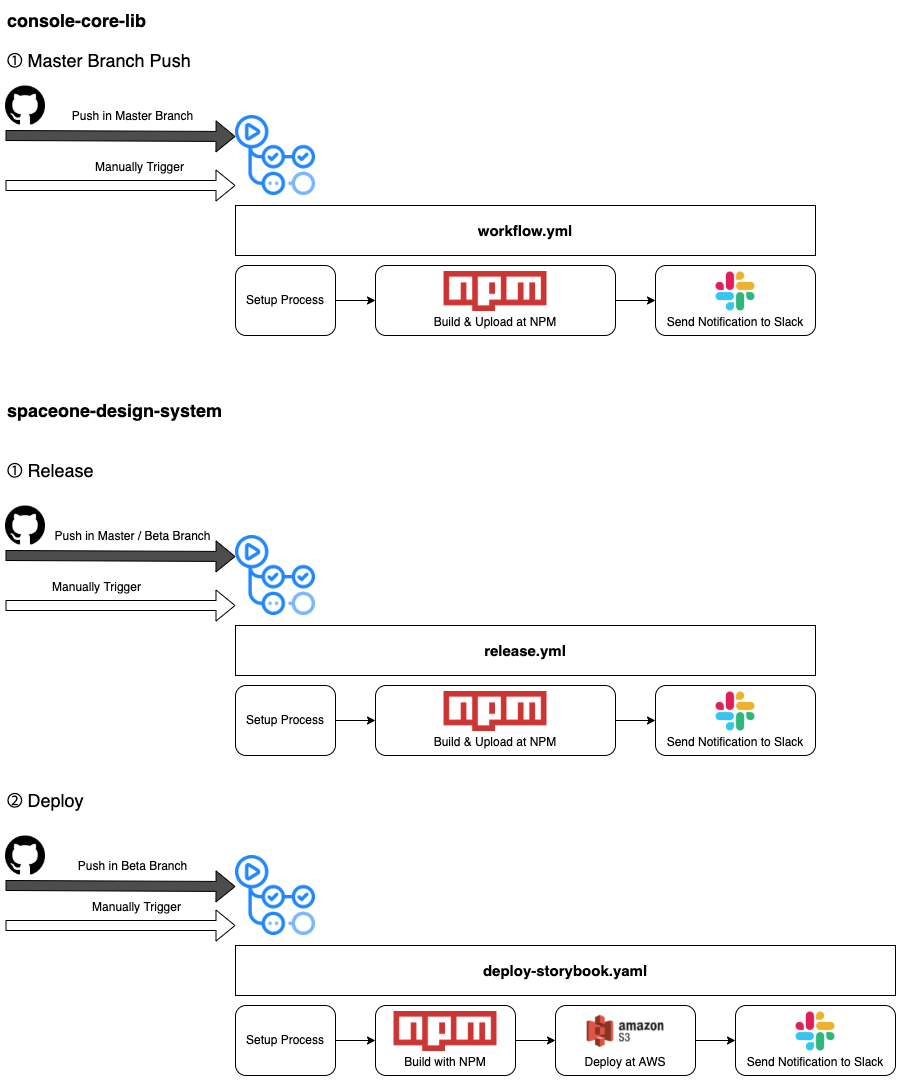

8.3 - Frontend Core Microservice CI

Frontend Core Microservice CI

The code of frontend core microservices is integrated, built, and uploaded following the flow shown above. Most of the workflows include a set-up process: setting up Node.js, caching node modules, and installing dependencies. After the set-up process, each repository's workflow moves on to the build step, which runs with NPM. After the build, the packages of both repositories are released to NPM with the command npm run semantic-release.

See the semantic-release site (npm: semantic-release) for further details about the release process.
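To illustrate how semantic-release decides the next version, here is a simplified Python model of its default Angular commit rules: a BREAKING CHANGE note yields a major release, feat a minor release, and fix or perf a patch release. It assumes that console-core-lib and spaceone-design-system use the default commit-analyzer preset, and it omits other rules such as reverts. It is a teaching model, not semantic-release's own code.

```python
import re

# Simplified subset of semantic-release's default (Angular) release rules.
RANK = {None: 0, "patch": 1, "minor": 2, "major": 3}
TYPE_TO_BUMP = {"feat": "minor", "fix": "patch", "perf": "patch"}
HEADER = re.compile(r"^(\w+)(?:\([^)]*\))?: ")  # e.g. "fix(button): ..."


def commit_bump(message):
    """Return the release type implied by one commit message, or None."""
    if "BREAKING CHANGE" in message:
        return "major"
    match = HEADER.match(message)
    return TYPE_TO_BUMP.get(match.group(1)) if match else None


def next_version(current, messages):
    """Compute the next 'X.Y.Z' from the commits made since the last release."""
    # The highest-ranked bump across all commits wins.
    bump = max((commit_bump(m) for m in messages), key=lambda b: RANK[b], default=None)
    if bump is None:
        return current  # no releasable commits, so no new release
    major, minor, patch = (int(part) for part in current.split("."))
    if bump == "major":
        return f"{major + 1}.0.0"
    if bump == "minor":
        return f"{major}.{minor + 1}.0"
    return f"{major}.{minor}.{patch + 1}"
```

For example, next_version("1.2.3", ["feat: add tooltip", "fix: typo"]) returns "1.3.0", because the feat commit outranks the fix commit.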

Also, unlike other repositories, which are deployed through the flow from Docker to Spinnaker and Kubernetes (k8s), the spaceone-design-system repository is deployed directly to AWS S3.

To check the details, go to the .github/workflows directory of each repository.

- console-core-lib repository : cloudforet-io/console-core-lib GitHub workflow file link

- spaceone-design-system repository : cloudforet-io/spaceone-design-system GitHub workflow file link

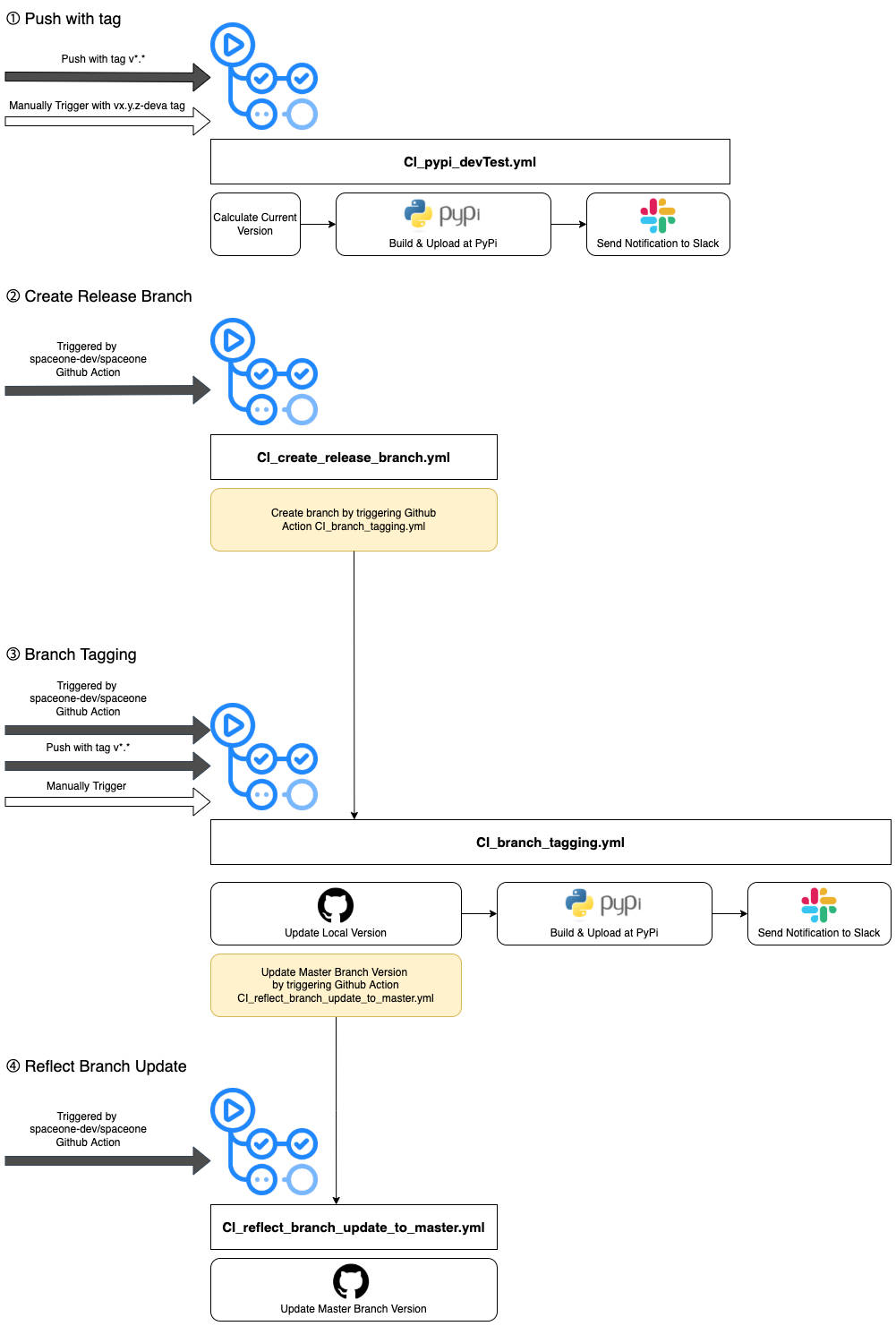

8.4 - Backend Core Microservice CI

Backend Core Microservice CI process details

The diagram above explains the four workflow-related GitHub Actions files of backend core microservices. Unlike other repositories, these repositories have no workflow tasks for master branch pushes. Instead, pushes with tags are monitored and trigger a build of the PyPI package for testing purposes.

Backend core microservices are not built or uploaded to Docker; they are managed only in PyPI.

To check the details, go to the .github/workflows directory of each repository.

- api repository : cloudforet-io/api GitHub workflow file link

- python-core repository : cloudforet-io/python-core GitHub workflow file link

8.5 - Plugin CI

Plugin CI process details

Plugin repositories, whose names start with ‘plugin-’, have a unique CI process managed by a workflow file named push_sync_ci.yaml. Because their overall CI architecture differs from that of other repositories, the workflow files of plugin repositories are automatically updated at every code commit.

The plugin CI process works step by step as follows.

Step 1. push_sync_ci.yaml in each plugin repository is triggered by a push to the master branch or manually.

Step 2. push_sync_ci.yaml runs cloudforet-io/actions/.github/workflows/deploy.yaml.

Step 2-1. cloudforet-io/actions/.github/workflows/deploy.yaml runs cloudforet-io/actions/src/main.py.

cloudforet-io/actions/src/main.py updates the workflow files of each plugin repository based on the repository's characteristics, which are distinguished by topics. The newest versions of all plugin repository workflow files are managed in cloudforet-io/actions.

Step 2-2. cloudforet-io/actions/.github/workflows/deploy.yaml runs push_build_dev.yaml in each plugin repository.

- push_build_dev.yaml sets the version based on the current date.

- push_build_dev.yaml uploads the plugin image to Docker.

- push_build_dev.yaml sends a notification through Slack.
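The date-based versioning step can be sketched as follows. The exact format that push_build_dev.yaml produces is not given here, so the "<base>.dev<YYYYMMDDHHMM>" scheme and the dev_version helper are hypothetical. The tag check encodes Docker's documented rule for image tags: at most 128 characters from [A-Za-z0-9_.-], not starting with "." or "-".

```python
import re
from datetime import datetime, timezone

# Docker image tag rule: 1-128 chars of [A-Za-z0-9_.-], first char not '.' or '-'.
DOCKER_TAG = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")


def dev_version(base, now=None):
    """Hypothetical date-based dev version: '<base>.dev<YYYYMMDDHHMM>' in UTC."""
    now = now or datetime.now(timezone.utc)
    return f"{base}.dev{now:%Y%m%d%H%M}"


def is_valid_docker_tag(tag):
    """True if the string can be used as a Docker image tag."""
    return DOCKER_TAG.fullmatch(tag) is not None
```

Encoding the date down to the minute keeps every development build's tag unique and sortable without a version counter.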

To build and release the Docker image of a plugin repository, plugins use dispatch_release.yaml.

- dispatch_release.yaml in each plugin repository is triggered manually.

- dispatch_release.yaml executes the condition_check job to check the version format and debug.

- dispatch_release.yaml updates the version file on the master branch.

- dispatch_release.yaml executes git tagging.

- dispatch_release.yaml builds the image and pushes it to Docker Hub with docker/build-push-action@v1.

- dispatch_release.yaml sends a notification through Slack.
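A minimal version-format check in the spirit of the condition_check job might look like this. The MAJOR.MINOR.PATCH pattern is an assumption; the real job may accept other forms, such as pre-release suffixes.

```python
import re

# Assumption: release versions look like MAJOR.MINOR.PATCH, e.g. "1.10.0".
RELEASE_VERSION = re.compile(r"(\d+)\.(\d+)\.(\d+)")


def check_version_format(version):
    """Raise ValueError unless version is MAJOR.MINOR.PATCH; return the numbers."""
    match = RELEASE_VERSION.fullmatch(version)  # fullmatch rejects any extra text
    if match is None:
        raise ValueError(f"invalid version format: {version!r}")
    return tuple(int(part) for part in match.groups())
```

Failing fast here stops the later steps (version file update, tagging, and image push) from running with a malformed version.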

For further details, see the cloudforet-io/actions repository on GitHub.
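Step 2-1 above, where main.py updates workflow files based on repository topics, can be sketched as a planning function that decides which templates to copy where. The topic name plugin, the WORKFLOWS_BY_TOPIC mapping, and the workflows/ template directory are hypothetical. Only the three workflow file names come from this section.

```python
from pathlib import PurePosixPath

# Hypothetical mapping from a GitHub repository topic to workflow templates.
WORKFLOWS_BY_TOPIC = {
    "plugin": ["push_sync_ci.yaml", "push_build_dev.yaml", "dispatch_release.yaml"],
}


def plan_workflow_sync(repo, topics):
    """List (template, destination) paths to copy into one repository."""
    selected = []
    for topic in sorted(topics):  # sorted for a deterministic order
        for name in WORKFLOWS_BY_TOPIC.get(topic, []):
            if name not in selected:  # a file shared by two topics is copied once
                selected.append(name)
    return [
        (PurePosixPath("workflows") / name,
         PurePosixPath(repo) / ".github" / "workflows" / name)
        for name in selected
    ]
```

Returning a plan instead of copying files directly lets the same logic be reviewed or dry-run before any repository is changed.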

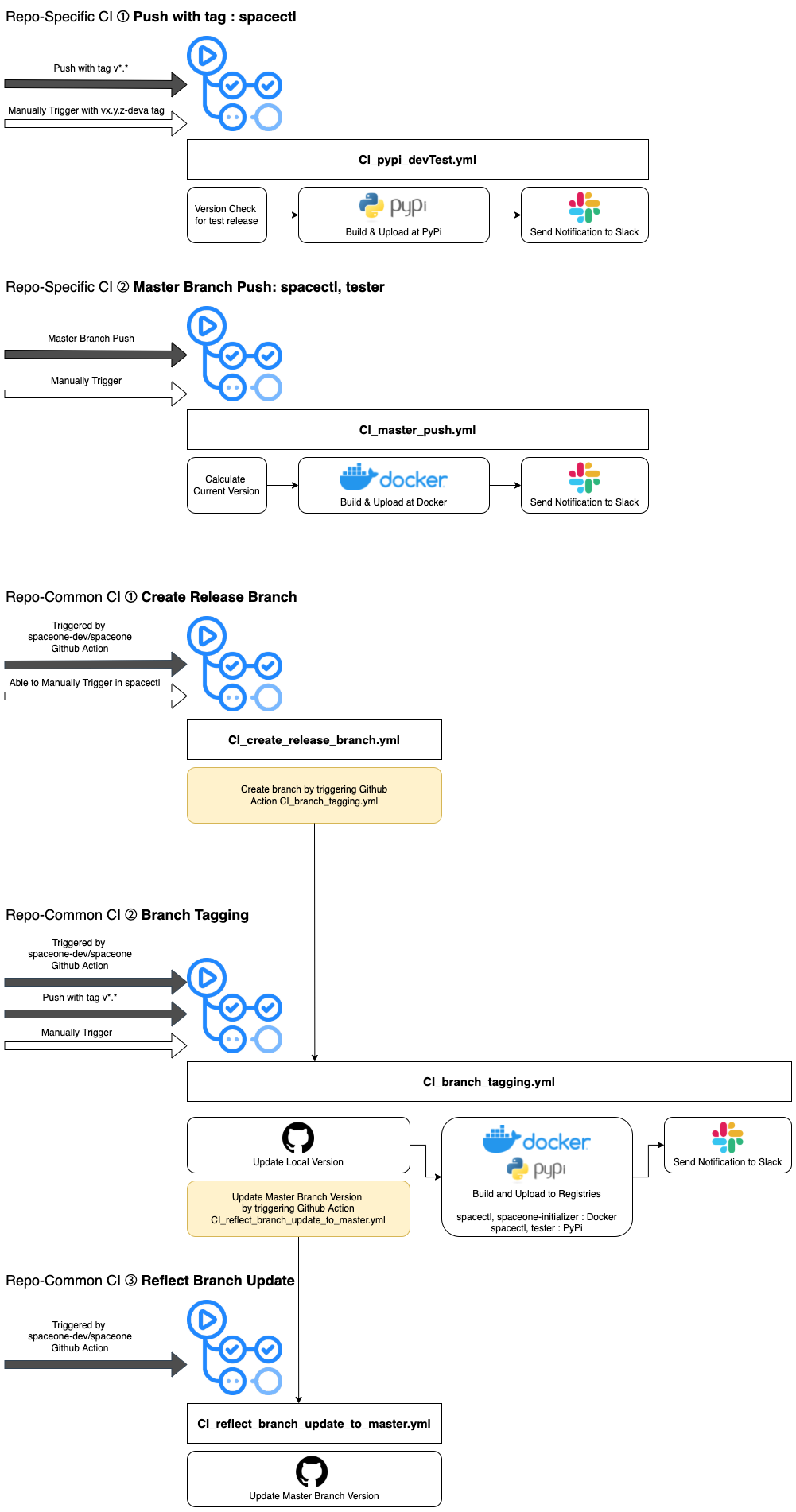

8.6 - Tools CI

Tools CI process details

The spacectl, spaceone-initializer, and tester repositories are tools used for the SpaceONE project. Their CI processes differ from those of other repositories in a few ways.

The spacectl repository workflow runs test code for each push with a version tag, which is similar to the CI process of backend core repositories.

The spaceone-initializer repository does not include the workflow file triggered by a master branch push, which most repositories, including spacectl and tester, have.

Tools-category repositories upload their artifacts to different registries:

- spacectl: both PyPI and Docker

- spaceone-initializer: Docker

- tester: PyPI

To check the details, go to the .github/workflows directory of each repository.

- spacectl repository : cloudforet-io/spacectl GitHub workflow file link

- spaceone-initializer repository : cloudforet-io/spaceone-initializer GitHub workflow file link

- tester repository : cloudforet-io/tester GitHub workflow file link

9 - Contribute

9.1 - Documentation

9.1.1 - Content Guide

Create a new page

Go to the parent page of the location where you want to create the page, then click the 'Create child page' button at the bottom right.

Alternatively, you can fork the repository and work locally.

Choosing a title and filename

Create a filename from the words in your title, separated by underscores (_). For example, the topic titled Using Project Management has the filename project_management.md.
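The naming rule can be sketched in Python. The sketch assumes, from the example, that words are lowercased. The example also drops the leading word "Using", which is a judgment call, so the hypothetical title_to_filename helper takes only the words you decide to keep.

```python
import re


def title_to_filename(title):
    """Lowercase the title's words, join them with underscores, and add '.md'."""
    words = re.findall(r"[A-Za-z0-9]+", title)  # drop punctuation between words
    return "_".join(word.lower() for word in words) + ".md"
```

For example, title_to_filename("Project Management") returns "project_management.md".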

Adding fields to the front matter

In your document, put fields in the front matter. The front matter is the YAML block that is between the triple-dashed lines at the top of the page. Here's an example:

---
title: "Project Management"
linkTitle: "Project Management"
weight: 10
date: 2021-06-10
description: >
  View overall status of each project and navigate to detailed cloud resources.
---

Attention

When writing a description, indent its lines with spaces. If a line starts with a tab instead of spaces, the entire site will fail to build.

Description of front matter variables

| Variable | Description |
|---|---|
| title | The title for the content |
| linkTitle | Title shown in the left sidebar |
| weight | Used to order content in the left sidebar. Lower weights take precedence, so content with a lower weight comes first. If set, weights should be non-zero, because 0 is interpreted as an unset weight. |
| date | Creation date |
| description | Page description |
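Two of the rules above, no tab indentation (YAML does not allow tabs for indentation) and a non-zero weight, are easy to check before pushing. The following is a minimal sketch using only the standard library, with a hypothetical lint_front_matter helper. It scans the lines between the opening and closing --- markers instead of fully parsing YAML.

```python
import re

WEIGHT = re.compile(r"weight:\s*(-?\d+)\s*")


def lint_front_matter(page):
    """Report tab-indented lines and a zero weight in a page's front matter."""
    lines = page.splitlines()
    if not lines or lines[0].strip() != "---":
        return ["missing opening '---'"]
    closing = next((i for i in range(1, len(lines)) if lines[i].strip() == "---"), None)
    if closing is None:
        return ["missing closing '---'"]
    problems = []
    # Line numbers are 1-based; the first front matter field is on line 2.
    for number, line in enumerate(lines[1:closing], start=2):
        if line.startswith("\t"):
            problems.append(f"line {number}: indented with a tab; use spaces")
        weight = WEIGHT.fullmatch(line)
        if weight and int(weight.group(1)) == 0:
            problems.append(f"line {number}: weight 0 is treated as unset")
    return problems
```

An empty list means neither problem was found. A full YAML parser would catch more errors, but it would add a third-party dependency.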

For more details about front matter, see Front matter.

Write a document

Adding Table of Contents

When you add ## headings to a document, they are automatically listed in the table of contents.
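To show which headings end up in the table of contents, here is a simplified Python model. By default, Hugo builds the table of contents from heading levels 2 and 3 (the markup.tableOfContents startLevel and endLevel settings); this site may configure a different range. The sketch ignores headings inside code fences and is not Hugo's own implementation.

```python
import re

FENCE = "`" * 3  # code fence marker; lines inside fences are not headings
HEADING = re.compile(r"^(#{2,3})[ \t]+(.+?)[ \t]*#*[ \t]*$")  # ## or ### only


def table_of_contents(markdown):
    """Return (level, title) for every ## or ### heading outside code fences."""
    entries, in_fence = [], False
    for line in markdown.splitlines():
        if line.lstrip().startswith(FENCE):
            in_fence = not in_fence
            continue
        match = None if in_fence else HEADING.match(line)
        if match:
            entries.append((len(match.group(1)), match.group(2)))
    return entries
```

Under this model, a single # heading or a #### heading does not appear in the list, which is why sections should start at ##.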

Adding images

Create a directory for images named file_name_img at the same level as the document. For example, create a project_management_img directory for project_management.md, and put the page's images in that directory.
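The naming convention can be expressed in a couple of lines with pathlib. The helper name image_dir_for is hypothetical. It only computes the path and does not create the directory.

```python
from pathlib import PurePosixPath


def image_dir_for(doc_path):
    """Return the '<file_name>_img' directory that sits next to a Markdown document."""
    doc = PurePosixPath(doc_path)
    return doc.with_name(f"{doc.stem}_img")  # same parent, stem + '_img'
```

For example, image_dir_for("project_management.md") / "overview.png" gives project_management_img/overview.png.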

Style guide

Refer to the style guide when writing the document.

Opening a pull request

When you are ready to submit a pull request, commit your changes to a new branch.

9.1.2 - Style Guide (shortcodes)

Heading tag

Use heading tags in order, starting from ## (<h2>). This matters for styling, not just for semantic markup.

Note

When you add ## headings to a document, they are automatically listed in the table of contents.

Link button

Code:

{{< link-button background-color="navy500" url="/" text="Home" >}}

{{< link-button background-color="white" url="https://cloudforet.io/" text="cloudforet.io" >}}

Output:

Home

cloudforet.io

Video

Code:

{{< video src="https://www.youtube.com/embed/zSoEg2v_JrE" title="Cloudforet Setup" >}}

Output:

Alert

Code:

{{< alert title="Note Title" >}}

Note Contents

{{< /alert >}}

Output:

Note Title

Note Contents

Reference

- Learn about Hugo

- Learn about How to use Markdown for writing technical documentation