Documentation for Cloudforet - Easy guide for multi cloud management

Documentation and detailed use guide for Cloudforet contributors.

- 1: Introduction

- 1.1: Overview

- 1.2: Integrations

- 1.3: Key Differentiators

- 1.4: Release Notes

- 2: Concepts

- 2.1: Architecture

- 2.2: Identity

- 2.2.1: Project Management

- 2.2.2: Role Based Access Control

- 2.2.2.1: Understanding Policy

- 2.2.2.2: Understanding Role

- 2.3: Inventory

- 2.3.1: Monitoring

- 2.4: Alert Manager

- 2.5: Cost Analysis

- 3: Setup & Operation

- 3.1: Getting Started

- 3.2: Installation

- 3.2.1: AWS

- 3.2.2: On Premise

- 3.3: Configuration

- 4: User Guide

- 4.1: Get started

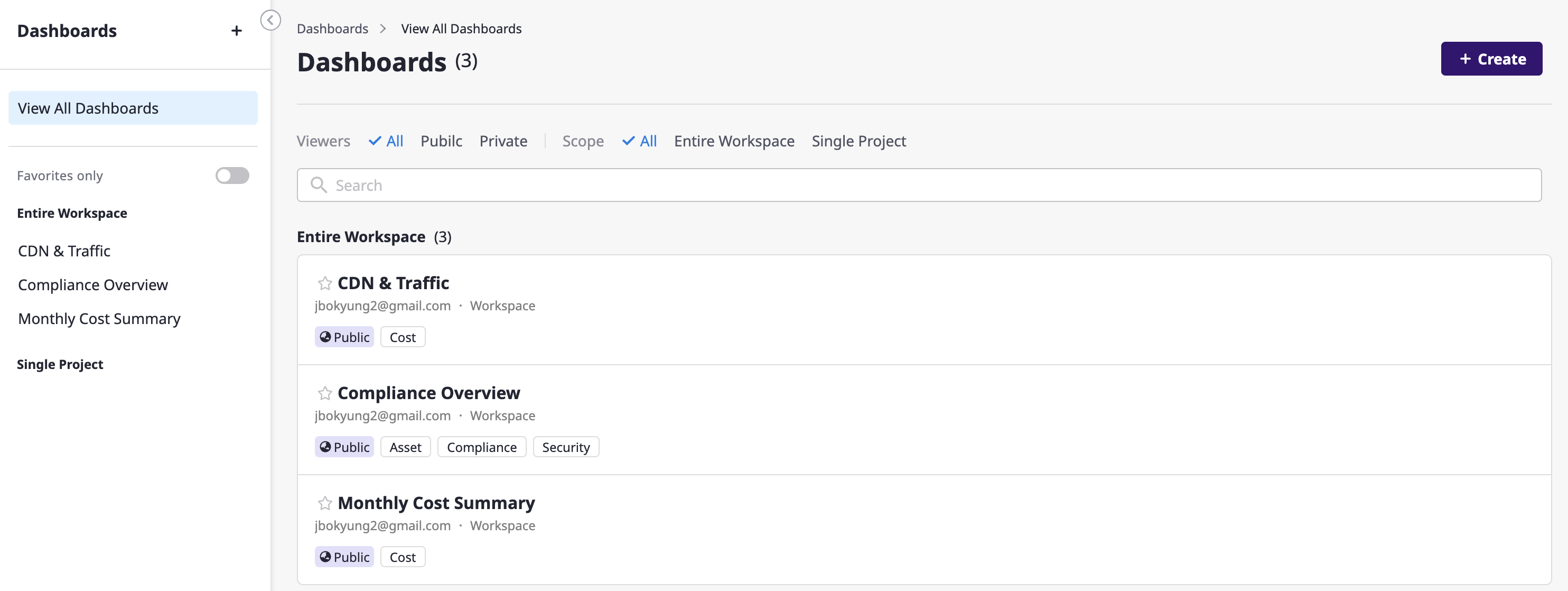

- 4.2: Dashboards

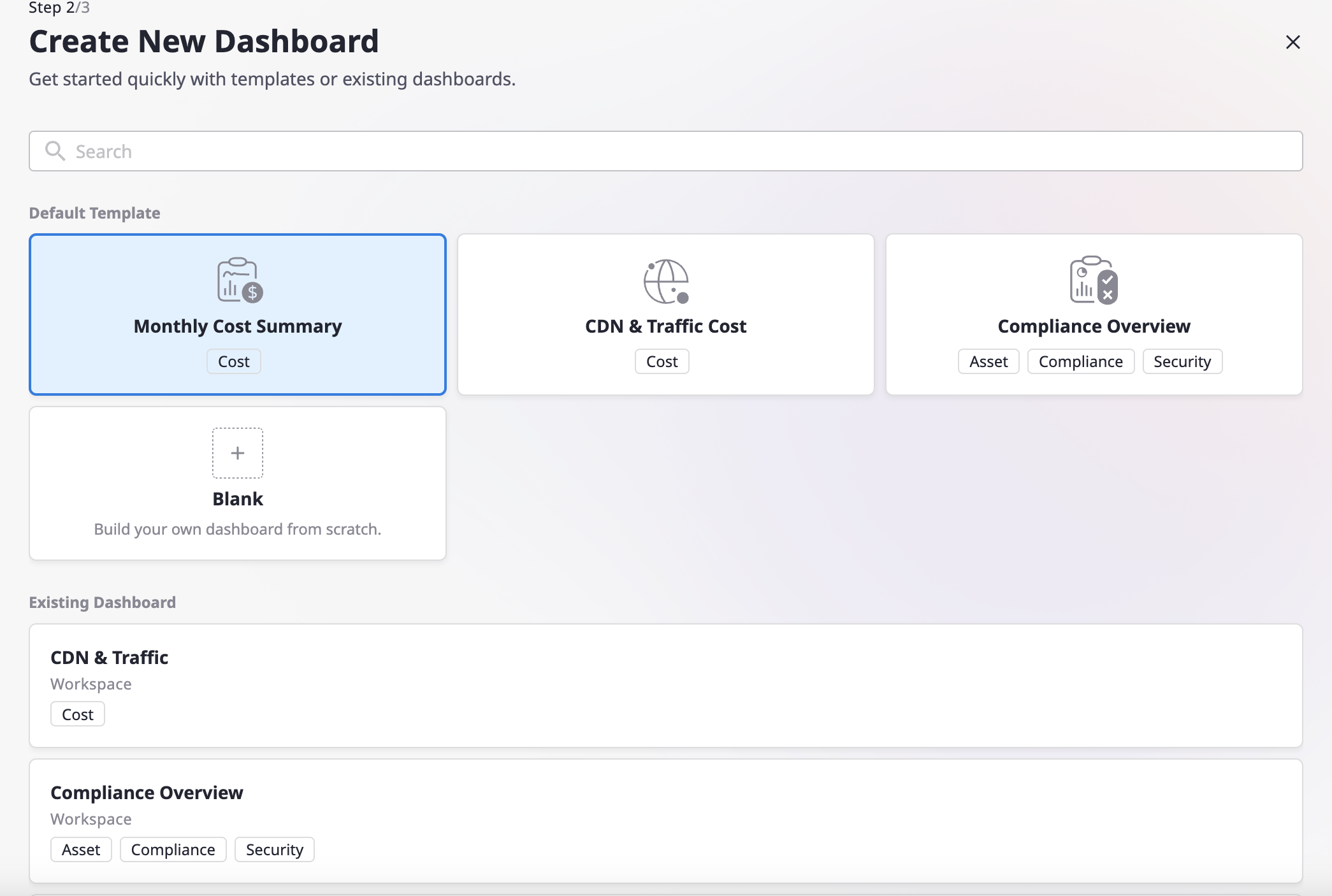

- 4.2.1: Dashboard Templates

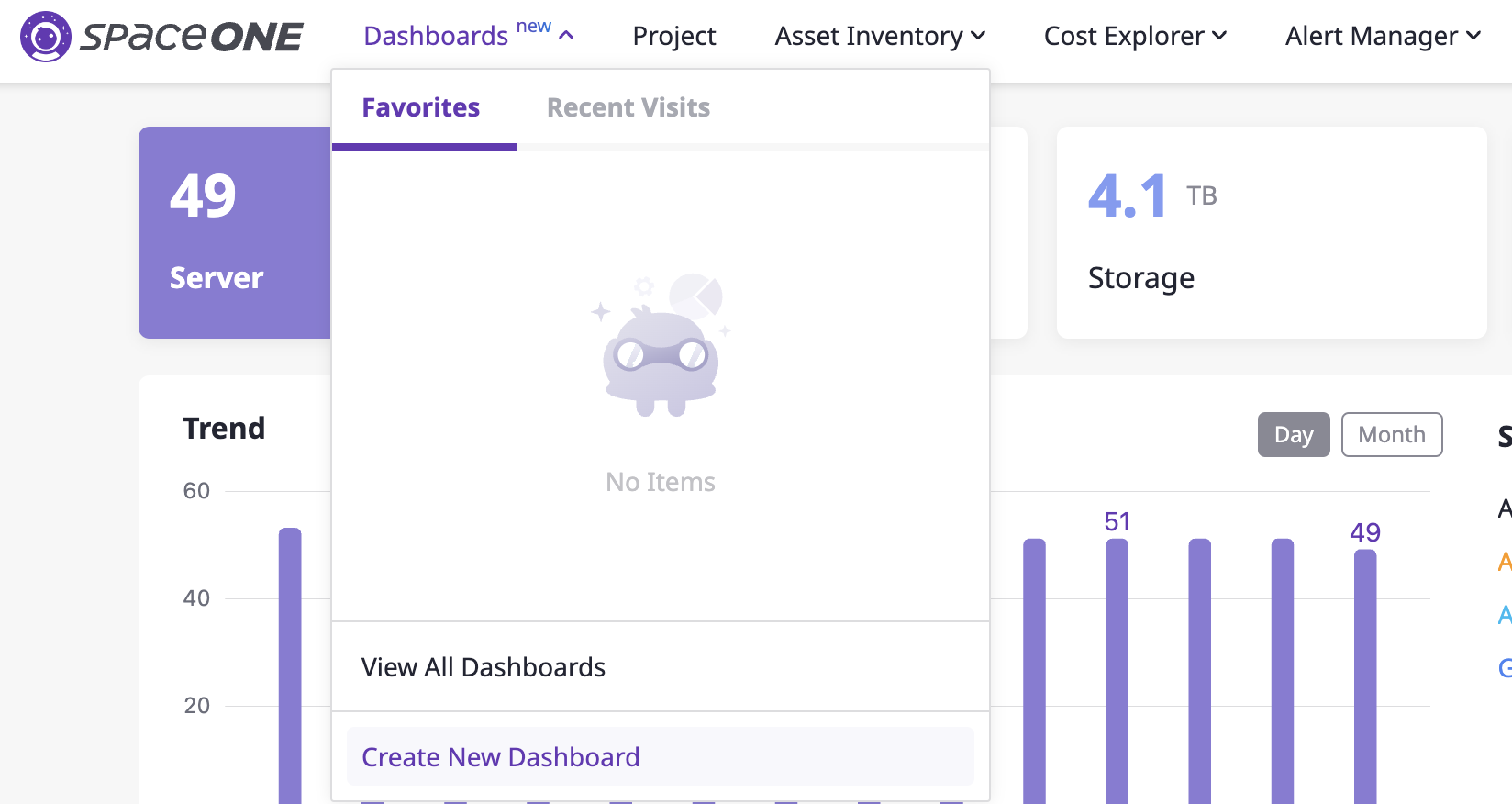

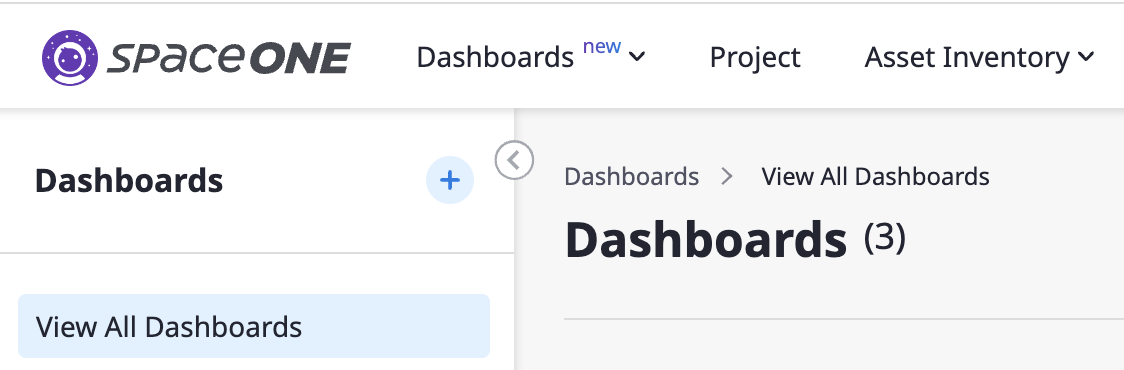

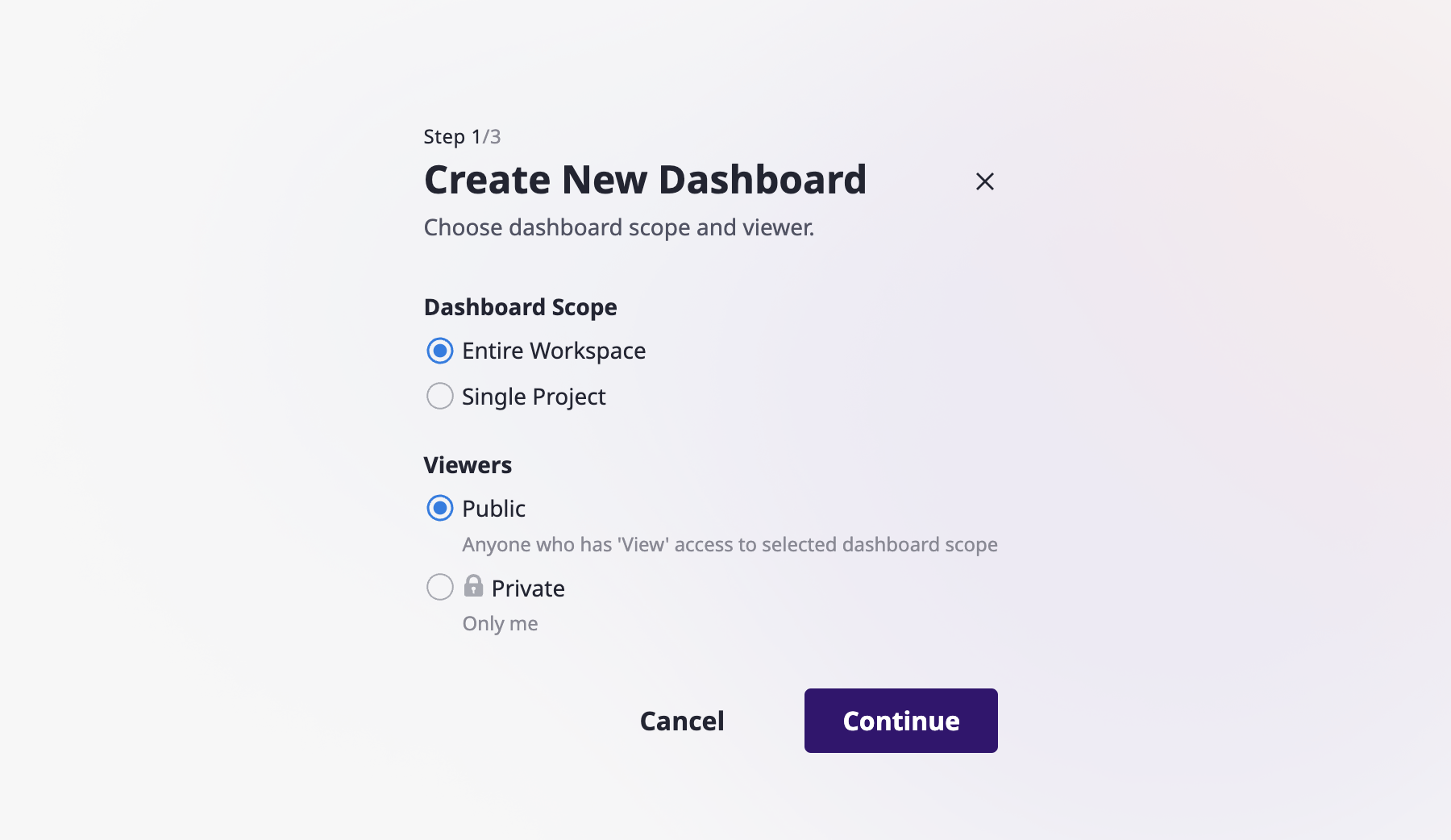

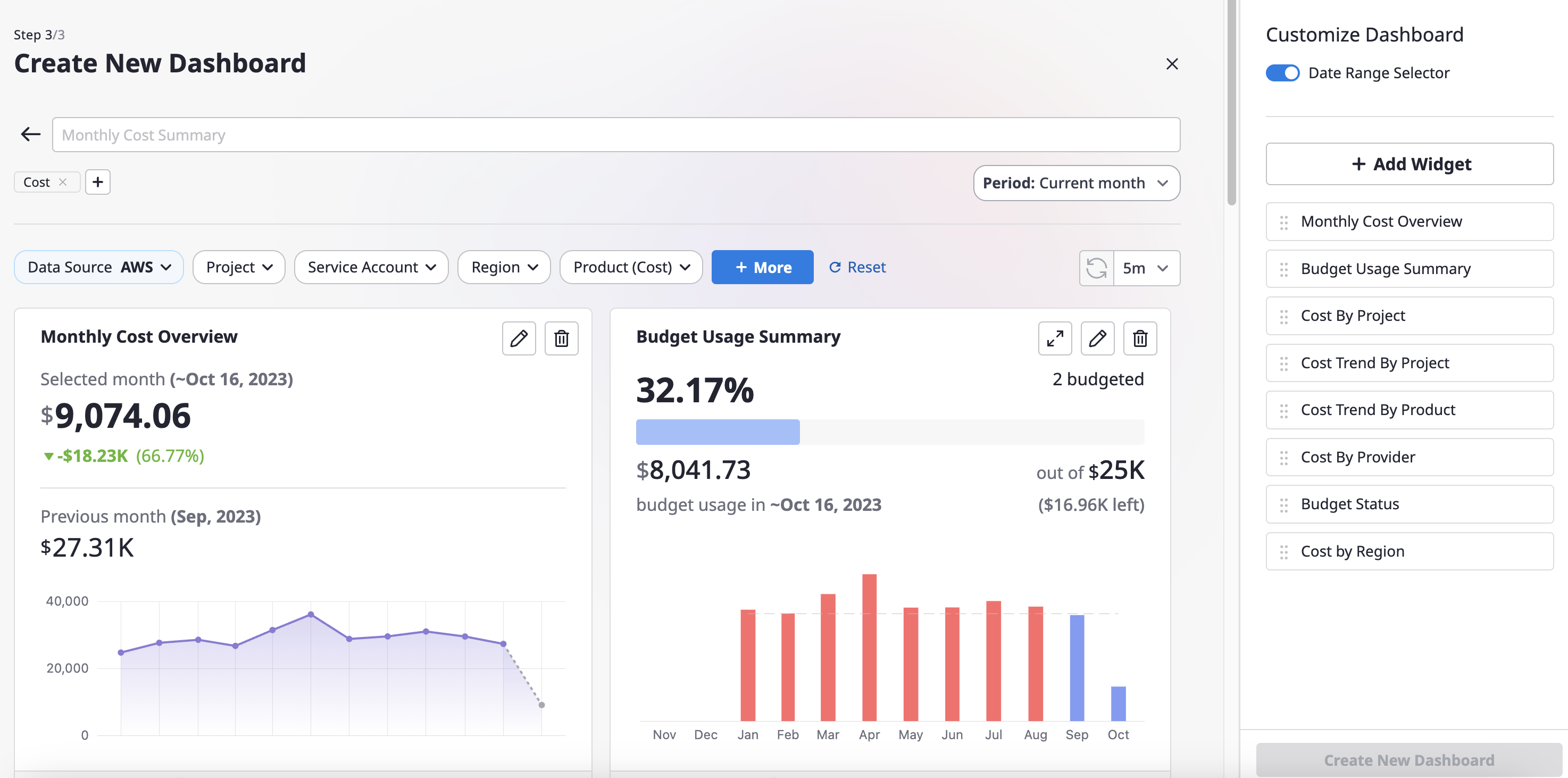

- 4.2.2: Create Dashboard

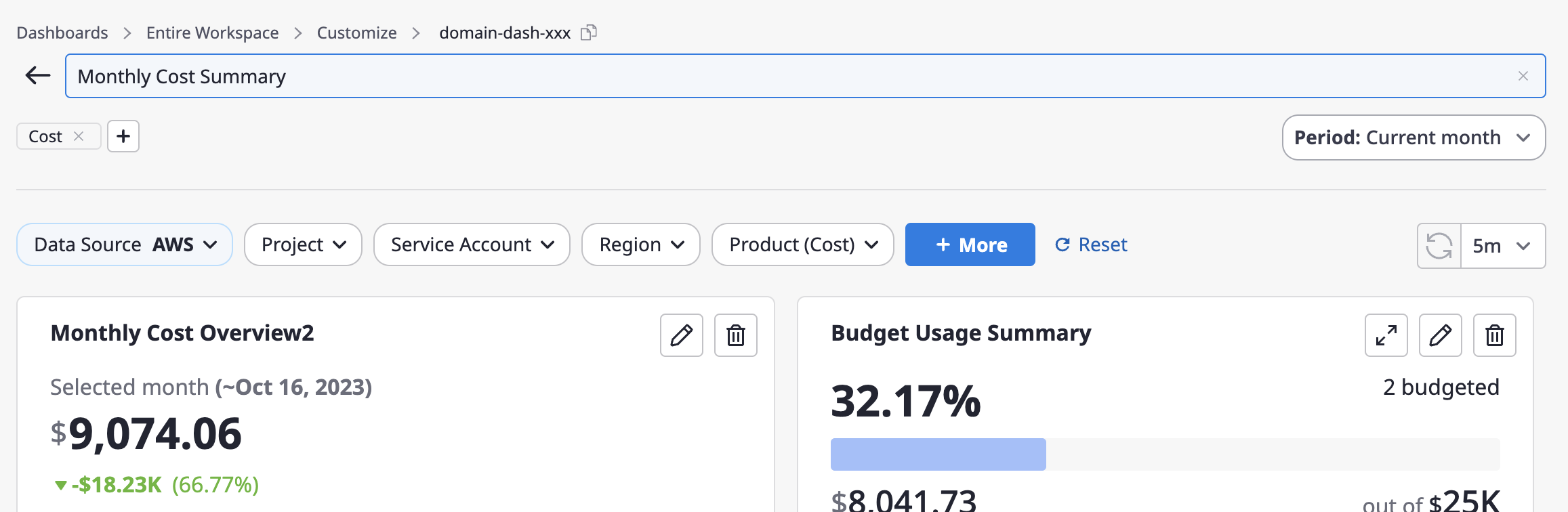

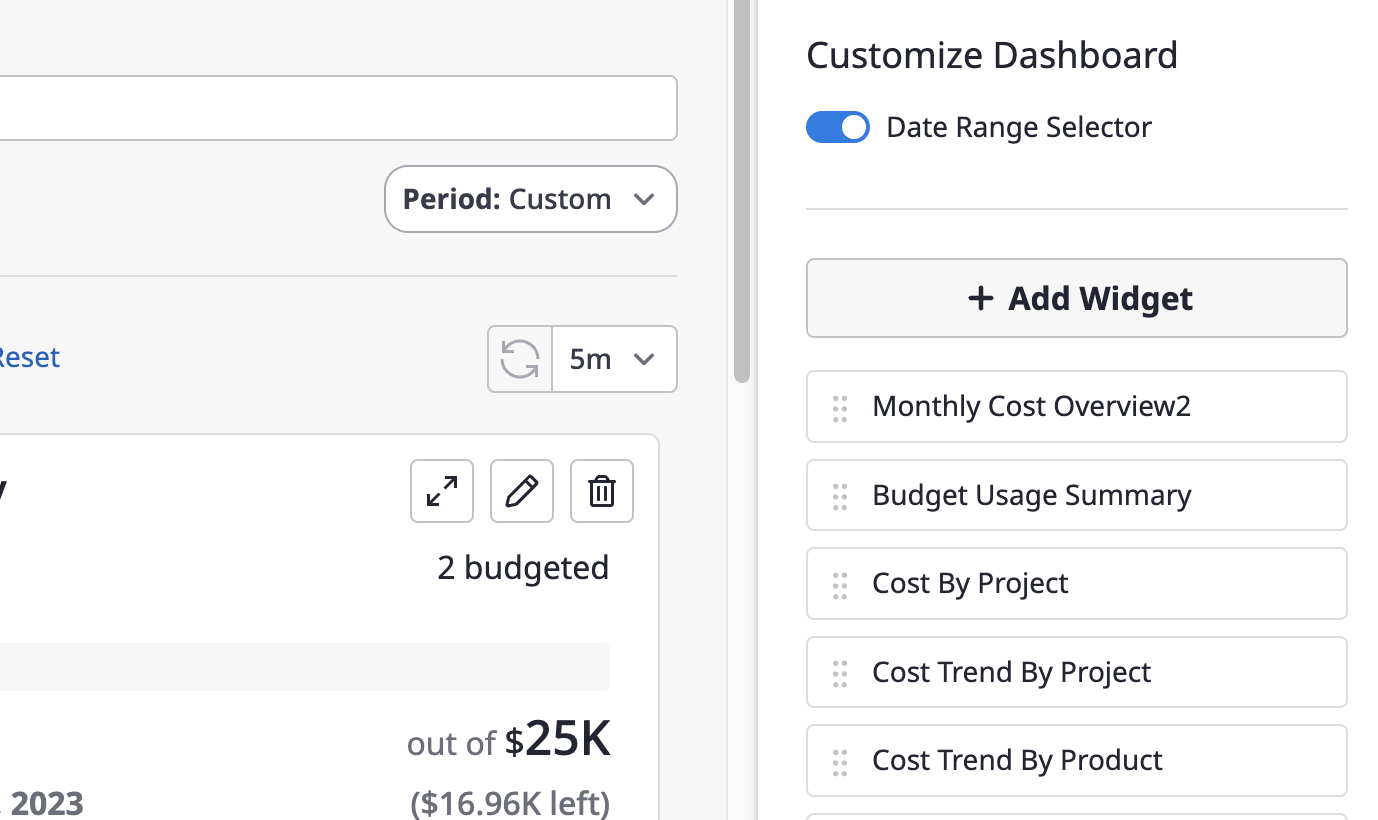

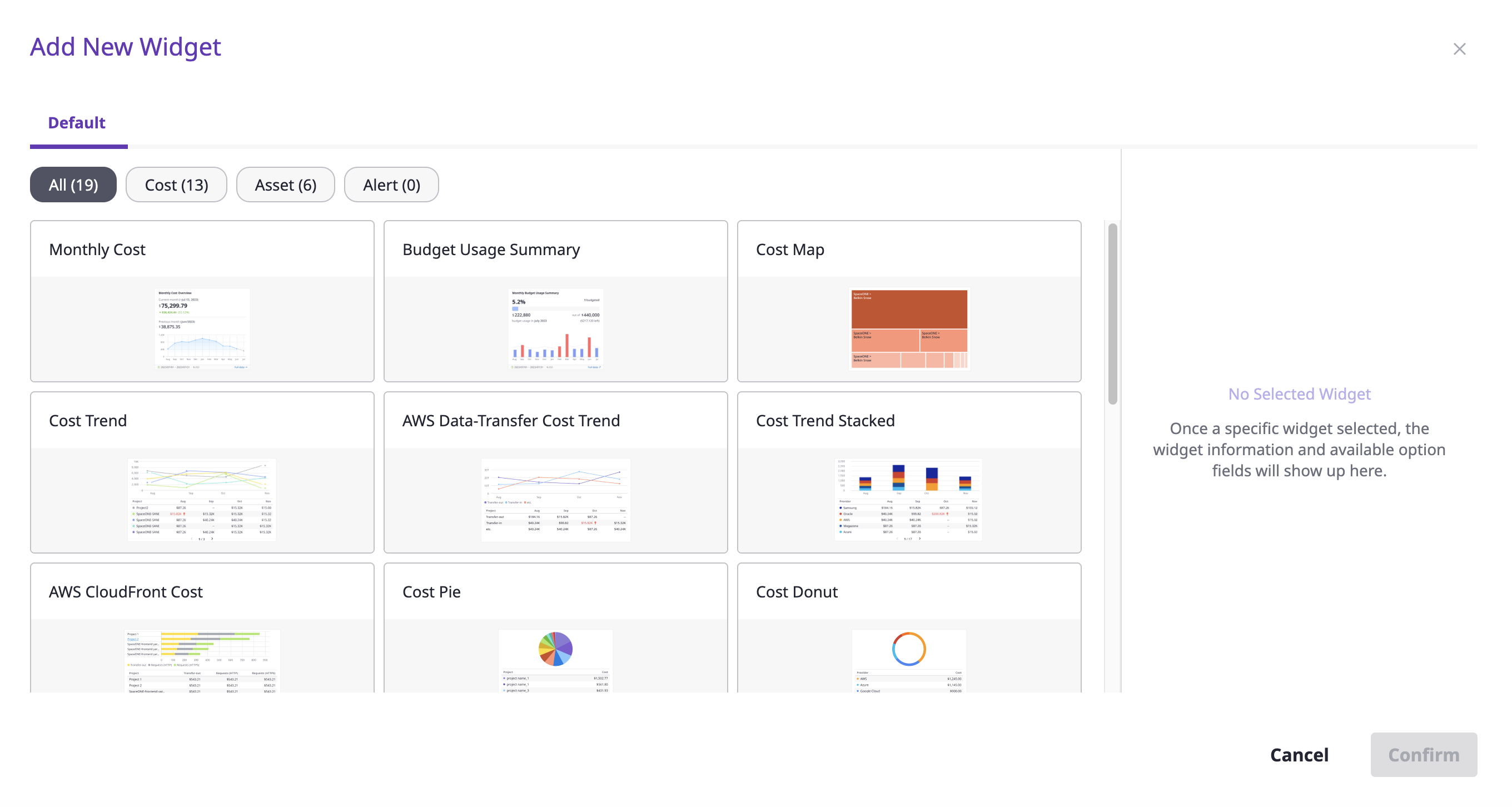

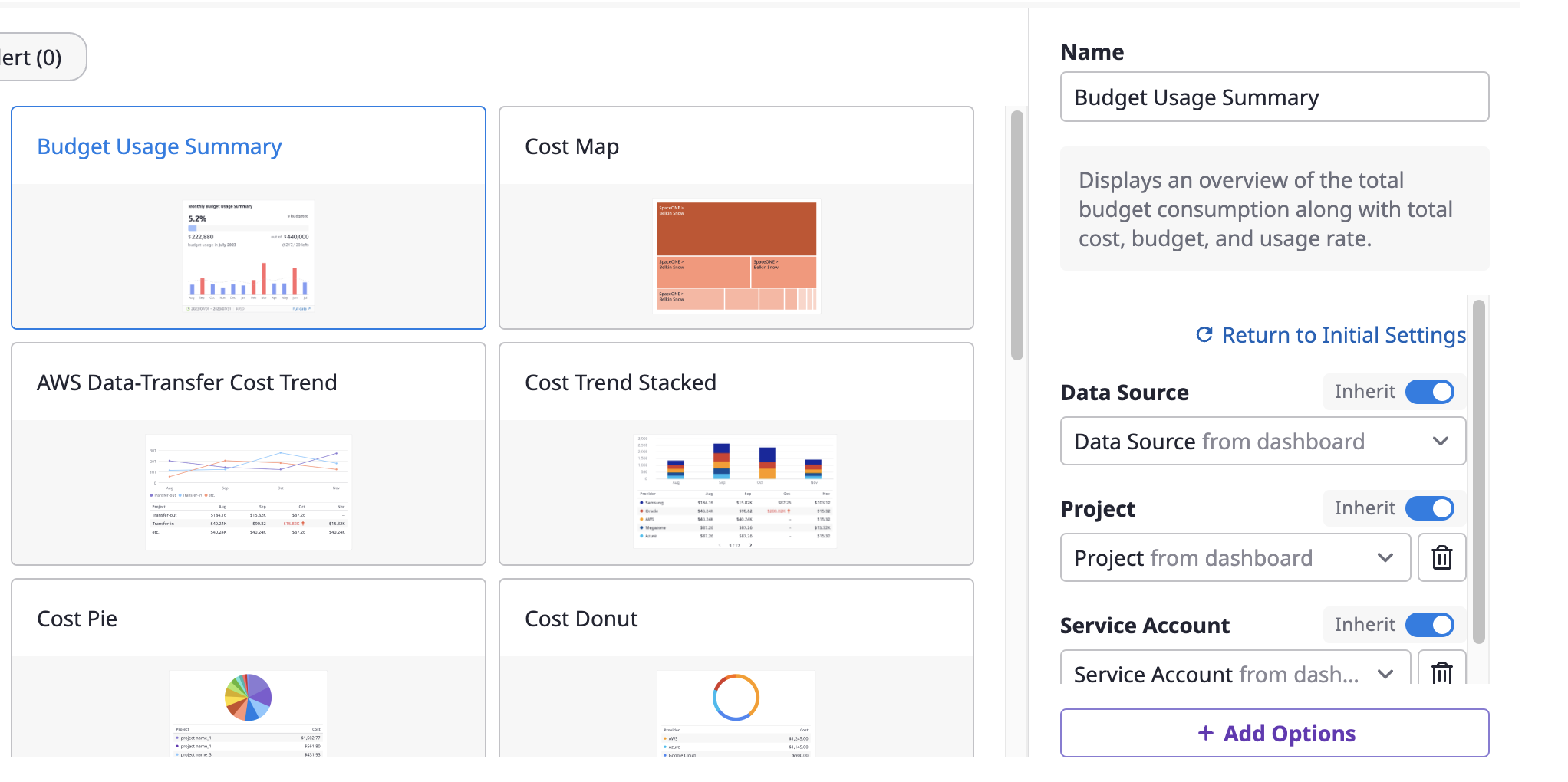

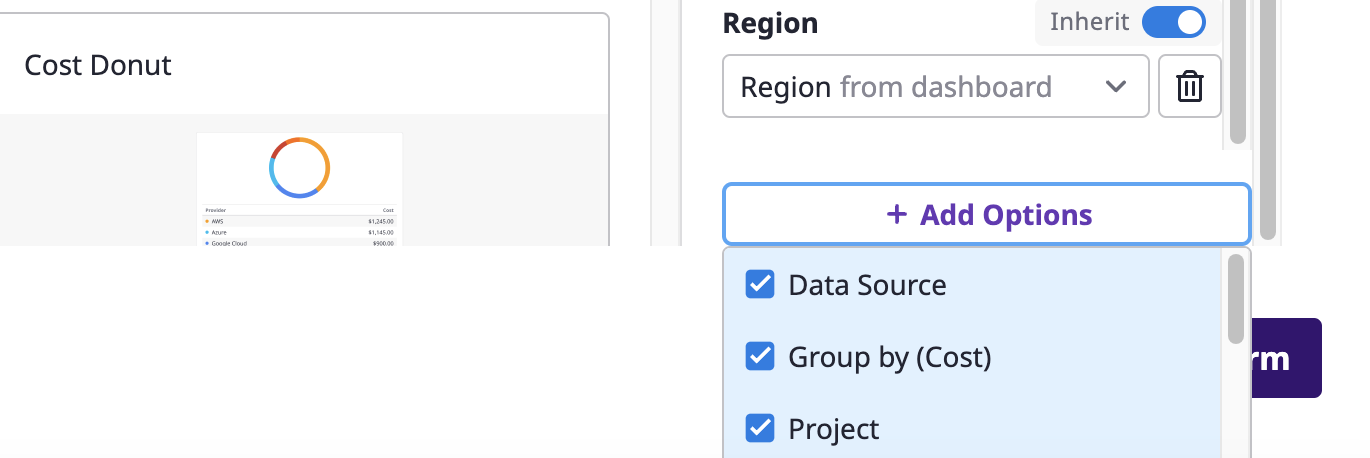

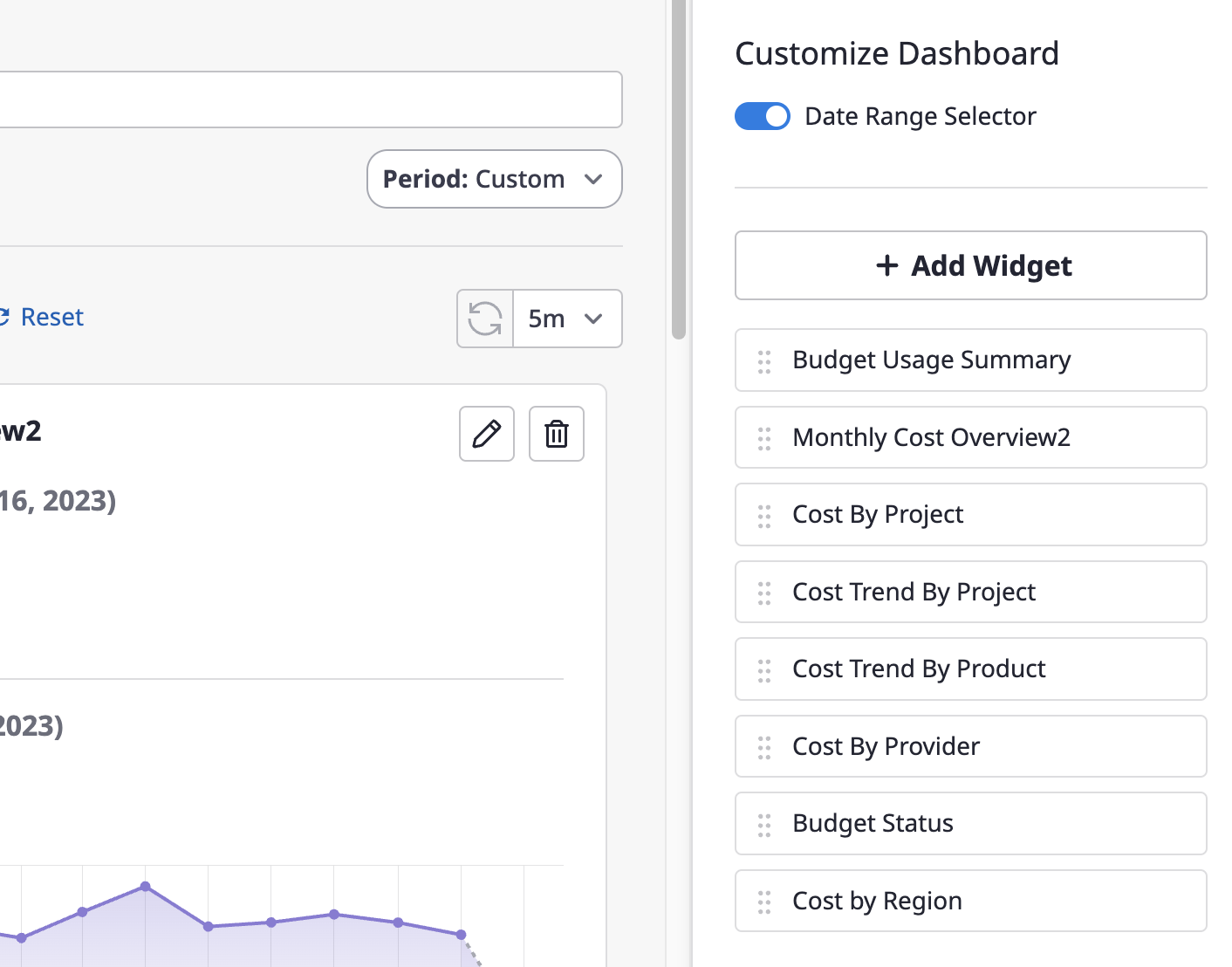

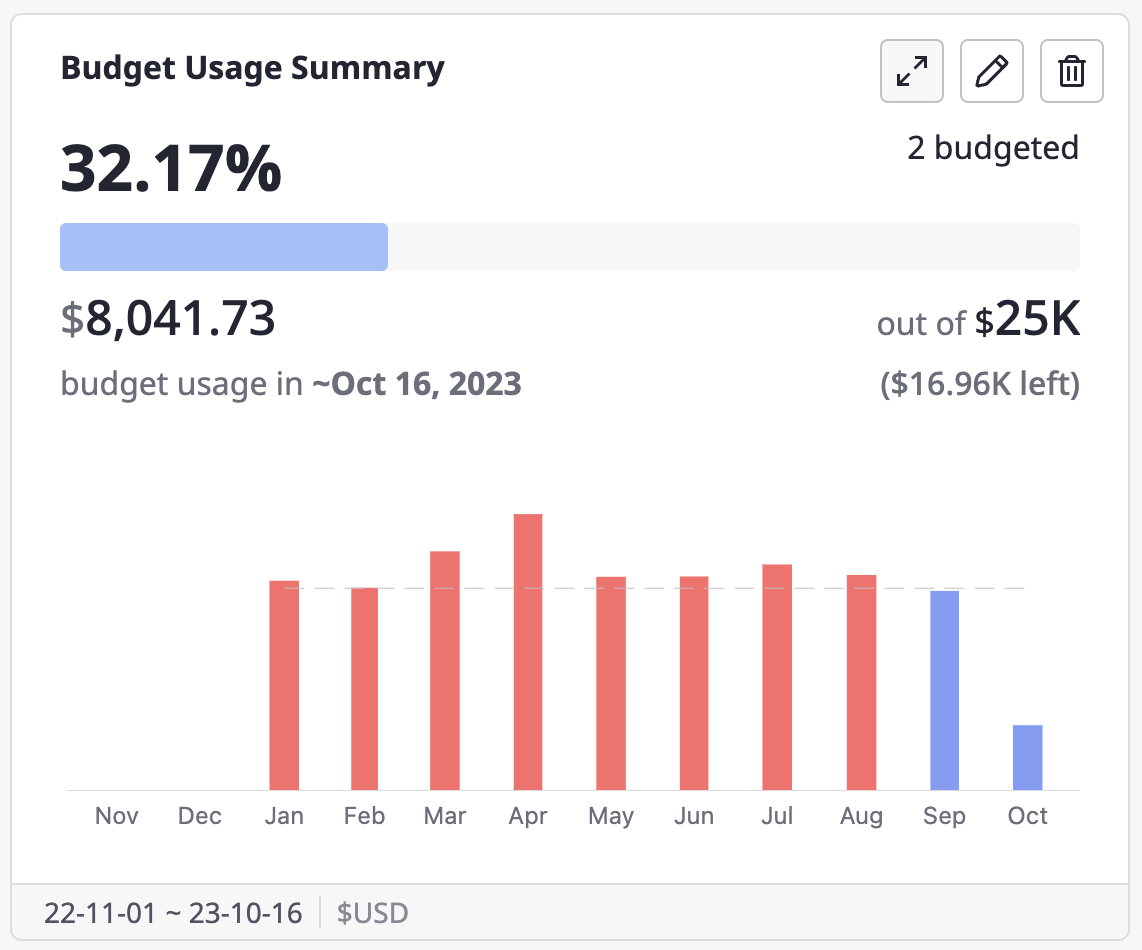

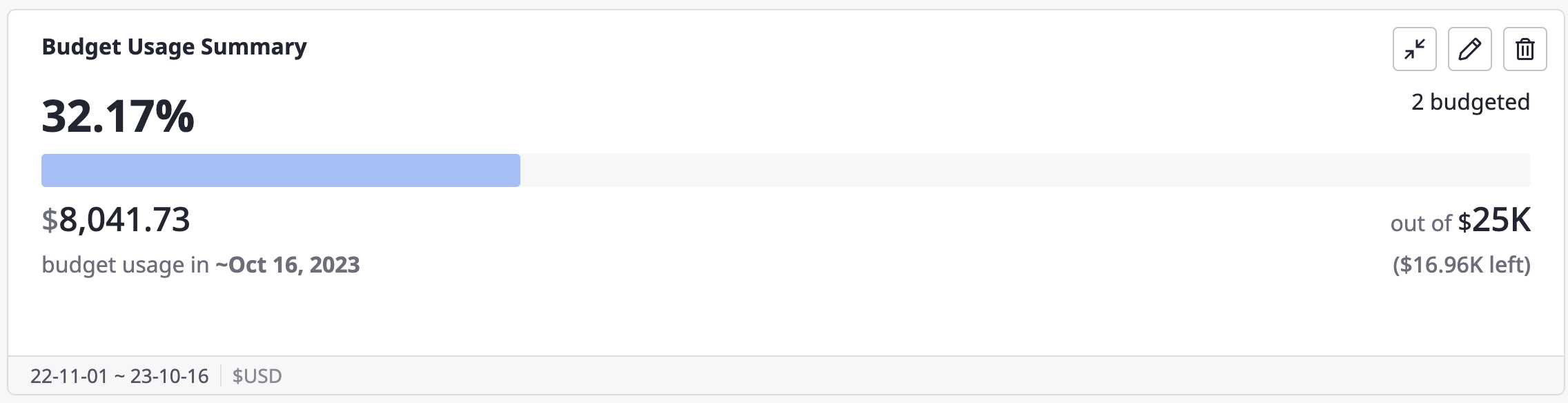

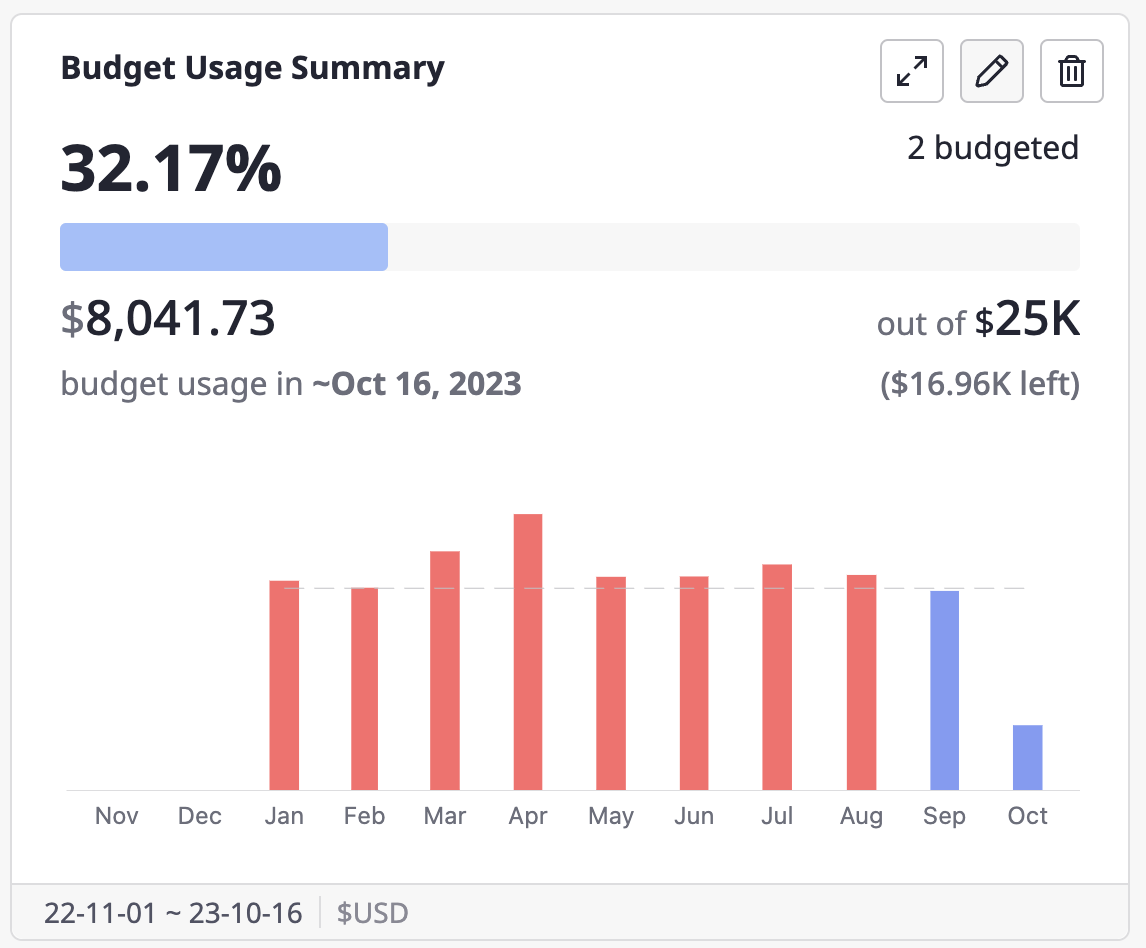

- 4.2.3: Customize Dashboard

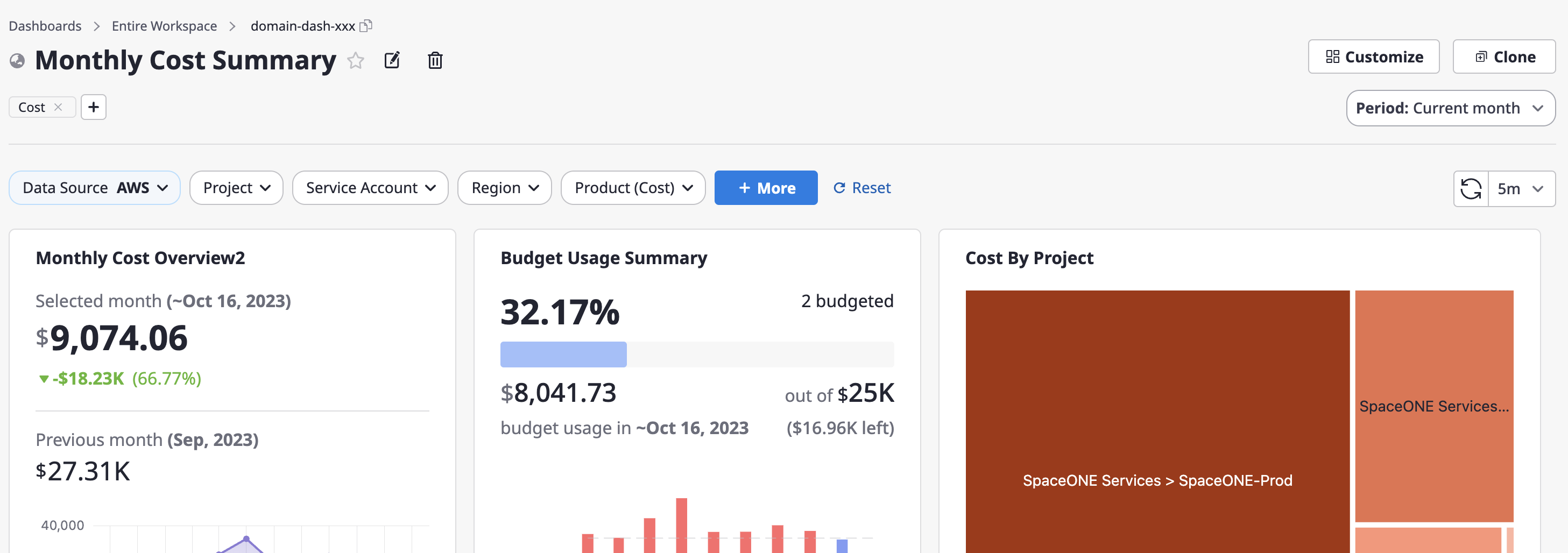

- 4.2.4: Review & Quick Configuration

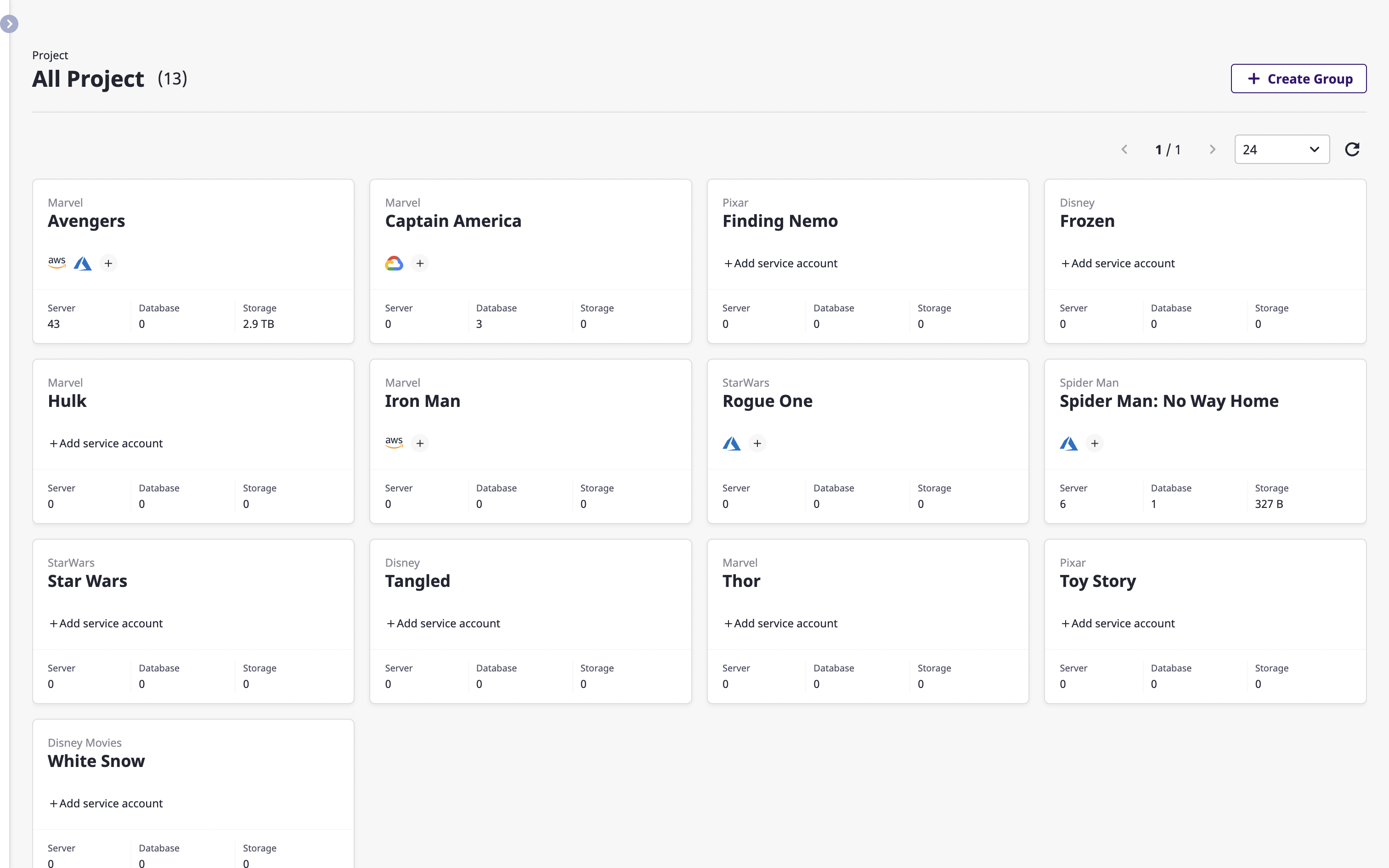

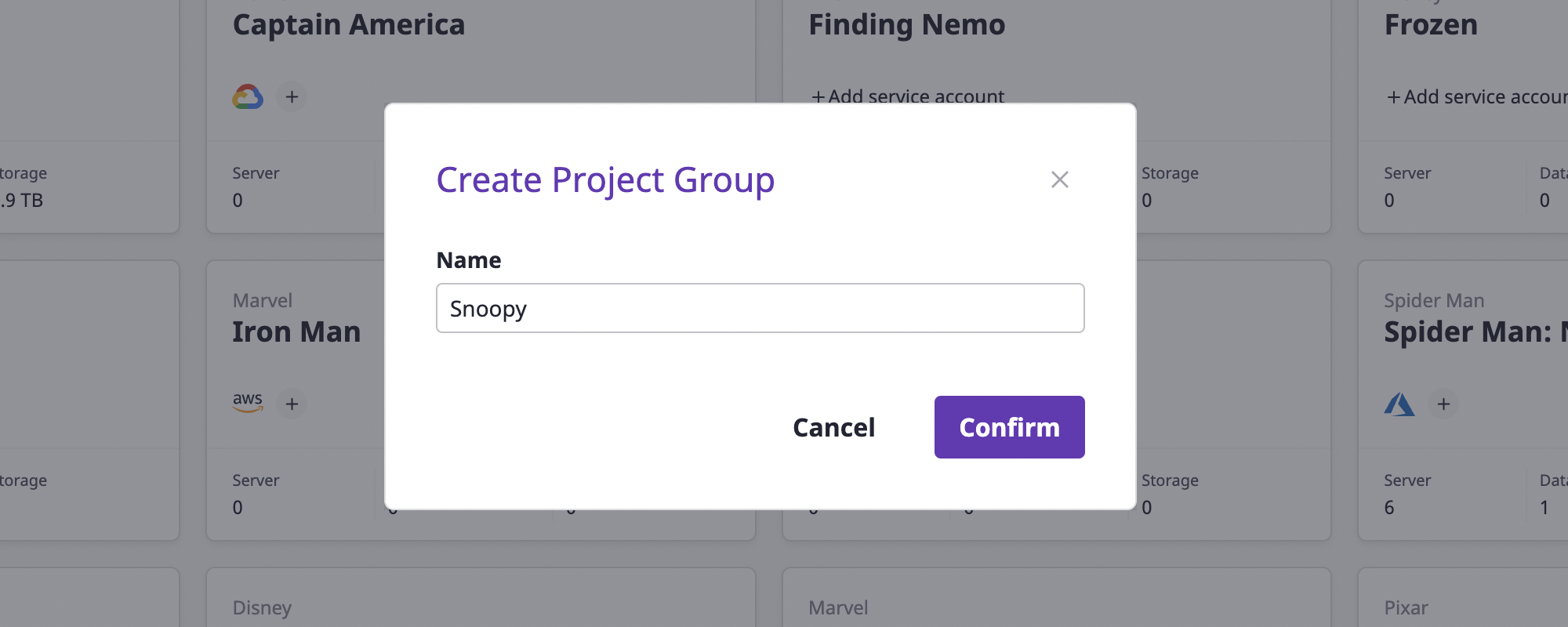

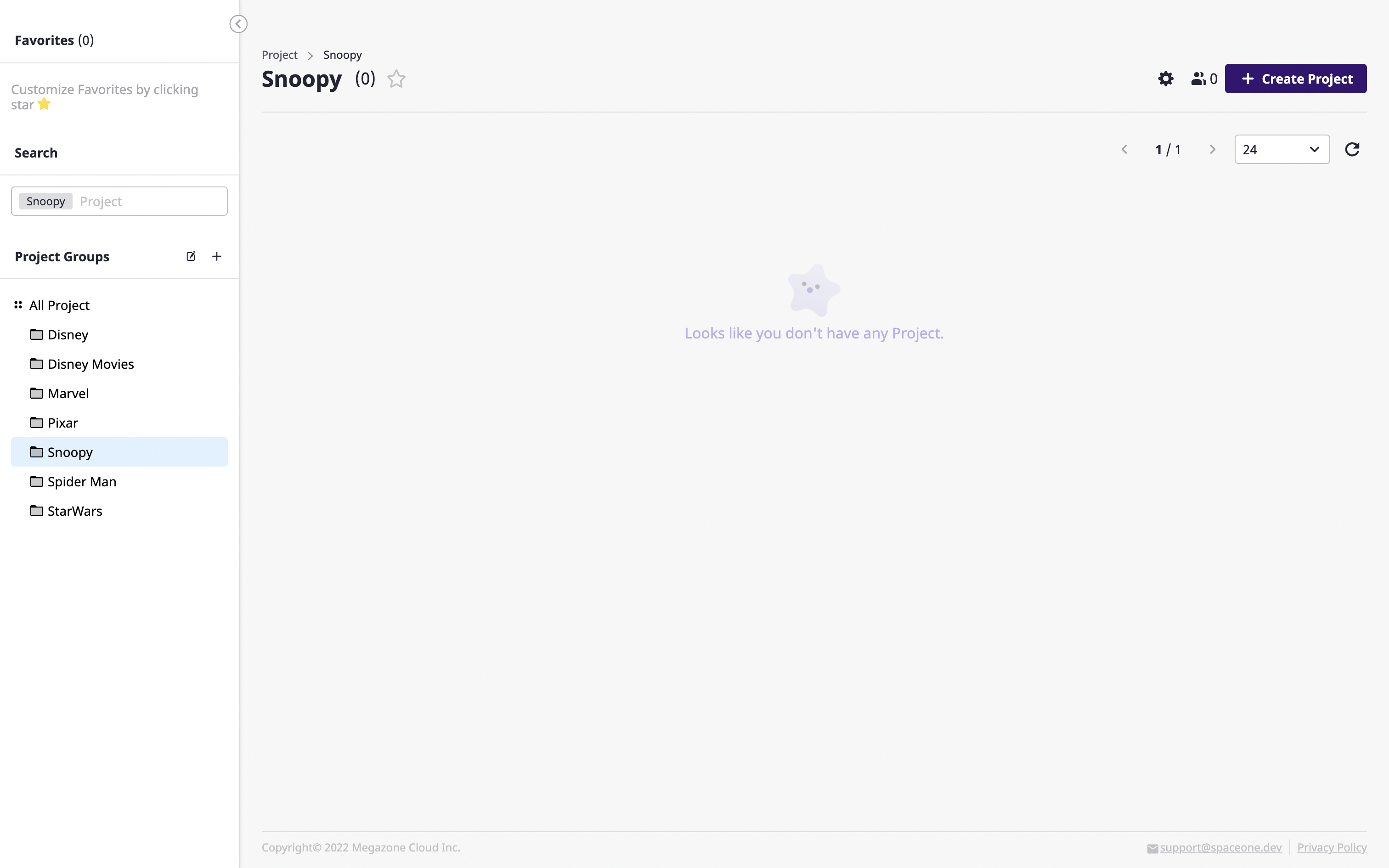

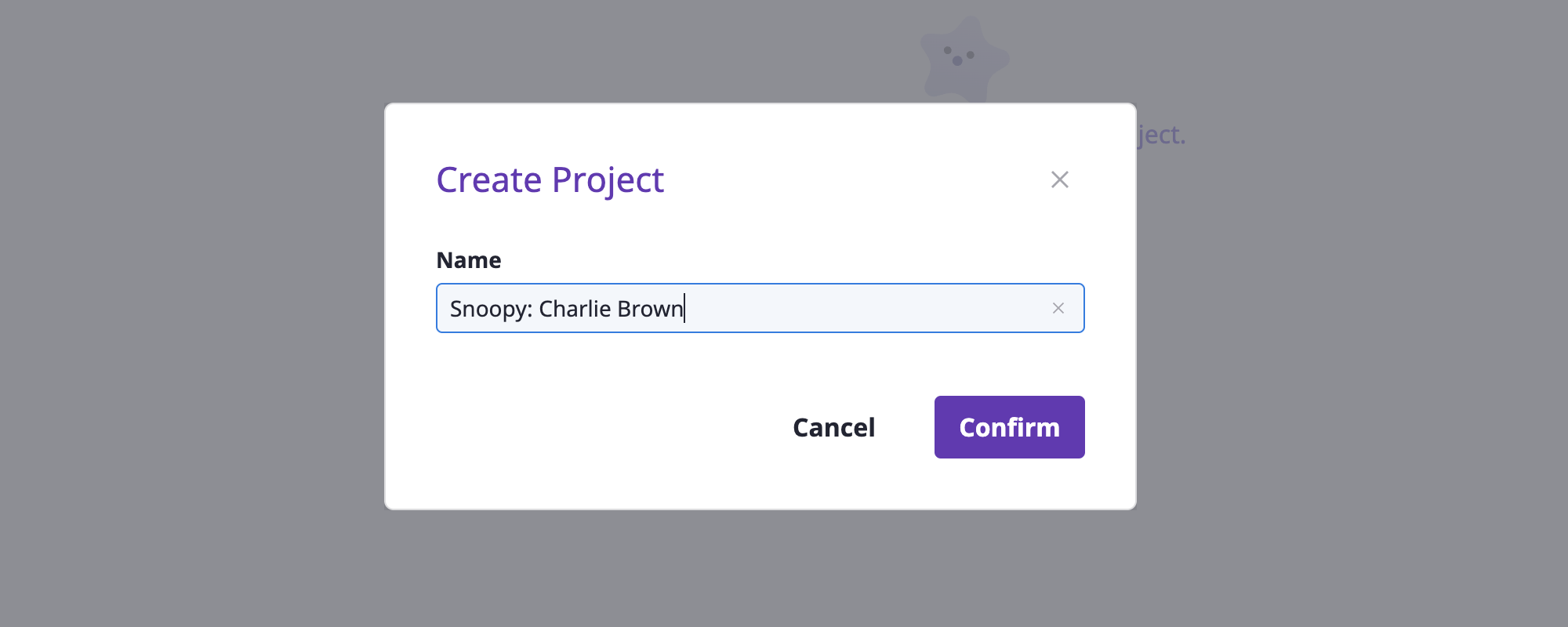

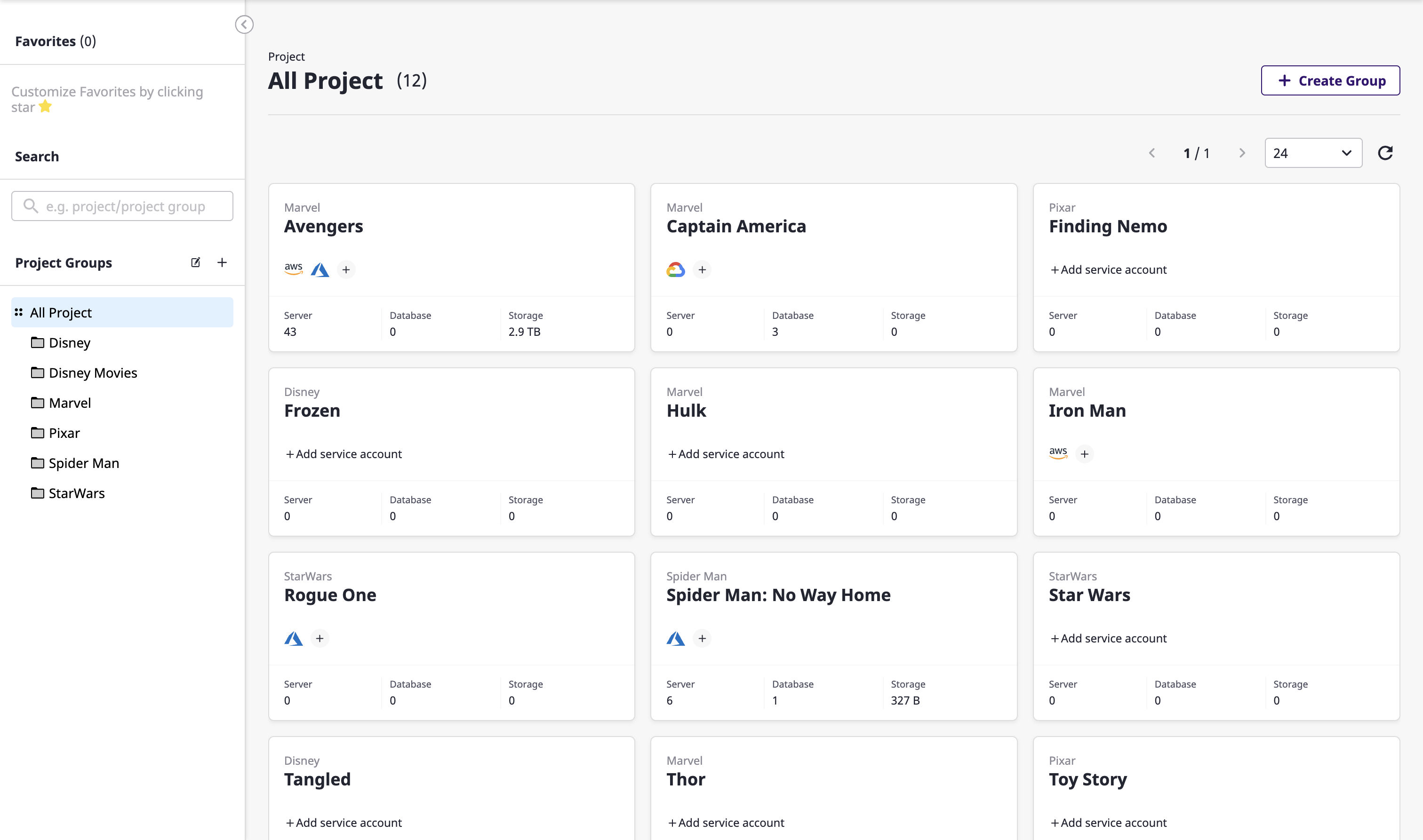

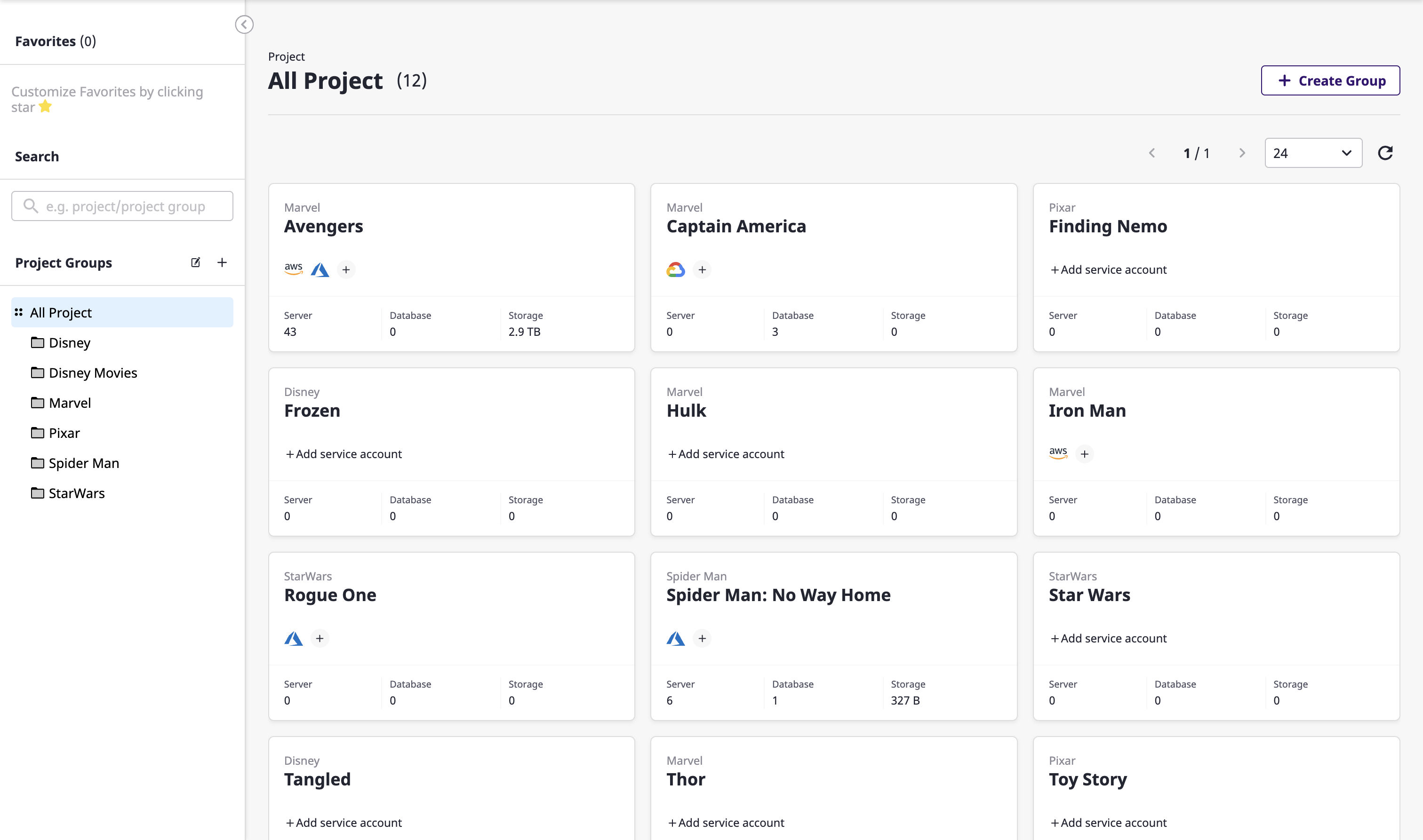

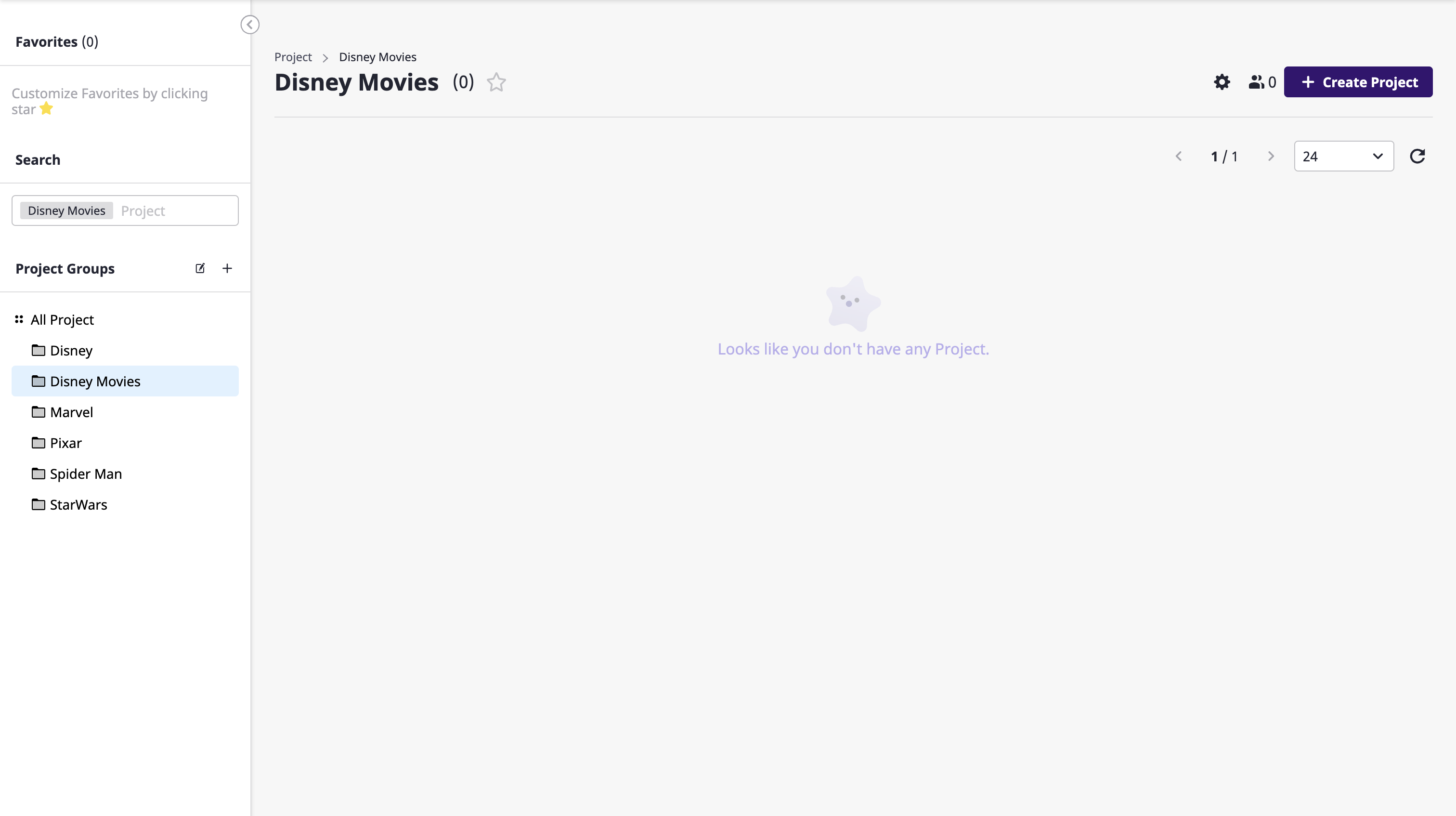

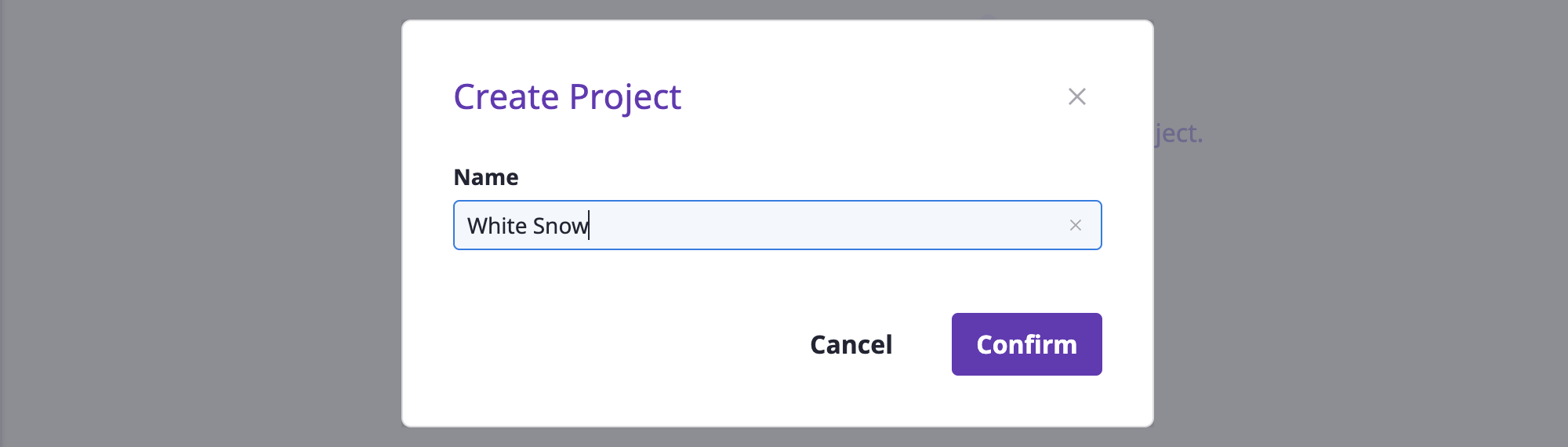

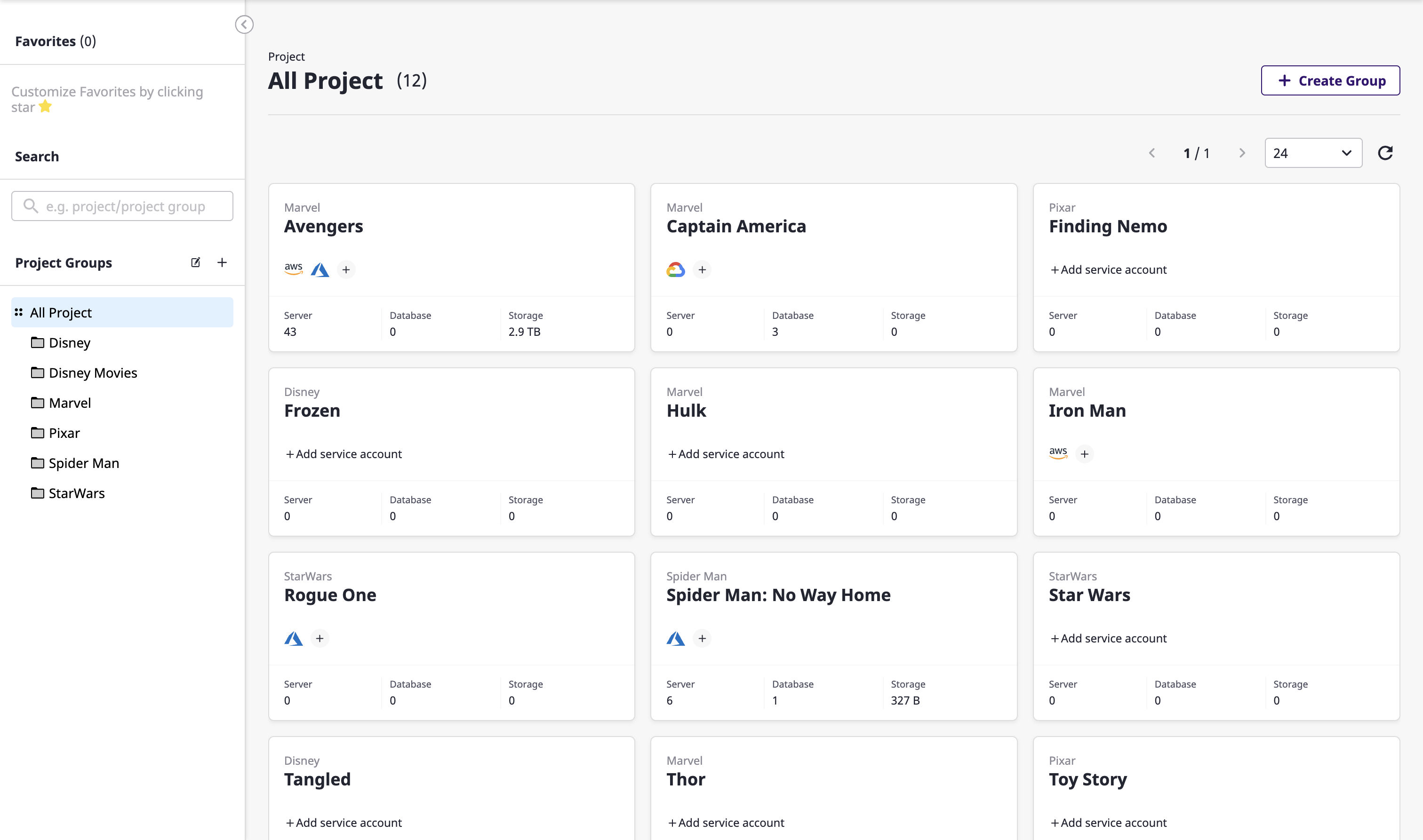

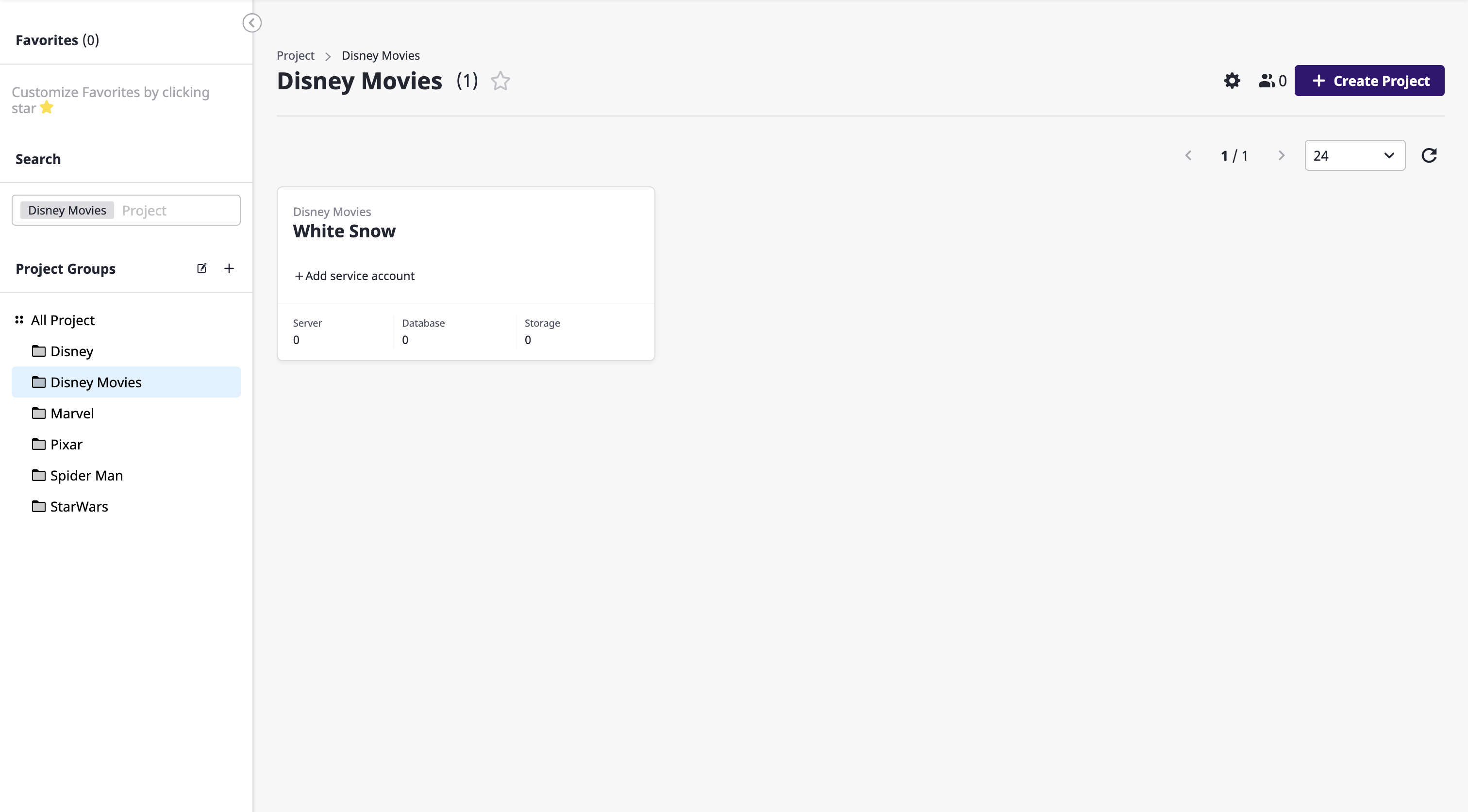

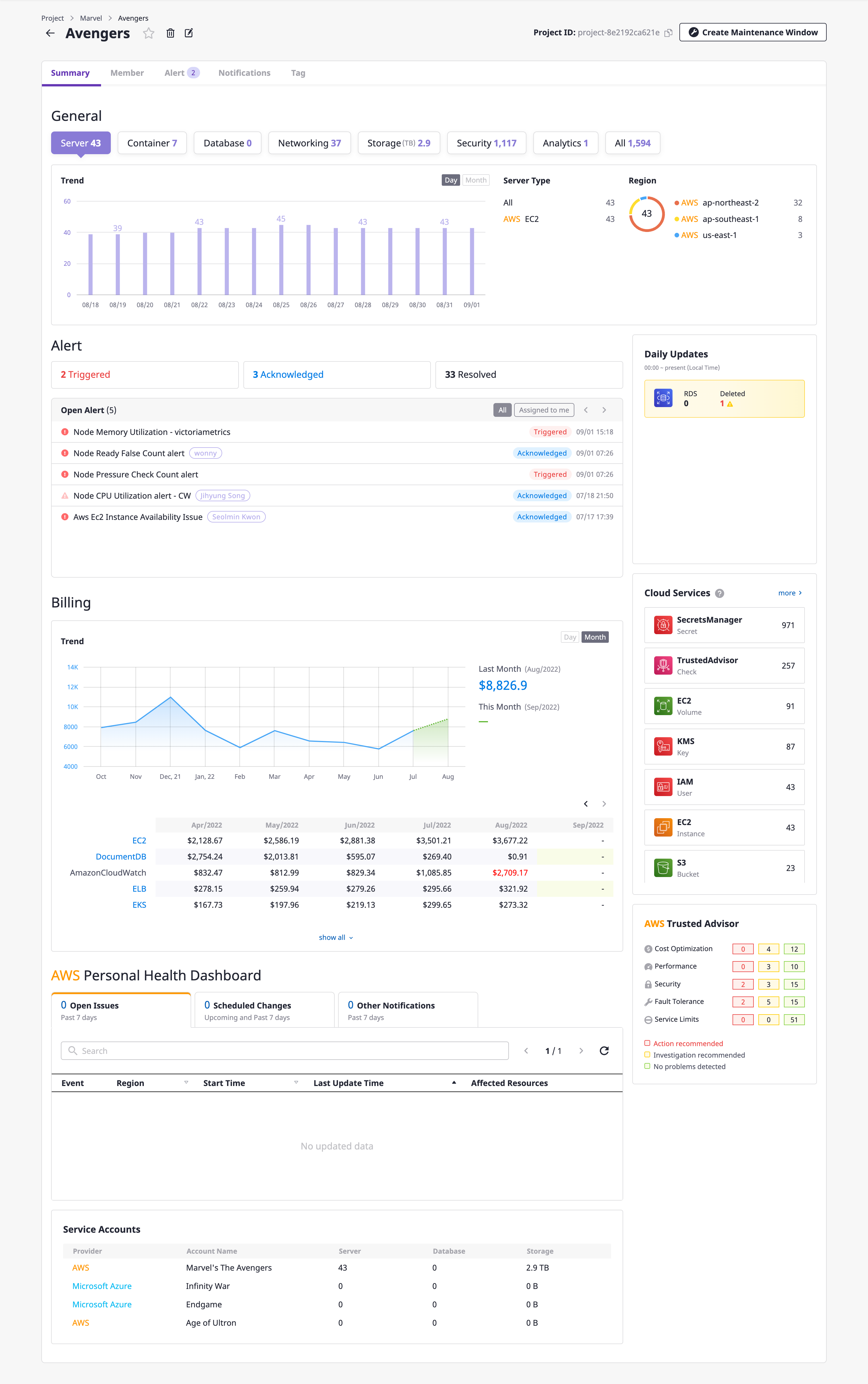

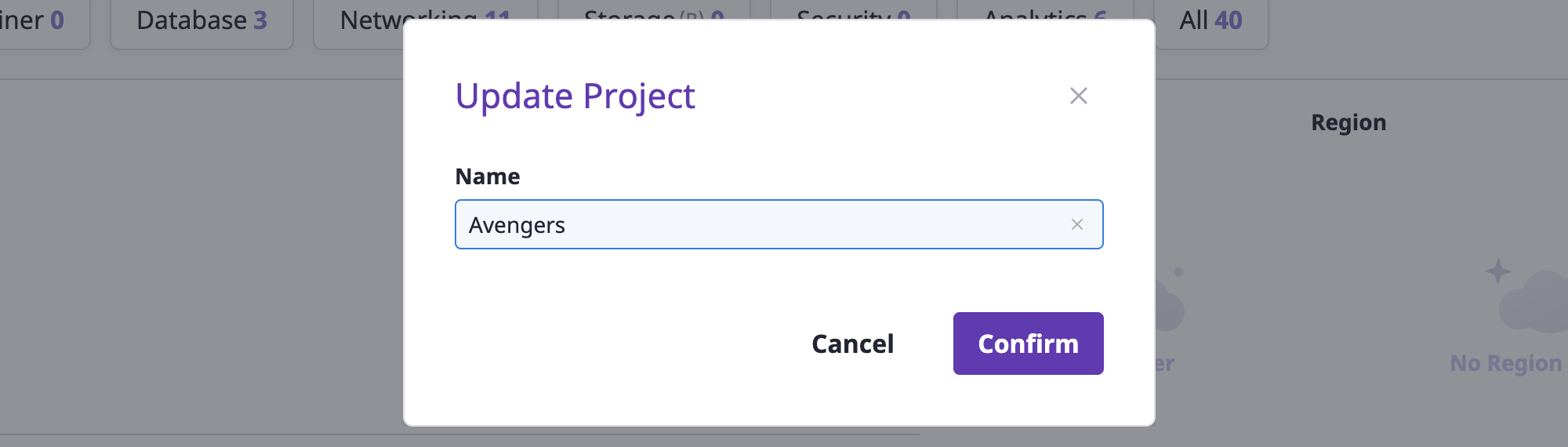

- 4.3: Project

- 4.4: Asset inventory

- 4.4.1: Quick Start

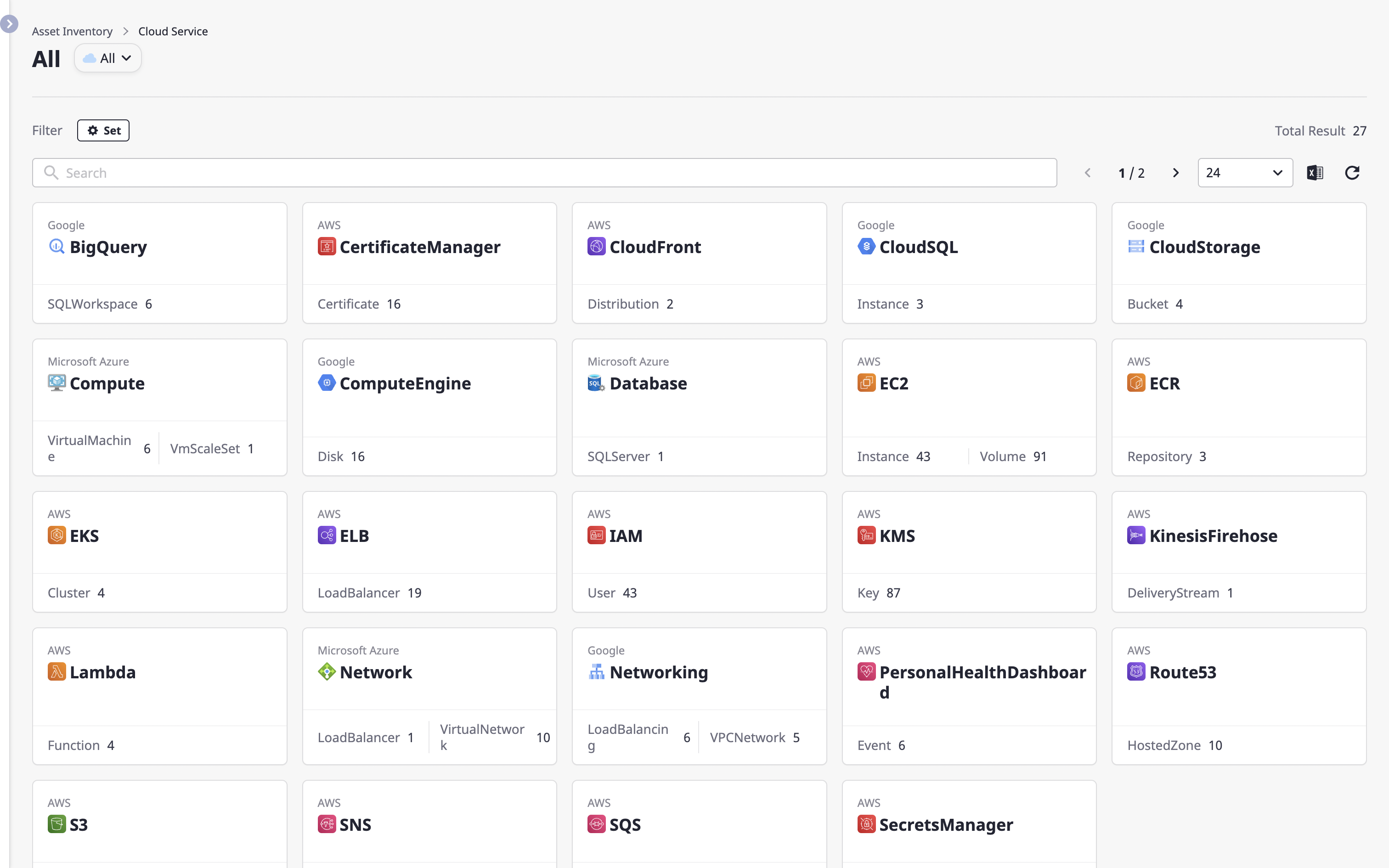

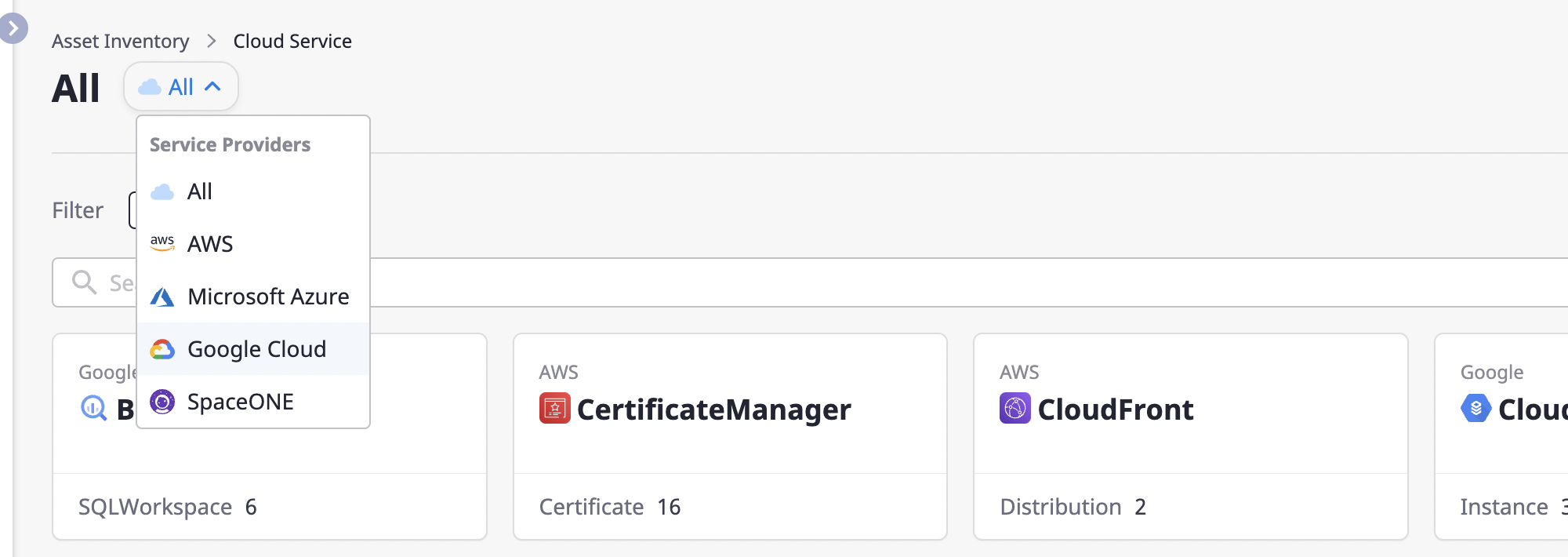

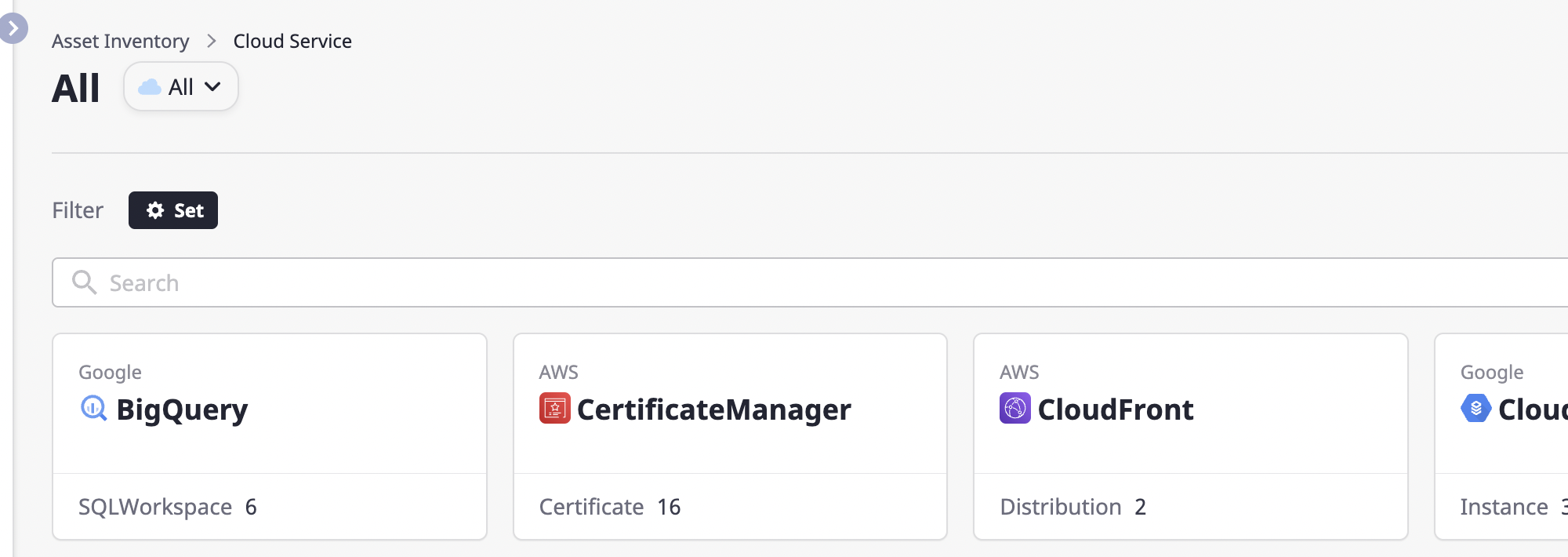

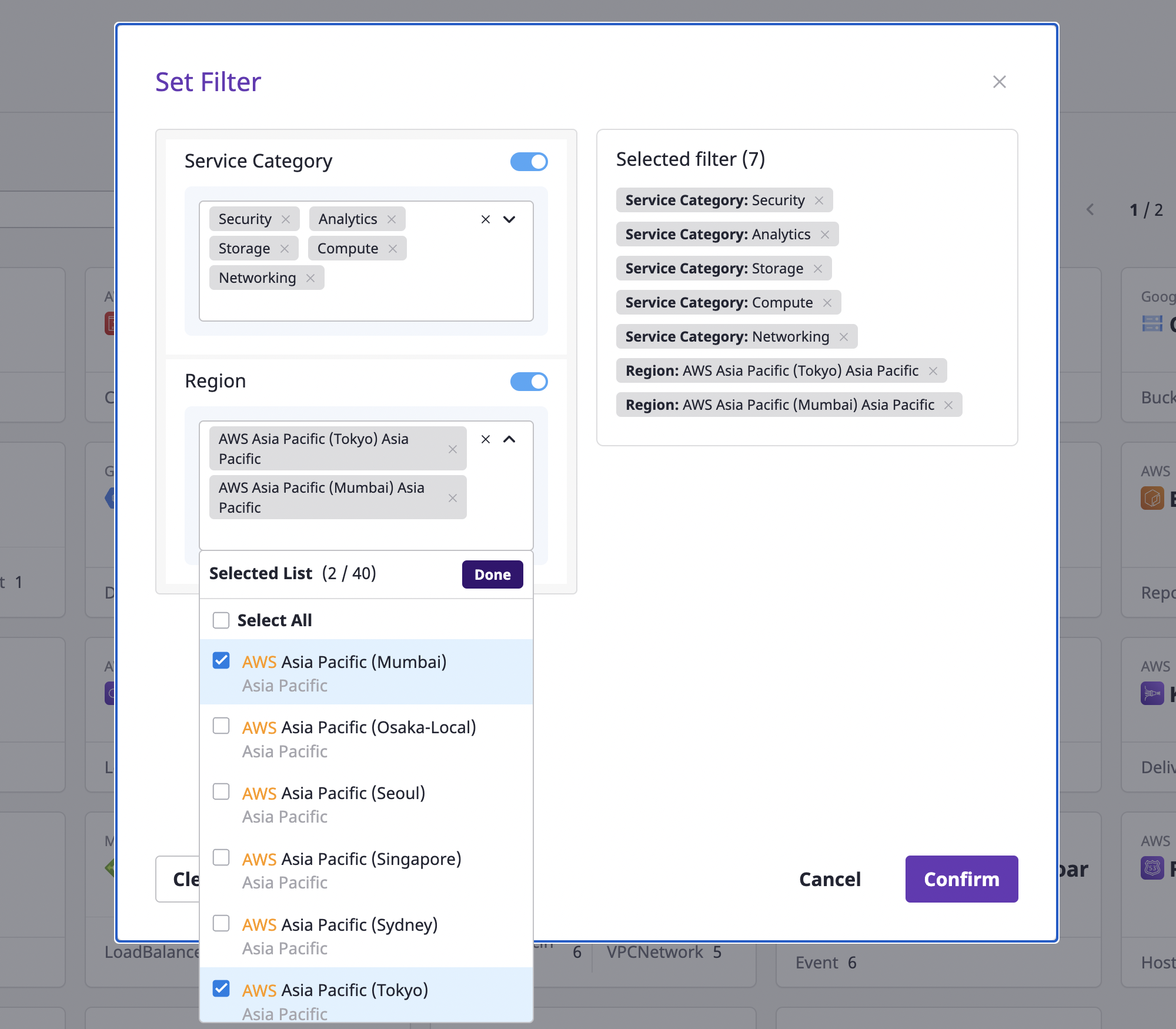

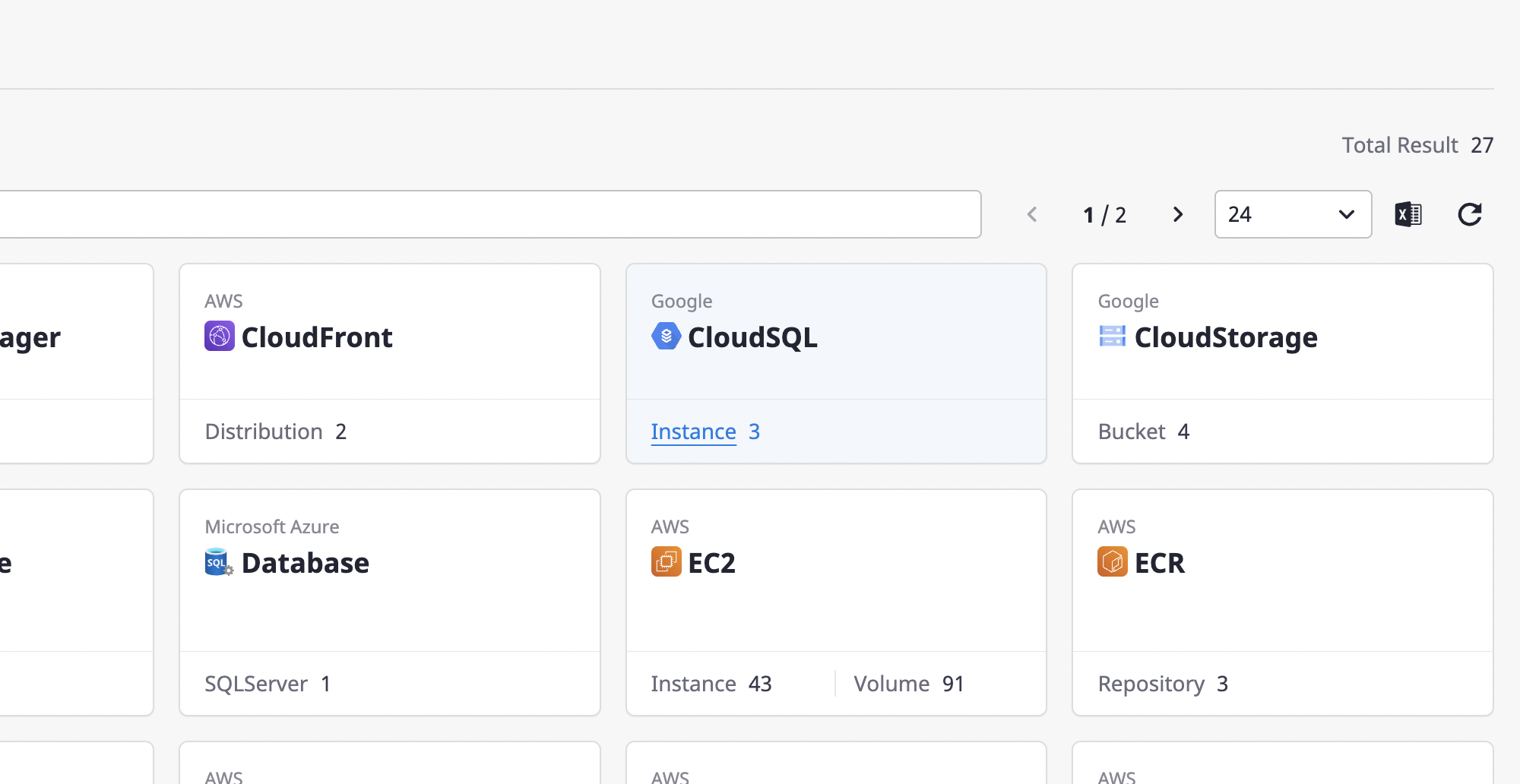

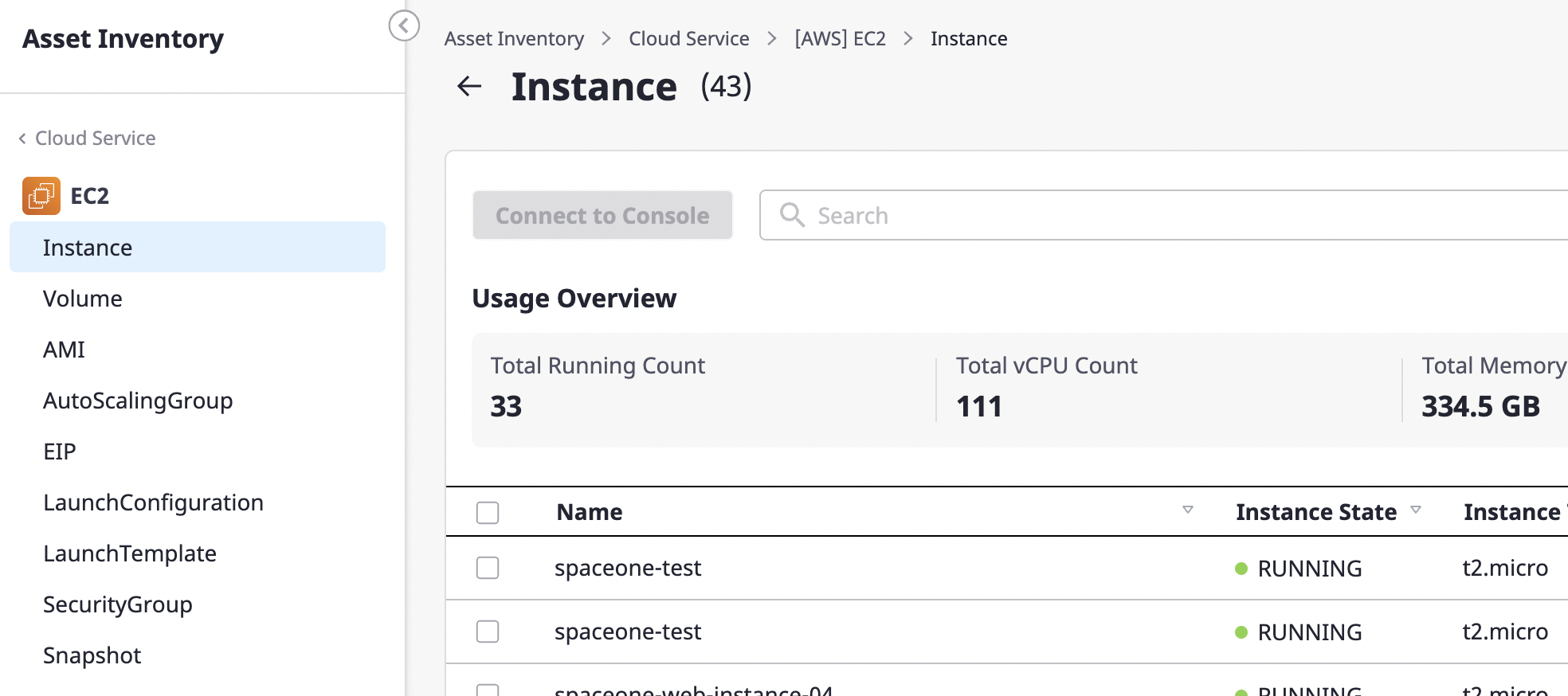

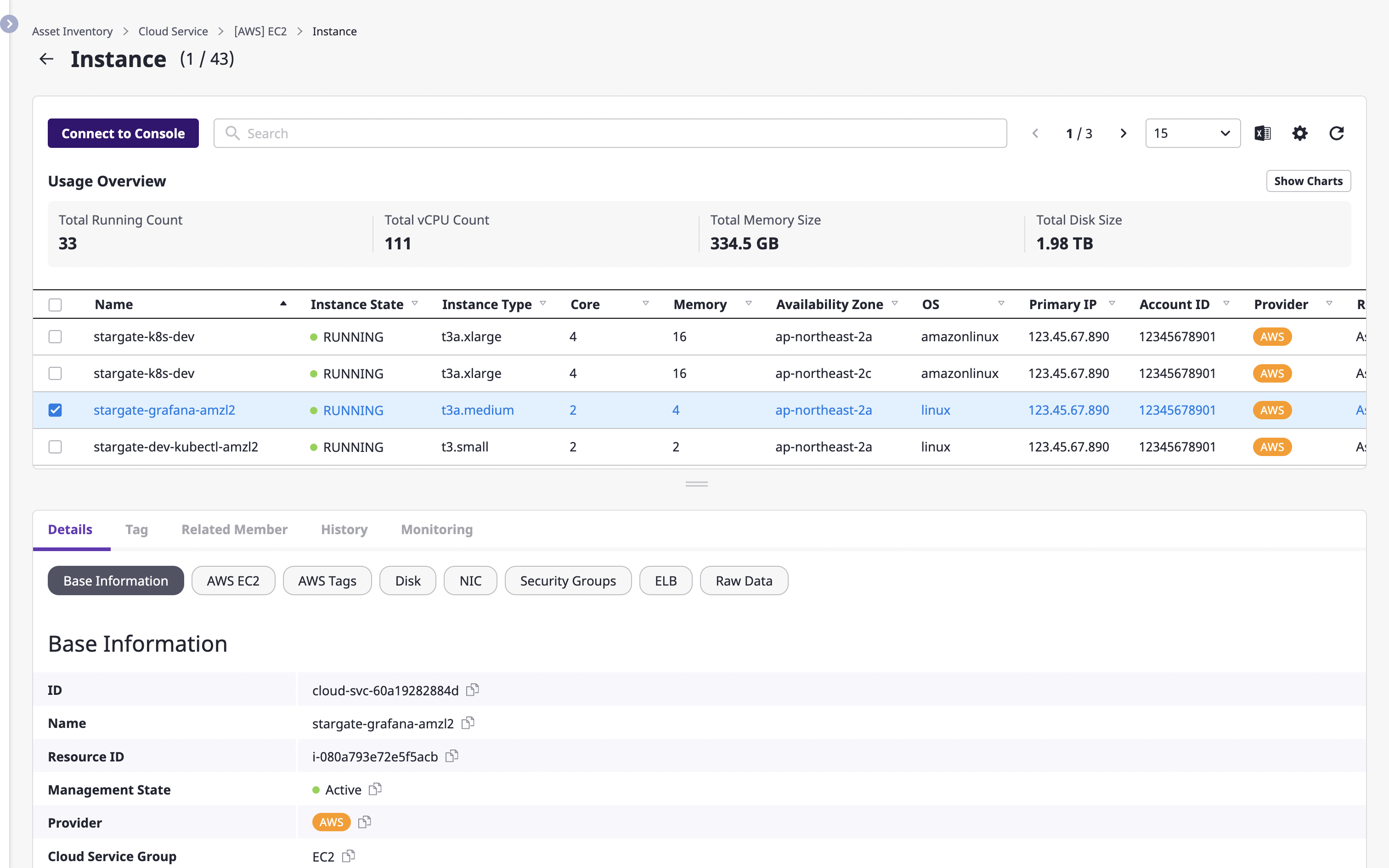

- 4.4.2: Cloud service

- 4.4.3: Server

- 4.4.4: Collector

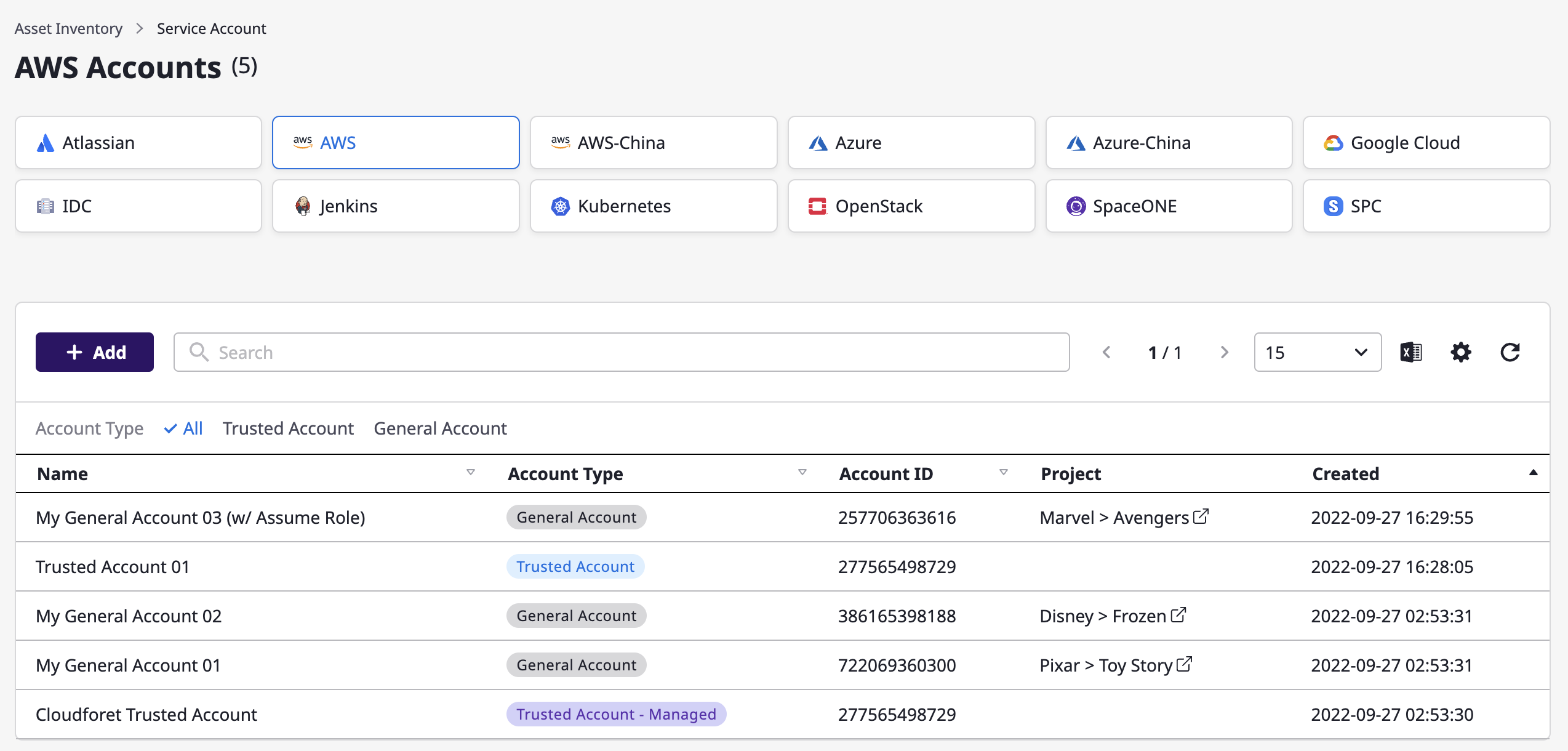

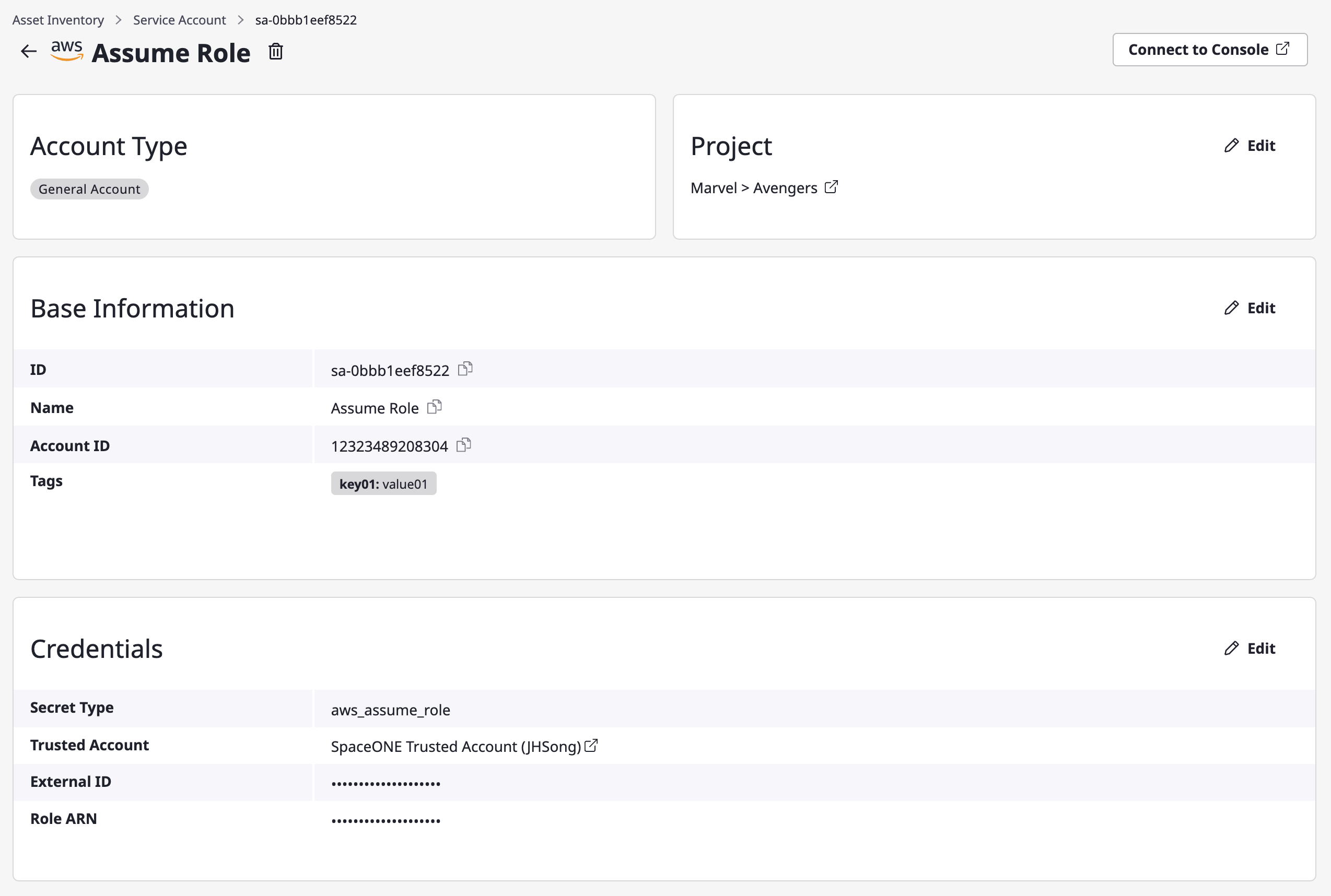

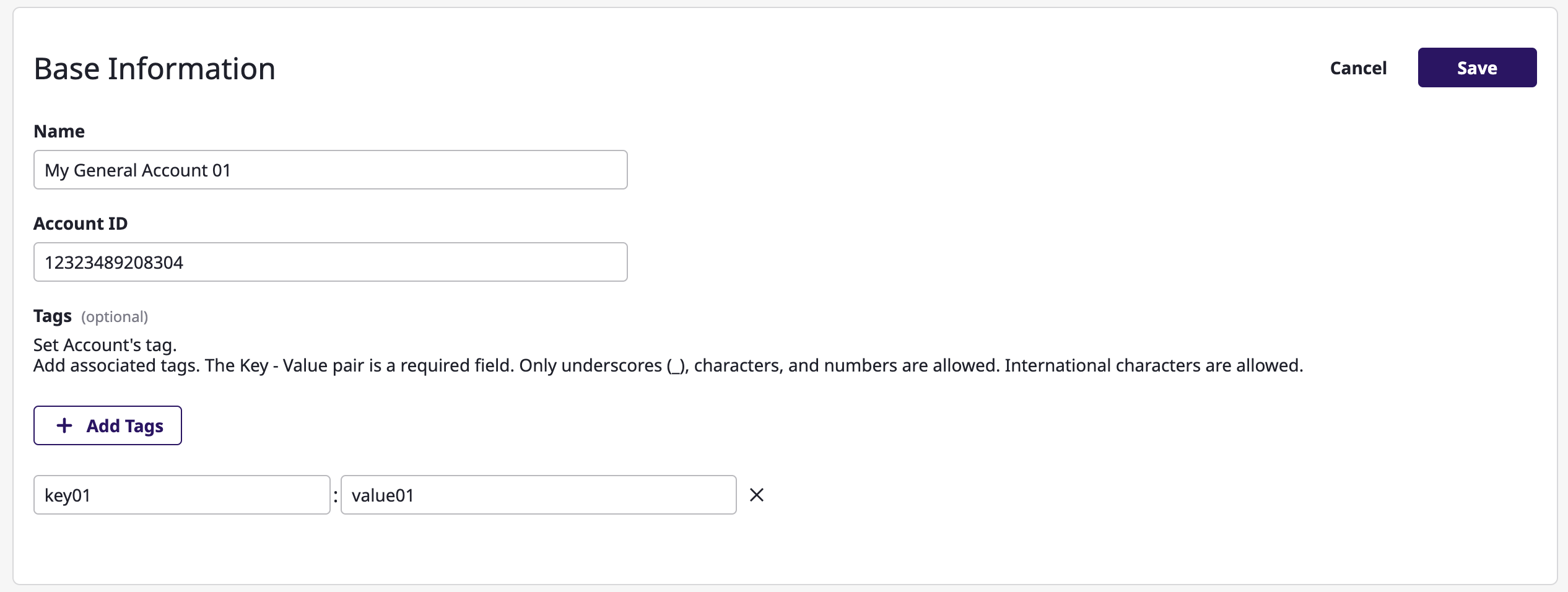

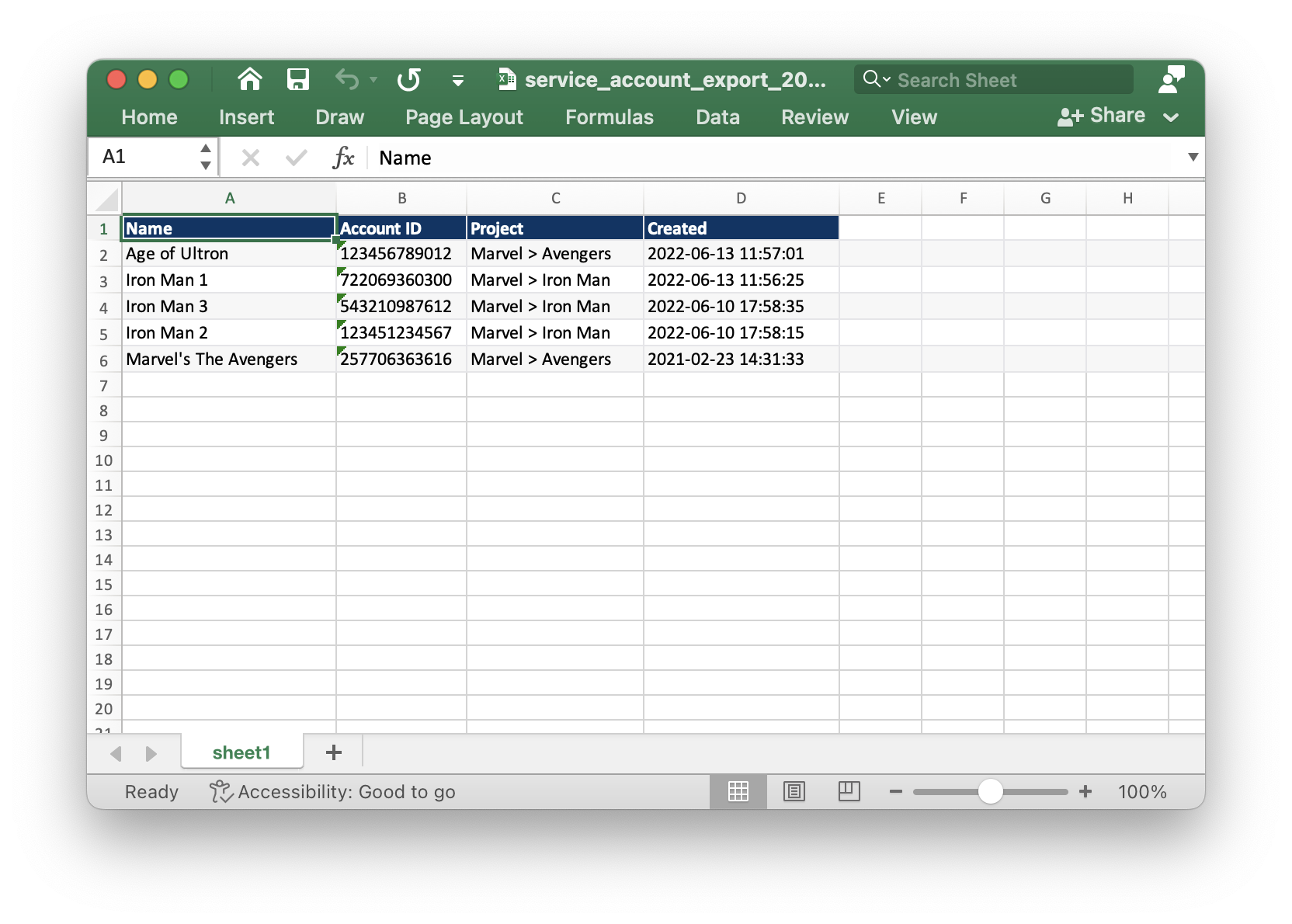

- 4.4.5: Service account

- 4.5: Cost Explorer

- 4.5.1: Cost analysis

- 4.5.2: Budget

- 4.6: Alert manager

- 4.6.1: Quick Start

- 4.6.2: Dashboard

- 4.6.3: Alert

- 4.6.4: Webhook

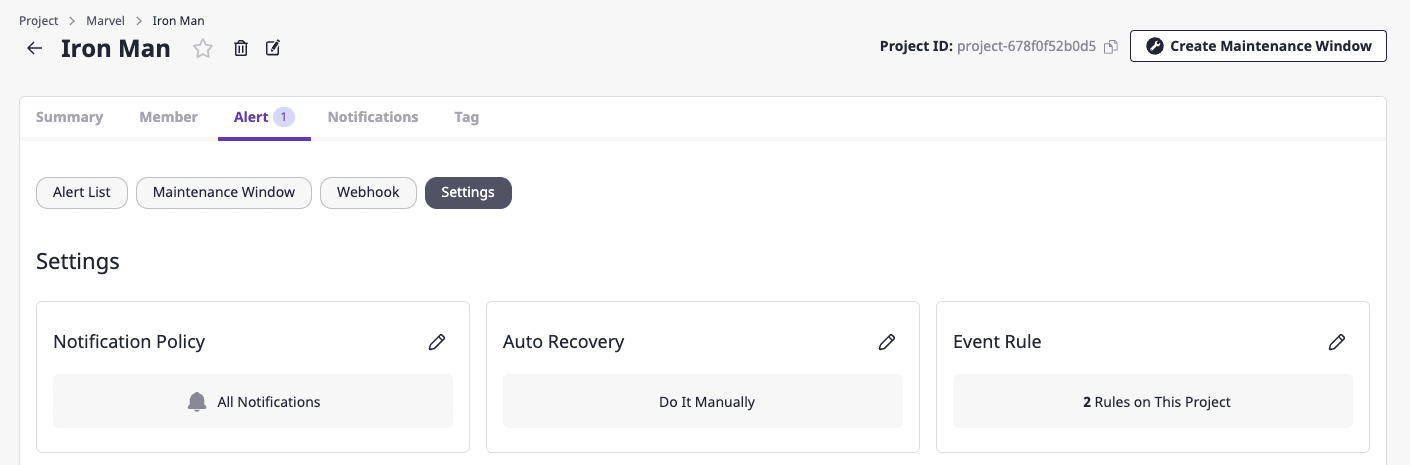

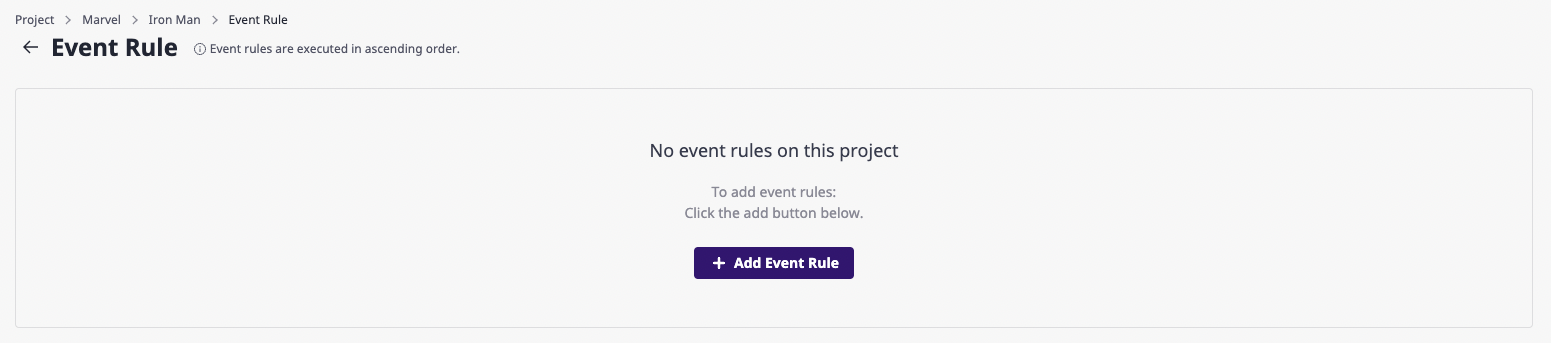

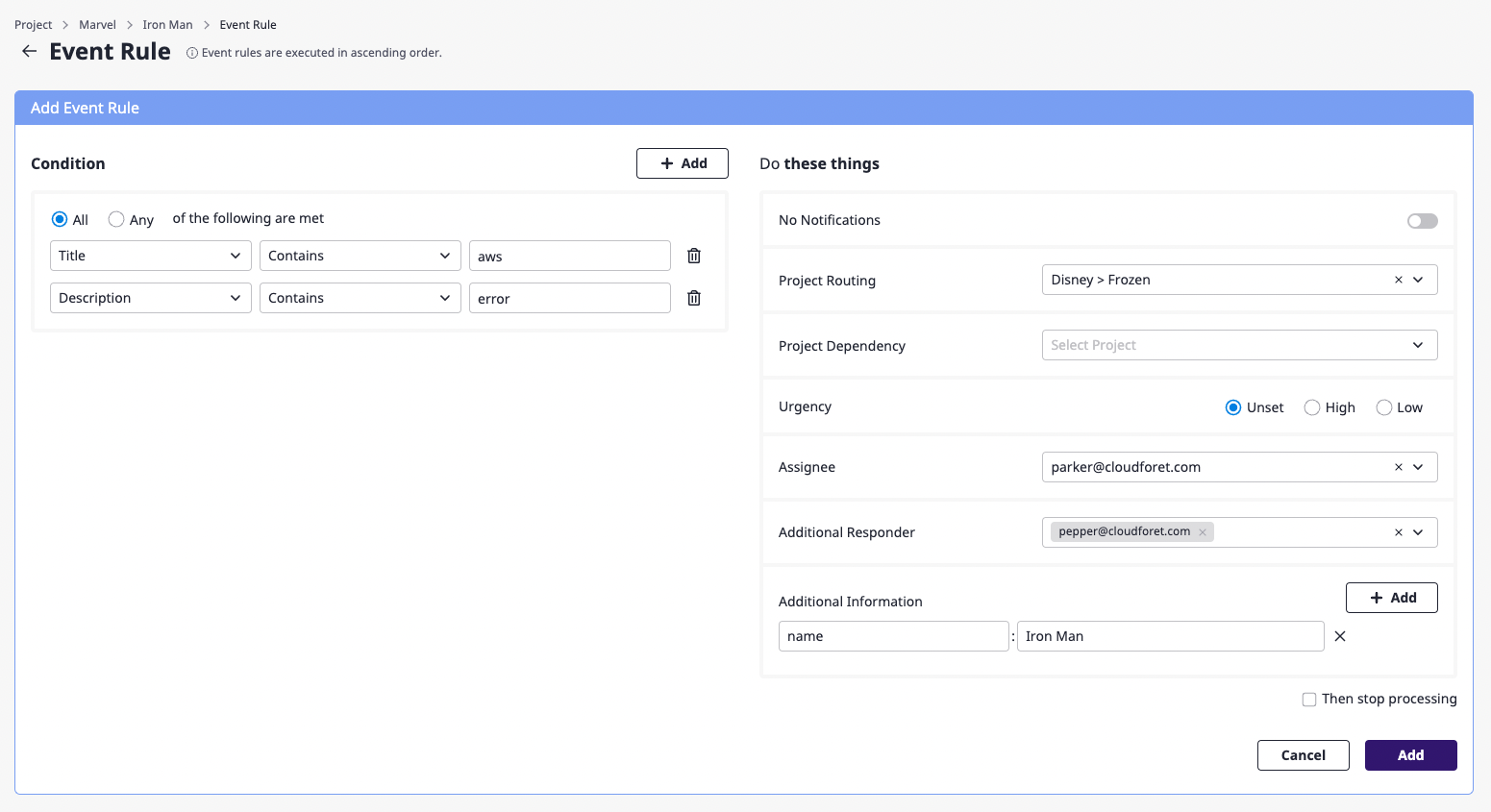

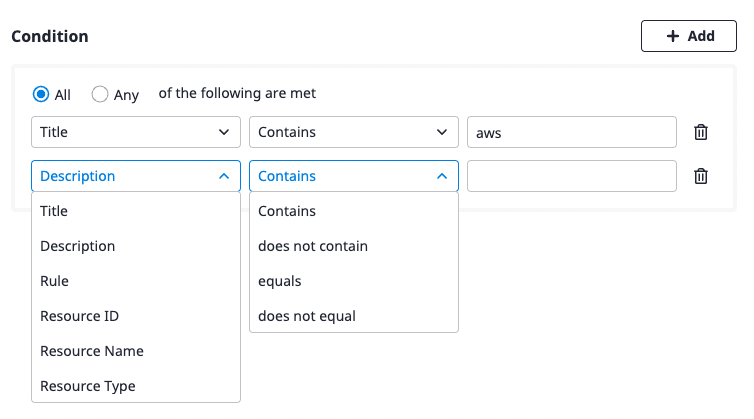

- 4.6.5: Event rule

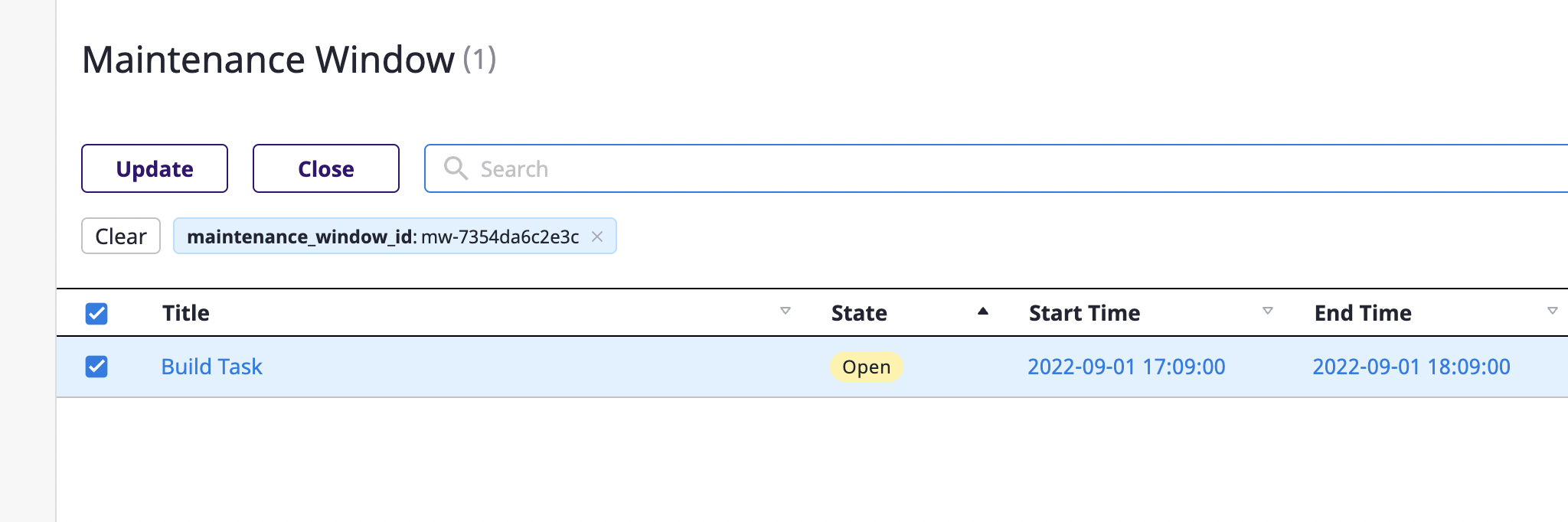

- 4.6.6: Maintenance window

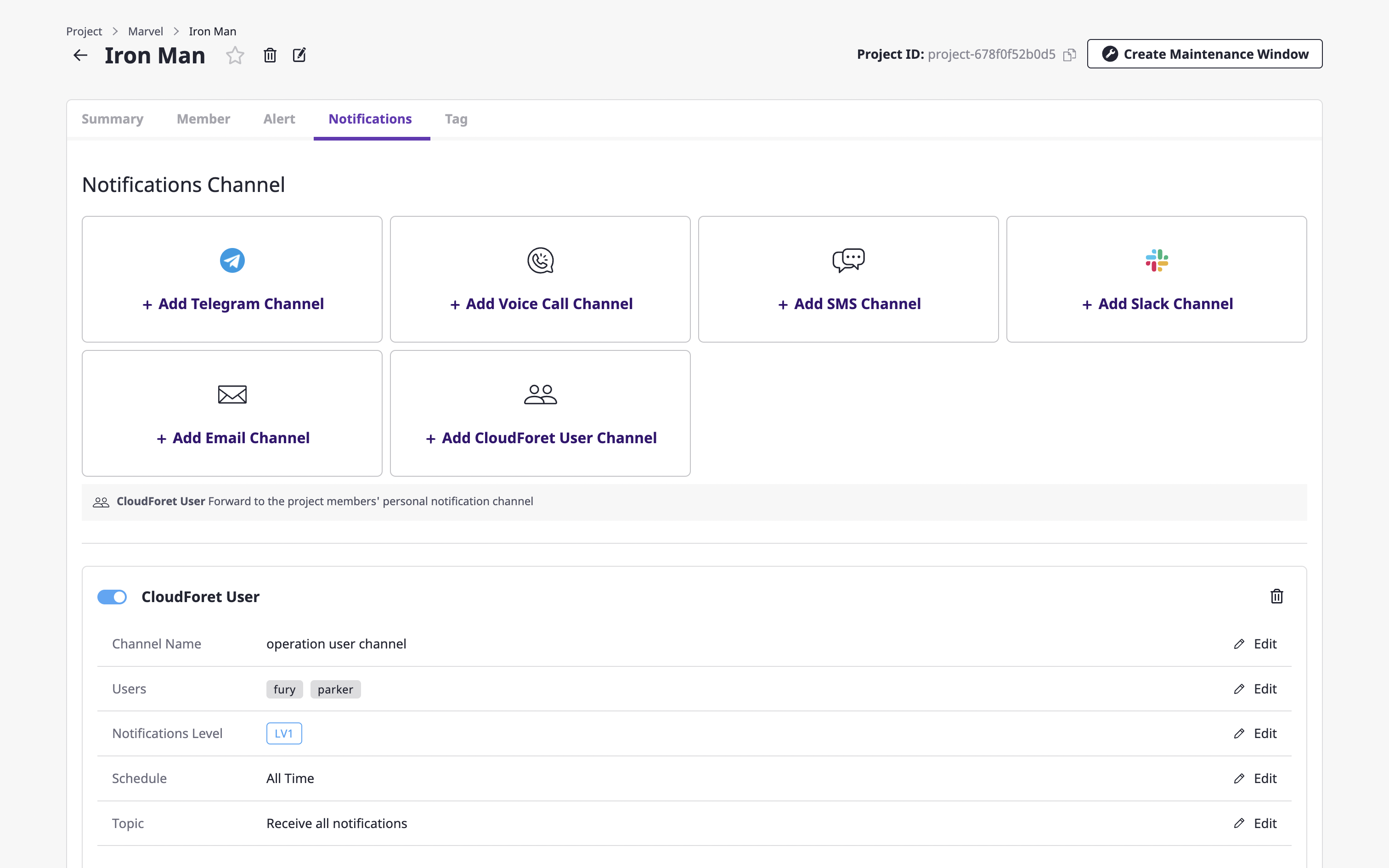

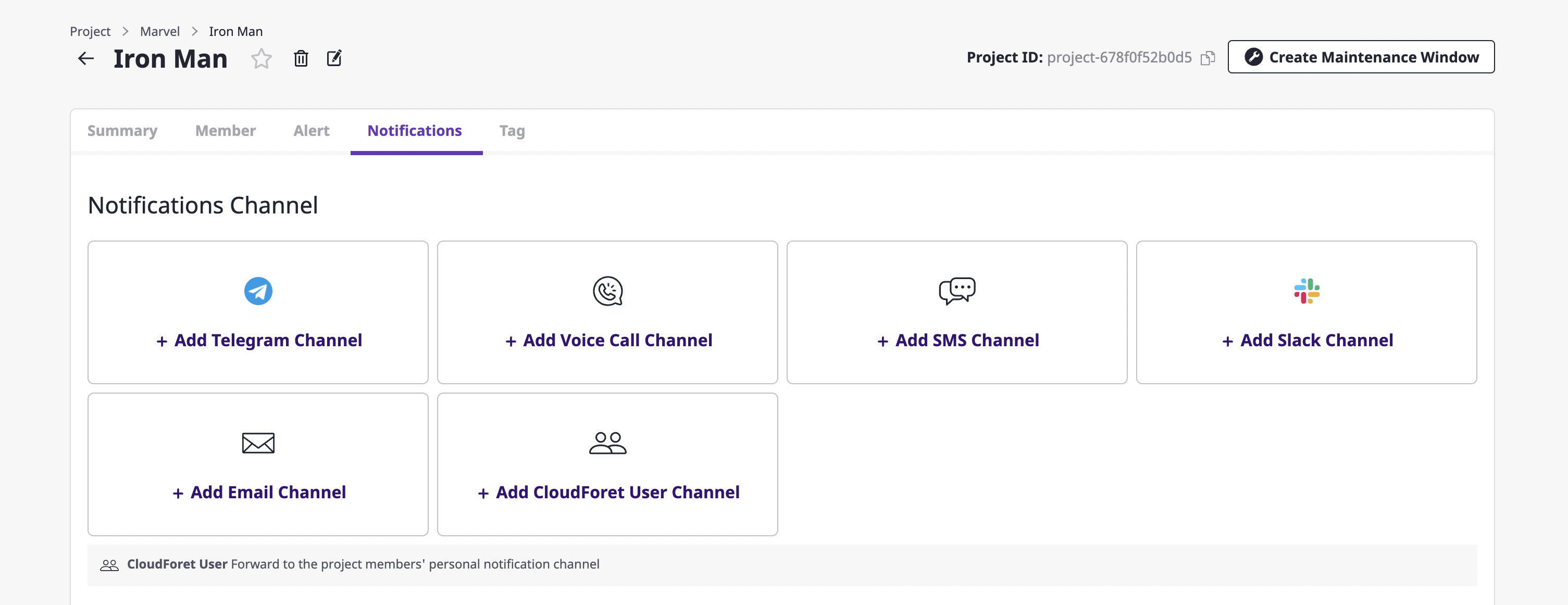

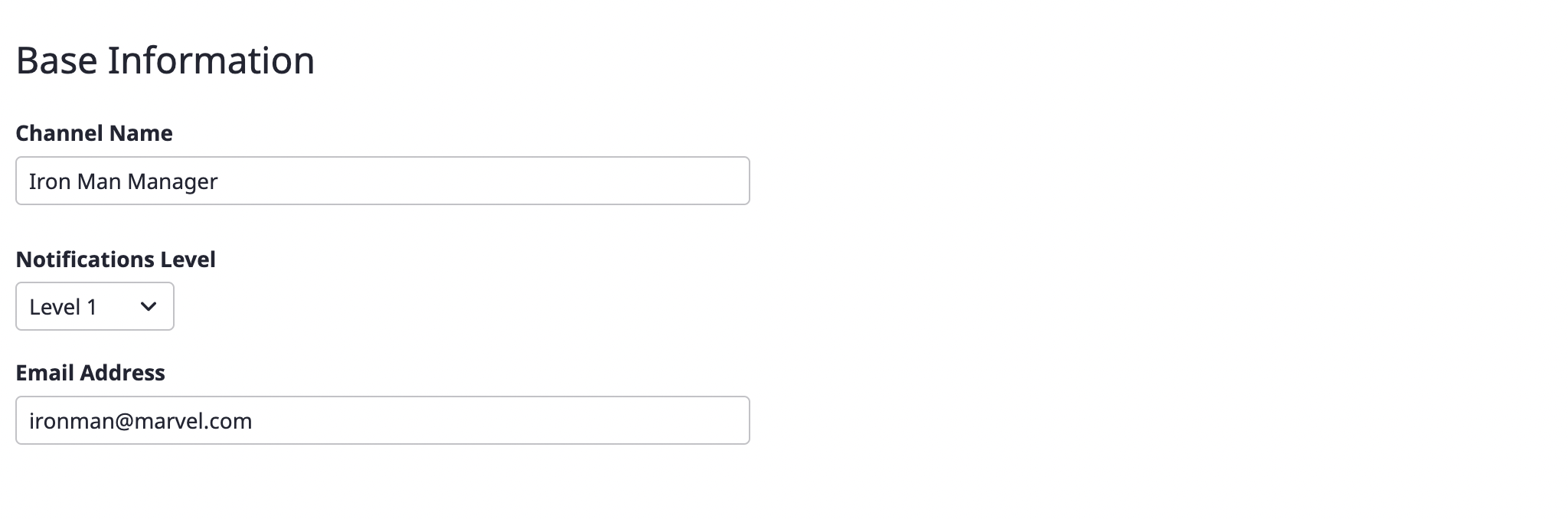

- 4.6.7: Notification

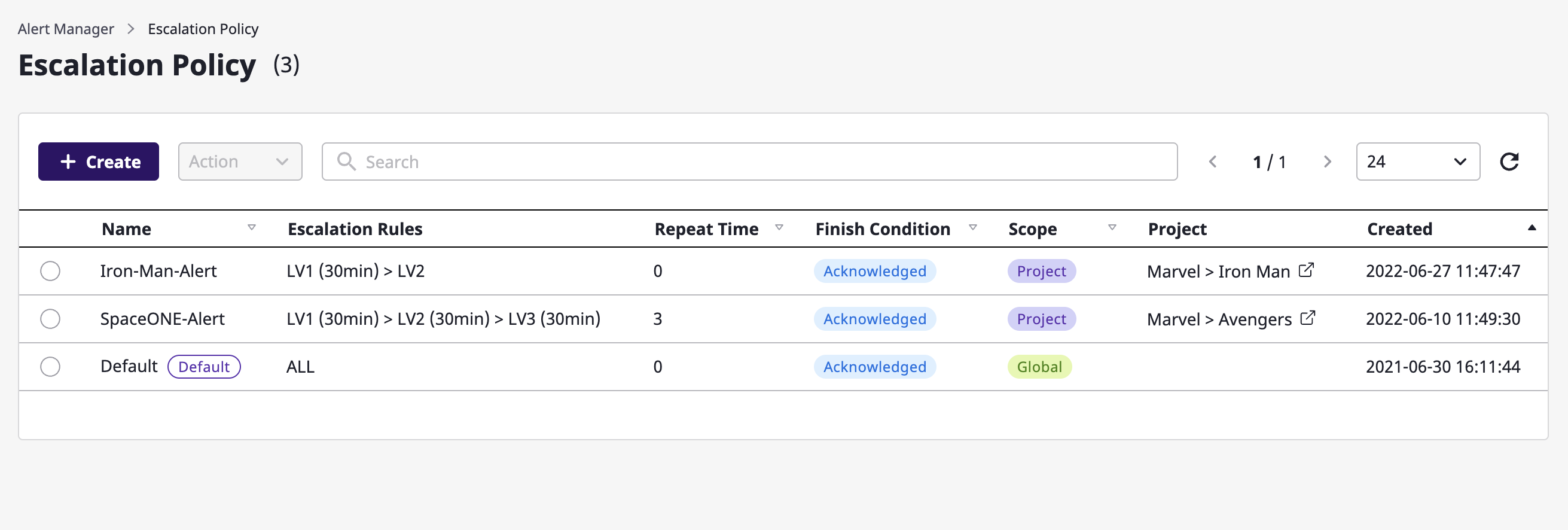

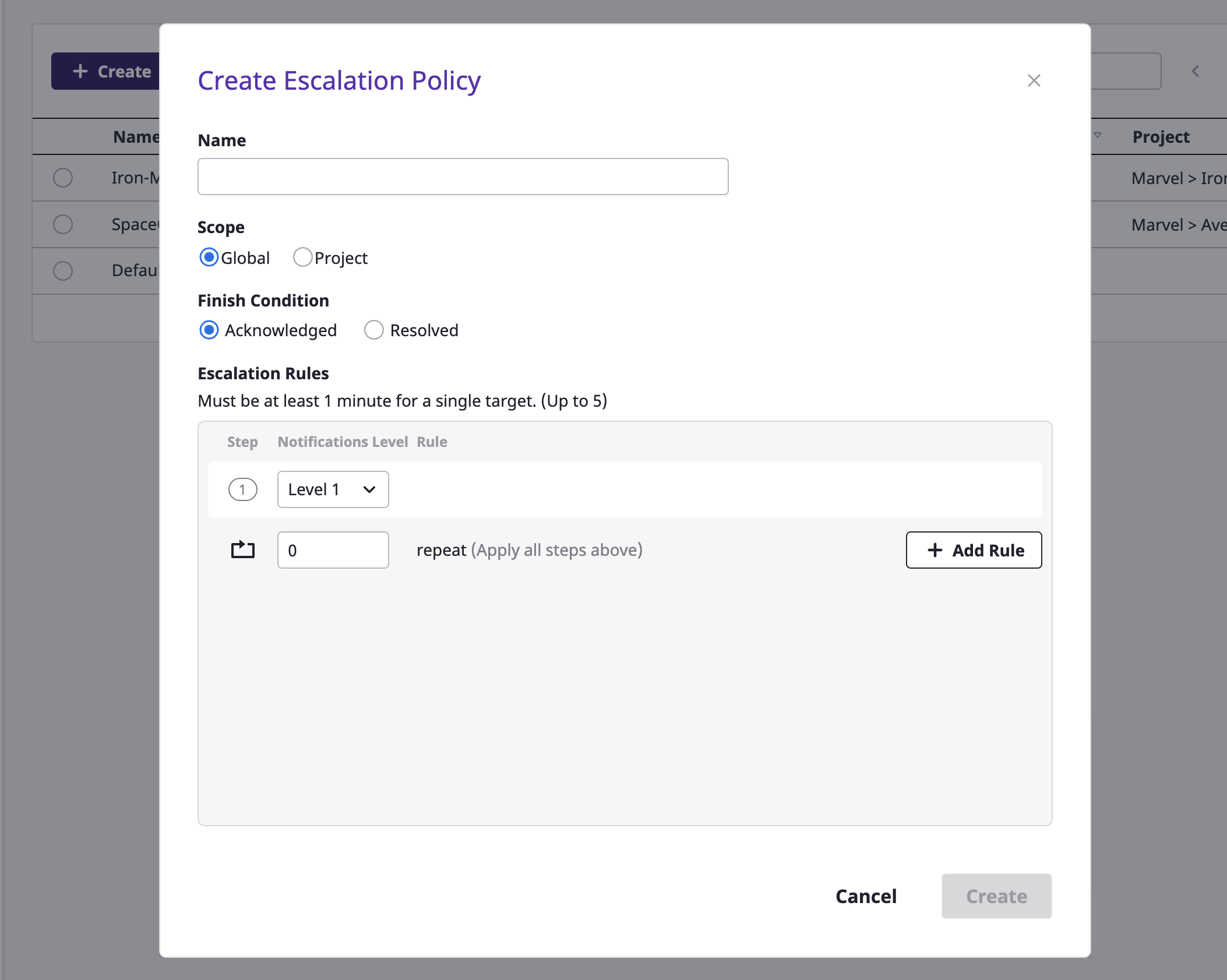

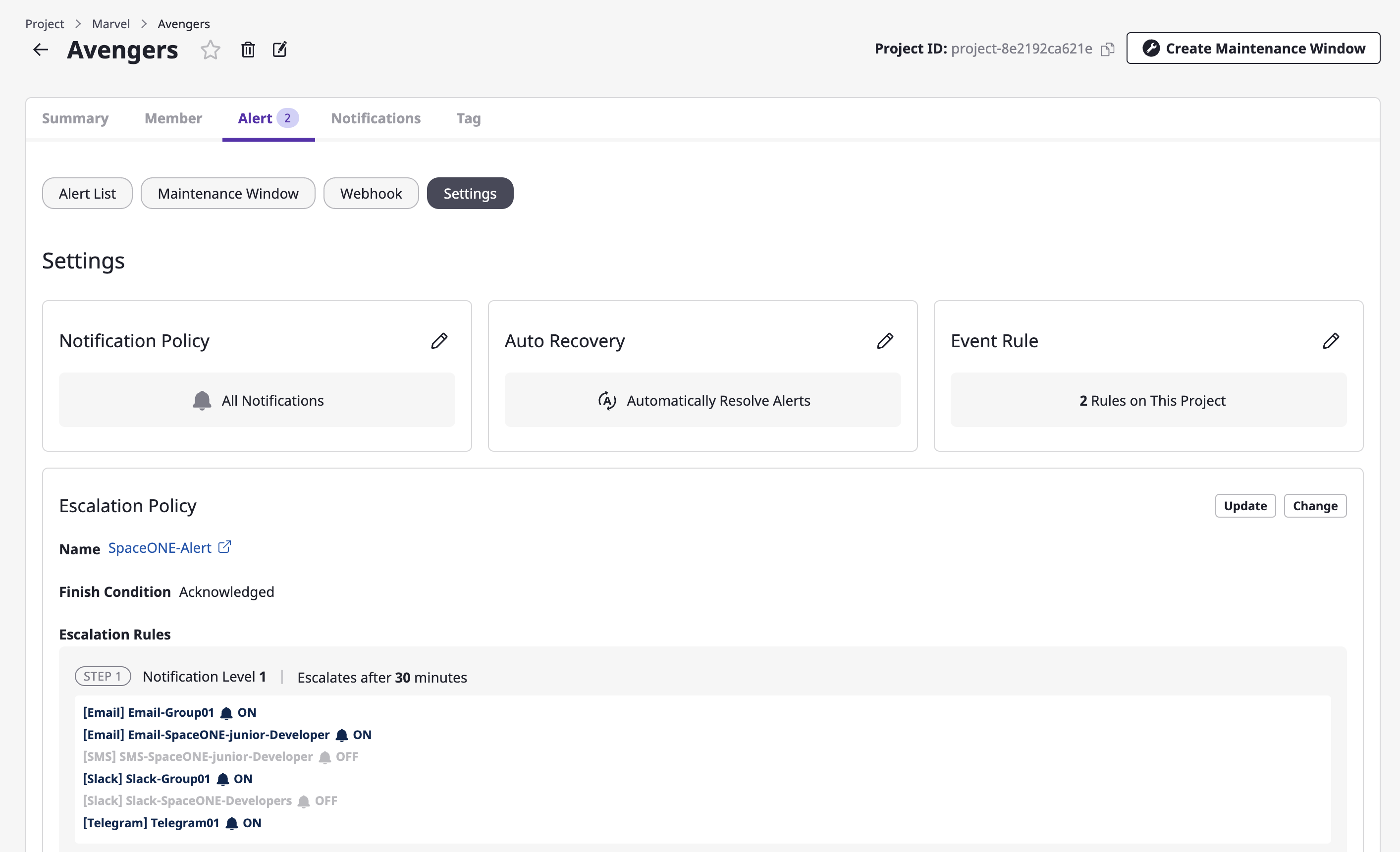

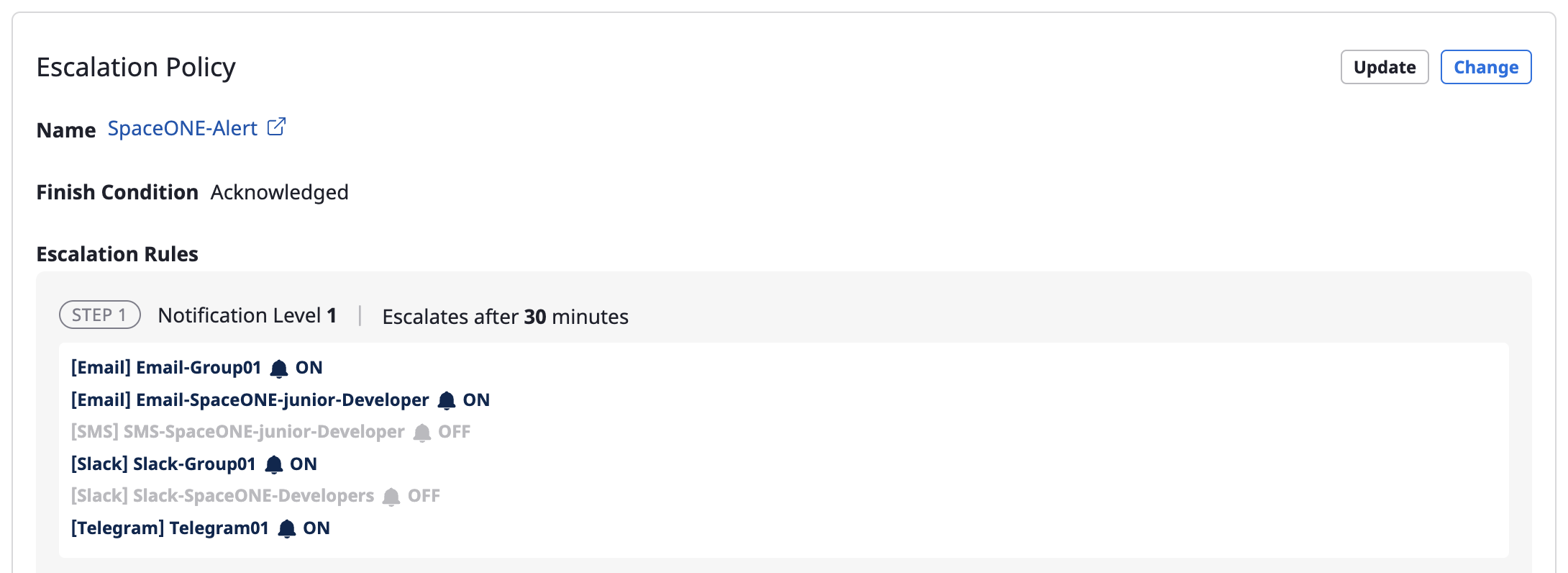

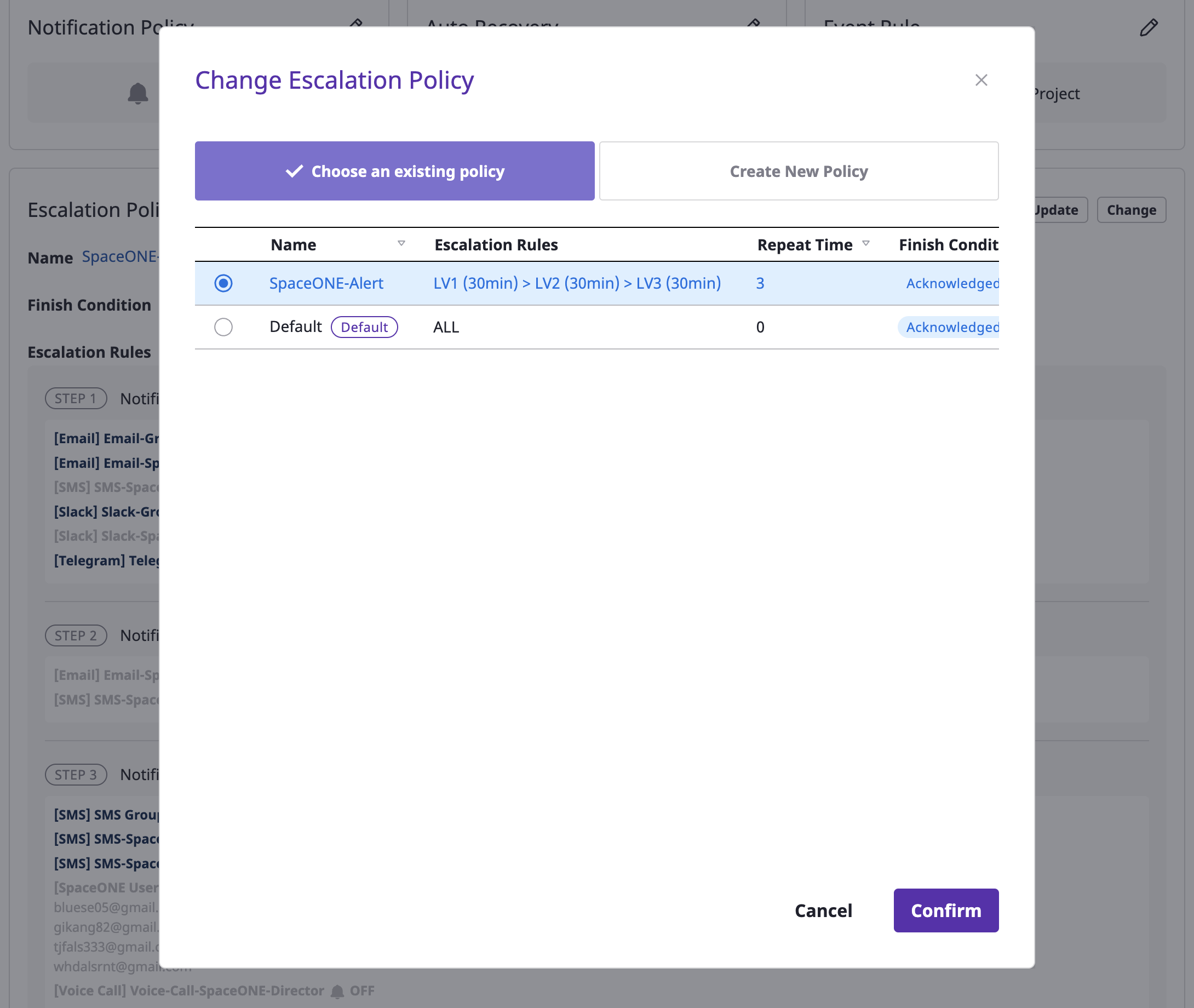

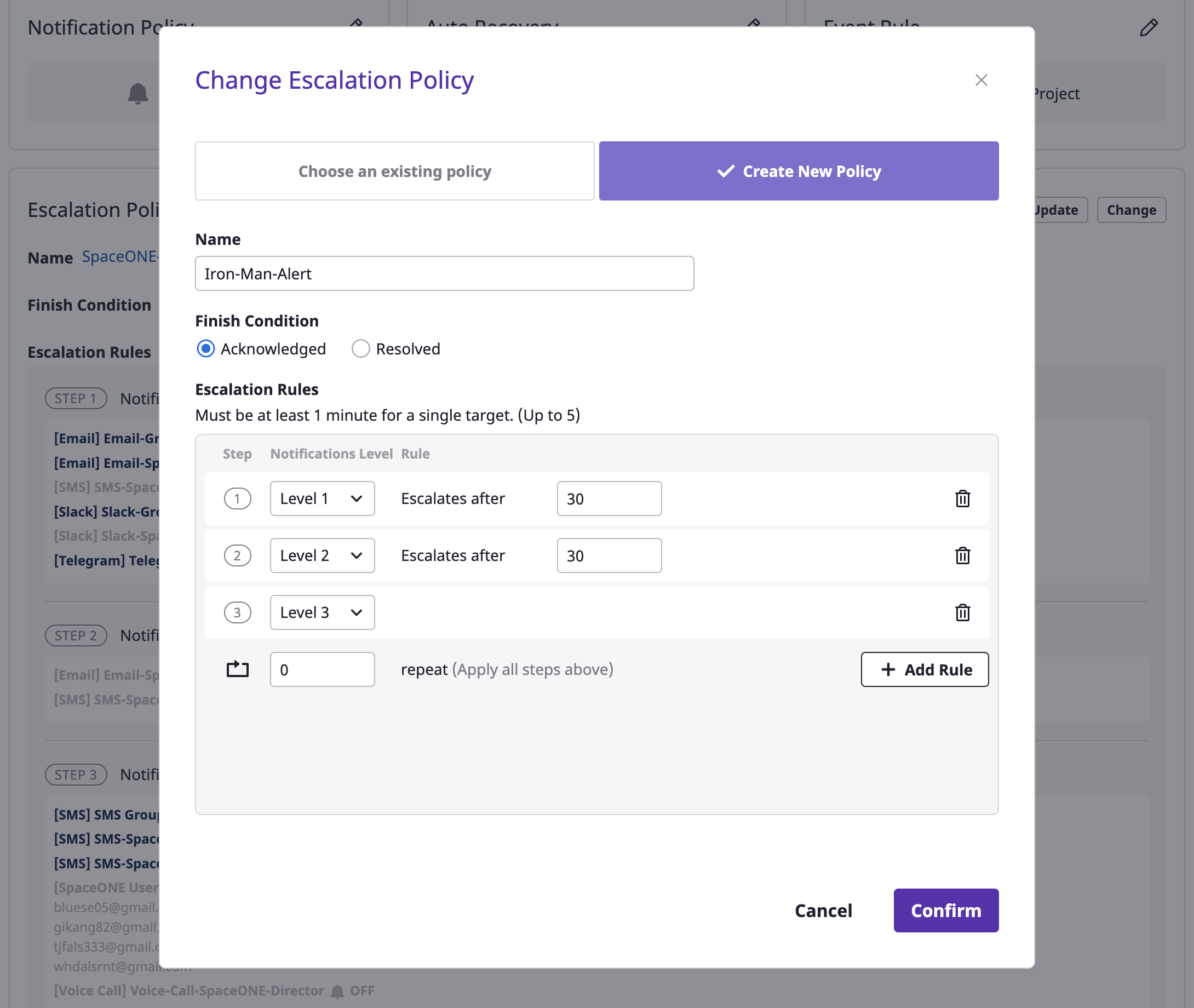

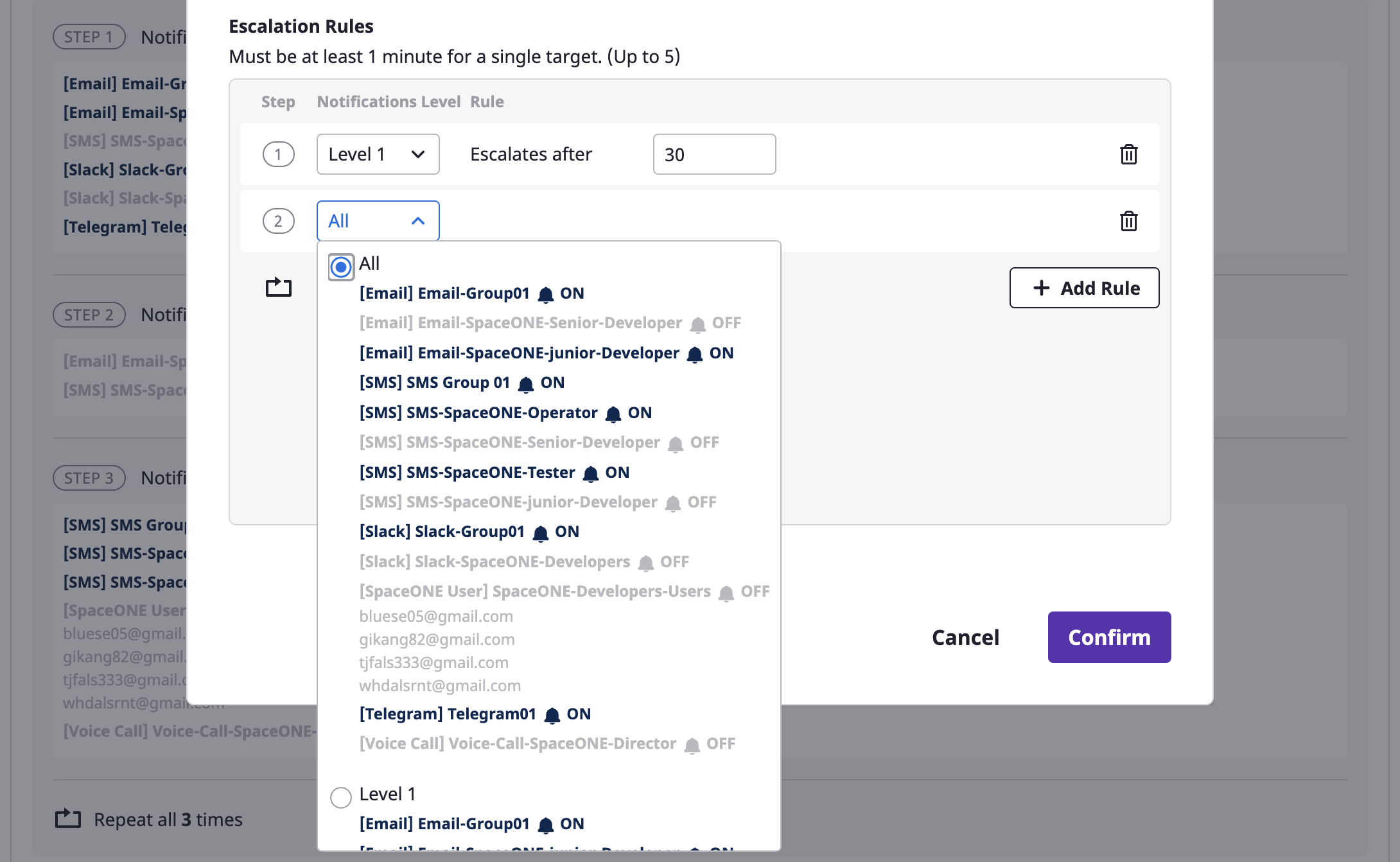

- 4.6.8: Escalation policy

- 4.7: Administration

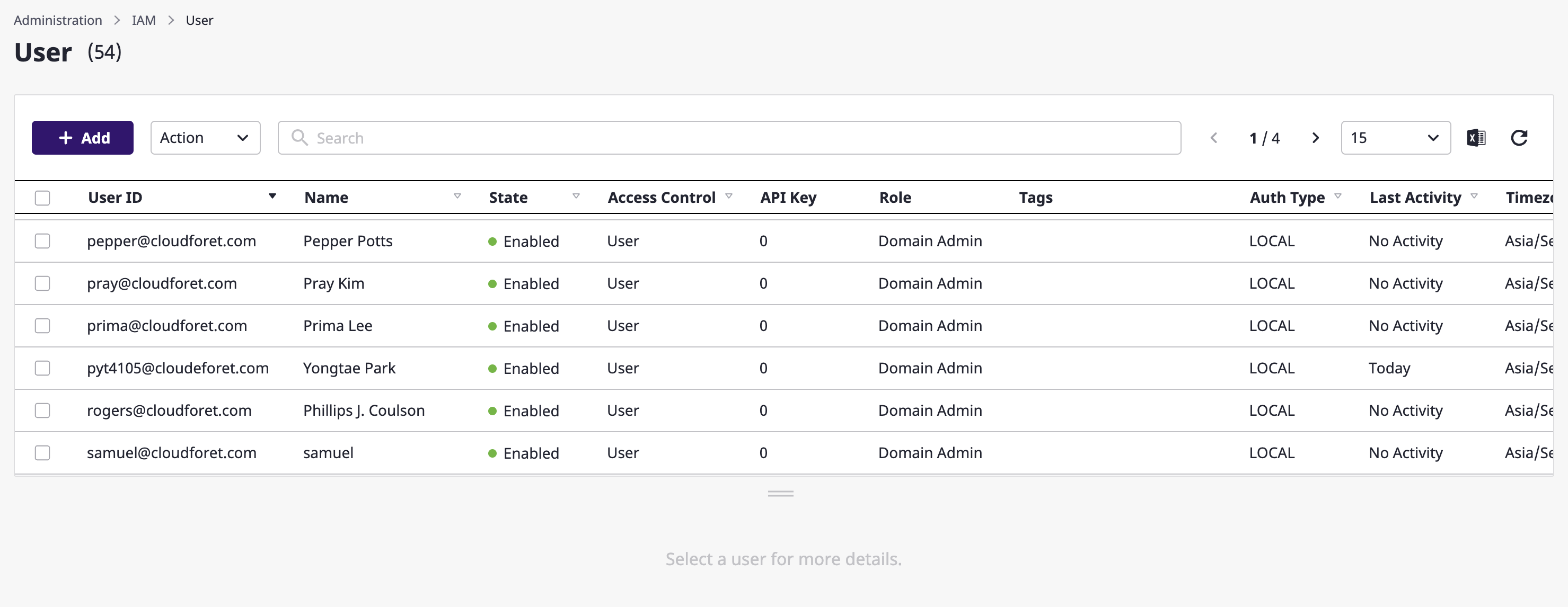

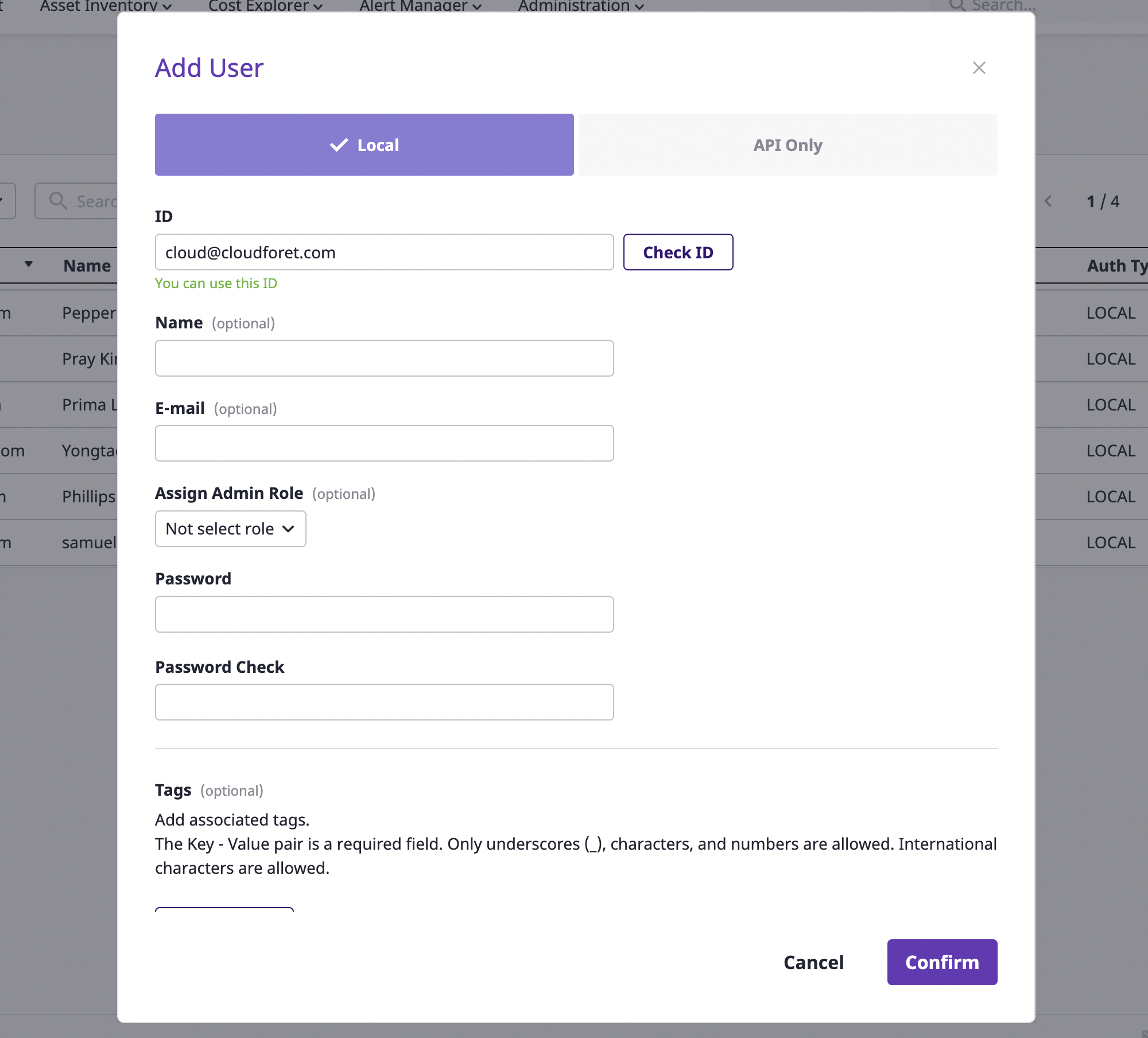

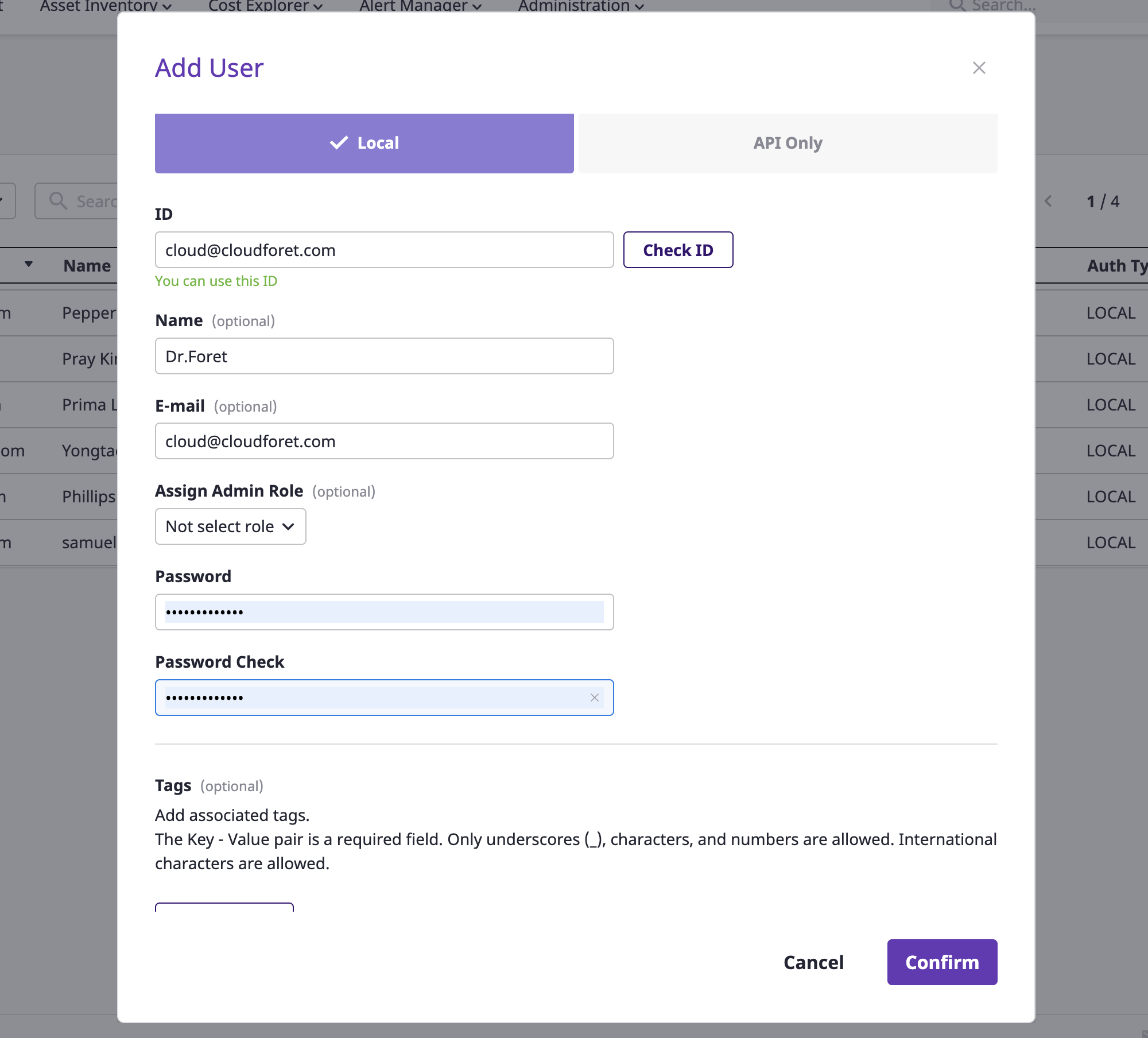

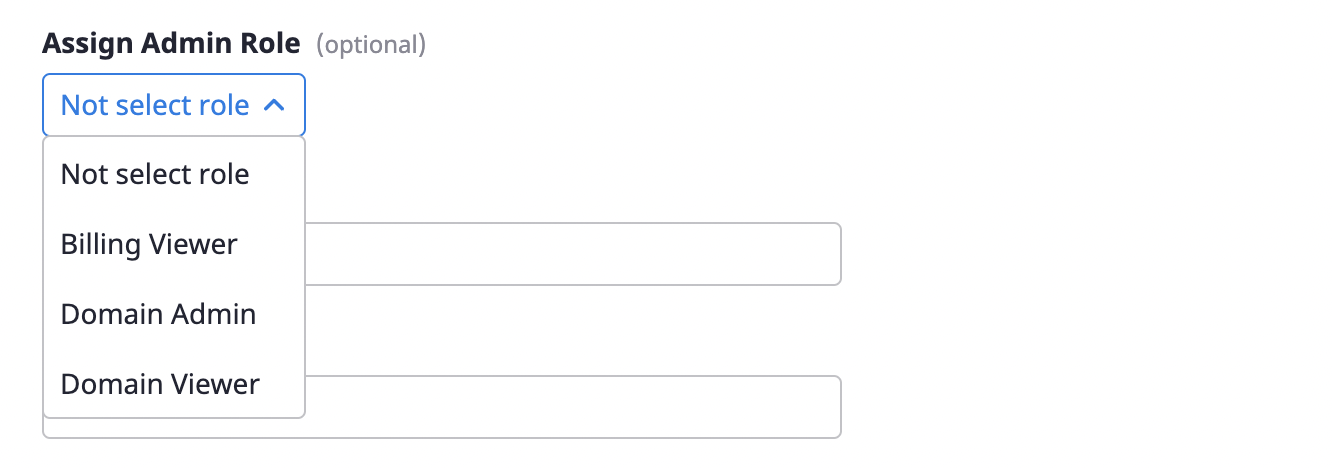

- 4.7.1: [IAM] User

- 4.8: My page

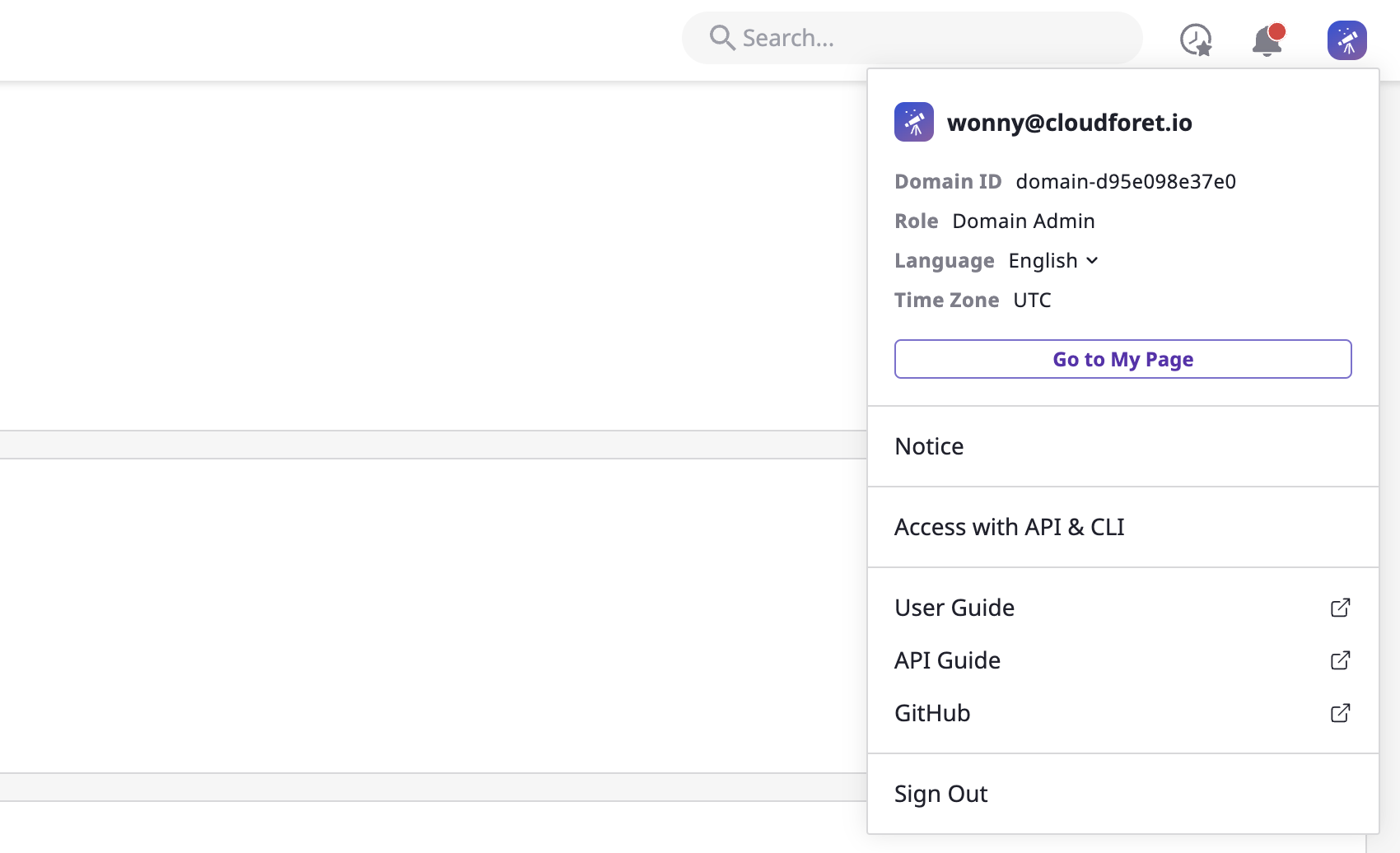

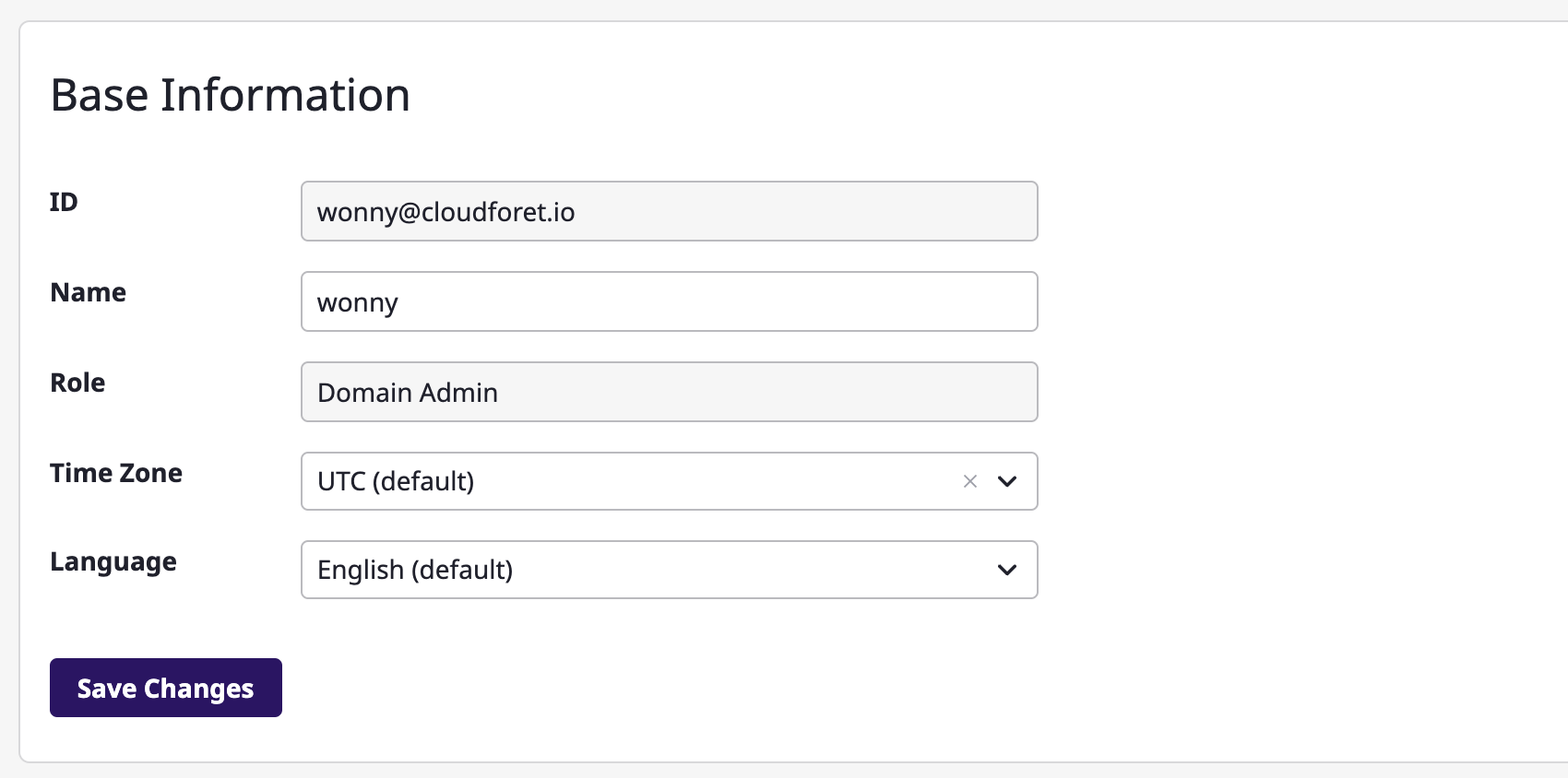

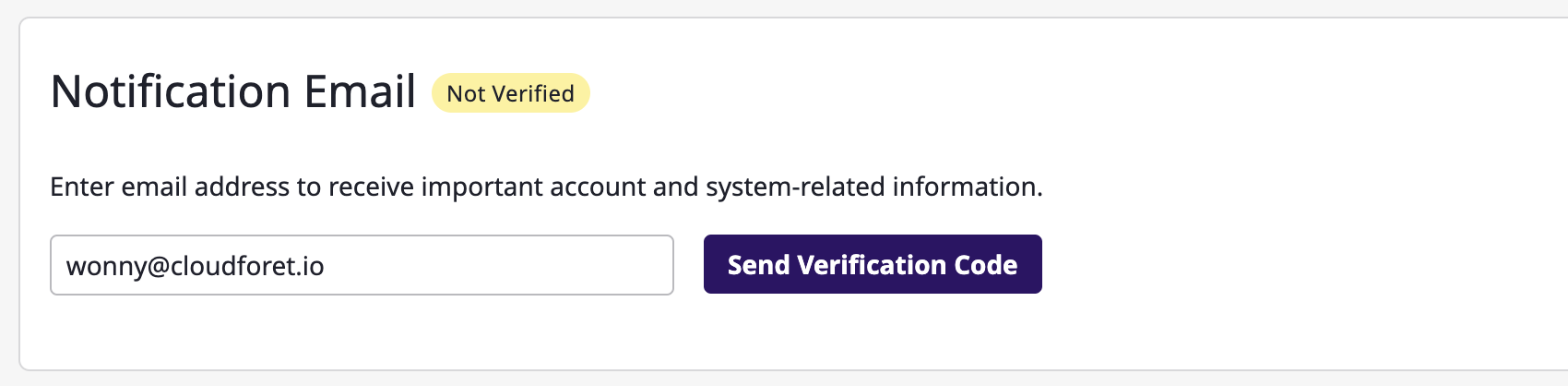

- 4.8.1: Account & profile

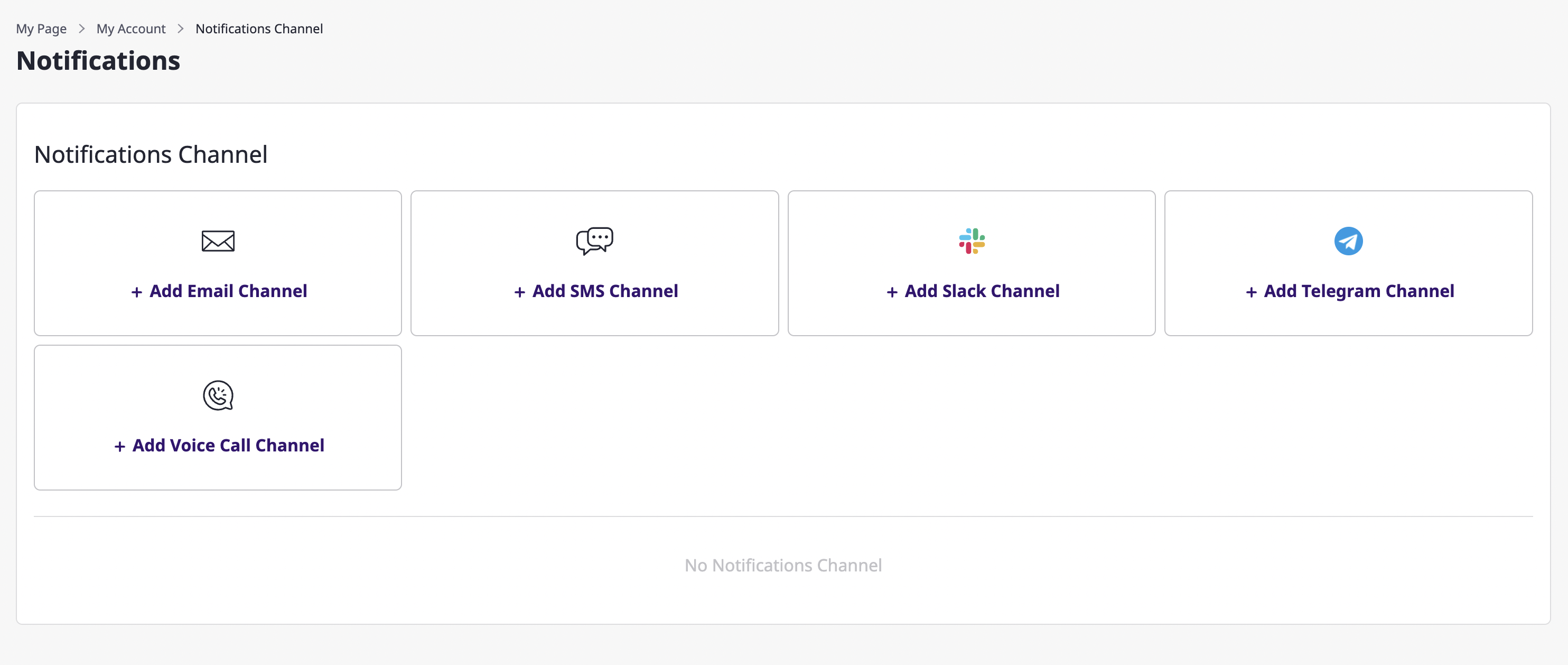

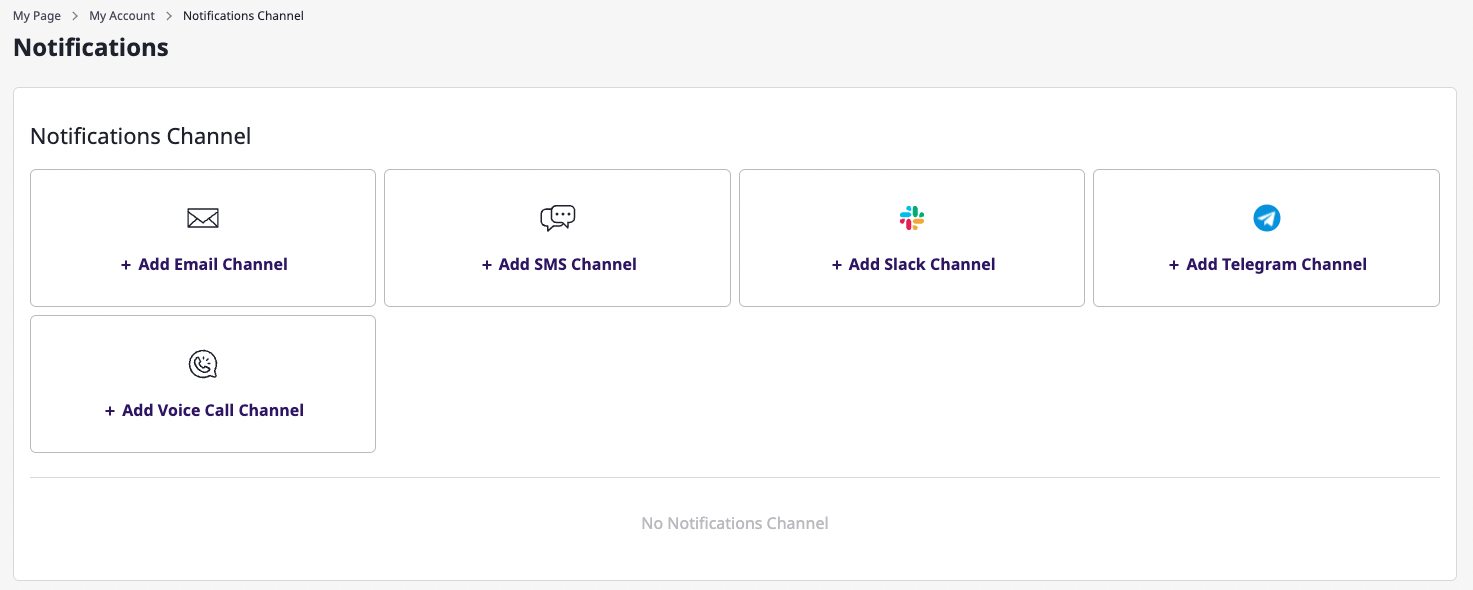

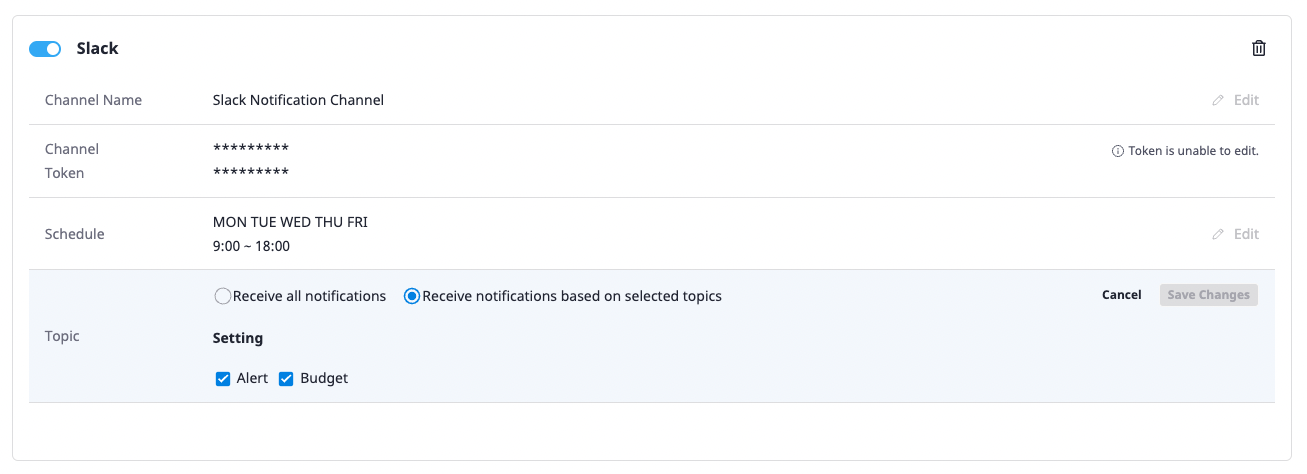

- 4.8.2: Notifications channel

- 4.9: Information

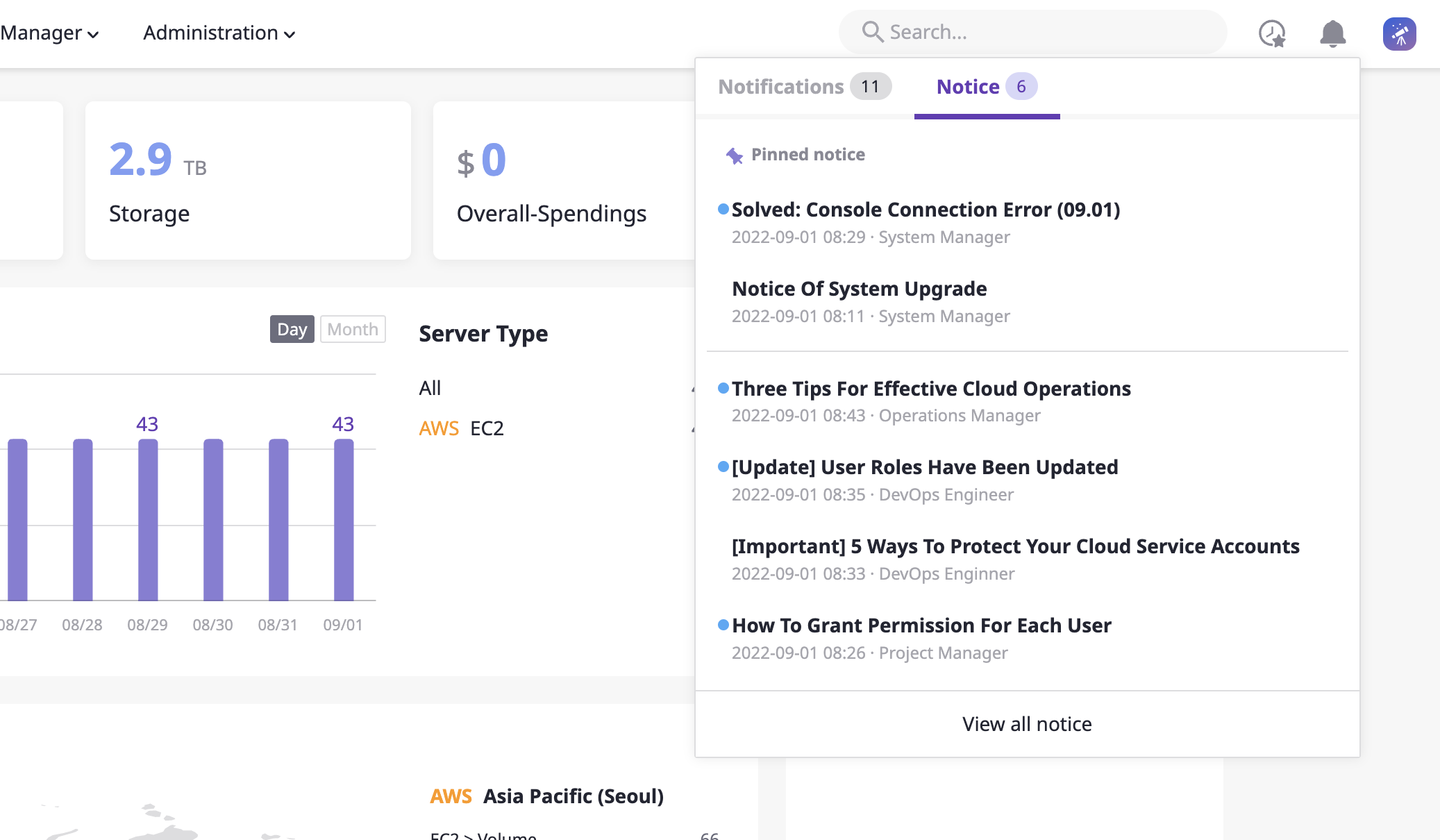

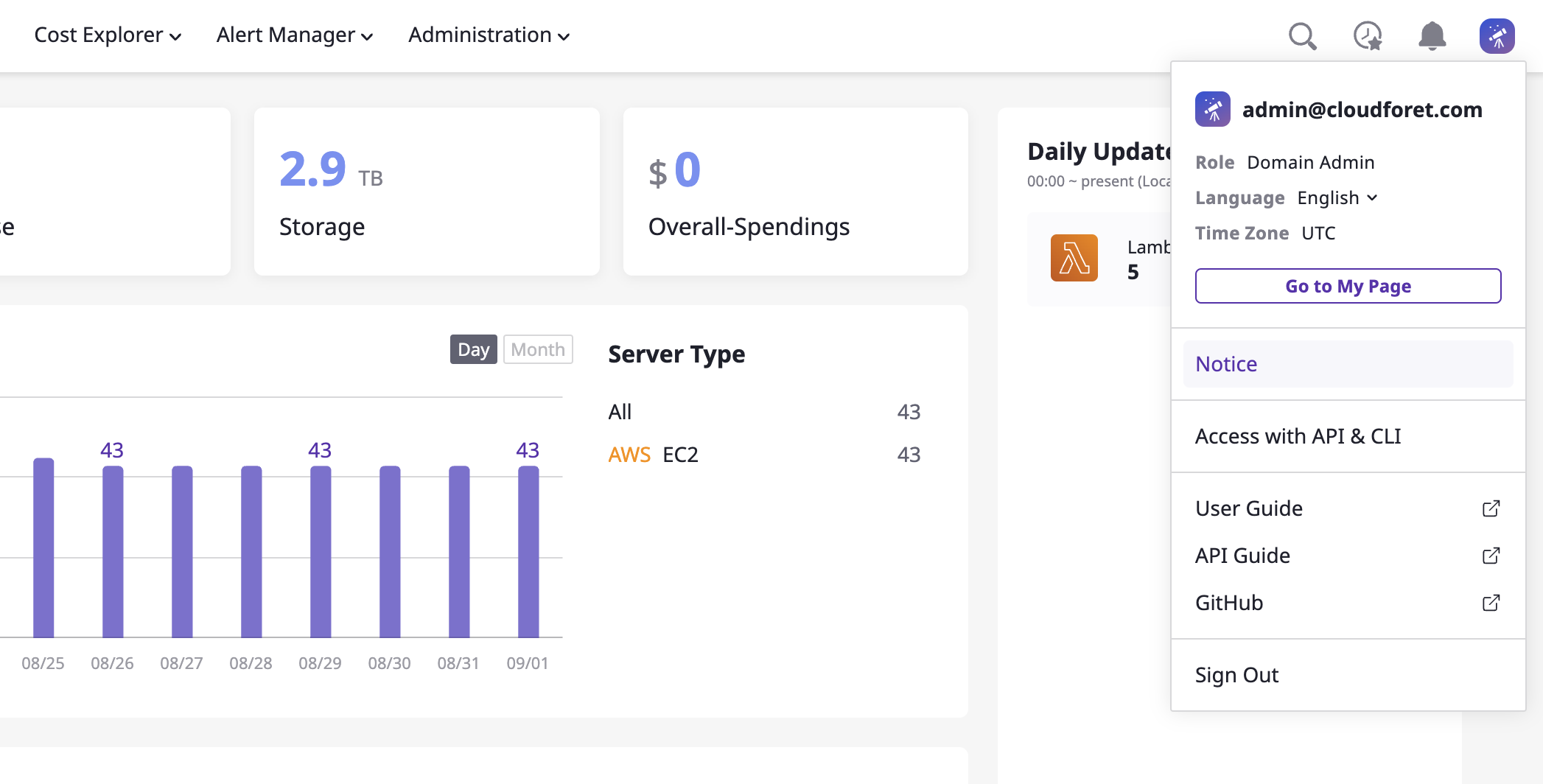

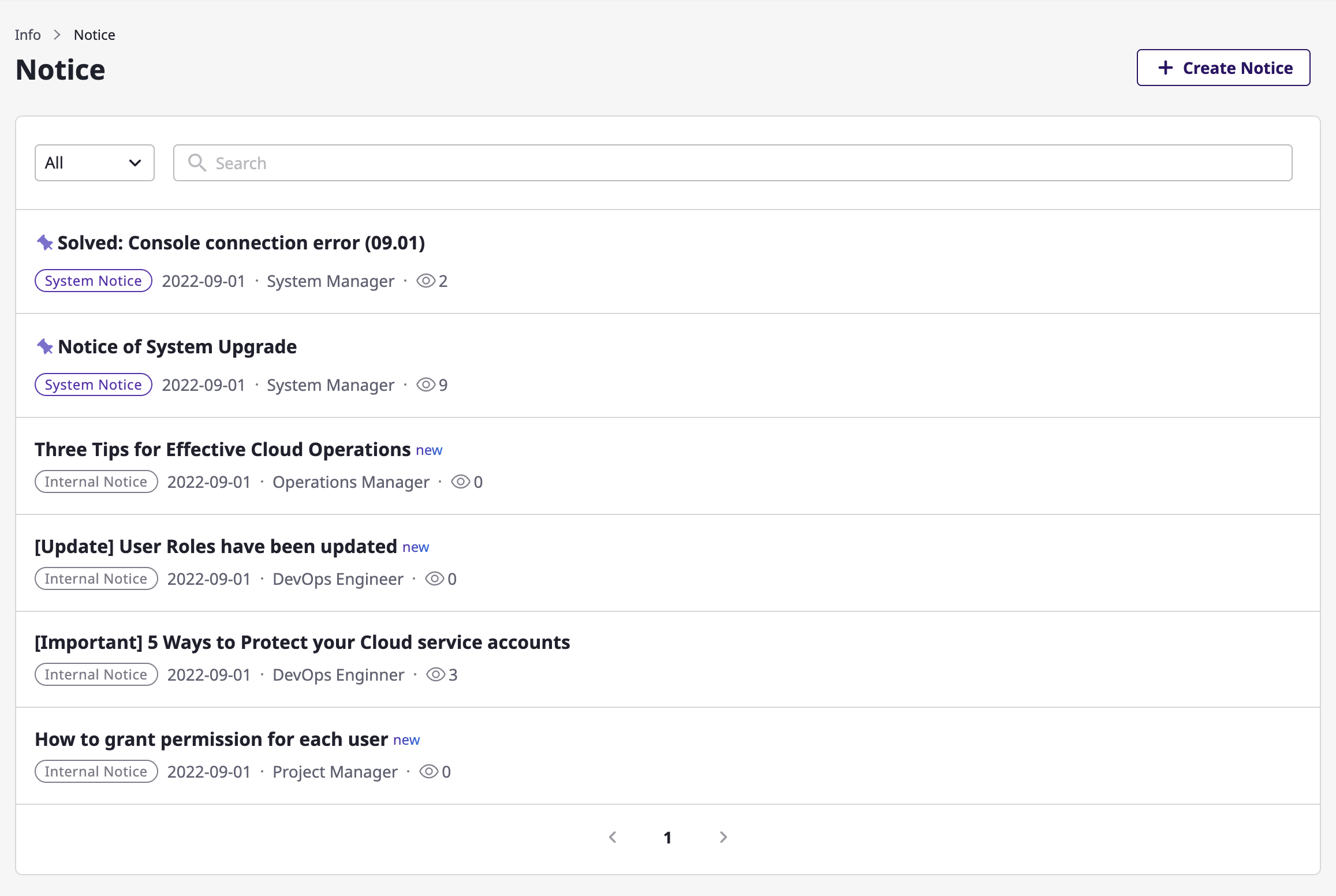

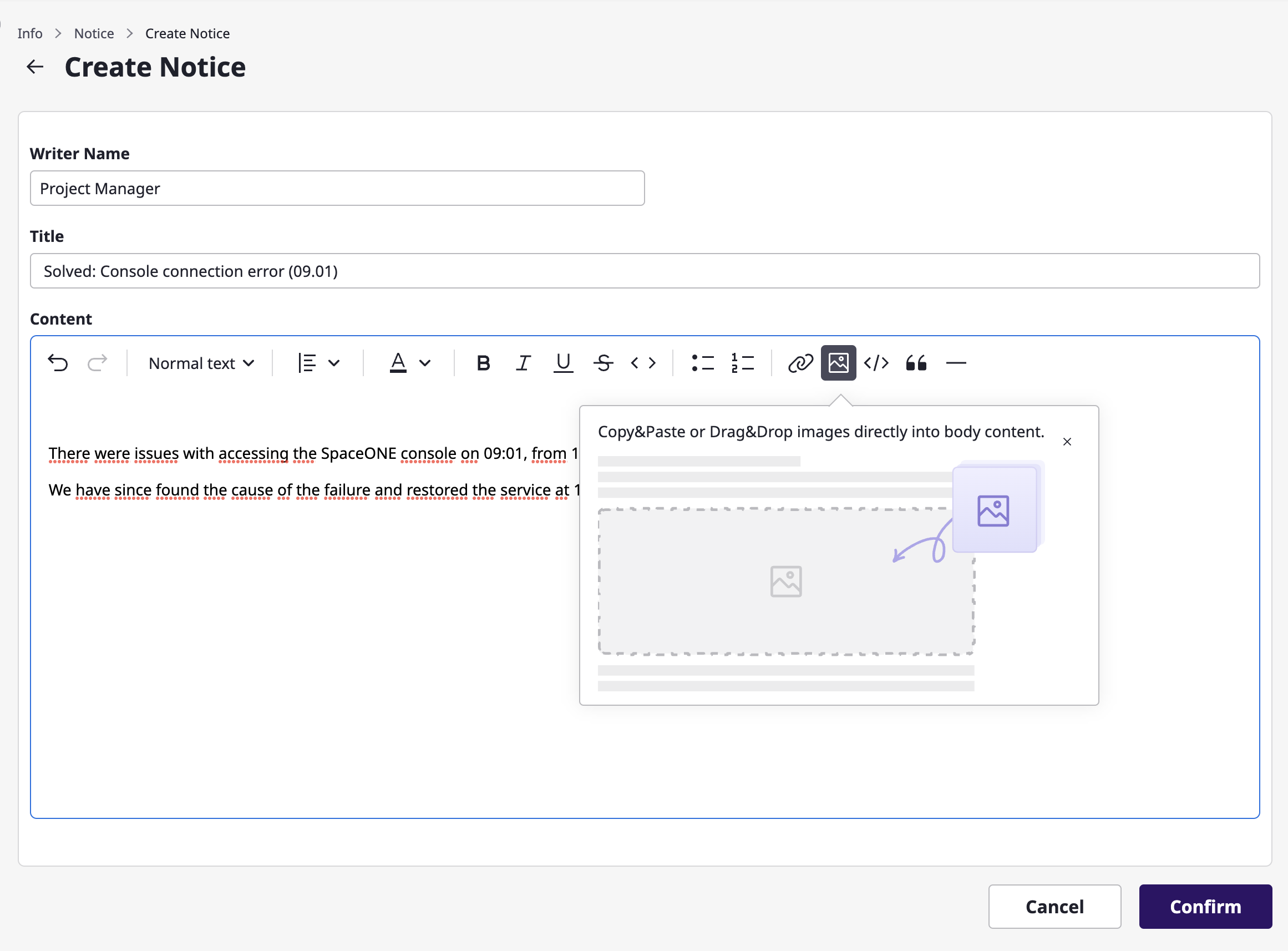

- 4.9.1: Notice

- 4.10: Advanced feature

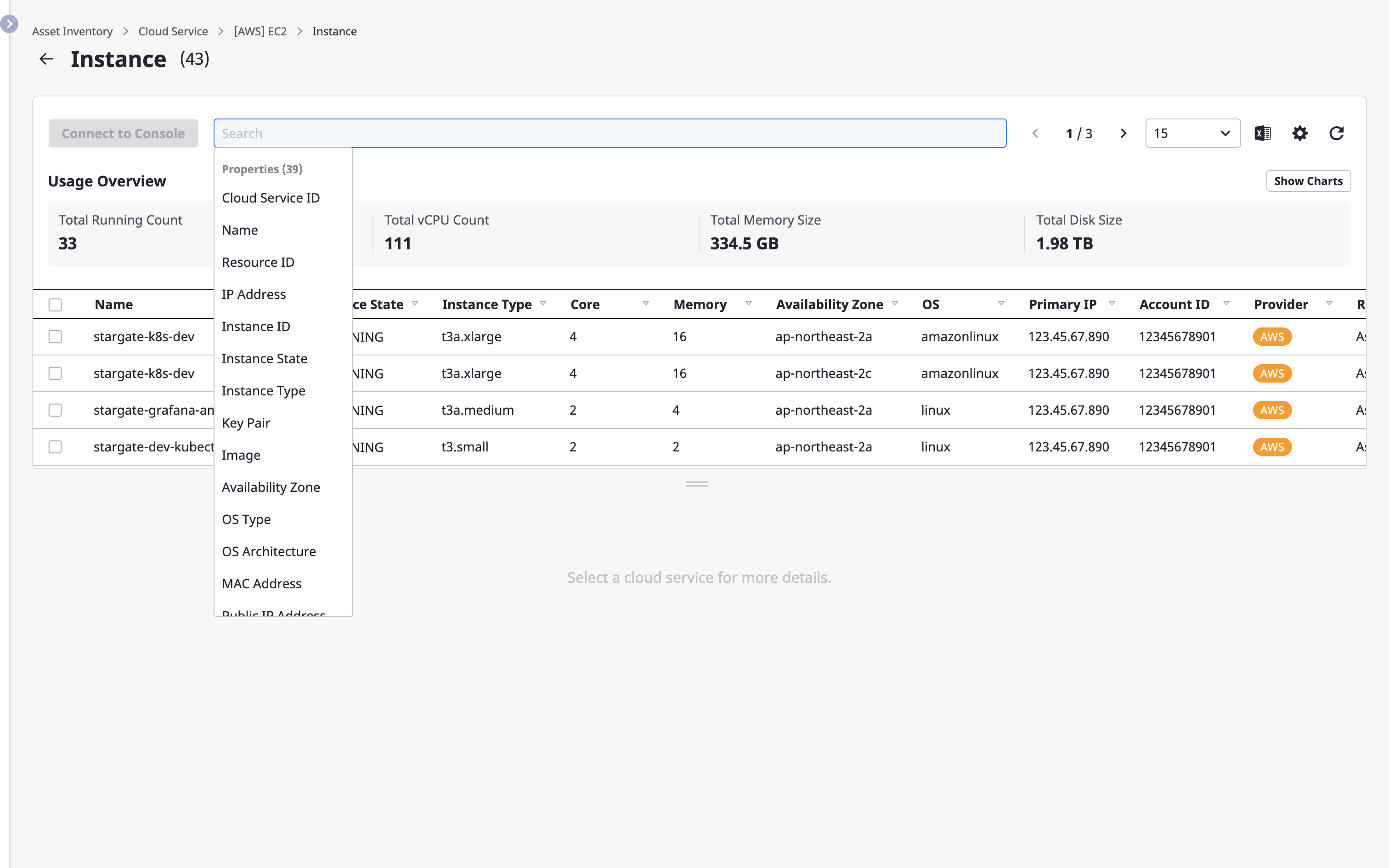

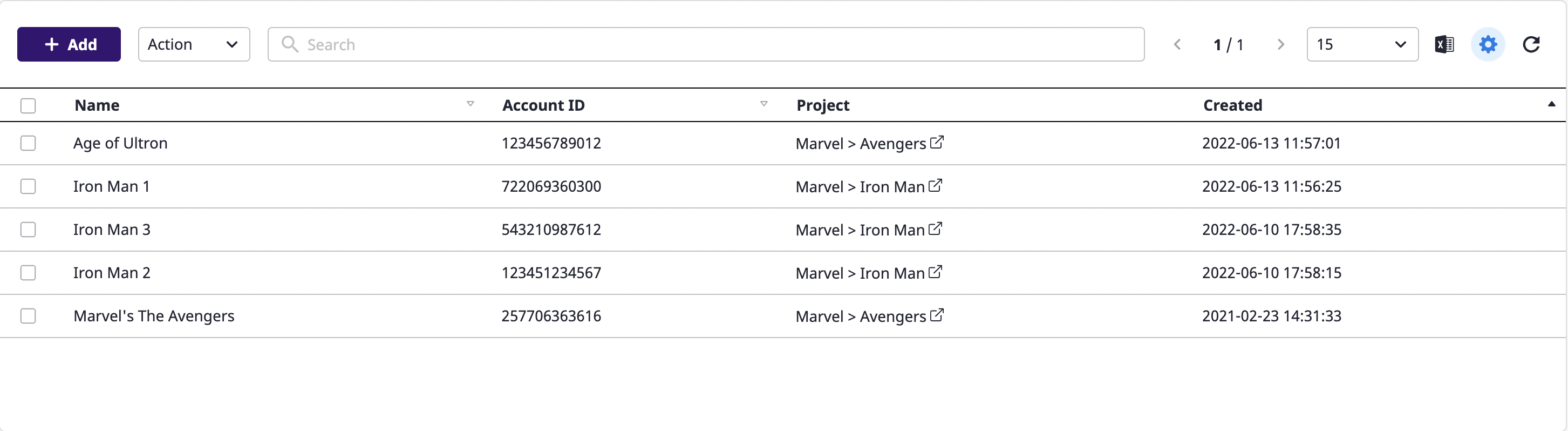

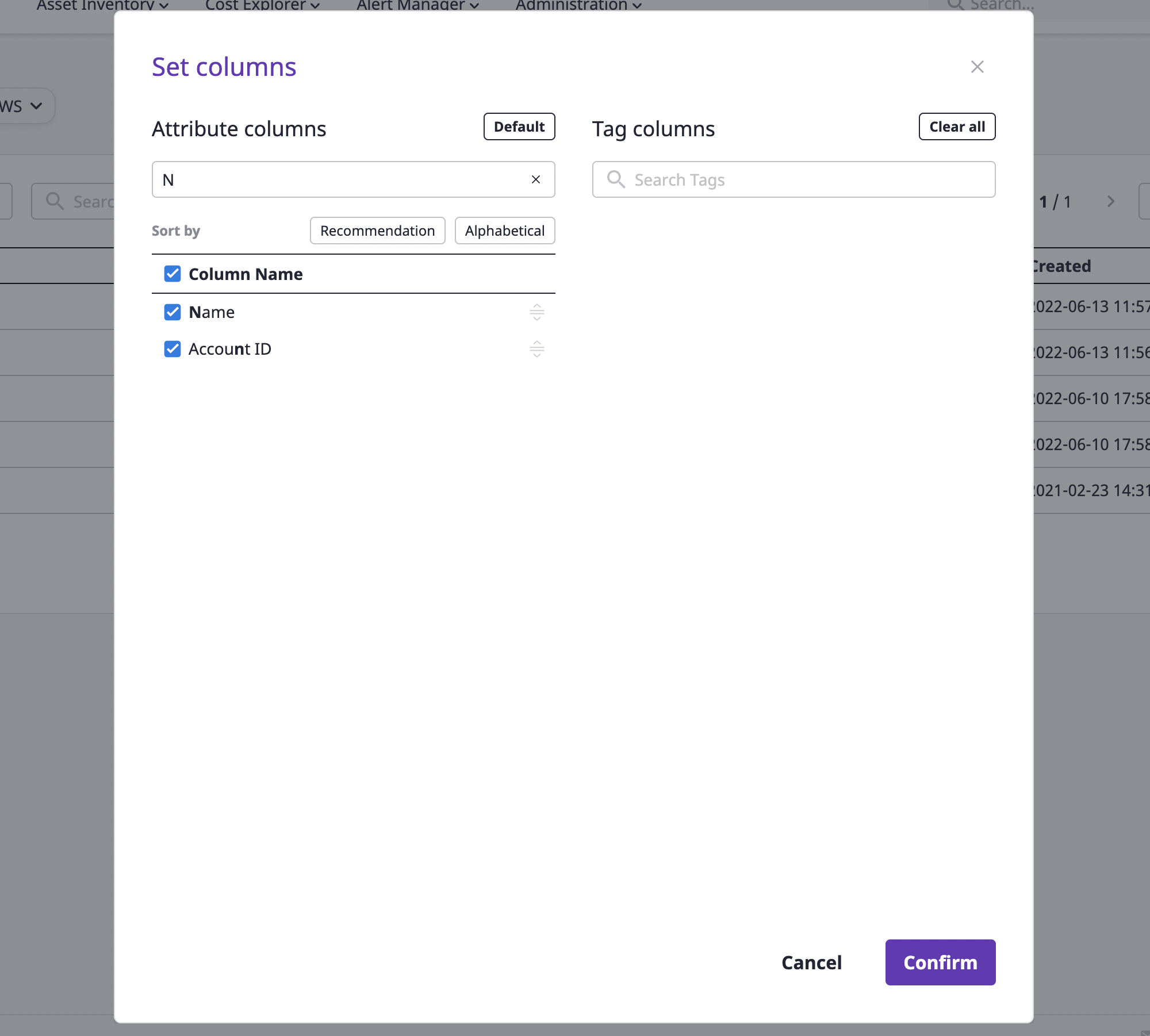

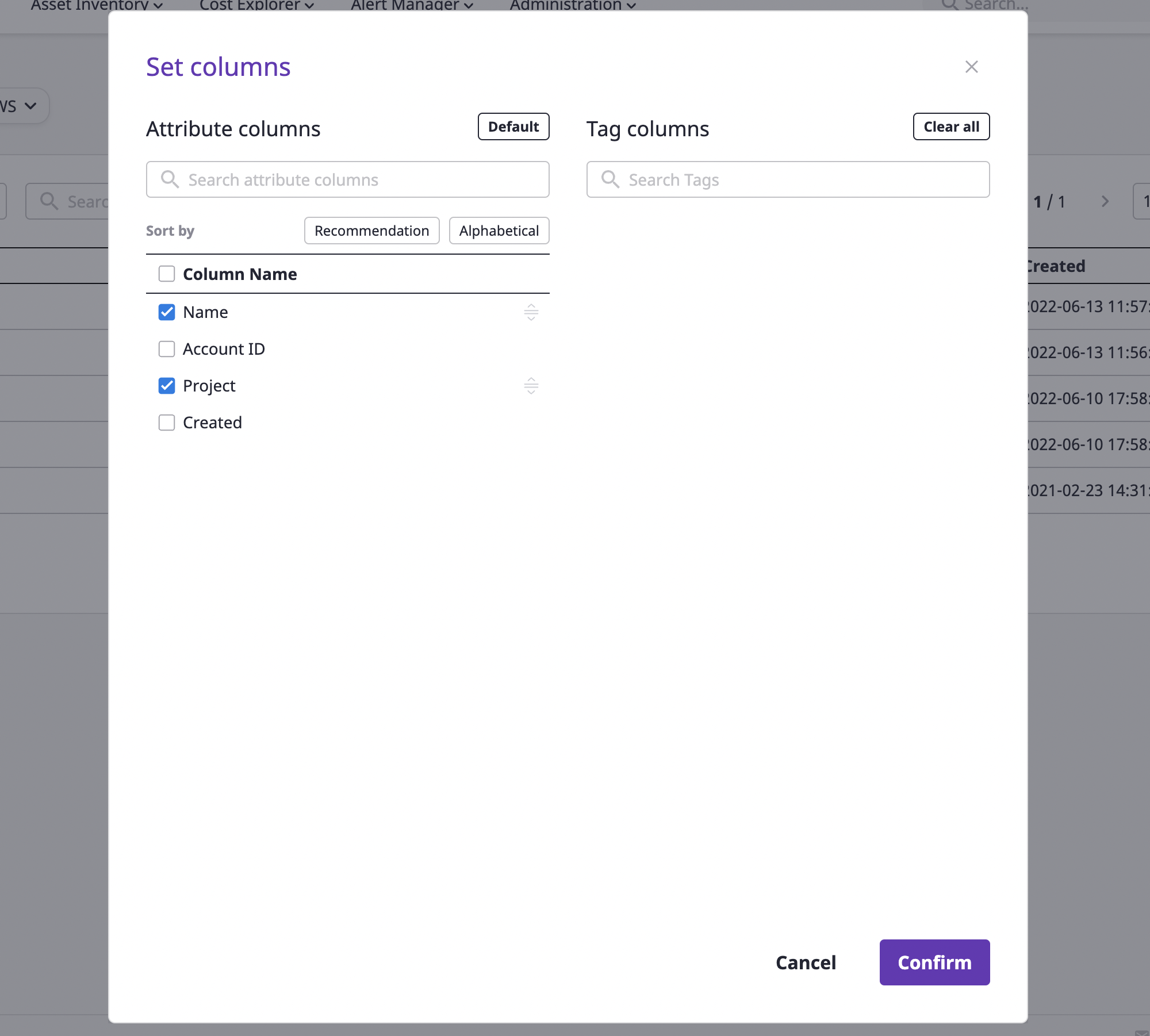

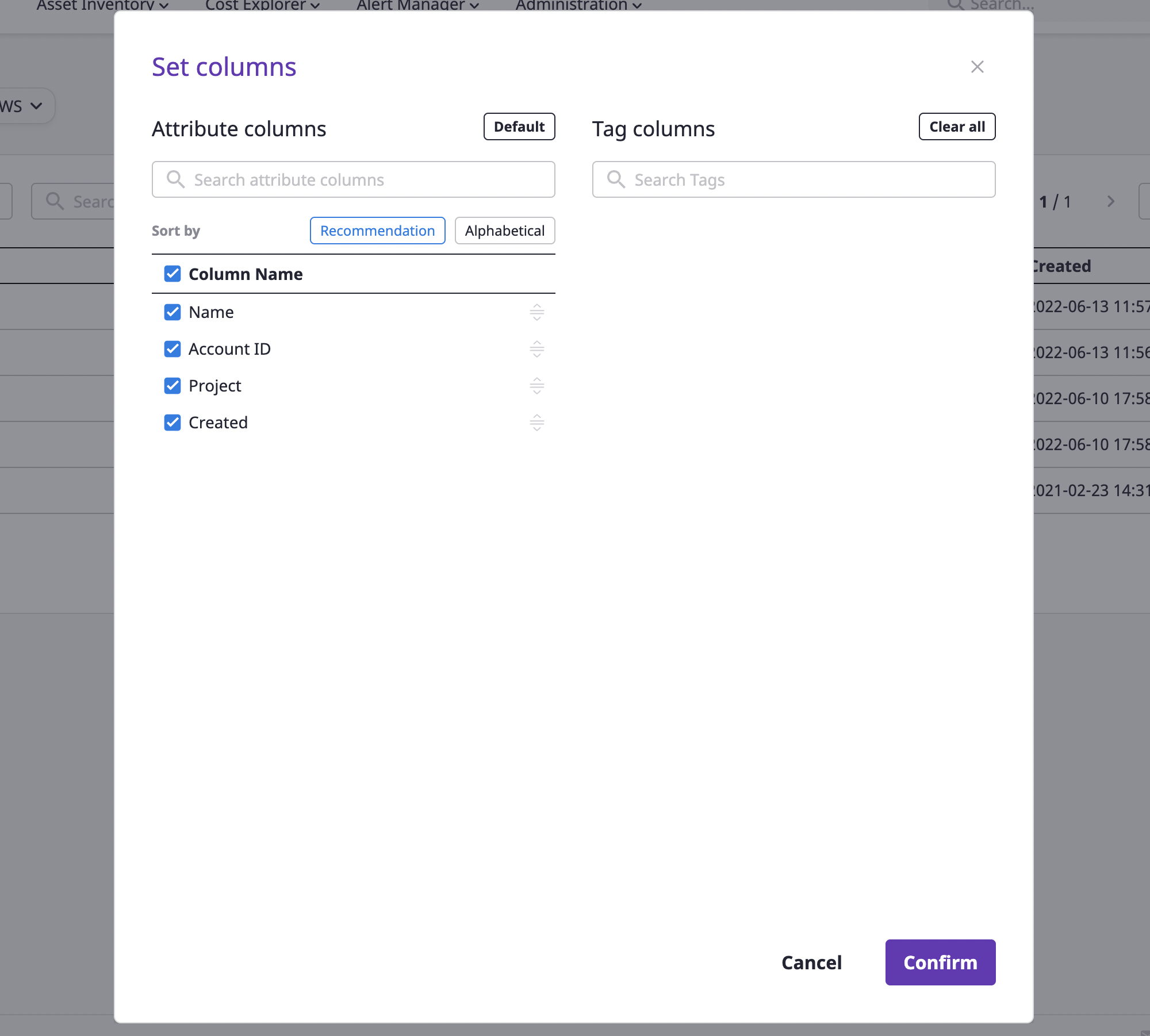

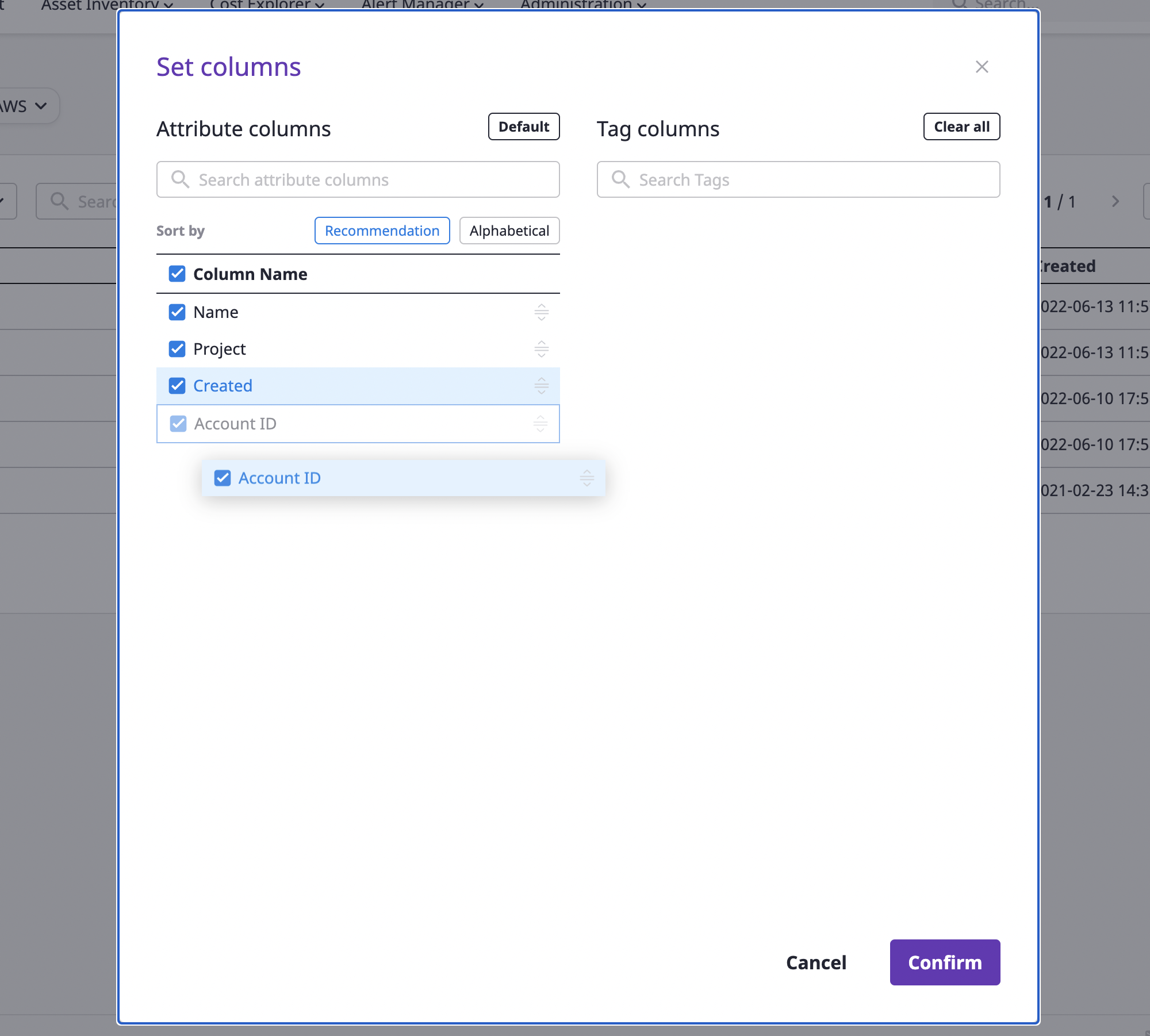

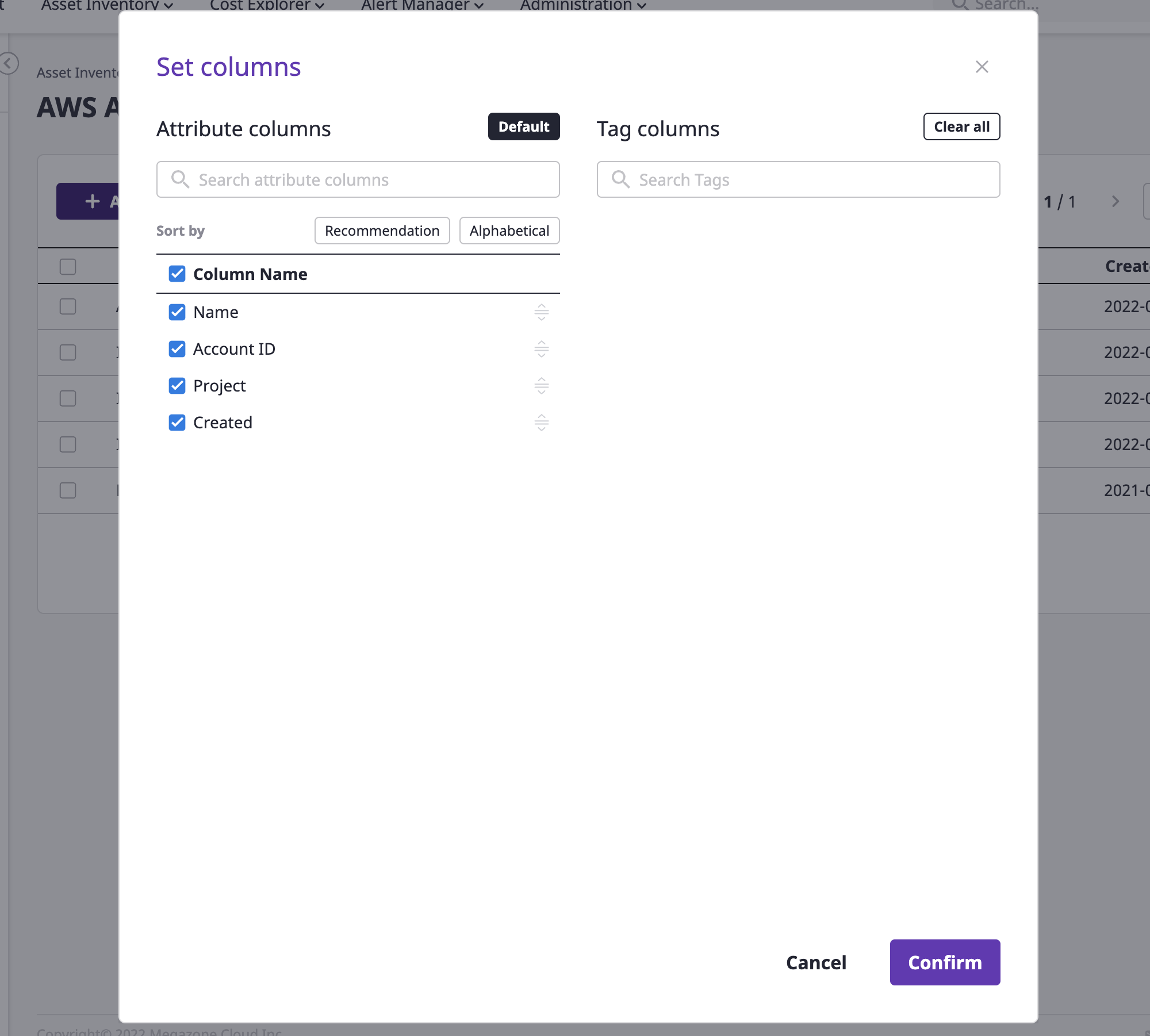

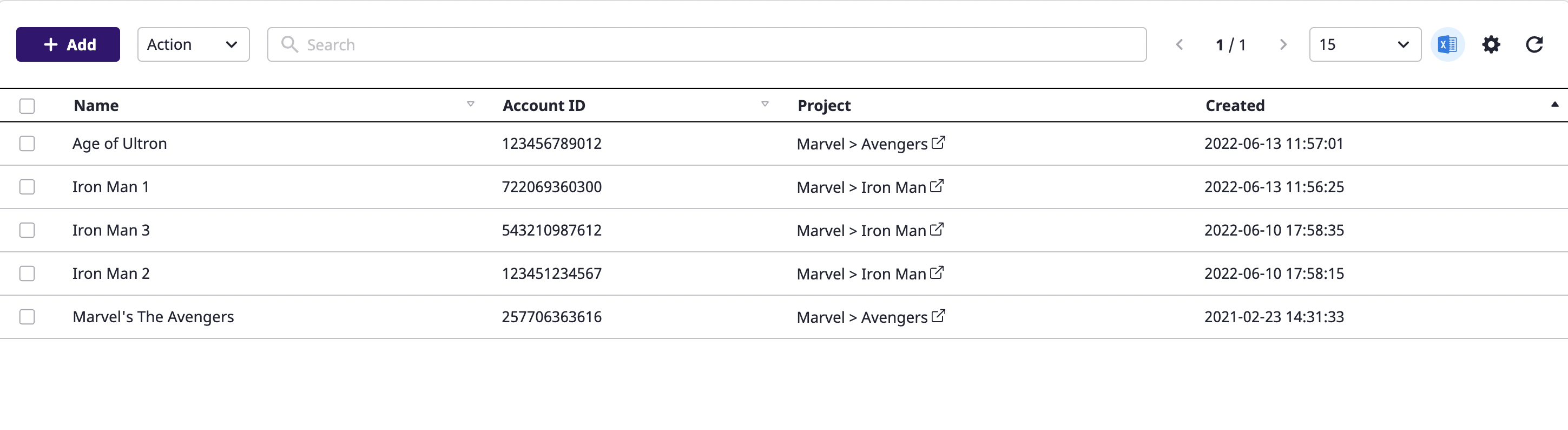

- 4.10.1: Custom table

- 4.10.2: Export as an Excel file

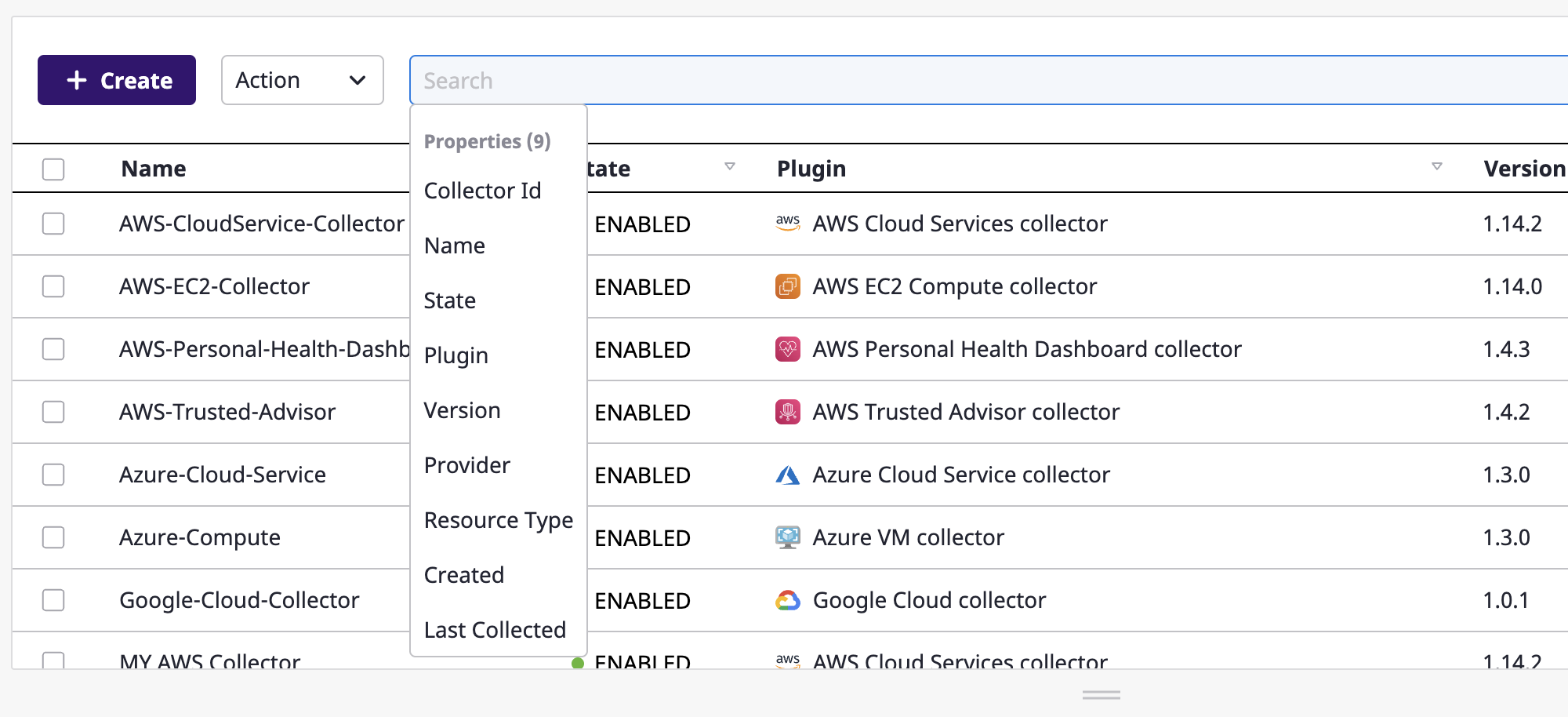

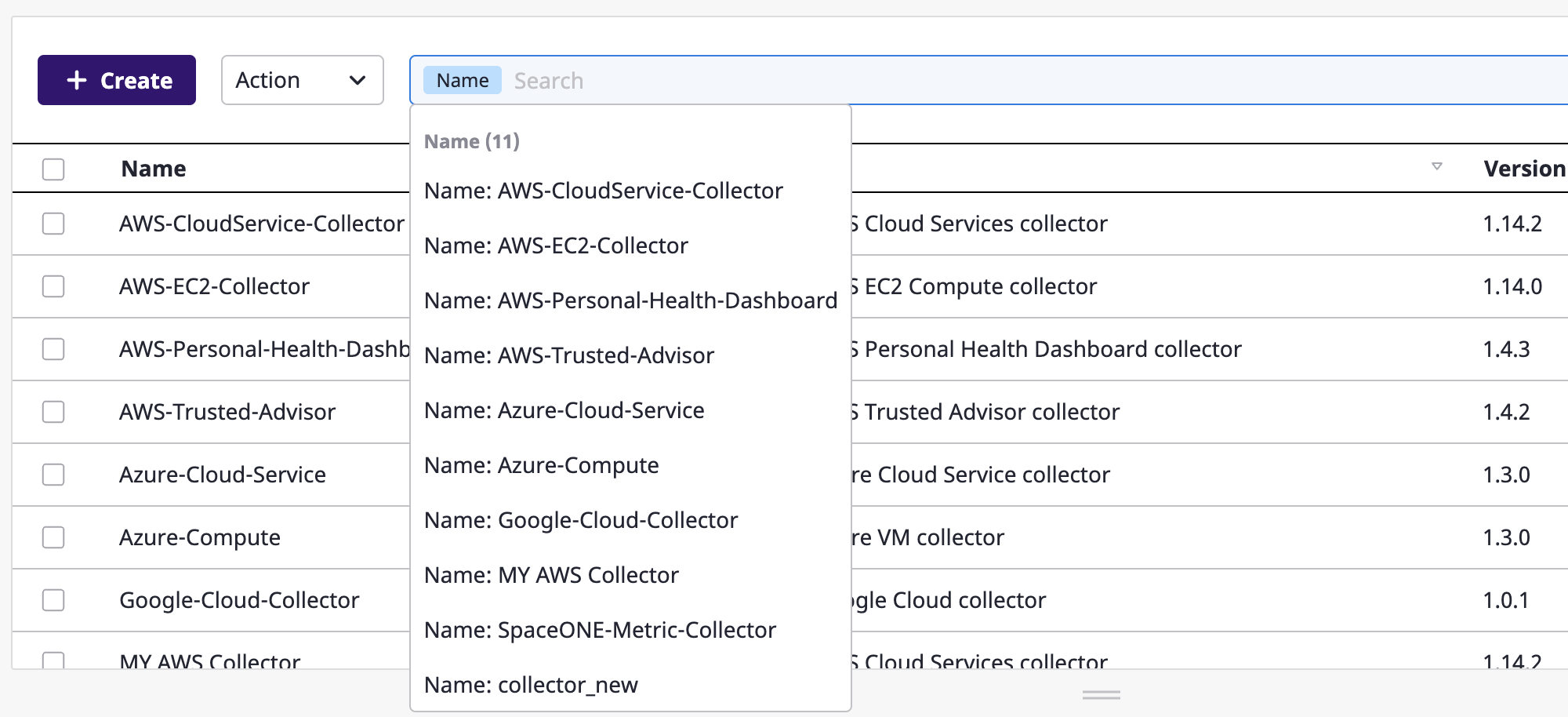

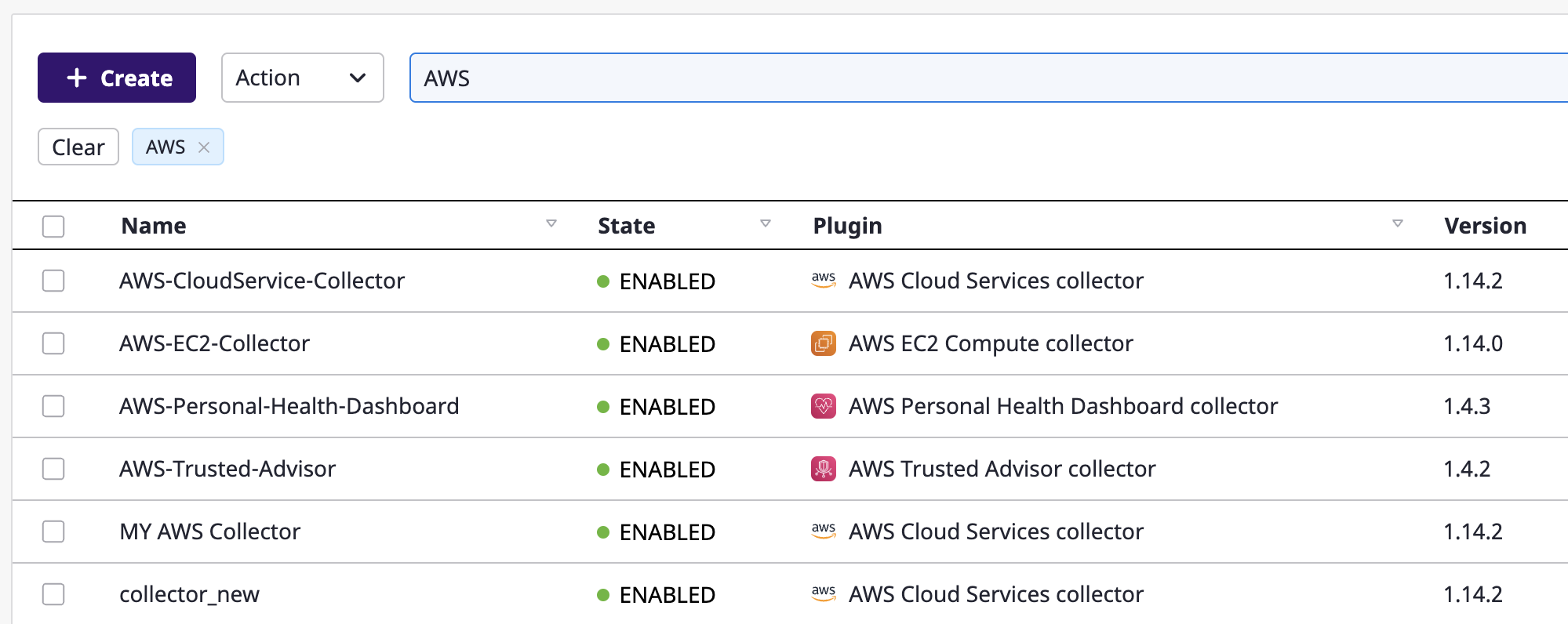

- 4.10.3: Search

- 4.11: Plugin

- 4.11.1: [Alert manager] notification

- 4.11.2: [Alert manager] webhook

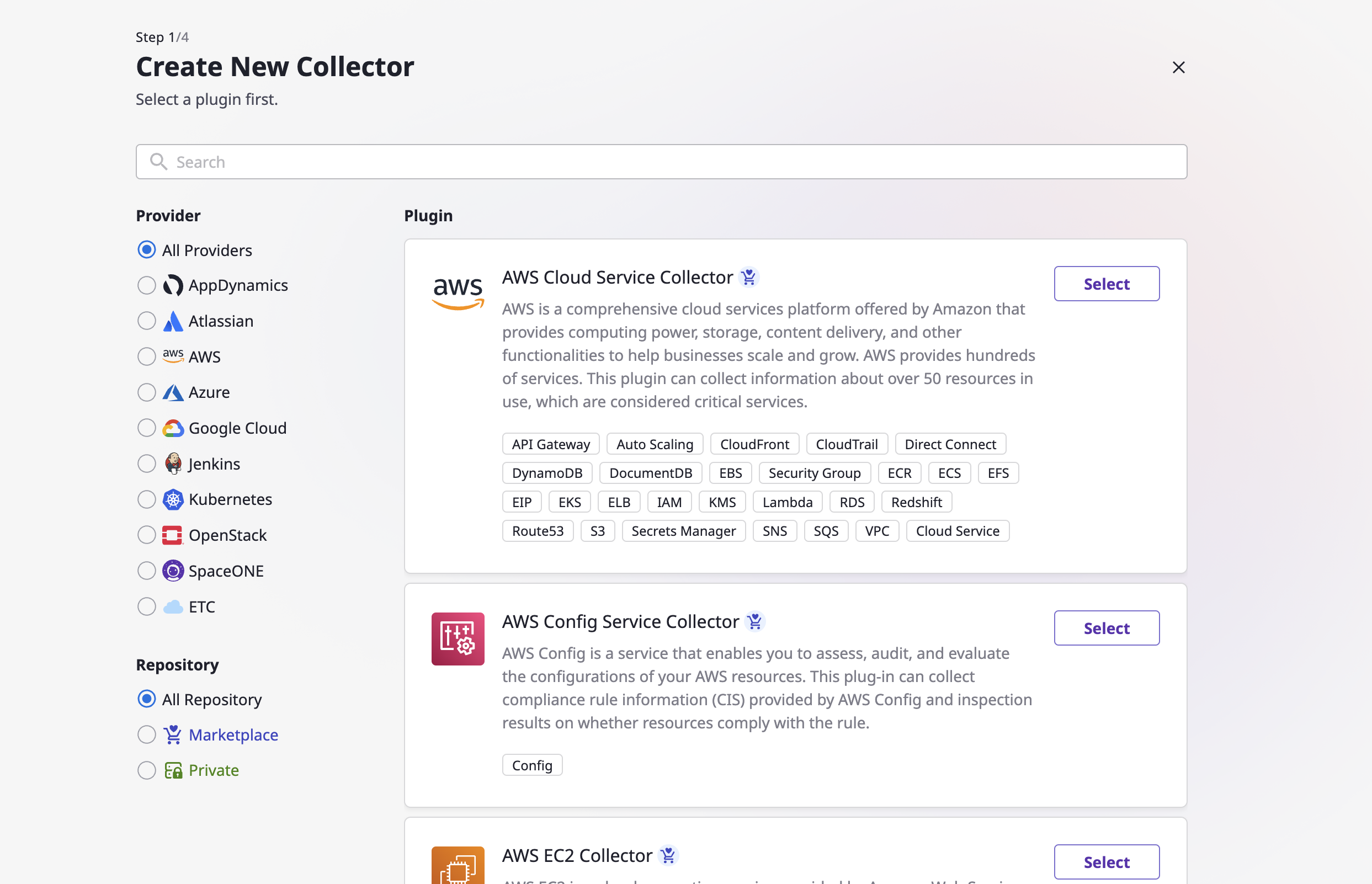

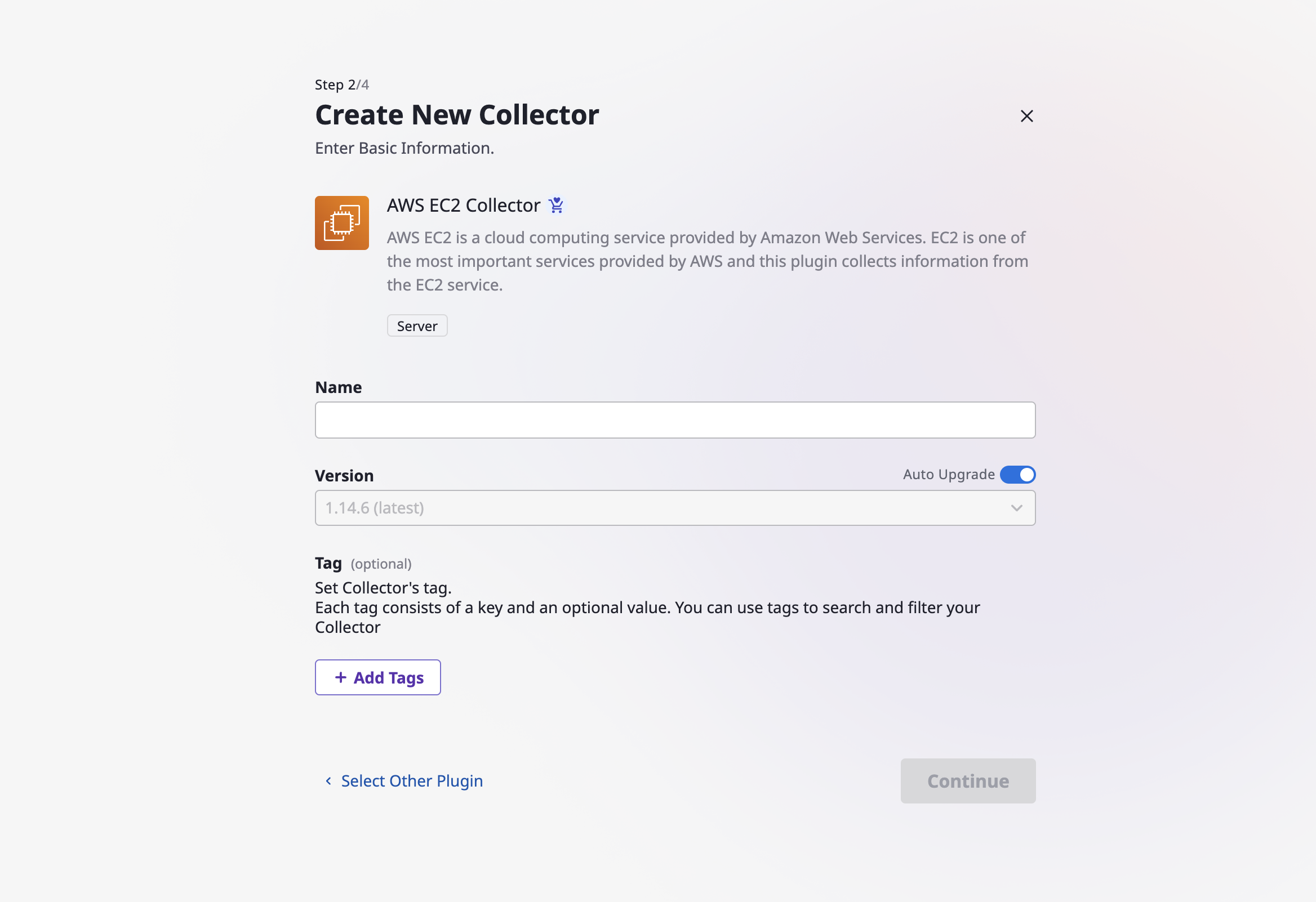

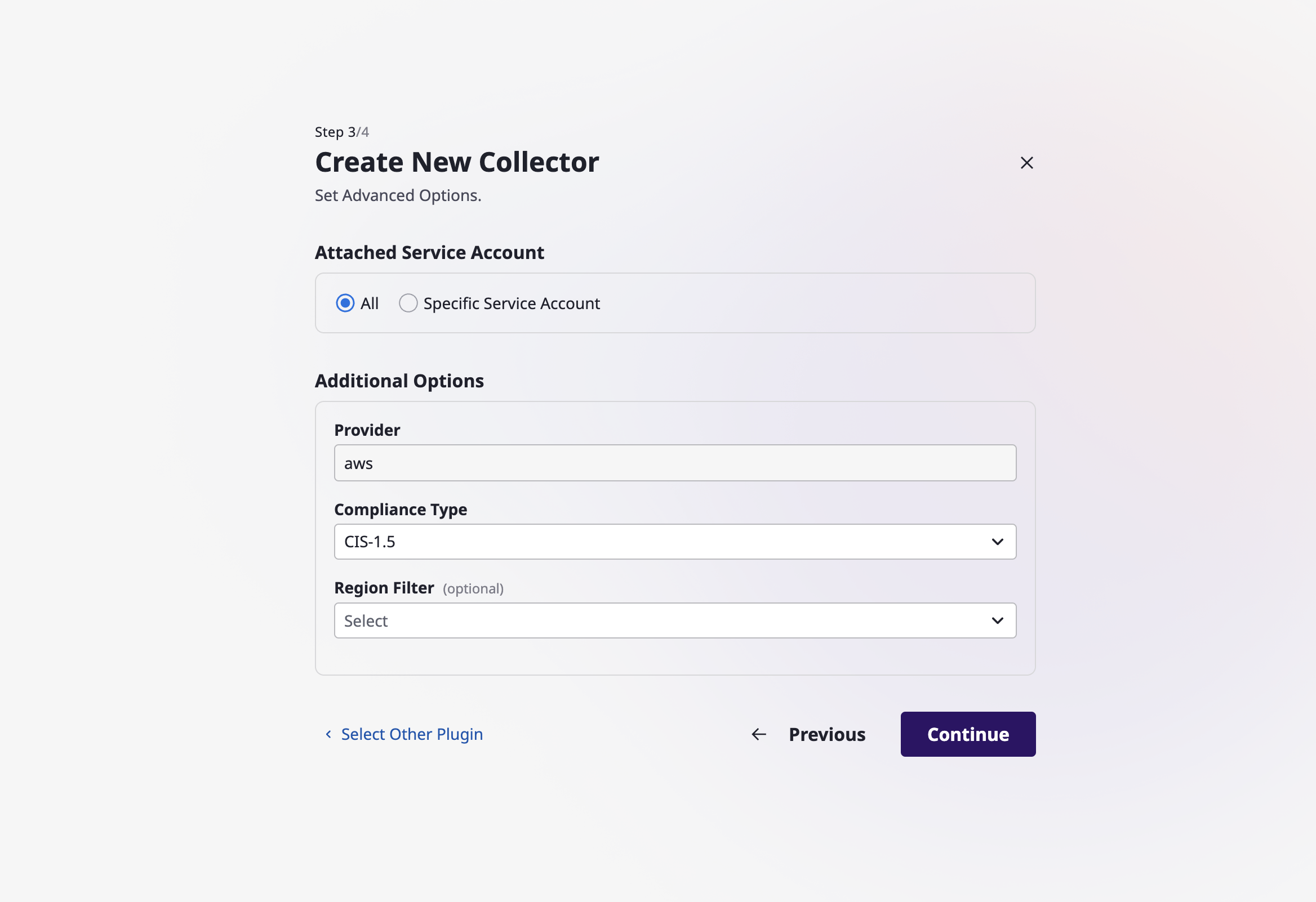

- 4.11.3: [Asset inventory] collector

- 4.11.4: [Cost analysis] data source

- 4.11.5: [IAM] authentication

- 5: Developers

- 5.1: Architecture

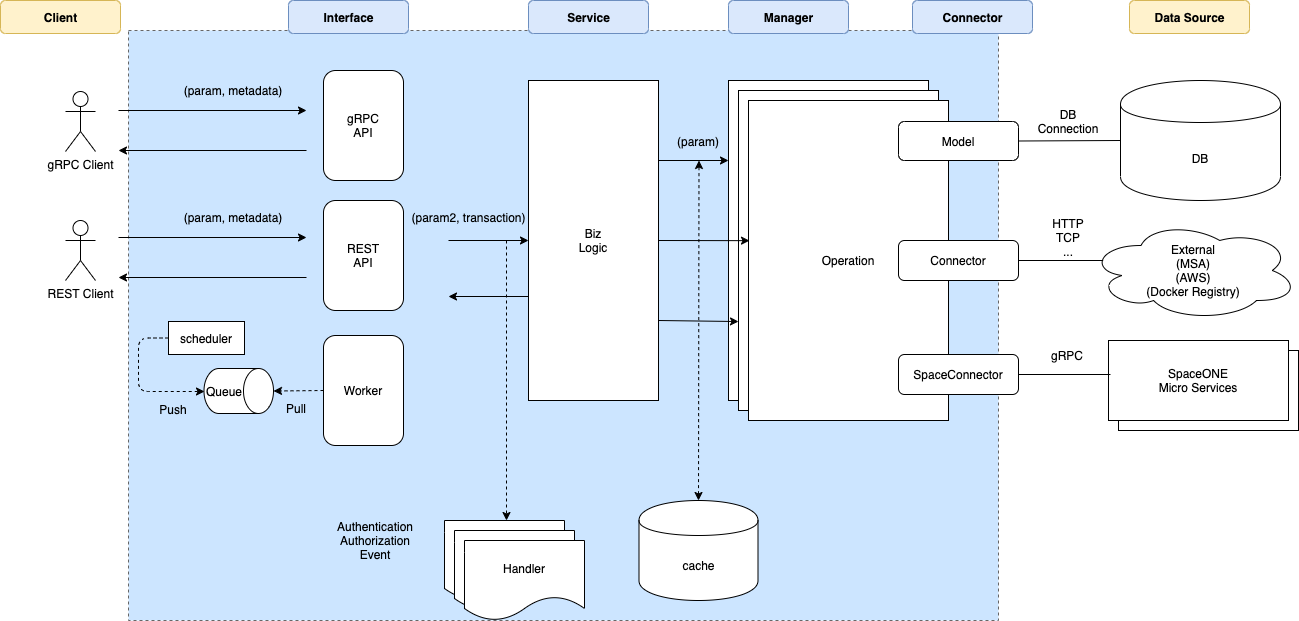

- 5.1.1: Micro Service Framework

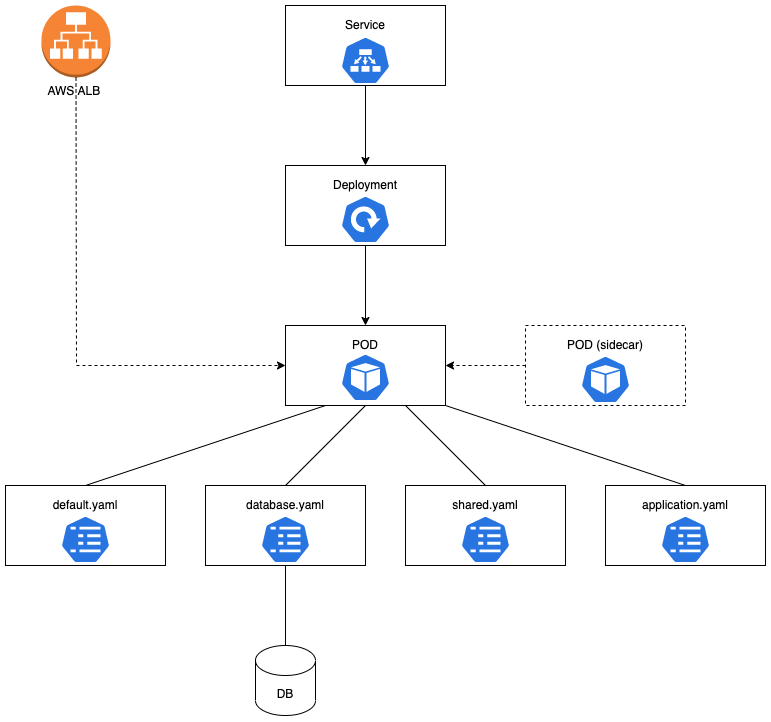

- 5.1.2: Micro Service Deployment

- 5.2: Microservices

- 5.2.1: Console

- 5.2.2: Identity

- 5.2.3: Inventory

- 5.2.4: Monitoring

- 5.2.5: Notification

- 5.2.6: Statistics

- 5.2.7: Billing

- 5.2.8: Plugin

- 5.2.9: Supervisor

- 5.2.10: Repository

- 5.2.11: Secret

- 5.2.12: Config

- 5.3: Frontend

- 5.4: Design System

- 5.4.1: Getting Started

- 5.5: Backend

- 5.6: Plugins

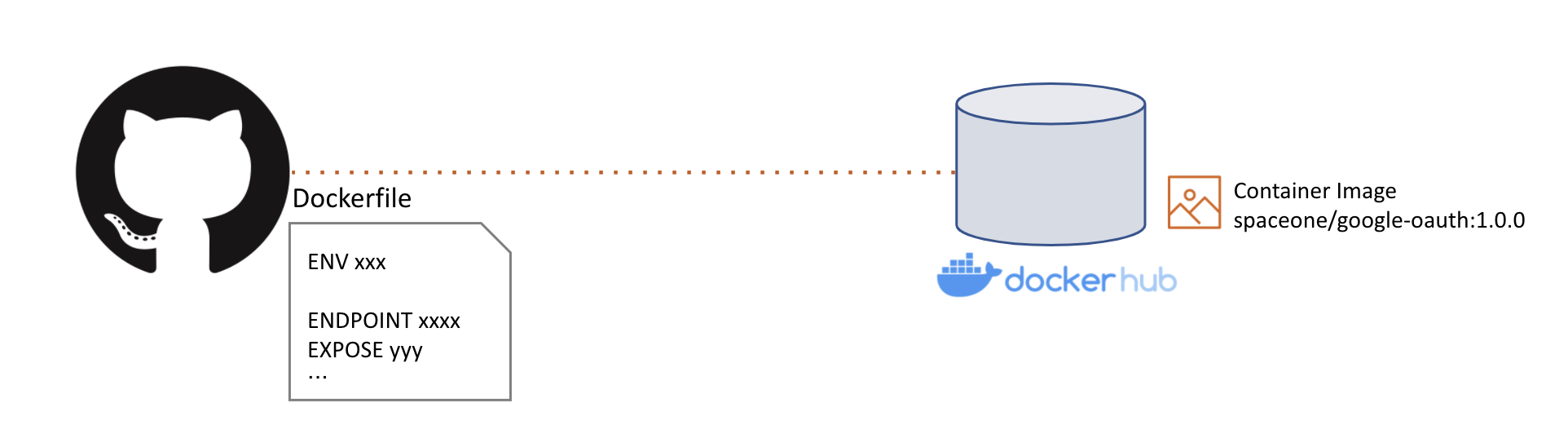

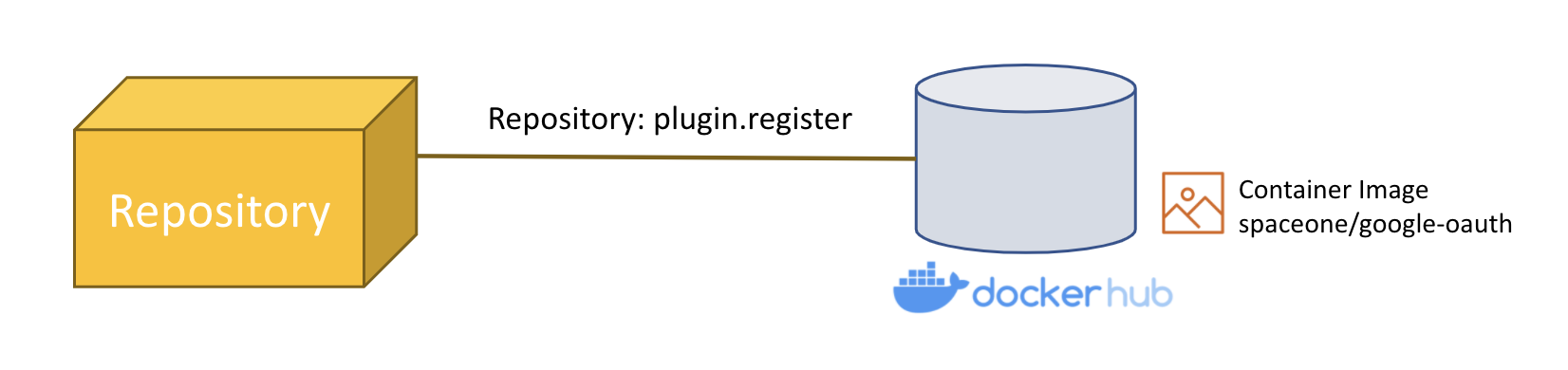

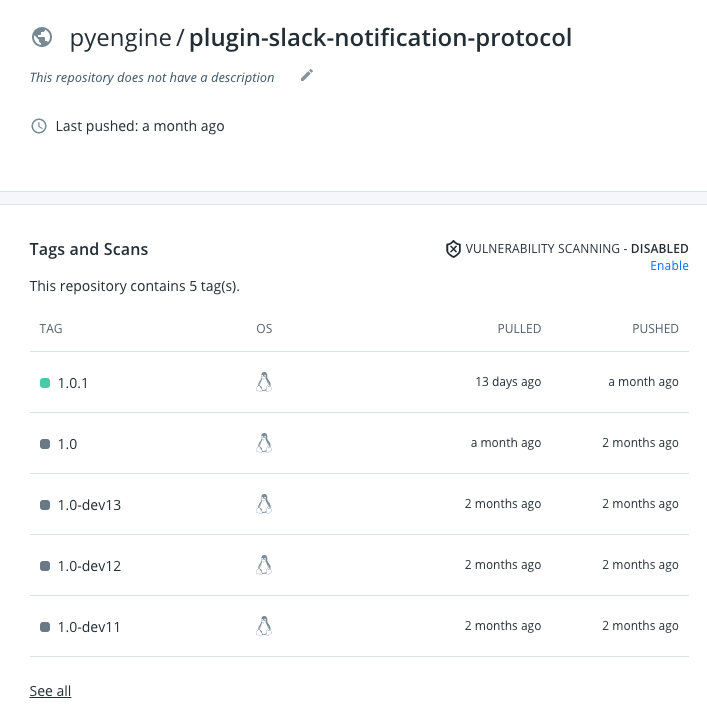

- 5.6.1: About Plugin

- 5.6.2: Developer Guide

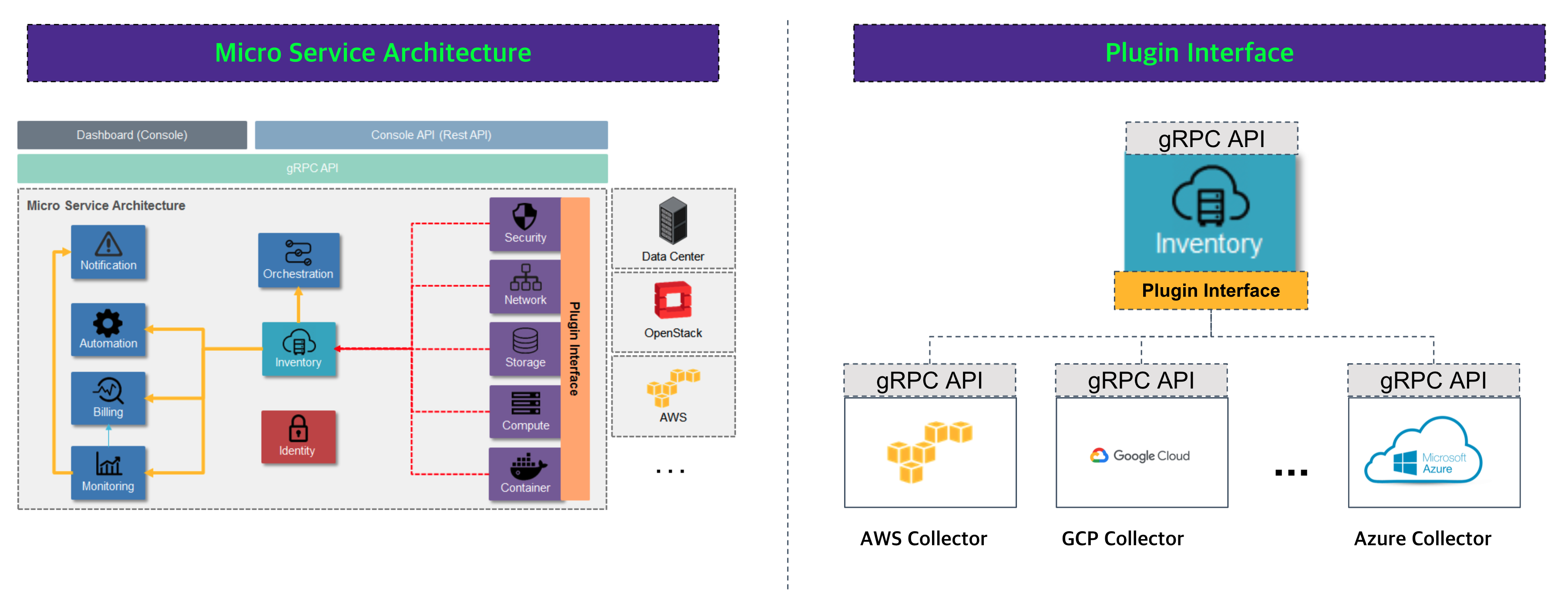

- 5.6.2.1: Plugin Interface

- 5.6.2.2: Plugin Register

- 5.6.2.3: Plugin Deployment

- 5.6.2.4: Plugin Debugging

- 5.6.3: Plugin Designs

- 5.6.4: Collector

- 5.7: API & SDK

- 5.7.1: gRPC API

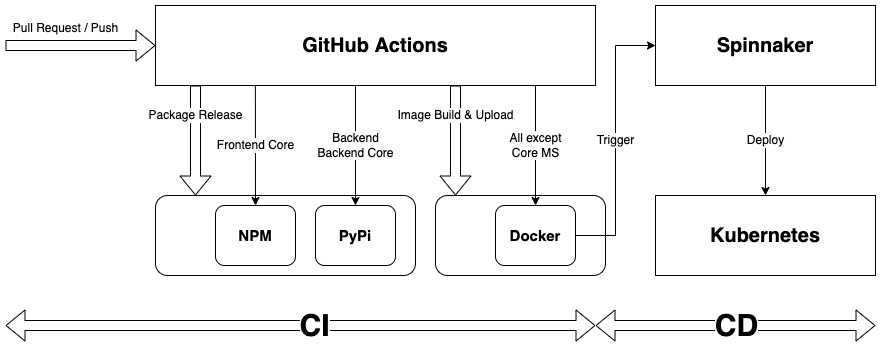

- 5.8: CICD

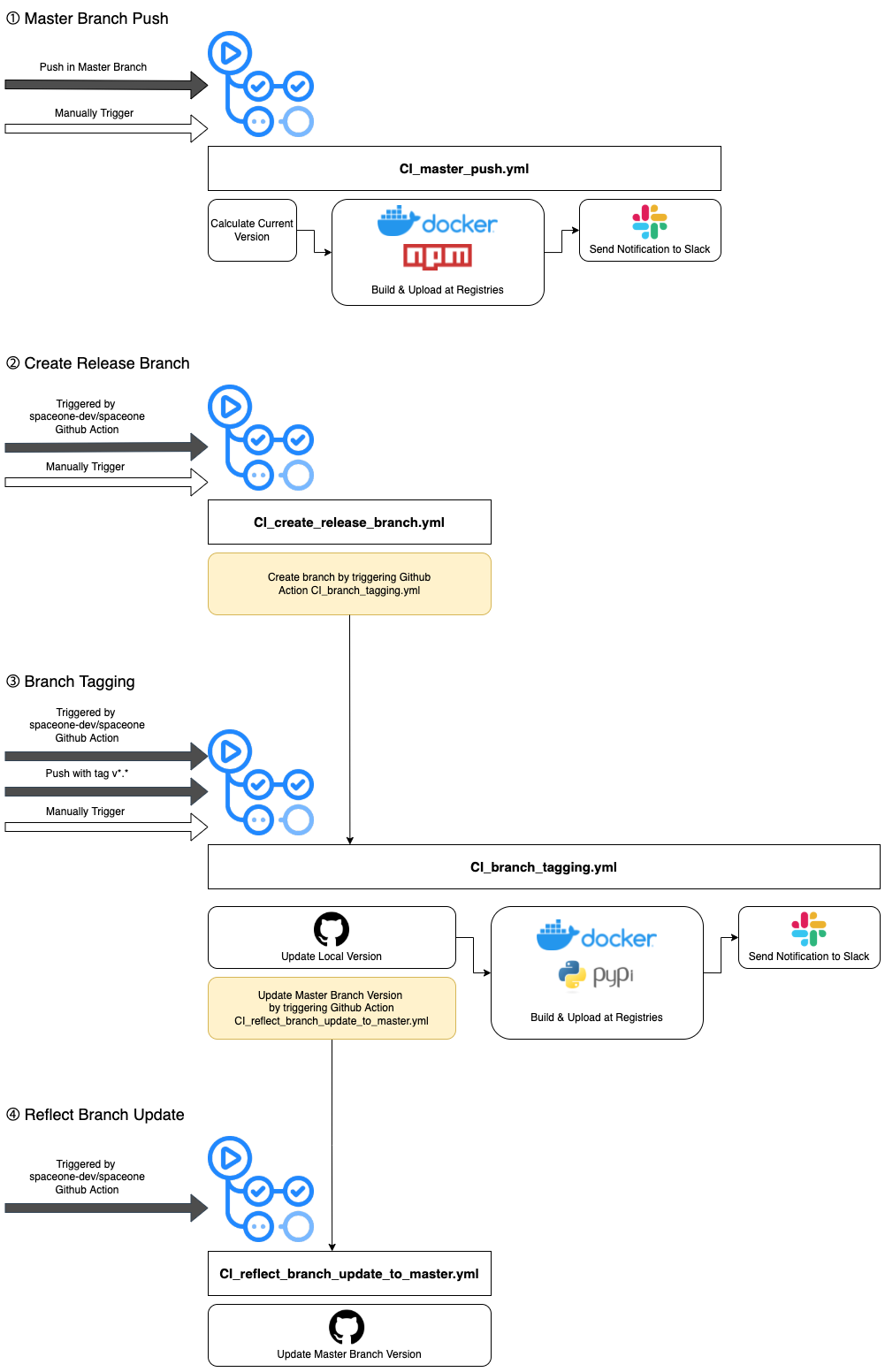

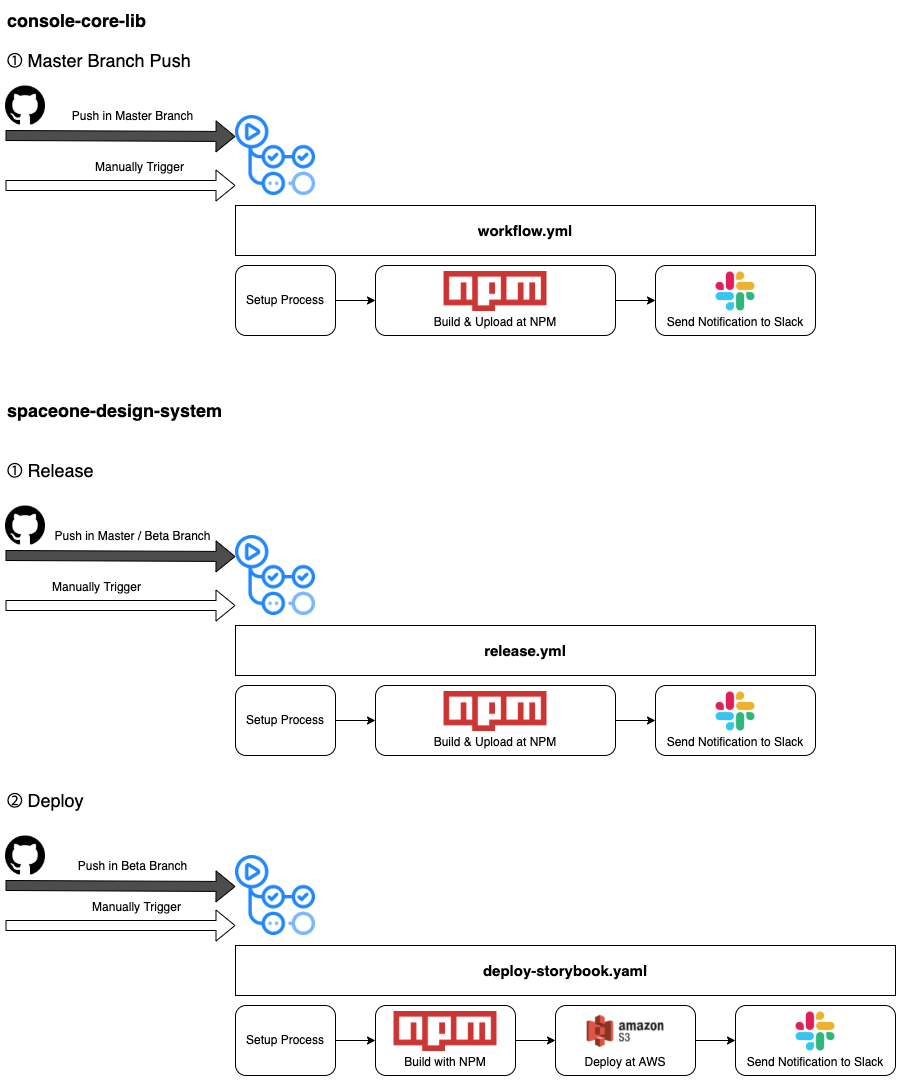

- 5.8.1: Frontend Microservice CI

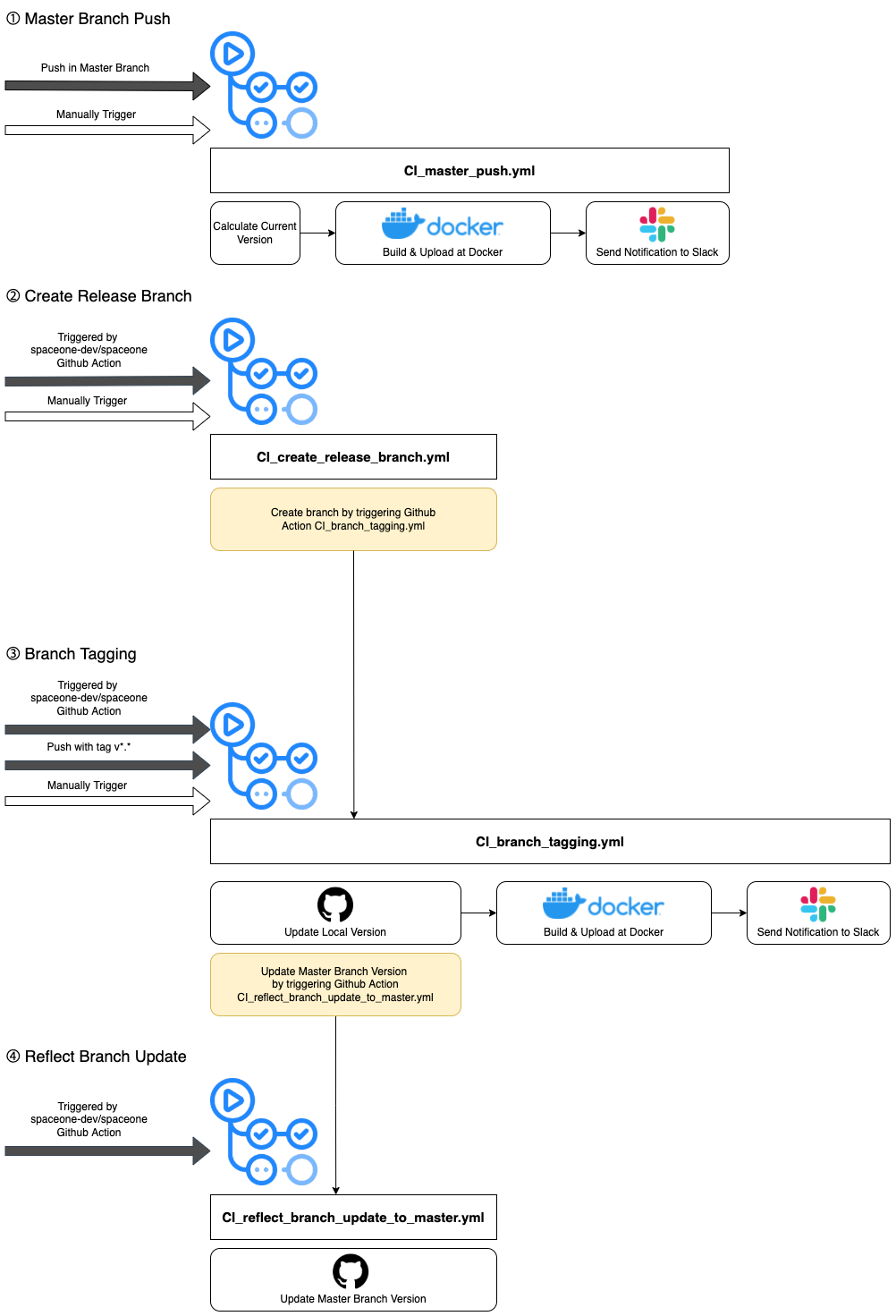

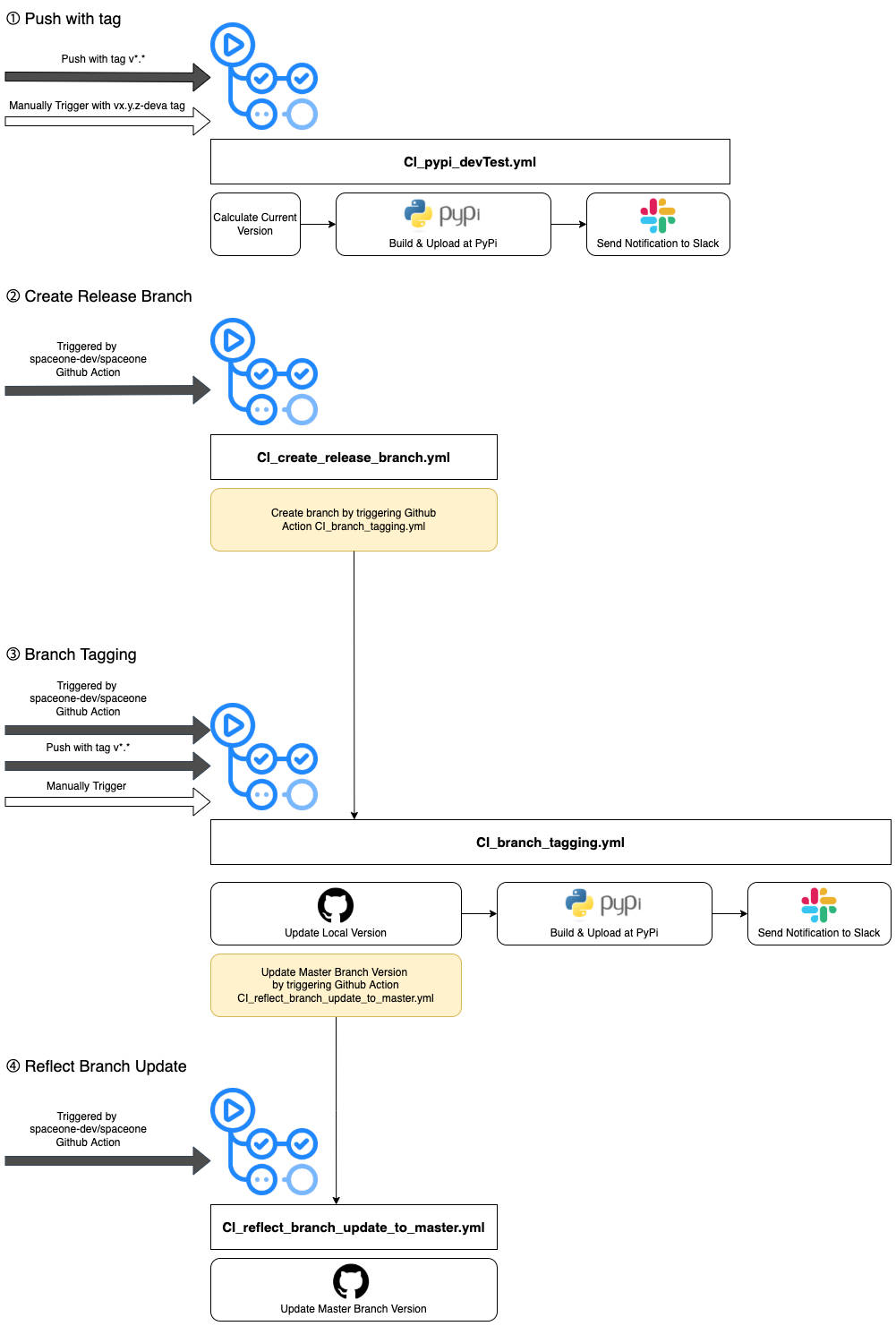

- 5.8.2: Backend Microservice CI

- 5.8.3: Frontend Core Microservice CI

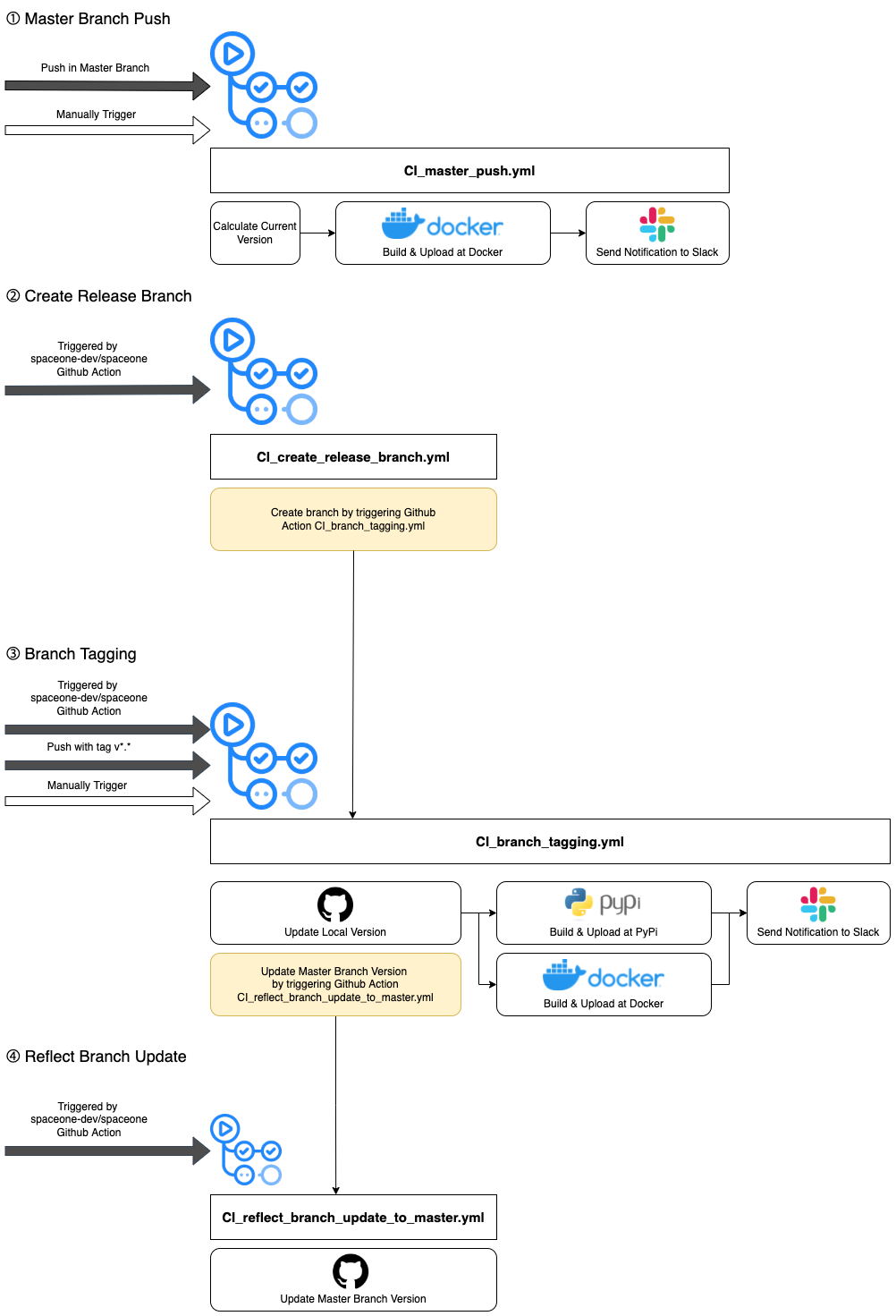

- 5.8.4: Backend Core Microservice CI

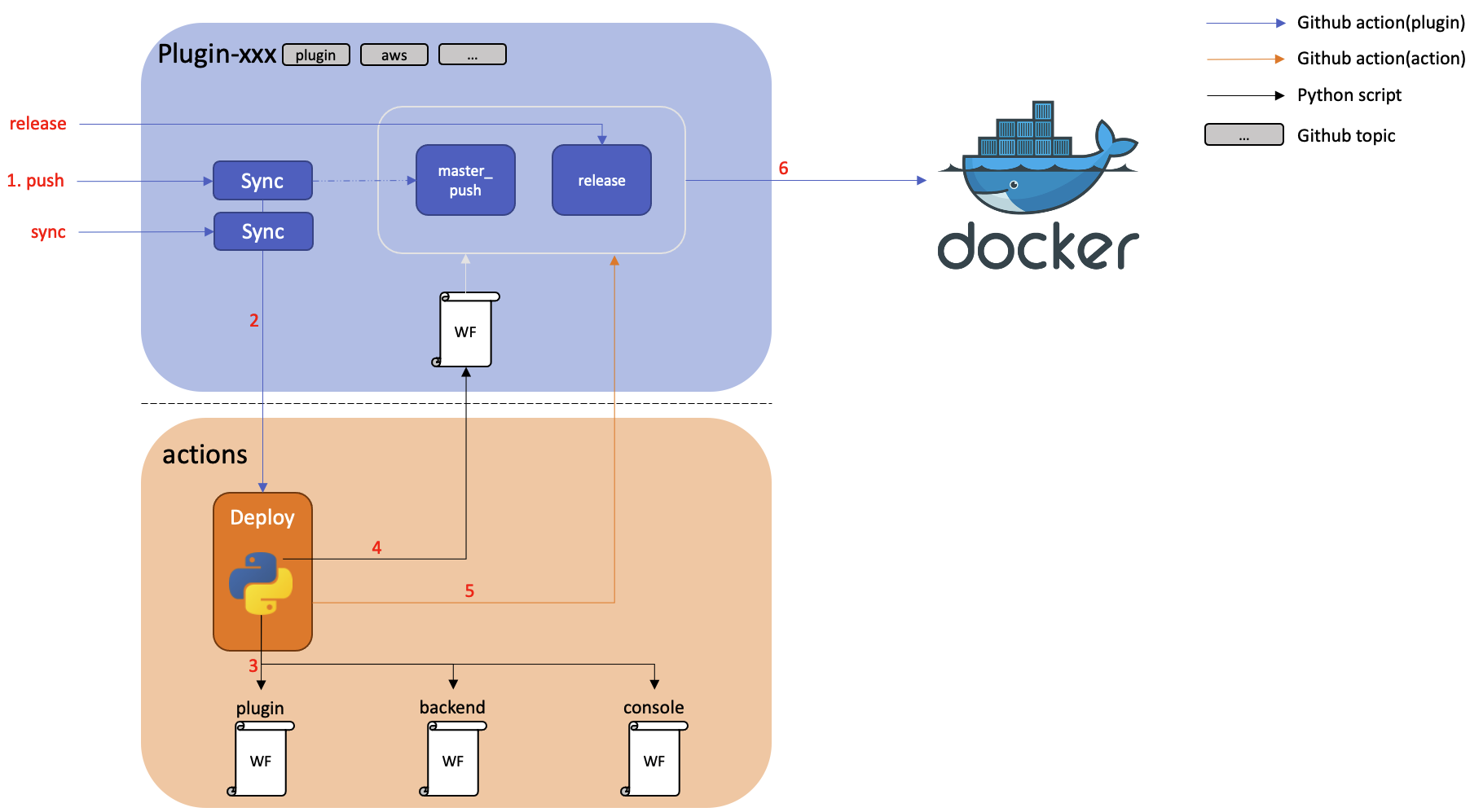

- 5.8.5: Plugin CI

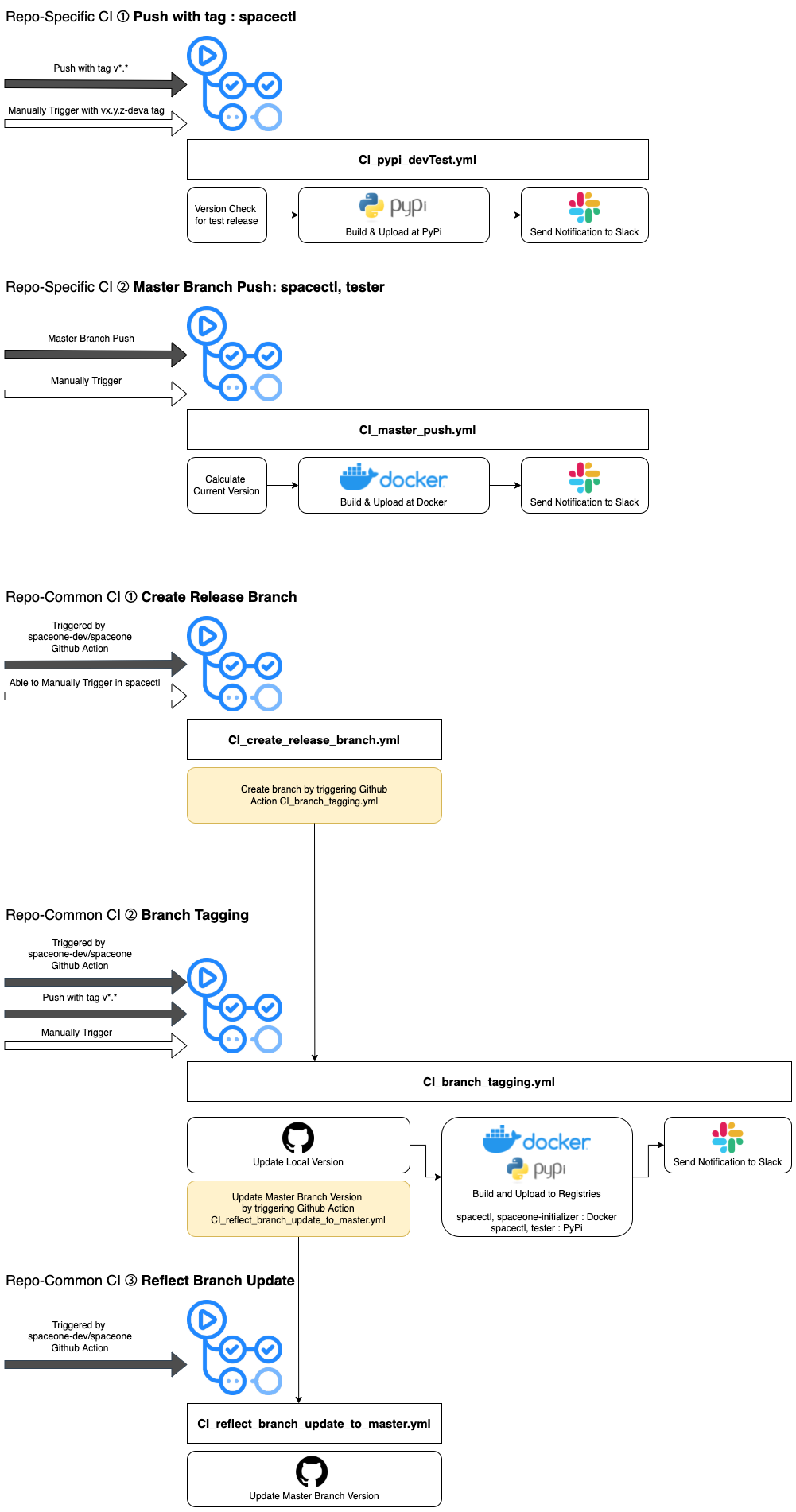

- 5.8.6: Tools CI

- 5.9: Contribute

- 5.9.1: Documentation

- 5.9.1.1: Content Guide

- 5.9.1.2: Style Guide (shortcodes)

1 - Introduction

Introducing Cloudforet Project

1.1 - Overview

Introducing Cloudforet Project

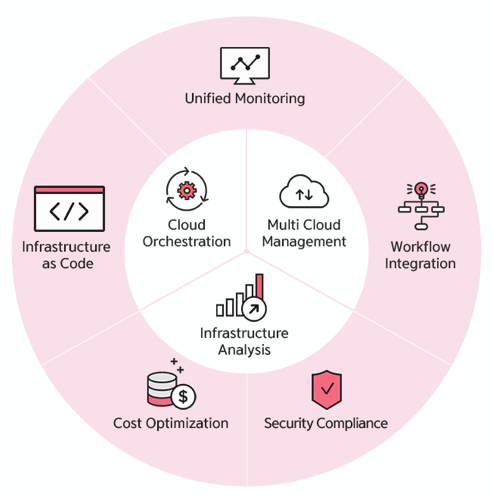

Main Features

1. Multi-Cloud Management

- IaaS Infra-integration: Auto-discovery and organization of infrastructure information scattered across various platforms

- Resource Search: Fast, relevance-ranked search across resources

- Resource Monitoring: Immediate access to the status of resources connected to the infrastructure

2. Cloud Orchestration

- Infrastructure as Code: Code-based infrastructure configuration management

- Remote Command: Batch command execution over multiple remote servers

- Application Catalog: Supports easy installation of applications such as databases and middleware

3. Infra. Analysis

- Security Compliance: Automatic detection and analysis of cloud security vulnerabilities

- Cost Optimization: Detection of unused resources and analysis of over-provisioned infrastructure

- Capacity Planning: Infrastructure usage statistics and expansion planning

Cloudforet Universe

Our features are expanding into every area needed for multi-cloud operation and management, including inventory data, automation, and analysis, to build a Cloudforet universe.

1.2 - Integrations

Supported Technologies

Overview

Cloudforet provides Plugin Interfaces that extend its Core Services. The supported plugins are listed below.

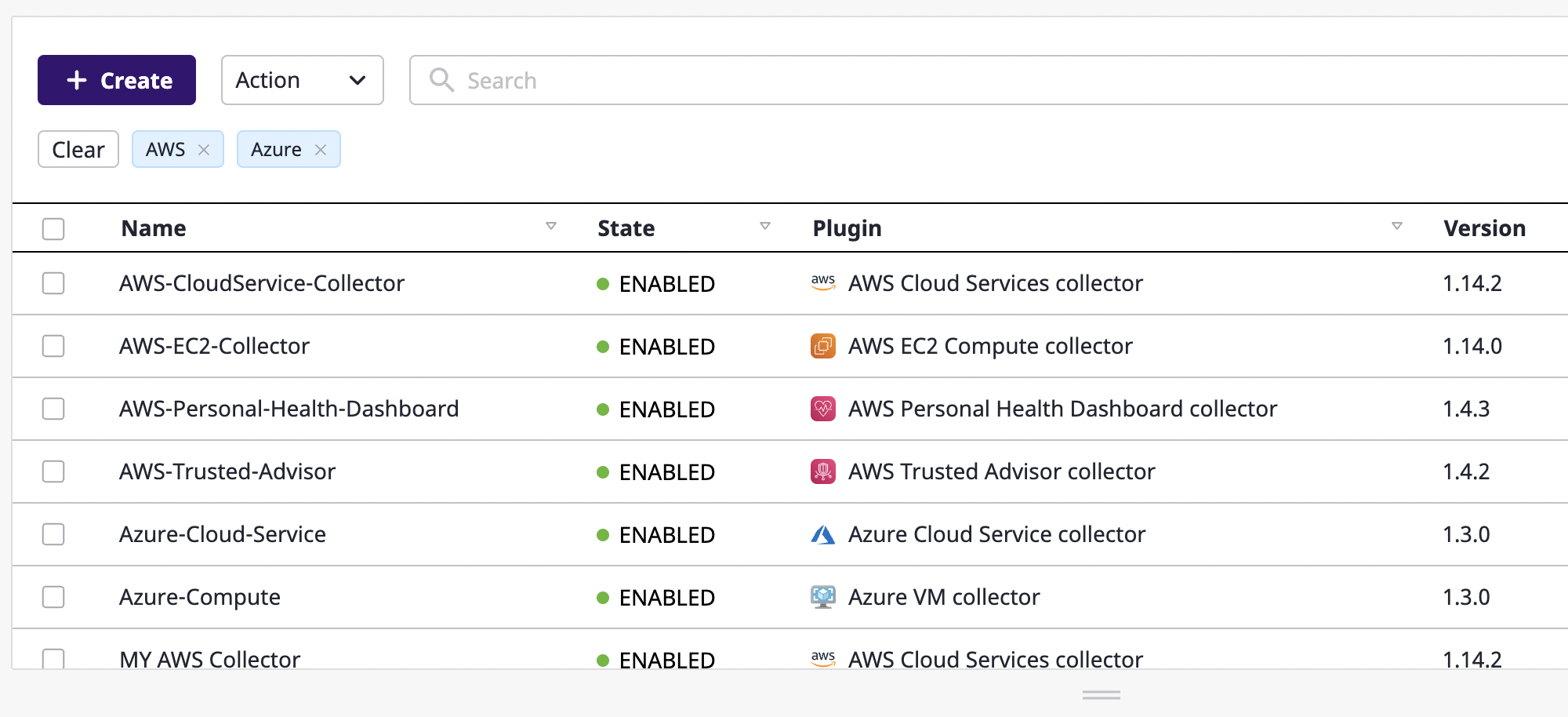

Inventory

Inventory.Collector supports Collection of Assets. Integrate all your cloud service accounts and scan all existing resources. All cloud resources are collected through Cloudforet collector plugins based on the Plugin Interfaces.

AWS Cloud Service Plugin

MS Azure Cloud Service Plugin

Google Cloud Service Plugin

Identity

Identity.auth supports user management. Choose from various authentication options: Cloudforet supports everything from local ID / password to external identity services, including Google OAuth2, Active Directory, and Keycloak.

Google oAuth Identity Plugin

KeyCloak Identity Plugin

Monitoring

DataSource

AWS CloudWatch DataSource Plugin

Azure Monitor DataSource Plugin

Google Cloud Monitor DataSource Plugin

Webhook

AWS Simple Notification Webhook Plugin

Zabbix Webhook Plugin

Grafana Webhook Plugin

Notification

API Direct Connect Protocol Plugin

AWS SNS Protocol Plugin

Slack Protocol Plugin

Telegram Protocol Plugin

Email Protocol Plugin

Billing

Megazone Hyperbilling Billing Service

1.3 - Key Differentiators

Core technology of Cloudforet.

Open Platform

To provide effective and flexible support across various cloud platforms, we pursue an open source strategy built around a cloud developer community.

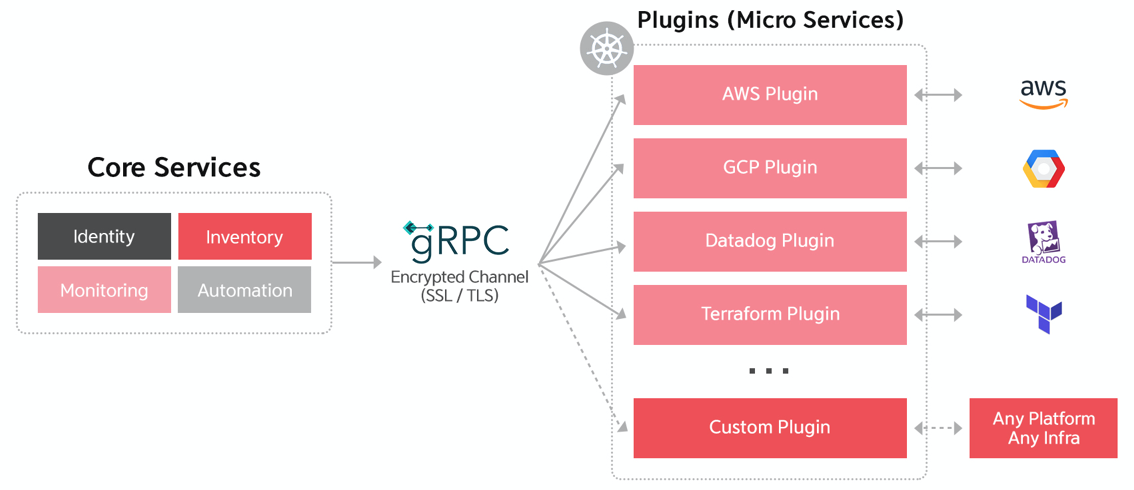

Plugin Interfaces

The Protocol Buffers-based gRPC framework provides its own optimized engine, enabling efficient processing of thousands of different cloud schemas on an MSA (Micro Service Architecture).

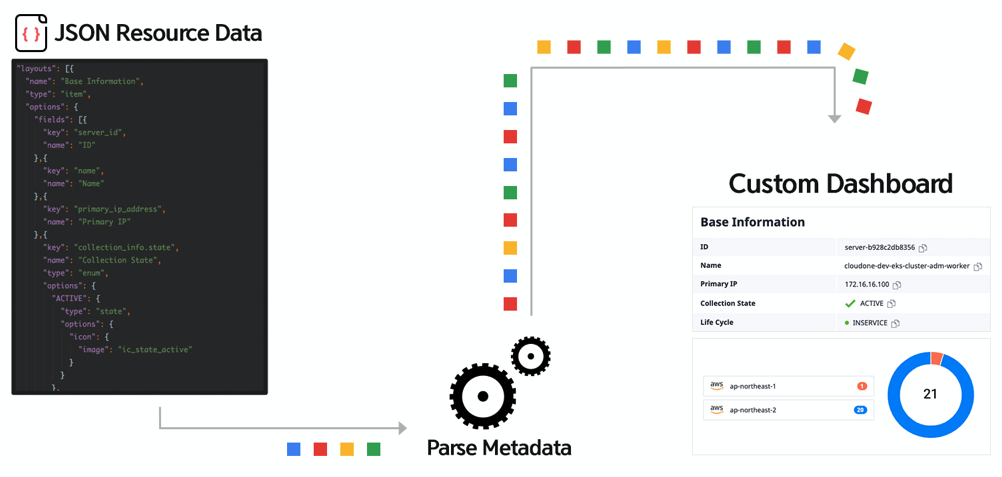

Dynamic Rendering

Provides a user-customized view of selected items by creating a Custom Dashboard based on JSON metadata.

Plugin Ecosystem

A plugin marketplace that gives various users, such as MSPs, third parties, and customers, the freedom to develop and install plugins according to their own needs.

1.4 - Release Notes

SpaceONE Release Notes

2 - Concepts

About SpaceONE Project

2.1 - Architecture

Overall Architecture

2.2 - Identity

Overall explanation of identity service

2.2.1 - Project Management

About project management

2.2.2 - Role Based Access Control

This page explores the basic concepts of Role-Based Access Control (RBAC) in SpaceONE.

How RBAC Works

SpaceONE's RBAC (Role Based Access Control) defines who can access what, and within which organization (project or domain).

For example, the Project Admin Role can view (Read) and change (Update/Delete) all resources within the specified Project. The Domain Viewer Role can view (Read) all resources within the specified domain. Resources here include everything from users created within SpaceONE to Projects/Project Groups and individual cloud resources.

Every user has one or more roles, which can be assigned directly or inherited within a project. This makes it easy to manage user roles in complex project relationships.

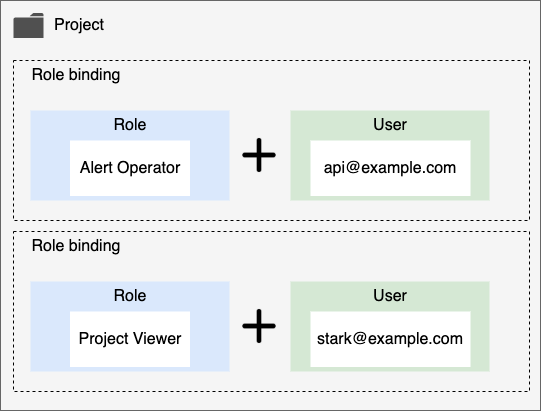

A Role defines which actions can be performed on the resources specified through a Policy, and each Role is bound to users. The diagram shows the relationships between the Users, Roles, and Projects that make up RBAC.

This role management model is divided into three main components.

- Role. A collection of access-right policies that can be granted to each user. Every role must have a policy. For a more detailed explanation, refer to Understanding Role.

- Project. The project or project group to which the permission is applied.

- User. Users include those who log in to the console and use the UI, API users, and SYSTEM users. Each user is connected to multiple Roles through RoleBinding, and thereby receives the permissions needed to access the various resources of SpaceONE.

Basic Concepts

When a user wants to access resources within an organization, the administrator grants each user a role of the target project or domain. SpaceONE Identity Service verifies the Role/Policy granted to each user to determine whether each user can access resources or not.

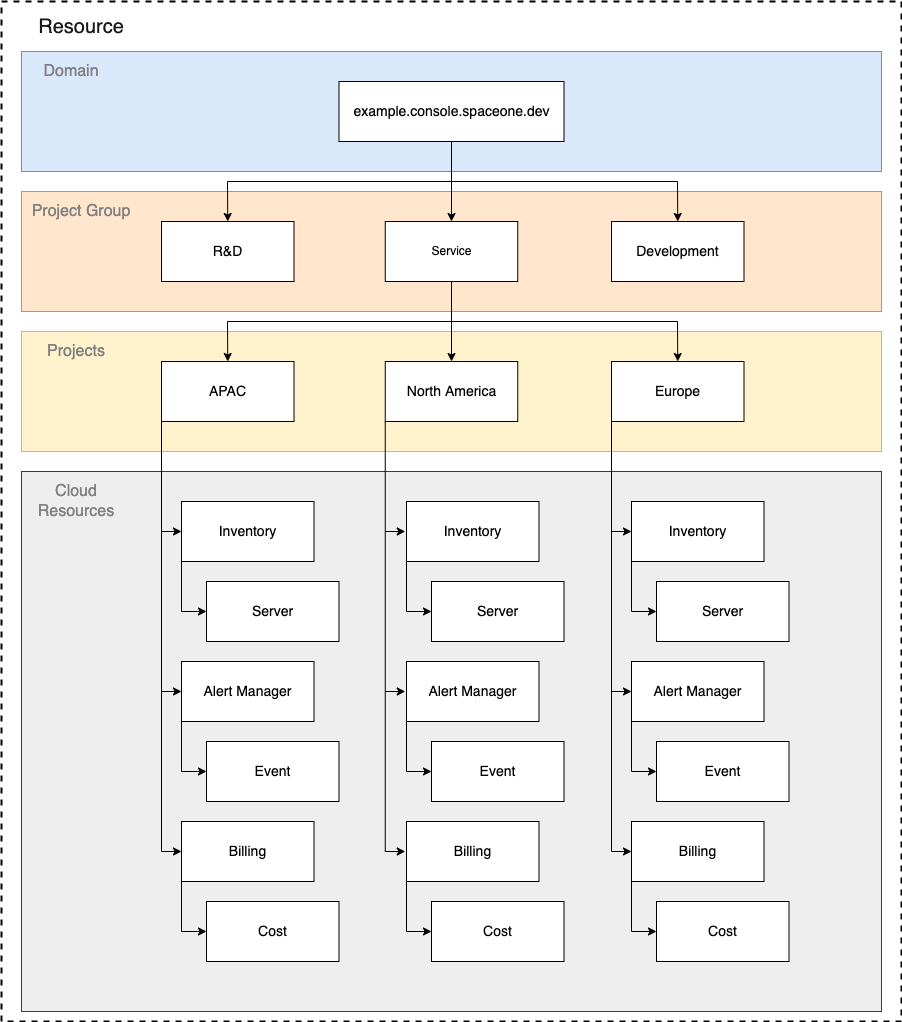

Resource

If a user needs to access a resource in a specific SpaceONE project, you can grant the user an appropriate role and then add the user to the target project as a member. Examples of such resources are Server, Project, and Alert.

In order to conveniently use the resources managed within SpaceONE for each service, we provide predefined Role/Policy. If you want to define your own access scope within the company, you can create a Custom Policy/Custom Role and apply it to the internal organization.

For a detailed explanation of this, refer to Understanding Role.

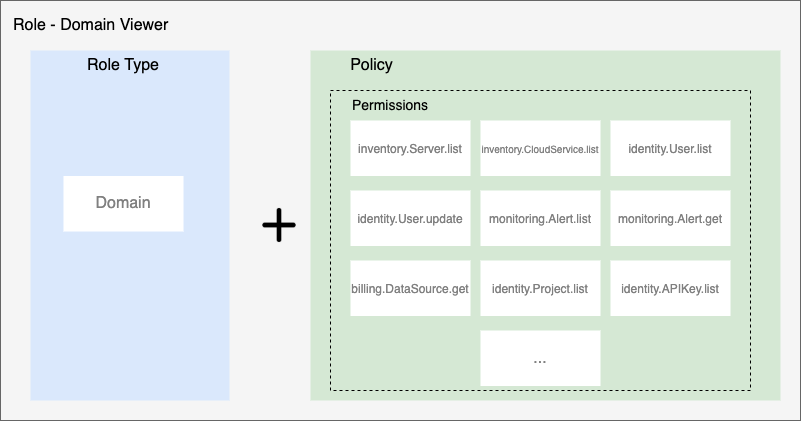

Policy

A policy is a collection of permissions. Each permission defines the allowed access scope for a SpaceONE resource. A policy can be assigned to each user through a role. Policies can be published on the Marketplace and used by other users, or published privately for a specific domain.

A permission is expressed in the form {service}.{resource}.{verb}, for example inventory.Server.list.

Permission also corresponds to SpaceONE API Method. This is because each microservice in SpaceONE is closely related to each exposed API method. Therefore, when the user calls SpaceONE API Method, corresponding permission is required.

For example, if you want to call inventory.Server.list to see the server list of the Inventory service, you must have the corresponding inventory.Server.list permission included in your role.

Permission cannot be granted directly to a user. Instead, an appropriate set of permissions can be defined as a policy and assigned to a user through a role. For more information, refer to Understanding Policy.
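The relationship between permissions, policies, and roles can be sketched as follows. This is a minimal illustration under stated assumptions, not SpaceONE's implementation: the Policy and Role classes, parse_permission, is_allowed, and the example policy ID are hypothetical names, and only exact {service}.{resource}.{verb} matches are considered.

```python
from dataclasses import dataclass, field


def parse_permission(permission: str) -> tuple[str, str, str]:
    """Split a '{service}.{resource}.{verb}' string into its three parts."""
    parts = permission.split(".")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected service.resource.verb, got {permission!r}")
    return parts[0], parts[1], parts[2]


@dataclass(frozen=True)
class Policy:
    """A policy is a named collection of permissions (hypothetical model)."""
    policy_id: str
    permissions: frozenset[str]


@dataclass
class Role:
    """A role carries policies; permissions reach a user only through a role."""
    name: str
    policies: list[Policy] = field(default_factory=list)

    def is_allowed(self, api_method: str) -> bool:
        # Validate the format first, then look for an exact match in any policy.
        parse_permission(api_method)
        return any(api_method in policy.permissions for policy in self.policies)


# Hypothetical policy and role, for illustration only.
viewer_policy = Policy(
    "policy-example-server-viewer",
    frozenset({"inventory.Server.list", "inventory.Server.get"}),
)
viewer = Role("Example Server Viewer", [viewer_policy])

print(viewer.is_allowed("inventory.Server.list"))    # True
print(viewer.is_allowed("inventory.Server.delete"))  # False
```

Calling an API method then reduces to asking whether any policy attached to the caller's role contains the matching permission string.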

Roles

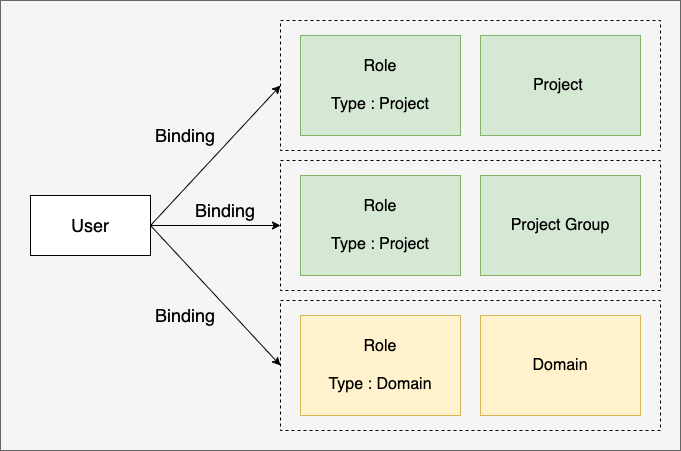

A role is composed of a combination of an access target and a policy. Permission cannot be granted directly to a user, but can be granted in the form of a role. Also, all resources in SpaceONE belong to a Project. Access can be managed separately at the DOMAIN and PROJECT levels.

For example, Domain Admin Role is provided for the full administrator of the domain, and Alert Manager Operator Role is provided for event management of Alert Manager.

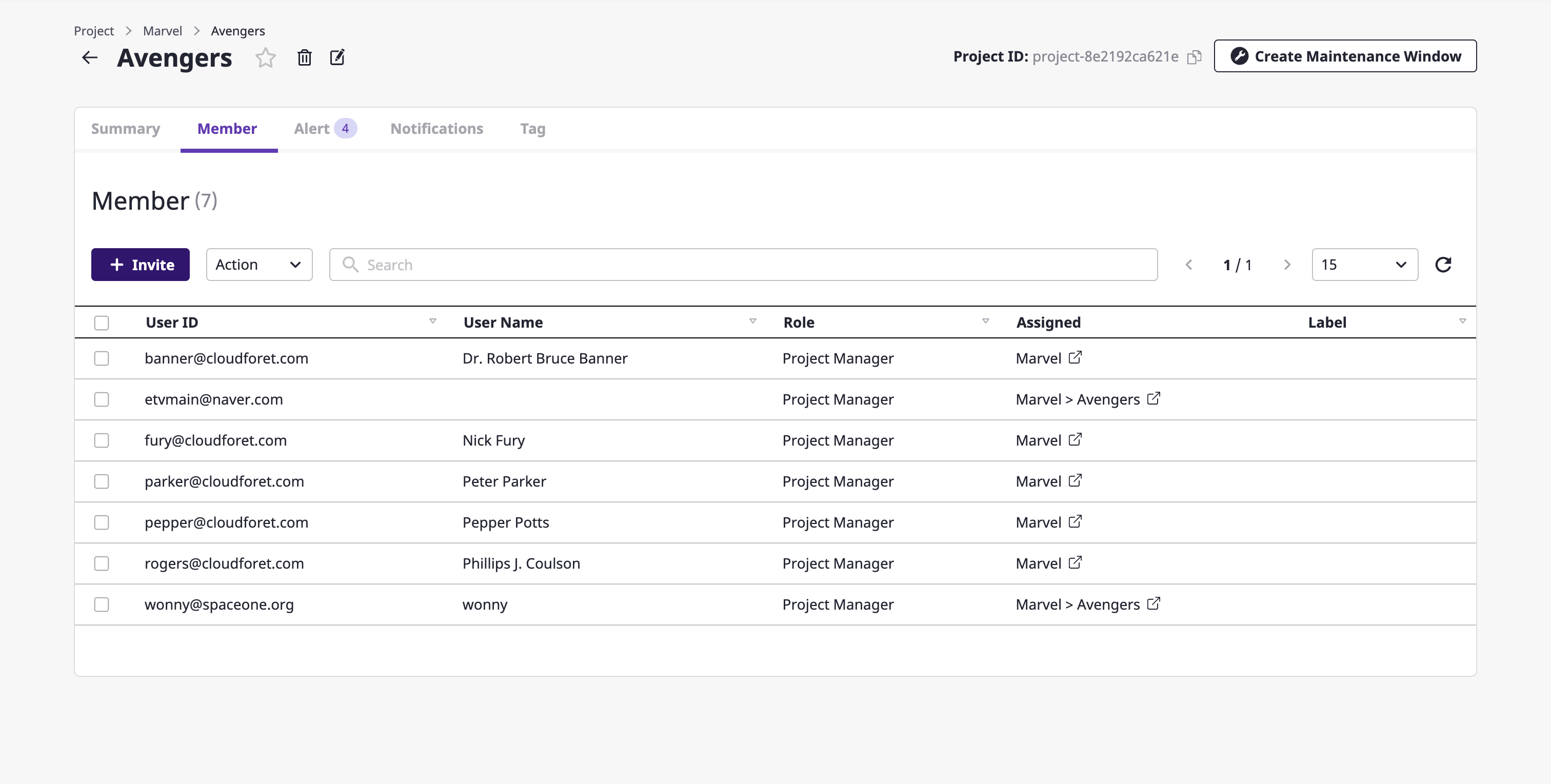

Members

All cloud resources managed within SpaceONE are managed in units of projects. Therefore, you can control access to resources by giving each user a role and adding them as project members.

Depending on the role type, the user can access all resources within the domain or the resources within the specified project.

- Domain: You can access all resources within the domain.

- Project: You can access the resources within the specified Project.

Users with a Project type role can access resources within a project only after being explicitly added as a member of that project.

If a user is added as a member of a Project Group, the right to access the resources of all subordinate projects is inherited.

Organization

All resources in SpaceONE can be managed hierarchically through the following organizational structure.

Each user's access targets are specified by connecting the user to an organization through RoleBinding.

- Domain: The highest-level organization. Covers all projects and project groups.

- Project Group: An organization that can integrate and manage multiple projects.

- Project: The smallest organizational unit in SpaceONE. All cloud resources belong to a project.

2.2.2.1 - Understanding Policy

This page takes a detailed look at Policy.

Policy

Policy is a set of permissions defined to perform specific actions on SpaceONE resources. Permissions define the scopes that can be managed for Cloud Resources. For an overall description of the authority management system, please refer to Role Based Access Control.

Policy Type

Once defined, a policy can be shared so that roles in other domains can use it. Depending on whether this is possible, policies are divided into two types.

- MANAGED: A policy defined globally in the Repository service. It is managed and shared directly by the administrator of the entire system. This is a common policy convenient for most users.

- CUSTOM: A policy with self-defined permissions for each domain. It is useful for managing detailed permissions per domain.

Note

MANAGED Policy is published on Official Marketplace and managed by the CloudONE team.

CUSTOM Policy is published in the Private Repository and managed by the administrator of each domain.

Policies can also be classified as follows according to permission scope.

- Basic: Includes overall permission for all resources in SpaceONE.

- Predefined: Includes granular permission for specific services (alert manager, billing, etc.).

Managed Policy

The policy below is a full list of Managed Policies managed by the CloudONE team. Detailed permission is automatically updated if necessary. Managed Policy was created to classify policies according to the major roles within the organization.

| Policy Type | Policy Name | Policy Id | Permission Description | Reference |
|---|---|---|---|---|
| MANAGED-Basic | Domain Admin Access | policy-managed-domain-admin | Has all privileges except the following: create/delete domain; APIs whose api_type is SYSTEM/NO_AUTH; manage DomainOwner (create/change/delete); manage plug-ins: identity.Auth plugin management (change) | policy-managed-domain-admin |
| MANAGED-Basic | Domain Viewer Access | policy-managed-domain-viewer | Read permissions among the Domain Admin Access permissions | policy-managed-domain-viewer |
| MANAGED-Basic | Project Admin Access | policy-managed-project-admin | Domain Admin Access Policy permissions, excluding the following: manage providers (create/change/inquire/delete); manage Role/Policy (create/change/delete); manage plug-ins: inventory.Collector plugin management (create/change/delete), monitoring.DataSource plugin management (create/change/delete), notification.Protocol plugin management (create/change/delete) | policy-managed-project-admin |
| MANAGED-Basic | Project Viewer Access | policy-managed-project-viewer | Read permissions among the Project Admin Access Policy permissions | policy-managed-project-viewer |
| MANAGED-Predefined | Alert Manager Full Access | policy-managed-alert-manager-full-access | Full access to Alert Manager | policy-managed-alert-manager-full-access |

Custom Policy

If you want to manage the policy of a domain by yourself, please refer to the Managing Custom Policy document.

2.2.2.2 - Understanding Role

This page takes a detailed look at Roles.

Role structure

A Role consists of a Role Type, which specifies the scope of access to resources as shown below, and the organization (project or project group) to which the permissions apply. Users are granted access rights within SpaceONE through RoleBinding.

Role Example

Example: Alert Manager Operator Role

    ---
    results:
    - created_at: '2021-11-15T05:12:31.060Z'
      domain_id: domain-xxx
      name: Alert Manager Operator
      policies:
      - policy_id: policy-managed-alert-manager-operator
        policy_type: MANAGED
      role_id: role-f18c7d2a9398
      role_type: PROJECT
      tags: {}

Example: Domain Viewer Role

    ---
    results:
    - created_at: '2021-11-15T05:12:28.865Z'
      domain_id: domain-xxx
      name: Domain Viewer
      policies:
      - policy_id: policy-managed-domain-viewer
        policy_type: MANAGED
      role_id: role-242f9851eee7
      role_type: DOMAIN
      tags: {}

Role Type

Role Type specifies the range of accessible resources within the domain.

- DOMAIN: Access is possible to all resources in the domain.

- PROJECT: Access is possible to all resources in the project added as a member.

For how to add a user as a member of a project, refer to Add as Project Member.

Add Member

All resources in SpaceONE are managed hierarchically as follows. The domain administrator gives users access to the resources within a project by adding them to that project as members. Users who need access to multiple projects can be added as members of the parent project group, which grants access to all projects lower in the hierarchy. For how to add a member to a Project Group, refer to Add as a Member of Project Group.

Role Hierarchy

If a user has complex Rolebinding within the hierarchical project structure. Role is applied according to the following rules.

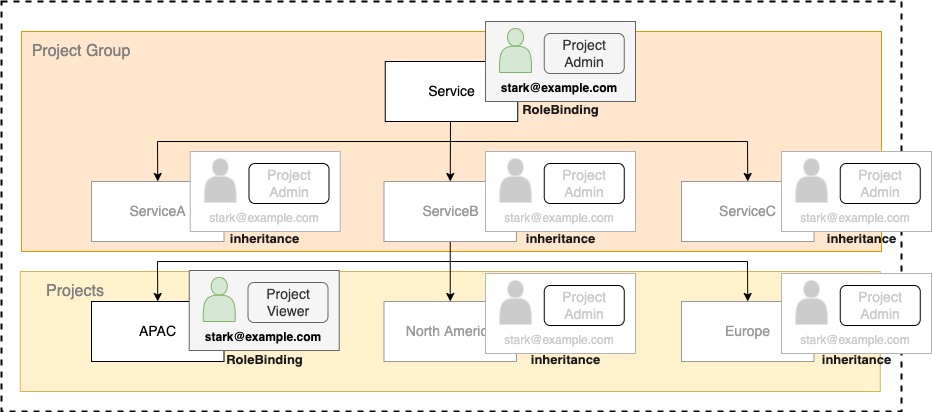

For example, as shown in the figure below, the user stark@example.com is bound to the parent Project Group as Project Admin Role, and the lower level project is APAC. When it is bound to Project Viewer Role in Roles for each project are applied in the following way.

- The role of the parent project is applied to the sub-project/project group that is not directly bound by RoleBinding.

- The role is applied to the subproject that has explicit RoleBinding. (overwriting the higher-level role)
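The two rules above amount to walking up the project hierarchy until a RoleBinding is found. The sketch below illustrates this under stated assumptions: effective_role, PARENTS, and ROLE_BINDINGS are hypothetical names, and the "Global" project group and "EMEA" project are invented for the example (only stark@example.com, APAC, and the two role names come from the text).

```python
from typing import Optional

# Child -> parent in the project hierarchy; None marks the top-level group.
# "Global" and "EMEA" are hypothetical names used only for illustration.
PARENTS: dict[str, Optional[str]] = {
    "Global": None,
    "APAC": "Global",
    "EMEA": "Global",
}

# (user, project or project group) -> role name
ROLE_BINDINGS: dict[tuple[str, str], str] = {
    ("stark@example.com", "Global"): "Project Admin",
    ("stark@example.com", "APAC"): "Project Viewer",
}


def effective_role(
    user: str,
    target: str,
    parents: dict[str, Optional[str]],
    bindings: dict[tuple[str, str], str],
) -> Optional[str]:
    """Return the closest RoleBinding found when walking from target upward."""
    node: Optional[str] = target
    while node is not None:
        role = bindings.get((user, node))
        if role is not None:
            # An explicit binding on a lower level wins over any parent role.
            return role
        node = parents.get(node)
    return None


print(effective_role("stark@example.com", "APAC", PARENTS, ROLE_BINDINGS))  # Project Viewer
print(effective_role("stark@example.com", "EMEA", PARENTS, ROLE_BINDINGS))  # Project Admin
```

APAC resolves to its own explicit binding, while EMEA has none and therefore falls back to the role bound on the parent group.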

Default Roles

Every SpaceONE domain automatically includes the Default Roles when it is created. The list is below.

| Name | Role Type | Description |
|---|---|---|
| Domain Admin | DOMAIN | You can view/change/delete all domain resources |
| Domain Viewer | DOMAIN | You can view all domain resources |
| Project Admin | PROJECT | You can view/change/delete all resources of the projects you are added to as a member |
| Project Viewer | PROJECT | You can view all resources of the projects you are added to as a member |
| Alert Manager Operator | PROJECT | You can view all resources of the projects you are added to as a member, and have the alert handling authority of Alert Manager |

Managing Roles

Roles can be managed by the domain itself through spacectl. Please refer to the Managing Roles document.

2.3 - Inventory

Overall explanation of inventory service

2.3.1 - Monitoring

About monitoring service

2.4 - Alert Manager

About alert manager

2.5 - Cost Analysis

About cost analysis

3 - Setup & Operation

SpaceONE System Administrator Guide

3.1 - Getting Started

How to install Cloudforet for developers

This is the Getting Started installation guide using minikube.

Note: This guide is for development use only, not for production.

Verified Environments

| Distro | Status | Link (e.g. blog) |
|---|---|---|
| Ubuntu 20.04 | Not Tested | |
| Ubuntu 22.04 | Verified | |
| Amazon Linux 2 | Not Tested | |
| Amazon Linux 2023 | Not Tested | |
| macOS (Apple Silicon, M1) | Verified | |
| macOS (Apple Silicon, M2) | Verified | |
| Windows | Verified | https://medium.com/@ayushsharma2267410/installation-of-cloudforet-in-windows-8c4a10c9a65f |

Overview

Cloudforet-Minikube Architecture

Prerequisites

- AWS EC2 VM (Intel/AMD/ARM CPU)

  - Recommended instance type: t3.large (2 cores, 8 GB Memory, 30GB EBS)

- Docker/Docker Desktop

  - If you don't have Docker installed, minikube will return an error as minikube uses docker as the driver.

  - Highly recommend installing Docker Desktop based on your OS.

- Minikube

  - Requires minimum Kubernetes version of 1.21+.

- Kubectl

- Helm

  - Requires minimum Helm version of 3.11.0+.

  - If you want to learn more about Helm, refer to the Helm documentation.
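The minimum versions listed above can be compared against the versions reported by your tools. The following is a minimal sketch, assuming you paste in the version strings yourself; parse_version, meets_minimum, and MINIMUMS are hypothetical helper names, and the sample strings are example inputs rather than output from any specific command.

```python
import re

# Minimum versions stated in the prerequisites list.
MINIMUMS = {
    "kubernetes": (1, 21),
    "helm": (3, 11, 0),
}


def parse_version(text: str) -> tuple[int, ...]:
    """Extract the first dotted number (e.g. 'v3.11.0' -> (3, 11, 0))."""
    match = re.search(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(found: str, minimum: tuple[int, ...]) -> bool:
    """Compare versions after padding both to the same length with zeros."""
    version = parse_version(found)
    width = max(len(version), len(minimum))

    def pad(value: tuple[int, ...]) -> tuple[int, ...]:
        return value + (0,) * (width - len(value))

    return pad(version) >= pad(minimum)


# Example inputs (hypothetical version strings).
print(meets_minimum("v1.28.3", MINIMUMS["kubernetes"]))  # True
print(meets_minimum("v3.9.4", MINIMUMS["helm"]))         # False
```

Padding with zeros lets a short string such as "v3.11" compare equal to the three-part minimum 3.11.0.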

Before diving into the Cloudforet Installation process, start minikube by running the command below.

minikube start --driver=docker --memory=5000mb

If you encounter the error Unable to resolve the current Docker CLI context "default", check whether the Docker daemon is running.

Installation

You can install Cloudforet by following the steps below.

For Cloudforet v1.12.x, we do not provide Helm charts online. You can download the Helm chart from the Cloudforet GitHub.

1) Download Helm Chart Repository

These commands download the Helm chart archive and extract it.

# Set working directory

mkdir cloudforet-deployment

cd cloudforet-deployment

wget https://github.com/cloudforet-io/charts/releases/download/spaceone-1.12.12/spaceone-1.12.12.tgz

tar zxvf spaceone-1.12.12.tgz

2) Create Namespaces

kubectl create ns cloudforet

kubectl create ns cloudforet-plugin

3) Create Role and RoleBinding

First, download the rbac.yaml file.

The rbac.yaml file regulates access to resources based on the roles of individual users. For more information about RBAC authorization in Kubernetes, refer to the Kubernetes documentation.

If you are used to downloading files via command-line, run this command to download the file.

wget https://raw.githubusercontent.com/cloudforet-io/charts/master/examples/rbac.yaml -O rbac.yaml

Next, execute the following command.

kubectl apply -f rbac.yaml -n cloudforet-plugin

4) Install Cloudforet Chart

Download default YAML file for helm chart.

wget https://raw.githubusercontent.com/cloudforet-io/charts/master/examples/values/release-1-12.yaml -O release-1-12.yaml

helm install cloudforet spaceone -n cloudforet -f release-1-12.yaml

After executing the above command, check the status of the pod.

Scheduler pods are in CrashLoopBackOff or Error state. This is because the setup is not complete.

kubectl get pod -n cloudforet

NAME READY STATUS RESTARTS AGE

board-5746fd9657-vtd45 1/1 Running 0 57s

config-5d4c4b7f58-z8k9q 1/1 Running 0 58s

console-6b64cf66cb-q8v54 1/1 Running 0 59s

console-api-7c95848cb8-sgt56 2/2 Running 0 58s

console-api-v2-rest-7d64bc85dd-987zn 2/2 Running 0 56s

cost-analysis-7b9d64b944-xw9qg 1/1 Running 0 59s

cost-analysis-scheduler-ff8cc758d-lfx4n 0/1 Error 3 (37s ago) 55s

cost-analysis-worker-559b4799b9-fxmxj 1/1 Running 0 58s

dashboard-b4cc996-mgwj9 1/1 Running 0 56s

docs-5fb4cc56c7-68qbk 1/1 Running 0 59s

identity-6fc984459d-zk8r9 1/1 Running 0 56s

inventory-67498999d6-722bw 1/1 Running 0 57s

inventory-scheduler-5dc6856d44-4spvm 0/1 CrashLoopBackOff 3 (18s ago) 59s

inventory-worker-68d9fcf5fb-x6knb 1/1 Running 0 55s

marketplace-assets-8675d44557-ssm92 1/1 Running 0 59s

mongodb-7c9794854-cdmwj 1/1 Running 0 59s

monitoring-fdd44bdbf-pcgln 1/1 Running 0 59s

notification-5b477f6c49-gzfl8 1/1 Running 0 59s

notification-scheduler-675696467-gn24j 1/1 Running 0 59s

notification-worker-d88bb6df6-pjtmn 1/1 Running 0 57s

plugin-556f7bc49b-qmwln 1/1 Running 0 57s

plugin-scheduler-86c4c56d84-cmrmn 0/1 CrashLoopBackOff 3 (13s ago) 59s

plugin-worker-57986dfdd6-v9vqg 1/1 Running 0 58s

redis-75df77f7d4-lwvvw 1/1 Running 0 59s

repository-5f5b7b5cdc-lnjkl 1/1 Running 0 57s

secret-77ffdf8c9d-48k46 1/1 Running 0 55s

spacectl-5664788d5d-dtwpr 1/1 Running 0 59s

statistics-67b77b6654-p9wcb 1/1 Running 0 56s

statistics-scheduler-586875947c-8zfqg 0/1 Error 3 (30s ago) 56s

statistics-worker-68d646fc7-knbdr 1/1 Running 0 58s

supervisor-scheduler-6744657cb6-tpf78 2/2 Running 0 59s

To execute the commands below, every POD except xxxx-scheduler-yyyy must have a Running status.
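The rule that every pod except the scheduler pods must be Running can be checked mechanically. This is a minimal sketch, assuming the plain-text output of kubectl get pod has the NAME, READY, STATUS column order shown in the listing; non_running_pods is a hypothetical helper, not part of any Cloudforet tool.

```python
def non_running_pods(kubectl_output: str, ignore_substring: str = "-scheduler-") -> list[str]:
    """Return names of pods that are not Running, skipping scheduler pods."""
    not_running = []
    for line in kubectl_output.strip().splitlines():
        fields = line.split()
        # Skip blank lines and the header row.
        if len(fields) < 3 or fields[0] == "NAME":
            continue
        name, status = fields[0], fields[2]
        if ignore_substring in name:
            continue
        if status != "Running":
            not_running.append(name)
    return not_running


# A few rows taken from the listing in this guide.
SAMPLE = """\
NAME READY STATUS RESTARTS AGE
identity-6fc984459d-zk8r9 1/1 Running 0 56s
inventory-scheduler-5dc6856d44-4spvm 0/1 CrashLoopBackOff 3 (18s ago) 59s
cost-analysis-scheduler-ff8cc758d-lfx4n 0/1 Error 3 (37s ago) 55s
mongodb-7c9794854-cdmwj 1/1 Running 0 59s
"""

print(non_running_pods(SAMPLE))  # []
```

An empty list means it is safe to continue with the next step; any names returned are pods that still need to reach Running.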

5) Initialize the Configuration

First, download the initializer.yaml file.

For more information about the initializer, please refer to the spaceone-initializer.

If you are used to downloading files via command-line, run this command to download the file.

wget https://raw.githubusercontent.com/cloudforet-io/charts/master/examples/initializer.yaml -O initializer.yaml

And execute the following command.

wget https://github.com/cloudforet-io/charts/releases/download/spaceone-initializer-1.3.3/spaceone-initializer-1.3.3.tgz

tar zxvf spaceone-initializer-1.3.3.tgz

helm install initializer spaceone-initializer -n cloudforet -f initializer.yaml

6) Set the Helm Values and Upgrade the Chart

Once the initialization completes, you can get the system token from the initializer pod logs.

To find the name of the initializer pod, first run this command to list all pods in the cloudforet namespace.

kubectl get pods -n cloudforet

Then, among the pods shown, copy the name of the pod that starts with initialize-spaceone.

NAME READY STATUS RESTARTS AGE

board-5997d5688-kq4tx 1/1 Running 0 24m

config-5947d845b5-4ncvn 1/1 Running 0 24m

console-7fcfddbd8b-lbk94 1/1 Running 0 24m

console-api-599b86b699-2kl7l 2/2 Running 0 24m

console-api-v2-rest-cb886d687-d7n8t 2/2 Running 0 24m

cost-analysis-8658c96f8f-88bmh 1/1 Running 0 24m

cost-analysis-scheduler-67c9dc6599-k8lgx 1/1 Running 0 24m

cost-analysis-worker-6df98df444-5sjpm 1/1 Running 0 24m

dashboard-84d8969d79-vqhr9 1/1 Running 0 24m

docs-6b9479b5c4-jc2f8 1/1 Running 0 24m

identity-6d7bbb678f-b5ptf 1/1 Running 0 24m

initialize-spaceone-fsqen-74x7v 0/1 Completed 0 98m

inventory-64d6558bf9-v5ltj 1/1 Running 0 24m

inventory-scheduler-69869cc5dc-k6fpg 1/1 Running 0 24m

inventory-worker-5649876687-zjxnn 1/1 Running 0 24m

marketplace-assets-5fcc55fb56-wj54m 1/1 Running 0 24m

mongodb-b7f445749-2sr68 1/1 Running 0 101m

monitoring-799cdb8846-25w78 1/1 Running 0 24m

notification-c9988d548-gxw2c 1/1 Running 0 24m

notification-scheduler-7d4785fd88-j8zbn 1/1 Running 0 24m

notification-worker-586bc9987c-kdfn6 1/1 Running 0 24m

plugin-79976f5747-9snmh 1/1 Running 0 24m

plugin-scheduler-584df5d649-cflrb 1/1 Running 0 24m

plugin-worker-58d5cdbff9-qk5cp 1/1 Running 0 24m

redis-b684c5bbc-528q9 1/1 Running 0 24m

repository-64fc657d4f-cbr7v 1/1 Running 0 24m

secret-74578c99d5-rk55t 1/1 Running 0 24m

spacectl-8cd55f46c-xw59j 1/1 Running 0 24m

statistics-767d84bb8f-rrvrv 1/1 Running 0 24m

statistics-scheduler-65cc75fbfd-rsvz7 1/1 Running 0 24m

statistics-worker-7b6b7b9898-lmj7x 1/1 Running 0 24m

supervisor-scheduler-555d644969-95jxj 2/2 Running 0 24m

Before running the kubectl logs command below, make sure the status of the initializer pod (here, initialize-spaceone-fsqen-74x7v) is Completed. Running it while the pod is still initializing will produce errors.

Get the token by getting the log information of the pod with the name you found above.

kubectl logs initialize-spaceone-fsqen-74x7v -n cloudforet

...

TASK [Print Admin API Key] *********************************************************************************************

"TOKEN_SHOWN_HERE"

FINISHED [ ok=23, skipped=0 ] ******************************************************************************************

FINISH SPACEONE INITIALIZE
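If you prefer not to copy the token by hand, it can be pulled out of the saved log text. The sketch below assumes the log layout matches the excerpt above, with the quoted token on the first non-empty line after the "TASK [Print Admin API Key]" line; extract_admin_token is a hypothetical helper.

```python
from typing import Optional


def extract_admin_token(log_text: str) -> Optional[str]:
    """Return the value printed after the 'Print Admin API Key' task, if any."""
    lines = log_text.splitlines()
    for index, line in enumerate(lines):
        if line.startswith("TASK [Print Admin API Key]"):
            # The token is assumed to be the next non-empty line, in double quotes.
            for candidate in lines[index + 1:]:
                candidate = candidate.strip()
                if candidate:
                    return candidate.strip('"')
    return None


# Log excerpt with the same shape as the one shown in this guide.
SAMPLE_LOG = """\
TASK [Print Admin API Key] ****************************
"TOKEN_SHOWN_HERE"

FINISHED [ ok=23, skipped=0 ] *************************
FINISH SPACEONE INITIALIZE
"""

print(extract_admin_token(SAMPLE_LOG))  # TOKEN_SHOWN_HERE
```

The function returns None when the task line is missing, which usually means the initializer pod has not finished yet.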

Update your Helm values file (e.g. release-1-12.yaml). Only one item needs to be updated:

- TOKEN

For EC2 users: also put your EC2 server's public IP in place of localhost (127.0.0.1) for both the CONSOLE_API and CONSOLE_API_V2 ENDPOINT.

    console:
      production_json:
        CONSOLE_API:
          ENDPOINT: http://localhost:8081 # http://ec2_public_ip:8081 for EC2 users
        CONSOLE_API_V2:
          ENDPOINT: http://localhost:8082 # http://ec2_public_ip:8082 for EC2 users
    global:
      shared_conf:
        TOKEN: 'TOKEN_VALUE_FROM_ABOVE' # Change the system token

After editing the Helm values file (e.g. release-1-12.yaml), upgrade the Helm chart.

helm upgrade cloudforet spaceone -n cloudforet -f release-1-12.yaml

After upgrading, delete the pods in cloudforet namespace that have the label app.kubernetes.io/instance and value cloudforet.

kubectl delete po -n cloudforet -l app.kubernetes.io/instance=cloudforet

7) Check the status of the pods

kubectl get pod -n cloudforet

If all pods are in Running state, the setup is complete.

Port-forwarding

Installing Cloudforet on minikube doesn't provide any Ingress objects such as Amazon ALB or NGINX ingress controller. We can use kubectl port-forward instead.

Run the following commands for port forwarding.

# CLI commands

kubectl port-forward -n cloudforet svc/console 8080:80 --address='0.0.0.0' &

kubectl port-forward -n cloudforet svc/console-api 8081:80 --address='0.0.0.0' &

kubectl port-forward -n cloudforet svc/console-api-v2-rest 8082:80 --address='0.0.0.0' &
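To confirm that the three forwards are listening before opening the console, a plain TCP connection attempt is enough. This is a minimal sketch using only the Python standard library; is_port_open, check_forwards, and FORWARDED_PORTS are hypothetical names, and the port numbers are the ones used in the commands above.

```python
import socket

# Local ports used by the port-forward commands in this guide.
FORWARDED_PORTS = {
    "console": 8080,
    "console-api": 8081,
    "console-api-v2-rest": 8082,
}


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_forwards(host: str = "127.0.0.1", ports: dict[str, int] = FORWARDED_PORTS) -> dict[str, bool]:
    """Map each forwarded service name to whether its local port accepts connections."""
    return {name: is_port_open(host, port) for name, port in ports.items()}


if __name__ == "__main__":
    for name, reachable in check_forwards().items():
        print(f"{name}: {'reachable' if reachable else 'not reachable'}")
```

A successful connection only shows that something is listening on the port; it does not verify that the service behind it is healthy.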

Start Cloudforet

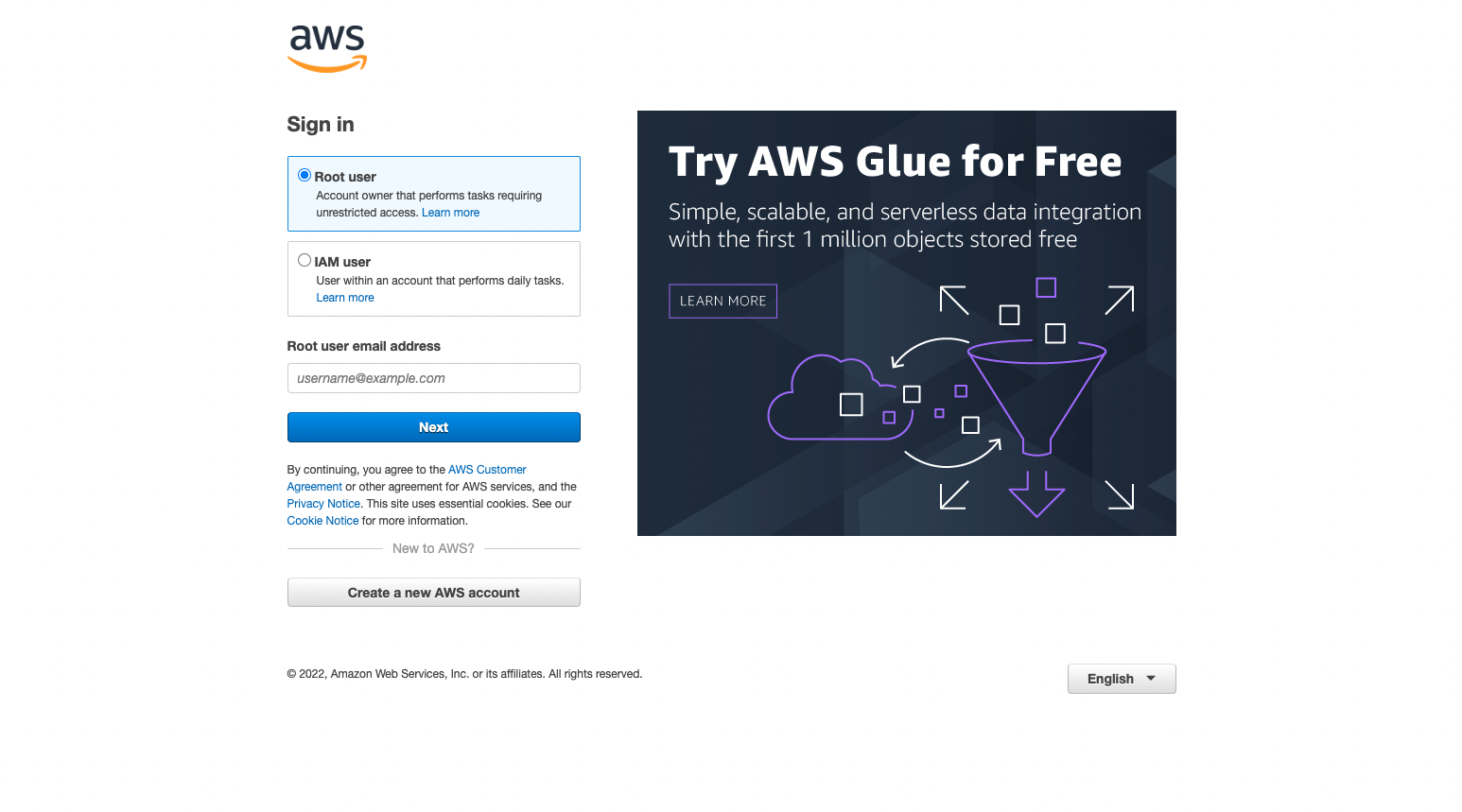

Log-In (Sign in for Root Account)

Open a browser at http://127.0.0.1:8080.

For EC2 users: open a browser at http://your_ec2_server_ip:8080 instead.

| ID | PASSWORD |
|---|---|
| admin | Admin123!@# |

Initial Setup for Cloudforet

For your reference, Cloudforet is an open source project for SpaceOne. For additional information, refer to our official website here.

Reference

3.2 - Installation

This section describes how to install Cloudforet.

3.2.1 - AWS

Install Guide of Cloudforet on AWS

Cloudforet Helm Charts

A Helm Chart for Cloudforet 1.12.

Prerequisites

- Kubernetes 1.21+

- Helm 3.2.0+

- Service Domain & SSL Certificate (optional)

  - Console: console.example.com

  - REST API: *.api.example.com

  - gRPC API: *.grpc.example.com

  - Webhook: webhook.example.com

- MongoDB 5.0+ (optional)

Cloudforet Architecture

Installation

You can install Cloudforet using the following steps.

1) Add Helm Repository

helm repo add cloudforet https://cloudforet-io.github.io/charts

helm repo update

helm search repo cloudforet

2) Create Namespaces

kubectl create ns spaceone

kubectl create ns spaceone-plugin

If you want to use only one namespace, you don't need to create the spaceone-plugin namespace.

3) Create Role and RoleBinding

First, download the rbac.yaml file.

wget https://raw.githubusercontent.com/cloudforet-io/charts/master/examples/rbac.yaml -O rbac.yaml

And execute the following command.

kubectl apply -f rbac.yaml -n spaceone-plugin

or

kubectl apply -f https://raw.githubusercontent.com/cloudforet-io/charts/master/examples/rbac.yaml -n spaceone-plugin

4) Install Cloudforet Chart

helm install cloudforet cloudforet/spaceone -n spaceone

After executing the above command, check the status of the pod.

kubectl get pod -n spaceone

NAME READY STATUS RESTARTS AGE

board-64f468ccd6-v8wx4 1/1 Running 0 4m16s

config-6748dc8cf9-4rbz7 1/1 Running 0 4m14s

console-767d787489-wmhvp 1/1 Running 0 4m15s

console-api-846867dc59-rst4k 2/2 Running 0 4m16s

console-api-v2-rest-79f8f6fb59-7zcb2 2/2 Running 0 4m16s

cost-analysis-5654566c95-rlpkz 1/1 Running 0 4m13s

cost-analysis-scheduler-69d77598f7-hh8qt 0/1 CrashLoopBackOff 3 (39s ago) 4m13s

cost-analysis-worker-68755f48bf-6vkfv 1/1 Running 0 4m15s

cost-analysis-worker-68755f48bf-7sj5j 1/1 Running 0 4m15s

cost-analysis-worker-68755f48bf-fd65m 1/1 Running 0 4m16s

cost-analysis-worker-68755f48bf-k6r99 1/1 Running 0 4m15s

dashboard-68f65776df-8s4lr 1/1 Running 0 4m12s

file-manager-5555876d89-slqwg 1/1 Running 0 4m16s

identity-6455d6f4b7-bwgf7 1/1 Running 0 4m14s

inventory-fc6585898-kjmwx 1/1 Running 0 4m13s

inventory-scheduler-6dd9f6787f-k9sff 0/1 CrashLoopBackOff 4 (21s ago) 4m15s

inventory-worker-7f6d479d88-59lxs 1/1 Running 0 4m12s

mongodb-6b78c74d49-vjxsf 1/1 Running 0 4m14s

monitoring-77d9bd8955-hv6vp 1/1 Running 0 4m15s

monitoring-rest-75cd56bc4f-wfh2m 2/2 Running 0 4m16s

monitoring-scheduler-858d876884-b67tc 0/1 Error 3 (33s ago) 4m12s

monitoring-worker-66b875cf75-9gkg9 1/1 Running 0 4m12s

notification-659c66cd4d-hxnwz 1/1 Running 0 4m13s

notification-scheduler-6c9696f96-m9vlr 1/1 Running 0 4m14s

notification-worker-77865457c9-b4dl5 1/1 Running 0 4m16s

plugin-558f9c7b9-r6zw7 1/1 Running 0 4m13s

plugin-scheduler-695b869bc-d9zch 0/1 Error 4 (59s ago) 4m15s

plugin-worker-5f674c49df-qldw9 1/1 Running 0 4m16s

redis-566869f55-zznmt 1/1 Running 0 4m16s

repository-8659578dfd-wsl97 1/1 Running 0 4m14s

secret-69985cfb7f-ds52j 1/1 Running 0 4m12s

statistics-98fc4c955-9xtbp 1/1 Running 0 4m16s

statistics-scheduler-5b6646d666-jwhdw 0/1 CrashLoopBackOff 3 (27s ago) 4m13s

statistics-worker-5f9994d85d-ftpwf 1/1 Running 0 4m12s

supervisor-scheduler-74c84646f5-rw4zf 2/2 Running 0 4m16s

Scheduler pods are in the CrashLoopBackOff or Error state. This is because the setup is not complete yet.
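To see at a glance which pods are not yet healthy, the table printed by kubectl can be filtered. The following is a minimal sketch; the helper name not_running_pods is hypothetical, and it assumes the STATUS value is the third column, as in the listing above.

```shell
# Print the names of pods whose STATUS column is neither Running nor Completed.
# Reads the table printed by `kubectl get pod` on stdin and skips the header row.
not_running_pods() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1 }'
}

# Usage (requires access to the cluster):
#   kubectl get pod -n spaceone | not_running_pods
```

At this stage the output is expected to contain only scheduler pods.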

5) Initialize the Configuration

First, download the initializer.yaml file.

wget https://raw.githubusercontent.com/cloudforet-io/charts/master/examples/initializer.yaml -O initializer.yaml

And execute the following command.

helm install cloudforet-initializer cloudforet/spaceone-initializer -n spaceone -f initializer.yaml

or

helm install cloudforet-initializer cloudforet/spaceone-initializer -n spaceone -f https://raw.githubusercontent.com/cloudforet-io/charts/master/examples/initializer.yaml

For more information about the initializer, please refer to the spaceone-initializer.

6) Set the Helm Values and Upgrade the Chart

Once the initialization is complete, you can get the system token from the initializer pod logs.

# check pod name

kubectl logs initialize-spaceone-xxxx-xxxxx -n spaceone

...

TASK [Print Admin API Key] *********************************************************************************************

"{TOKEN}"

FINISHED [ ok=23, skipped=0 ] ******************************************************************************************

FINISH SPACEONE INITIALIZE
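If you prefer not to copy the token by hand, it can be pulled out of the log text. This is only a sketch: the helper name extract_admin_token is hypothetical, and it assumes the log layout shown above, where the quoted token is the first non-empty line after the "Print Admin API Key" task line.

```shell
# Print the first non-empty line that follows the "Print Admin API Key" task
# header, with the surrounding double quotes removed. Reads the log on stdin.
extract_admin_token() {
  awk '
    /TASK \[Print Admin API Key\]/ { found = 1; next }
    found && NF { gsub(/"/, ""); print; exit }
  '
}

# Usage (requires access to the cluster; replace the pod name with yours):
#   kubectl logs initialize-spaceone-xxxx-xxxxx -n spaceone | extract_admin_token
```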

First, copy this TOKEN, then create the values.yaml file and paste the token into the TOKEN field.

console:
  production_json:
    # If you don't have a service domain, you refer to the following 'No Domain & IP Access' example.
    CONSOLE_API:
      ENDPOINT: https://console.api.example.com # Change the endpoint
    CONSOLE_API_V2:
      ENDPOINT: https://console-v2.api.example.com # Change the endpoint
global:
  shared_conf:
    TOKEN: '{TOKEN}' # Change the system token
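The same file can also be generated from shell variables, which avoids indentation mistakes when pasting. The sketch below uses a hypothetical helper named write_values_yaml; it only prints the keys shown above and does not cover any other chart options.

```shell
# write_values_yaml <token> <console-api-endpoint> <console-api-v2-endpoint>
# Prints a values.yaml body with the same keys as the example above.
write_values_yaml() {
  cat <<EOF
console:
  production_json:
    CONSOLE_API:
      ENDPOINT: $2
    CONSOLE_API_V2:
      ENDPOINT: $3
global:
  shared_conf:
    TOKEN: '$1'
EOF
}

# Usage:
#   write_values_yaml "$TOKEN" https://console.api.example.com \
#     https://console-v2.api.example.com > values.yaml
```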

For more advanced configuration, please refer to the following links.

- Documents

- Examples

After editing the values.yaml file, upgrade the helm chart.

helm upgrade cloudforet cloudforet/spaceone -n spaceone -f values.yaml

kubectl delete po -n spaceone -l app.kubernetes.io/instance=cloudforet

7) Check the status of the pods

kubectl get pod -n spaceone

If all pods are in Running state, the setup is complete.
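Pods take a while to restart after the upgrade, so the check usually has to be repeated. A small polling loop can do this; the function name wait_until_running and its default retry values are assumptions made for this sketch, and pods in the Completed state (such as the initializer) are treated as healthy.

```shell
# wait_until_running <namespace> [attempts] [delay-seconds]
# Polls `kubectl get pod` until every pod is Running or Completed.
# Returns 0 on success and 1 if the attempts run out.
wait_until_running() {
  ns=$1
  attempts=${2:-30}
  delay=${3:-10}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # Count pods whose STATUS column (3rd field) is not yet healthy.
    pending=$(kubectl get pod -n "$ns" --no-headers |
      awk '$3 != "Running" && $3 != "Completed" { n++ } END { print n + 0 }')
    [ "$pending" -eq 0 ] && return 0
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage (requires access to the cluster):
#   wait_until_running spaceone && echo "setup complete"
```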

8) Ingress and AWS Load Balancer

In Kubernetes, Ingress is an API object that provides a load-balanced external IP address to access Services in your cluster. It acts as a layer 7 (HTTP/HTTPS) reverse proxy and can route traffic to other services based on the requested host and URL path.

For more information, see What is an Application Load Balancer? on AWS and ingress in the Kubernetes documentation.

Prerequisite

Install AWS Load Balancer Controller

AWS Load Balancer Controller is a controller that helps manage ELB (Elastic Load Balancers) in a Kubernetes Cluster. Ingress resources are provisioned with Application Load Balancer, and service resources are provisioned with Network Load Balancer.

Installation methods may vary depending on the environment, so please refer to the official guide document below.

How to set up Cloudforet ingress

1) Ingress Type

Cloudforet provisions a total of 3 ingresses through 2 files.

- Console : Ingress to access the domain

- REST API : Ingress for API service

  - console-api

  - console-api-v2

2) Console ingress

Configure the ingress for accessing the console as follows.

cat <<EOF> spaceone-console-ingress.yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: console-ingress
  namespace: spaceone
  annotations:
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}]'
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/load-balancer-attributes: idle_timeout.timeout_seconds=600
    alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
    alb.ingress.kubernetes.io/success-codes: 200-399
    alb.ingress.kubernetes.io/load-balancer-name: spaceone-console-ingress # Caution!! Must be fewer than 32 characters.
spec:
  ingressClassName: alb
  defaultBackend:
    service:
      name: console
      port:
        number: 80
EOF

# Apply ingress

kubectl apply -f spaceone-console-ingress.yaml

When you apply the ingress, an AWS load balancer named spaceone-console-ingress is provisioned. You can connect through its DNS name using HTTP (port 80).
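Because the load-balancer-name annotation carries a length restriction, it can help to check a name before applying the manifest. The sketch below follows the caution in the manifest comment (fewer than 32 characters); the helper name lb_name_ok is hypothetical.

```shell
# lb_name_ok <name>
# Succeeds when the name is shorter than 32 characters, following the
# caution in the manifest comment.
lb_name_ok() {
  name=$1
  [ "${#name}" -lt 32 ]
}

# Usage:
#   lb_name_ok spaceone-console-ingress || echo "load balancer name is too long"
```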

3) REST API ingress

Configure the REST API ingress for the API service as follows.

cat <<EOF> spaceone-rest-ingress.yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: console-api-ingress
  namespace: spaceone
  annotations:
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}]'
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/load-balancer-attributes: idle_timeout.timeout_seconds=600
    alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
    alb.ingress.kubernetes.io/success-codes: 200-399
    alb.ingress.kubernetes.io/load-balancer-name: spaceone-console-api-ingress # Caution!! Must be fewer than 32 characters.
spec:
  ingressClassName: alb
  defaultBackend:
    service:
      name: console-api
      port:
        number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: console-api-v2-ingress
  namespace: spaceone
  annotations:
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}]'
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/load-balancer-attributes: idle_timeout.timeout_seconds=600
    alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
    alb.ingress.kubernetes.io/success-codes: 200-399
    alb.ingress.kubernetes.io/load-balancer-name: spaceone-console-api-v2-ingress
spec:
  ingressClassName: alb
  defaultBackend:
    service:
      name: console-api-v2-rest
      port:
        number: 80
EOF

# Apply ingress

kubectl apply -f spaceone-rest-ingress.yaml

The REST API ingress provisions two ALBs. Their DNS names must be saved as console.production_json.CONSOLE_API.ENDPOINT and console.production_json.CONSOLE_API_V2.ENDPOINT in the values.yaml file.

4) Check DNS Name

The DNS name is generated as http://{ingress-name}-{random}.{region-code}.elb.amazonaws.com. You can check it with the kubectl get ingress -n spaceone command.

kubectl get ingress -n spaceone

NAME CLASS HOSTS ADDRESS PORTS AGE

console-api-ingress alb * spaceone-console-api-ingress-xxxxxxxxxx.{region-code}.elb.amazonaws.com 80 15h

console-api-v2-ingress alb * spaceone-console-api-v2-ingress-xxxxxxxxxx.{region-code}.elb.amazonaws.com 80 15h

console-ingress alb * spaceone-console-ingress-xxxxxxxxxx.{region-code}.elb.amazonaws.com 80 15h

Alternatively, you can check it in the AWS Console under EC2 > Load balancer, as shown in the image below.
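For scripting, the DNS name can be read directly from the ingress status instead of from the table. The helper names ingress_hostname and ingress_endpoint below are hypothetical; the sketch assumes the load balancer has already been provisioned, so that the status field is populated.

```shell
# ingress_hostname <ingress-name> [namespace]
# Prints the load balancer DNS name recorded in the ingress status.
ingress_hostname() {
  kubectl get ingress "$1" -n "${2:-spaceone}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# ingress_endpoint <ingress-name> [namespace]
# Prints the HTTP URL to use as an ENDPOINT value in values.yaml.
ingress_endpoint() {
  printf 'http://%s\n' "$(ingress_hostname "$1" "$2")"
}

# Usage (requires access to the cluster):
#   ingress_endpoint console-api-ingress
#   ingress_endpoint console-api-v2-ingress
```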

5) Connect with DNS Name

When all ingresses are ready, edit the values.yaml file, restart the pods, and access the console.

console:
  production_json:
    # If you don't have a service domain, you refer to the following 'No Domain & IP Access' example.
    CONSOLE_API:
      ENDPOINT: http://spaceone-console-api-ingress-xxxxxxxxxx.{region-code}.elb.amazonaws.com
    CONSOLE_API_V2:
      ENDPOINT: http://spaceone-console-api-v2-ingress-xxxxxxxxxx.{region-code}.elb.amazonaws.com

After applying the prepared values.yaml file, restart the pods.

helm upgrade cloudforet cloudforet/spaceone -n spaceone -f values.yaml

kubectl delete po -n spaceone -l app.kubernetes.io/instance=cloudforet

Now you can connect to Cloudforet with the DNS Name of spaceone-console-ingress.

http://spaceone-console-ingress-xxxxxxxxxx.{region-code}.elb.amazonaws.com

Advanced ingress settings

How to register an SSL certificate

We will guide you through how to register a certificate in ingress for SSL communication.

There are two methods for registering a certificate: using ACM (AWS Certificate Manager), or registering an external certificate.

How to register an ACM certificate with ingress

If the certificate was issued through ACM, you can register the SSL certificate by simply adding its ACM ARN to the ingress.

First of all, please refer to the AWS official guide document on how to issue a certificate.

The issued certificate is registered as follows. Compare the options added or changed for SSL communication against the existing ingress.

Various settings for SSL are added and changed. Check the contents of metadata.annotations.

Also check the added contents such as ssl-redirect and spec.rules.host in spec.rules.

- spaceone-console-ingress.yaml

  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: console-ingress
    namespace: spaceone
    annotations:
+     alb.ingress.kubernetes.io/actions.ssl-redirect: '{"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"}}'
+     alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS":443}]'
-     alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}]'
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/load-balancer-attributes: idle_timeout.timeout_seconds=600
      alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
+     alb.ingress.kubernetes.io/certificate-arn: "arn:aws:acm:..." # Change the certificate-arn
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: spaceone-console-ingress # Caution!! Must be fewer than 32 characters.
  spec:
    ingressClassName: alb
-   defaultBackend:
-     service:
-       name: console
-       port:
-         number: 80
+   rules:
+     - http:
+         paths:
+           - path: /*
+             pathType: ImplementationSpecific
+             backend:
+               service:
+                 name: ssl-redirect
+                 port:
+                   name: use-annotation
+     - host: "console.example.com" # Change the hostname
+       http:
+         paths:
+           - path: /*
+             pathType: ImplementationSpecific
+             backend:
+               service:
+                 name: console
+                 port:
+                   number: 80

- spaceone-rest-ingress.yaml

  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: console-api-ingress
    namespace: spaceone
    annotations:
+     alb.ingress.kubernetes.io/actions.ssl-redirect: '{"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"}}'
+     alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS":443}]'
-     alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}]'
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/load-balancer-attributes: idle_timeout.timeout_seconds=600
      alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
+     alb.ingress.kubernetes.io/certificate-arn: "arn:aws:acm:..." # Change the certificate-arn
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: spaceone-console-api-ingress # Caution!! Must be fewer than 32 characters.
  spec:
    ingressClassName: alb
-   defaultBackend:
-     service:
-       name: console-api
-       port:
-         number: 80
+   rules:
+     - http:
+         paths:
+           - path: /*
+             pathType: ImplementationSpecific
+             backend:
+               service:
+                 name: ssl-redirect
+                 port:
+                   name: use-annotation
+     - host: "console.api.example.com" # Change the hostname
+       http:
+         paths:
+           - path: /*
+             pathType: ImplementationSpecific
+             backend:
+               service:
+                 name: console-api
+                 port:
+                   number: 80

  ---
  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: console-api-v2-ingress
    namespace: spaceone
    annotations:
+     alb.ingress.kubernetes.io/actions.ssl-redirect: '{"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"}}'
+     alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS":443}]'
-     alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}]'
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/load-balancer-attributes: idle_timeout.timeout_seconds=600
      alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
+     alb.ingress.kubernetes.io/certificate-arn: "arn:aws:acm:..." # Change the certificate-arn
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: spaceone-console-api-v2-ingress
  spec:
    ingressClassName: alb
-   defaultBackend:
-     service:
-       name: console-api-v2-rest
-       port:
-         number: 80
+   rules:
+     - http:
+         paths:
+           - path: /*
+             pathType: ImplementationSpecific
+             backend:
+               service:
+                 name: ssl-redirect
+                 port:
+                   name: use-annotation
+     - host: "console-v2.api.example.com" # Change the hostname
+       http:
+         paths:
+           - path: /*
+             pathType: ImplementationSpecific
+             backend:
+               service:
+                 name: console-api-v2-rest
+                 port:
+                   number: 80

The SSL configuration takes effect once the changes are applied with the kubectl command.

kubectl apply -f spaceone-console-ingress.yaml

kubectl apply -f spaceone-rest-ingress.yaml
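Once the ingresses are updated, plain HTTP requests should be answered with the redirect configured in the ssl-redirect action (HTTP_301). The sketch below checks this with curl; the helper names http_status and redirects_to_https are hypothetical, and console.example.com stands in for your own hostname.

```shell
# http_status <url>
# Prints only the HTTP status code of the response, without following redirects.
http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# redirects_to_https <url>
# Succeeds when the server answers with 301, the status code set in the
# ssl-redirect annotation.
redirects_to_https() {
  [ "$(http_status "$1")" = "301" ]
}

# Usage (requires network access to the load balancer):
#   redirects_to_https http://console.example.com && echo "redirect is active"
```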

How to register an SSL/TLS certificate

Certificate registration is possible even if you have an external certificate that was previously issued. You can register by adding a Kubernetes secret using the issued certificate and declaring the added secret name in ingress.

Create SSL/TLS certificates as Kubernetes secrets. There are two ways:

1. Using yaml file

You can define the secret in a yaml file and apply it using the commands below. The values under data must be base64-encoded.

cat <<EOF> tls-secret.yaml
apiVersion: v1
data:
  tls.crt: {your crt} # base64-encoded crt
  tls.key: {your key} # base64-encoded key
kind: Secret
metadata:
  name: tlssecret
  namespace: spaceone
type: kubernetes.io/tls
EOF

kubectl apply -f tls-secret.yaml
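Values in the data field of a Secret are base64-encoded and must sit on a single line. As a rough sketch (the helper name b64_one_line is hypothetical), the encoded value for each file can be produced like this:

```shell
# b64_one_line <file>
# Prints the base64 encoding of the file on one line, suitable for the
# tls.crt and tls.key values of a Secret manifest.
b64_one_line() {
  base64 < "$1" | tr -d '\n'
}

# Usage:
#   b64_one_line tls.crt
#   b64_one_line tls.key
```

Stripping newlines matters because some base64 implementations wrap long output.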

2. How to use the command if a file exists

If you have a crt and key file, you can create the secret in the spaceone namespace, where the ingress is deployed, using the following command.

kubectl create secret tls tlssecret --key tls.key --cert tls.crt -n spaceone

Add tls secret to Ingress

Modify ingress using registered secret information.

ingress-nginx settings

Using secret and tls may require setup methods using ingress-nginx. For more information, please refer to the following links:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: console-ingress
  namespace: spaceone
  annotations:
    alb.ingress.kubernetes.io/actions.ssl-redirect: '{"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"}}'
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS":443}]'
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/load-balancer-attributes: idle_timeout.timeout_seconds=600
    alb.ingress.kubernetes.io/healthcheck-protocol: HTTP
    alb.ingress.kubernetes.io/success-codes: 200-399
    alb.ingress.kubernetes.io/load-balancer-name: spaceone-console-ingress # Caution!! Must be fewer than 32 characters.
spec:
  tls:
    - hosts:
        - console.example.com # Change the hostname
      secretName: tlssecret # Insert secret name
  rules:
    - http:
        paths:
          - path: /*
            pathType: ImplementationSpecific
            backend:
              service:
                name: ssl-redirect
                port:
                  name: use-annotation
    - host: "console.example.com" # Change the hostname
      http:
        paths:
          - path: /*
            pathType: ImplementationSpecific
            backend:
              service:
                name: console
                port:
                  number: 80

3.2.2 - On Premise

This section describes how to install Cloudforet in an On-Premise environment.

Prerequisites

Kubernetes 1.21+ : https://kubernetes.io/docs/setup/

Kubectl command-line tool : https://kubernetes.io/docs/tasks/tools/

Helm 3.11.0+ : https://helm.sh/docs/intro/install/

Nginx Ingress Controller : https://kubernetes.github.io/ingress-nginx/deploy/

Install Cloudforet

This guide explains how to install Cloudforet using the Helm chart. Related information is also available at: https://github.com/cloudforet-io/charts

1. Add Helm Repository

helm repo add cloudforet https://cloudforet-io.github.io/charts

helm repo update

helm search repo

2. Create Namespaces

kubectl create ns spaceone

kubectl create ns spaceone-plugin

Cautions when creating namespaces

If you need to use only one namespace, you do not need to create the spaceone-plugin namespace.

If changing the Cloudforet namespace, please refer to the following link. Change K8S Namespace

3. Create Role and RoleBinding

In a general situation where namespaces are not merged, supervisor distributes plugins from spaceone namespace to spaceone-plugin namespace, so roles and rolebindings are required as follows. Check the contents at the following link. https://github.com/cloudforet-io/charts/blob/master/examples/rbac.yaml

The permissions are detailed below. You can edit the file to adjust them if needed.

Create file

cat <<EOF> rbac.yaml
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: supervisor-plugin-control-role
  namespace: spaceone-plugin
rules:
- apiGroups:
  - "*"
  resources:
  - replicaSets
  - pods
  - deployments
  - services
  - endpoints
  verbs:
  - get
  - list
  - watch
  - create
  - delete
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: supervisor-role-binding
  namespace: spaceone-plugin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: supervisor-plugin-control-role
subjects:
- kind: ServiceAccount
  name: default
  namespace: spaceone
EOF

Apply the permissions with the command below. If you have changed the namespace, enter the changed namespace instead. (Be careful with the namespace.)

kubectl apply -f rbac.yaml -n spaceone-plugin
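After applying the Role and RoleBinding, you can confirm that the default service account in the spaceone namespace really has the expected permissions in spaceone-plugin. The following is a sketch using kubectl auth can-i with impersonation; the function name check_supervisor_rbac is hypothetical, it checks only a subset of the resources and verbs listed in rbac.yaml, and it requires that your own user is allowed to impersonate service accounts.

```shell
# check_supervisor_rbac
# Verifies a subset of the permissions granted by rbac.yaml by impersonating
# the default service account of the spaceone namespace.
check_supervisor_rbac() {
  for res in pods deployments services; do
    for verb in get list create delete; do
      kubectl auth can-i "$verb" "$res" -n spaceone-plugin \
        --as=system:serviceaccount:spaceone:default >/dev/null || {
        echo "missing: $verb $res"
        return 1
      }
    done
  done
  echo "ok"
}

# Usage (requires access to the cluster):
#   check_supervisor_rbac
```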

4. Install

Proceed with the installation using the helm command below.

helm install cloudforet cloudforet/spaceone -n spaceone

After entering the command, you can see that pods are created in the spaceone namespace as shown below.

kubectl get pod -n spaceone

NAME READY STATUS RESTARTS AGE

board-64f468ccd6-v8wx4 1/1 Running 0 4m16s

config-6748dc8cf9-4rbz7 1/1 Running 0 4m14s

console-767d787489-wmhvp 1/1 Running 0 4m15s

console-api-846867dc59-rst4k 2/2 Running 0 4m16s

console-api-v2-rest-79f8f6fb59-7zcb2 2/2 Running 0 4m16s

cost-analysis-5654566c95-rlpkz 1/1 Running 0 4m13s

cost-analysis-scheduler-69d77598f7-hh8qt 0/1 CrashLoopBackOff 3 (39s ago) 4m13s

cost-analysis-worker-68755f48bf-6vkfv 1/1 Running 0 4m15s

cost-analysis-worker-68755f48bf-7sj5j 1/1 Running 0 4m15s

cost-analysis-worker-68755f48bf-fd65m 1/1 Running 0 4m16s

cost-analysis-worker-68755f48bf-k6r99 1/1 Running 0 4m15s

dashboard-68f65776df-8s4lr 1/1 Running 0 4m12s

file-manager-5555876d89-slqwg 1/1 Running 0 4m16s

identity-6455d6f4b7-bwgf7 1/1 Running 0 4m14s

inventory-fc6585898-kjmwx 1/1 Running 0 4m13s

inventory-scheduler-6dd9f6787f-k9sff 0/1 CrashLoopBackOff 4 (21s ago) 4m15s

inventory-worker-7f6d479d88-59lxs 1/1 Running 0 4m12s

mongodb-6b78c74d49-vjxsf 1/1 Running 0 4m14s

monitoring-77d9bd8955-hv6vp 1/1 Running 0 4m15s

monitoring-rest-75cd56bc4f-wfh2m 2/2 Running 0 4m16s

monitoring-scheduler-858d876884-b67tc 0/1 Error 3 (33s ago) 4m12s

monitoring-worker-66b875cf75-9gkg9 1/1 Running 0 4m12s

notification-659c66cd4d-hxnwz 1/1 Running 0 4m13s

notification-scheduler-6c9696f96-m9vlr 1/1 Running 0 4m14s

notification-worker-77865457c9-b4dl5 1/1 Running 0 4m16s

plugin-558f9c7b9-r6zw7 1/1 Running 0 4m13s

plugin-scheduler-695b869bc-d9zch 0/1 Error 4 (59s ago) 4m15s

plugin-worker-5f674c49df-qldw9 1/1 Running 0 4m16s

redis-566869f55-zznmt 1/1 Running 0 4m16s

repository-8659578dfd-wsl97 1/1 Running 0 4m14s

secret-69985cfb7f-ds52j 1/1 Running 0 4m12s

statistics-98fc4c955-9xtbp 1/1 Running 0 4m16s

statistics-scheduler-5b6646d666-jwhdw 0/1 CrashLoopBackOff 3 (27s ago) 4m13s

statistics-worker-5f9994d85d-ftpwf 1/1 Running 0 4m12s

supervisor-scheduler-74c84646f5-rw4zf 2/2 Running 0 4m16s

If some of the scheduler pods are failing while the rest of the pods are up, this is the expected state for now. The scheduler problem is resolved by upgrading the chart with a values.yaml file after issuing a token through the initializer.

5. Initialize the configuration

This is a task for Cloudforet's domain creation. A root domain is created and a root token is issued through the initializer.

spaceone-initializer can be found on the following cloudforet-io github site. https://github.com/cloudforet-io/spaceone-initializer

The initializer.yaml file to be used here can be found at the following link. https://github.com/cloudforet-io/charts/blob/master/examples/initializer.yaml

You can change the domain name, domain_owner.id/password, etc. in the initializer.yaml file.

Create file

cat <<EOF> initializer.yaml
main:
  import:
    - /root/spacectl/apply/root_domain.yaml
    - /root/spacectl/apply/create_managed_repository.yaml
    - /root/spacectl/apply/user_domain.yaml
    - /root/spacectl/apply/create_role.yaml
    - /root/spacectl/apply/add_statistics_schedule.yaml
    - /root/spacectl/apply/print_api_key.yaml
  var:
    domain:
      root: root
      user: spaceone
    default_language: ko
    default_timezone: Asia/Seoul
    domain_owner:
      id: admin
      password: Admin123!@# # Change your password
    user:
      id: system_api_key
EOF

After editing the file, execute the initializer with the command below.

helm install initializer cloudforet/spaceone-initializer -n spaceone -f initializer.yaml

After execution, an initializer pod is created in the specified spaceone namespace and domain creation is performed. You can check the log when the pod is in Completed state.

6. Set the Helm Values and Upgrade the chart

To customize the default installed helm chart, the values.yaml file is required.

A typical example of a values.yaml file can be found at the following link. https://github.com/cloudforet-io/charts/blob/master/examples/values/all.yaml

To solve the scheduler problem, check the pod log in Completed status as shown below to obtain an admin token.

kubectl logs initializer-5f5b7b5cdc-abcd1 -n spaceone

(omit)

TASK [Print Admin API Key] *********************************************************************************************

"{TOKEN}"

FINISHED [ ok=23, skipped=0 ] ******************************************************************************************

FINISH SPACEONE INITIALIZE

Create a values.yaml file using the token value obtained from the initializer pod log. Inside the file, you can declare app settings, namespace changes, kubernetes options changes, etc.

The following describes how to configure the console domain in the values.yaml file and how to use the issued token as a global config.

console:
  production_json:
    # If you don't have a service domain, you refer to the following 'No Domain & IP Access' example.
    CONSOLE_API:
      ENDPOINT: https://console.api.example.com # Change the endpoint
    CONSOLE_API_V2:
      ENDPOINT: https://console-v2.api.example.com # Change the endpoint
global:
  shared_conf:
    TOKEN: '{TOKEN}' # Change the system token

After setting the values.yaml file as above, execute the helm upgrade operation with the command below. After the upgrade is finished, delete all app instances related to cloudforet so that all pods are restarted.

helm upgrade cloudforet cloudforet/spaceone -n spaceone -f values.yaml

kubectl delete po -n spaceone -l app.kubernetes.io/instance=cloudforet

7. Check the status of the pods

Check the status of the pod with the following command. If all pods are in Running state, the installation is complete.

kubectl get pod -n spaceone

8. Configuration Ingress

Kubernetes Ingress is a resource that manages connections between services in a cluster and external connections. Cloudforet is serviced by registering the generated certificate as a secret and adding an ingress in the order below.

Install Nginx Ingress Controller

An ingress controller is required to use ingress in an on-premise environment. Here is a link to the installation guide for Nginx Ingress Controller supported by Kubernetes.

- Nginx Ingress Controller : https://kubernetes.github.io/ingress-nginx/deploy/

Generate self-managed SSL

Create a private SSL certificate using the openssl commands below. (If you already have an issued certificate, you can create a Secret from it instead. For detailed instructions, please refer to the following link. Create secret by exist cert)

console

- *.{domain}

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout console_ssl.pem -out console_ssl.csr -subj "/CN=*.{domain}/O=spaceone" -addext "subjectAltName = DNS:*.{domain}"

api

- *.api.{domain}

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout api_ssl.pem -out api_ssl.csr -subj "/CN=*.api.{domain}/O=spaceone" -addext "subjectAltName = DNS:*.api.{domain}"
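Before turning the generated files into secrets, it can be useful to confirm that a certificate is valid for as long as you expect. The sketch below relies on the -checkend option of openssl x509; the helper name cert_valid_for_days is hypothetical.

```shell
# cert_valid_for_days <cert-file> <days>
# Succeeds when the certificate will still be valid after the given number of days.
cert_valid_for_days() {
  openssl x509 -in "$1" -noout -checkend "$(( $2 * 86400 ))" >/dev/null
}

# Usage:
#   cert_valid_for_days console_ssl.csr 30 || echo "console certificate expires soon"
#   cert_valid_for_days api_ssl.csr 30 || echo "api certificate expires soon"
```

Note that, despite the .csr extension used in the commands above, the -x509 option makes openssl write a self-signed certificate to those files.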

Create secret for ssl

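If the certificate is ready, create a secret using the certificate file. Create the secrets in the spaceone namespace, because an ingress can only reference secrets in its own namespace.

kubectl create secret tls console-ssl --key console_ssl.pem --cert console_ssl.csr -n spaceone

kubectl create secret tls api-ssl --key api_ssl.pem --cert api_ssl.csr -n spaceone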

Create Ingress

Prepare the two ingress files below. These ingress files can be downloaded from the following link.

console_ingress.yaml : https://github.com/cloudforet-io/charts/blob/master/examples/ingress/on_premise/console_ingress.yaml

rest_api_ingress.yaml : https://github.com/cloudforet-io/charts/blob/master/examples/ingress/on_premise/rest_api_ingress.yaml

Each file is as follows. Change the hostname inside the file to match the domain of the certificate you created.

console

cat <<EOF> console_ingress.yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: console-ingress
  namespace: spaceone
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - "console.example.com" # Change the hostname
    secretName: spaceone-tls # Change to the name of the secret you created (for example, console-ssl)
  rules:
    - host: "console.example.com" # Change the hostname
      http:
        paths:
        - path: /
          pathType: Prefix
          backend:
            service:
              name: console
              port:
                number: 80
EOF

rest_api

cat <<EOF> rest_api_ingress.yaml
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: console-api-ingress
  namespace: spaceone
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - "*.api.example.com" # Change the hostname
    secretName: spaceone-tls # Change to the name of the secret you created (for example, api-ssl)
  rules:
    - host: "console.api.example.com" # Change the hostname
      http:
        paths:
        - path: /
          pathType: Prefix
          backend:
            service:
              name: console-api
              port:
                number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: console-api-v2-ingress
  namespace: spaceone
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - "*.api.example.com" # Change the hostname
    secretName: spaceone-tls # Change to the name of the secret you created (for example, api-ssl)
  rules:
    - host: "console-v2.api.example.com" # Change the hostname
      http:
        paths:
        - path: /
          pathType: Prefix
          backend:
            service:
              name: console-api-v2-rest
              port:
                number: 80
EOF

Create the prepared ingress in the spaceone namespace with the command below.

kubectl apply -f console_ingress.yaml -n spaceone

kubectl apply -f rest_api_ingress.yaml -n spaceone

Connect to the Console

Connect to the Cloudforet Console service.

Advanced Configurations

Additional settings are required for the following special features. Below are examples and solutions for each situation.

| Name | Description |

|---|---|

| Set Plugin Certificate | This is how to set a certificate for each plugin when using a private certificate. |

| Support Private Image Registry | In an environment where communication with the outside is blocked for organization's security reasons, you can operate your own Private Image Registry. In this case, Container Image Sync operation is required, and Cloudforet suggests a method using the dregsy tool. |

| Change K8S Namespace | Namespace usage is limited by each environment, or you can use your own namespace name. Here is how to change Namespace in Cloudforet. |

| Set HTTP Proxy | In the on-premise environment with no Internet connection, proxy settings are required to communicate with the external world. Here's how to set up HTTP Proxy. |

| Set K8S ImagePullSecrets | If you are using Private Image Registry, you may need credentials because user authentication is set. In Kubernetes, you can use secrets to register credentials with pods. Here's how to set ImagePullSecrets. |

3.3 - Configuration

This section introduces the settings for using Cloudforet.

3.3.1 - Set plugin certificate

Describes how to set up private certificates for plugins used in Cloudforet.

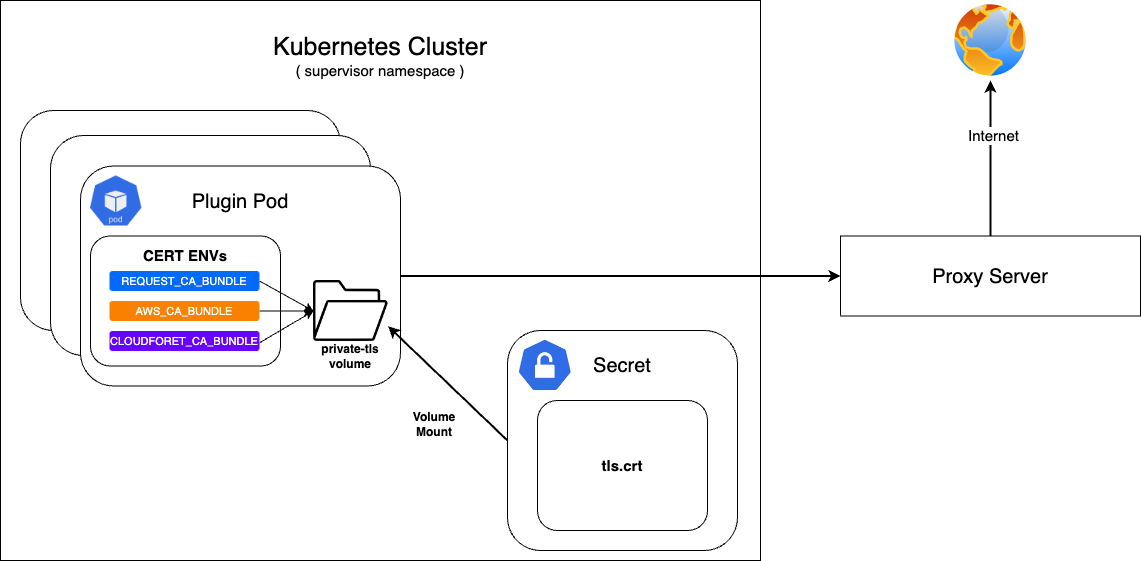

If Cloudforet is built in an on-premise environment, it may reach the Internet through a proxy server rather than communicating with it directly.

In this case, a private certificate is required when communicating with the proxy server.

First, configure the secret with the prepared private certificate and mount it on the private-tls volume.

After that, set the environment variables required for the certificate in supervisor's KubernetesConnector to the path of tls.crt in the private-tls volume.

Register the prepared private certificate as a Kubernetes Secret

| Parameter | Description | Default |

|---|---|---|

| apiVersion | API version of resource | v1 |

| kind | Kind of resource | Secret |

| metadata | Metadata of resource | {...} |

| metadata.name | Name of resource | private-tls |

| metadata.namespace | Namespace of resource | spaceone |

| data | Data of resource | tls.crt |

| type | Type of resource | kubernetes.io/tls |

kubectl apply -f create_tls_secret.yml

---
apiVersion: v1
kind: Secret
metadata:
  name: private-tls
  namespace: spaceone
data:
  tls.crt: base64 encoded cert # openssl base64 -in cert.pem -out cert.base64
type: kubernetes.io/tls

Set up on KubernetesConnector of supervisor

| Parameter | Description | Default |
|---|---|---|
| supervisor.application_scheduler | Configuration of supervisor scheduler | {...} |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.env[] | Environment variables for plugin | [...] |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.env[].name | Name of environment variable | REQUESTS_CA_BUNDLE, AWS_CA_BUNDLE, CLOUDFORET_CA_BUNDLE |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.env[].value | Value of environment variable | /opt/ssl/cert/tls.crt |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.volumes[] | Volumes for plugin | [...] |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.volumes[].name | Name of volumes | private-tls |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.volumes[].secret.secretName | Secret name of secret volume | private-tls |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.volumeMounts[] | Volume mounts of plugins | [...] |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.volumeMounts[].name | Name of volume mounts | private-tls |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.volumeMounts[].mountPath | Path of volume mounts | /opt/ssl/cert/tls.crt |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.volumeMounts[].readOnly | Read permission on the mounted volume | true |

supervisor:
  enabled: true
  image:
    name: spaceone/supervisor
    version: x.y.z
  imagePullSecrets:
    - name: my-credential
  application_scheduler:
    CONNECTORS:
      KubernetesConnector:
        env:
          - name: REQUESTS_CA_BUNDLE
            value: /opt/ssl/cert/tls.crt
          - name: AWS_CA_BUNDLE
            value: /opt/ssl/cert/tls.crt
          - name: CLOUDFORET_CA_BUNDLE
            value: /opt/ssl/cert/tls.crt
        volumes:
          - name: private-tls
            secret:
              secretName: private-tls
        volumeMounts:
          - name: private-tls
            mountPath: /opt/ssl/cert/tls.crt
            readOnly: true

Update

Apply the changes by running the helm upgrade command and then deleting the pods so that they are recreated with the new configuration.

helm upgrade cloudforet cloudforet/spaceone -n spaceone -f values.yaml

kubectl delete po -n spaceone -l app.kubernetes.io/instance=cloudforet

3.3.2 - Change kubernetes namespace

This section describes how to move the core service or the plugin service to a namespace with a different name.

When Cloudforet is installed on Kubernetes, the core service is installed in the spaceone namespace, and the plugin service, which provides extension functions, is installed in the spaceone-plugin namespace. (In v1.11.5 and below, the plugin service is installed in root-supervisor.)

To move the core service or the plugin service to a differently named namespace, or to install both in a single namespace, change the namespace through options.

These options are set in Cloudforet's values.yaml. The core service and the plugin service can each be changed separately.

Change the namespace of the core service

To change the namespace of the core service, add the spaceone-namespace value by declaring global.namespace in the values.yaml file.

#console:
#  production_json:
#    CONSOLE_API:
#      ENDPOINT: https://console.api.example.com # Change the endpoint
#    CONSOLE_API_V2:
#      ENDPOINT: https://console-v2.api.example.com # Change the endpoint

global:
  namespace: spaceone-namespace # Change the namespace
  shared_conf:

Change the namespace of plugin service

You can change the namespace of the plugin service as well as that of the core service. The supervisor manages the life cycle of the plugin service, so the plugin namespace is also configured in the supervisor settings.

The following part of the values.yaml file configures the supervisor to change the namespace of the plugin service. Add the plugin-namespace value to supervisor.application_scheduler.CONNECTORS.KubernetesConnector.namespace.

#console:
supervisor:
  application_scheduler:
    HOSTNAME: spaceone.svc.cluster.local # Change the hostname
    CONNECTORS:
      KubernetesConnector:
        namespace: plugin-namespace # Change the namespace

Update

Apply the changes by running the helm upgrade command and then deleting the pods so that they are recreated with the new configuration.

helm upgrade cloudforet cloudforet/spaceone -n spaceone -f values.yaml

kubectl delete po -n spaceone -l app.kubernetes.io/instance=cloudforet

3.3.3 - Creating and applying kubernetes imagePullSecrets

This section explains how to enable Cloudforet pods to pull private container images using imagePullSecrets.

To meet its security requirements, an organization may build and use a dedicated private image registry to manage private images.

To pull container images from a private image registry, credentials are required. In Kubernetes, Secrets can be used to register such credentials with pods, enabling them to retrieve and pull private container images.

For more detailed information, please refer to the official documentation.

Creating a Secret for credentials.

Kubernetes pods can pull private container images using a Secret of type kubernetes.io/dockerconfigjson.

To do this, create a secret for credentials based on registry credentials.

kubectl create secret docker-registry my-credential --docker-server=<your-registry-server> --docker-username=<your-name> --docker-password=<your-pword> --docker-email=<your-email>
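For reference, a Secret of type kubernetes.io/dockerconfigjson stores a JSON document whose auth field is the base64 encoding of username:password. A minimal sketch of that payload, which can help when checking what an existing Secret contains (the function name and sample values are hypothetical, and the values are assumed to contain no characters that need JSON escaping):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a .dockerconfigjson payload for one registry.
docker_config_json() {
  local server="$1" user="$2" password="$3" email="$4"
  local auth
  # The auth field is base64("username:password") on a single line.
  auth="$(printf '%s:%s' "$user" "$password" | base64 | tr -d '\n')"
  printf '{"auths":{"%s":{"username":"%s","password":"%s","email":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$password" "$email" "$auth"
}

# Usage (all values are placeholders):
#   docker_config_json registry.example.com alice s3cret alice@example.com
```

In normal use the kubectl command above is sufficient; the sketch only illustrates the structure of the stored data.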

Mount the credentials Secret to a Pod.

You can specify imagePullSecrets in the helm chart values of Cloudforet to mount the credentials Secret to the pods.

WARN: Kubernetes Secrets are namespace-scoped resources, so the Secret must exist in the same namespace as the pods that use it.

Set imagePullSecrets configuration for the core service

| Parameter | Description | Default |
|---|---|---|
| [services].imagePullSecrets[] | imagePullSecrets configuration (in each microservice section) | [] |
| [services].imagePullSecrets[].name | Name of a Secret of type kubernetes.io/dockerconfigjson | "" |

console:
  enable: true
  image:
    name: spaceone/console
    version: x.y.z
  imagePullSecrets:
    - name: my-credential

console-api:
  enable: true
  image:
    name: spaceone/console-api
    version: x.y.z
  imagePullSecrets:
    - name: my-credential

(...)

Set imagePullSecrets configuration for the plugin

| Parameter | Description | Default |
|---|---|---|
| supervisor.application_scheduler | Configuration of supervisor scheduler | {...} |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.imagePullSecrets[] | imagePullSecrets configuration for plugin | [] |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.imagePullSecrets[].name | Name of a Secret of type kubernetes.io/dockerconfigjson for plugin | "" |

supervisor:
  enabled: true
  image:
    name: spaceone/supervisor
    version: x.y.z
  imagePullSecrets:
    - name: my-credential
  application_scheduler:
    CONNECTORS:
      KubernetesConnector:
        imagePullSecrets:
          - name: my-credential

Update

Apply the changes by running the helm upgrade command and then deleting the pods so that they are recreated with the new configuration.

helm upgrade cloudforet cloudforet/spaceone -n spaceone -f values.yaml

kubectl delete po -n spaceone -l app.kubernetes.io/instance=cloudforet

3.3.4 - Setting up http proxy

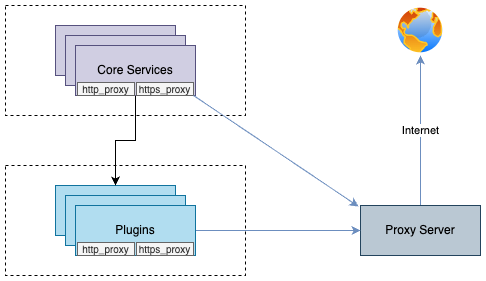

This section explains the http_proxy configuration that lets a Kubernetes pod connect through a proxy.

You can enable communication from pods to external networks through a proxy server by declaring the http_proxy and https_proxy environment variables in each container.

The no_proxy environment variable is used to exclude destinations from proxy communication.

For Cloudforet, it is recommended to exclude the service domains within the cluster so that communication between microservices does not go through the proxy.

Example

Set proxy configuration for the core service

| Parameter | Description | Default |
|---|---|---|
| global.common_env[] | Environment variables for all microservices | [] |
| global.common_env[].name | Name of environment variable | "" |
| global.common_env[].value | Value of environment variable | "" |

global:
  common_env:
    - name: HTTP_PROXY
      value: http://{proxy_server_address}:{proxy_port}
    - name: HTTPS_PROXY
      value: http://{proxy_server_address}:{proxy_port}
    - name: no_proxy
      value: .svc.cluster.local,localhost,{cluster_ip},board,config,console,console-api,console-api-v2,cost-analysis,dashboard,docs,file-manager,identity,inventory,marketplace-assets,monitoring,notification,plugin,repository,secret,statistics,supervisor
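The no_proxy value above is a comma-separated list made up of the cluster's local domain suffix, localhost, the cluster IP, and the short service names. A minimal sketch of assembling such a value, assuming you supply your own domain suffix, IP, and service list (the function name build_no_proxy and the sample IP are hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: join the local domain suffix, localhost, the
# cluster IP, and any number of service names into one no_proxy value.
build_no_proxy() {
  local domain_suffix="$1" cluster_ip="$2"
  shift 2
  local value="${domain_suffix},localhost,${cluster_ip}"
  local service
  for service in "$@"; do
    value="${value},${service}"
  done
  printf '%s\n' "$value"
}

# Usage (10.0.0.1 is a placeholder for {cluster_ip}):
#   build_no_proxy .svc.cluster.local 10.0.0.1 board config console
```

Generating the value this way keeps the core service and plugin settings identical when the service list changes.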

Set proxy configuration for the plugin

| Parameter | Description | Default |
|---|---|---|
| supervisor.application_scheduler | Configuration of supervisor scheduler | {...} |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.env[] | Environment variables for plugin | [] |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.env[].name | Name of environment variable | "" |
| supervisor.application_scheduler.CONNECTORS.KubernetesConnector.env[].value | Value of environment variable | "" |

WARN:

Depending on your installation environment, the default local domain may differ, so change the default local domain, such as .svc.cluster.local, to match your environment. You can check the current cluster DNS settings with the following command.

kubectl run -it --rm busybox --image=busybox --restart=Never -- cat /etc/resolv.conf
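The command above prints the pod's /etc/resolv.conf, whose search line typically lists a namespace-qualified domain first, followed by the svc domain. A minimal sketch of picking the svc domain out of that output, assuming the usual search line layout (the function name cluster_svc_domain is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: read resolv.conf content on stdin and print the
# first search domain that starts with "svc.", prefixed with a dot.
cluster_svc_domain() {
  awk '$1 == "search" {
    for (i = 2; i <= NF; i++) {
      if ($i ~ /^svc\./) { print "." $i; exit }
    }
  }'
}

# Usage: pipe the resolv.conf content into the function, for example
#   cat /etc/resolv.conf | cluster_svc_domain
```

The printed suffix is the value to use in place of .svc.cluster.local in the no_proxy setting.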

supervisor:
  enabled: true
  image:
    name: spaceone/supervisor
    version: x.y.z
  imagePullSecrets:
    - name: my-credential
  application_scheduler:
    CONNECTORS:
      KubernetesConnector:
        env:
          - name: HTTP_PROXY
            value: http://{proxy_server_address}:{proxy_port}
          - name: HTTPS_PROXY
            value: http://{proxy_server_address}:{proxy_port}
          - name: no_proxy
            value: .svc.cluster.local,localhost,{cluster_ip},board,config,console,console-api,console-api-v2,cost-analysis,dashboard,docs,file-manager,identity,inventory,marketplace-assets,monitoring,notification,plugin,repository,secret,statistics,supervisor

Update

Apply the changes by running the helm upgrade command and then deleting the pods so that they are recreated with the new configuration.

helm upgrade cloudforet cloudforet/spaceone -n spaceone -f values.yaml

kubectl delete po -n spaceone -l app.kubernetes.io/instance=cloudforet

3.3.5 - Support private image registry

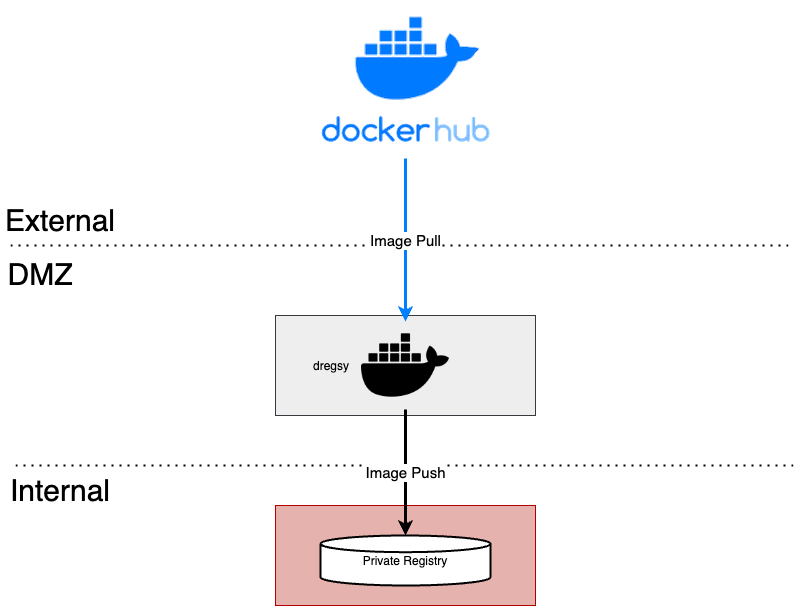

Cloudforet proposes a way to sync container images between private and public image registries.

Organizations operating in an on-premise environment sometimes establish and operate their own container registry within the internal network for security reasons.

In such environments, access to external networks is restricted when installing Cloudforet, so the images must be fetched from Dockerhub and synced to the organization's own container registry.

To automate the synchronization of container images in such scenarios, Cloudforet proposes using a Container Registry Sync tool called 'dregsy' to periodically sync container images.

dregsy runs in an environment situated between the external network and the internal network.

This tool periodically pulls specific container images from Dockerhub and uploads them to the organization's private container registry.

NOTE:

The dregsy tool described in this guide always pulls container images from Dockerhub, regardless of whether the images already exist in the destination registry.

Docker Hub also limits the number of Docker image downloads (pulls) based on the account type of the user pulling the image:

- For anonymous users, the rate limit is 100 pulls per 6 hours per IP address.
- For authenticated users, it is 200 pulls per 6-hour period.
- Users with a paid Docker subscription get up to 5000 pulls per day.
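Because every sync run pulls each image again, the number of synced images and the sync interval together determine whether a rate limit is reached. A rough estimate can be sketched as follows, assuming each run counts as exactly one pull per image tag (the function name and the sample numbers are hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: estimate the number of pulls within a rate-limit
# window. Assumes each sync run pulls every image tag exactly once.
estimate_pulls() {
  local image_count="$1" interval_seconds="$2" window_seconds="$3"
  # Number of sync runs that start within the window, rounded up.
  local runs=$(( (window_seconds + interval_seconds - 1) / interval_seconds ))
  echo $(( image_count * runs ))
}

# Usage: 30 image tags synced every 6 hours, measured over a 6-hour window
#   estimate_pulls 30 21600 21600
```

Comparing the result with the limits listed above gives a quick indication of whether an authenticated or paid account is needed.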

Install and Configuration

NOTE:

In this configuration, communication with Dockerhub is required, so it should be performed in an environment with internet access.

Also, this explanation is based on the installation of Cloudforet version 1.11.x.

Prerequisite

- docker (Install Docker Engine)

Installation

Since the tools are executed using Docker, there is no separate installation process required.

Instead, pull and run the dregsy image, which includes skopeo (the mirroring tool).

Configuration

- Create files

touch /path/to/your/dregsy-spaceone-core.yaml

touch /path/to/your/dregsy-spaceone-plugin.yaml

- Add configuration (dregsy-spaceone-core.yaml)

If authentication to the registry uses username:password, encode the credentials and set the result in the 'auth' field (the auth fields under source and target in the configuration below).

echo '{"username": "...", "password": "..."}' | base64

In the case of Harbor, Robot Token is not supported for authentication.

Please authenticate by encoding the username:password.
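The auth value described above is the base64 encoding of a small JSON document holding the username and password. A minimal sketch of generating it as a single line, assuming credentials that contain no characters needing JSON escaping (the function name dregsy_auth and the sample credentials are hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a value for an 'auth' field.
dregsy_auth() {
  local user="$1" password="$2"
  # Mirrors: echo '{"username": "...", "password": "..."}' | base64
  # Newlines are stripped because base64 may wrap long output.
  printf '{"username": "%s", "password": "%s"}\n' "$user" "$password" \
    | base64 | tr -d '\n'
}

# Usage (placeholder credentials):
#   dregsy_auth alice s3cret
```

The printed string replaces the {Token} placeholder of the corresponding registry.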

relay: skopeo
watch: true

skopeo:
  binary: skopeo
  certs-dir: /etc/skopeo/certs.d

lister:
  maxItems: 100
  cacheDuration: 2h

tasks:
  - name: sync_spaceone_doc
    interval: 21600 # 6 hours
    verbose: true
    source:
      registry: registry.hub.docker.com
      auth: {Token} # replace to your dockerhub token
    target:
      registry: {registry_address} # replace to your registry address
      auth: {Token} # replace to your registry token
      skip-tls-verify: true
    mappings:

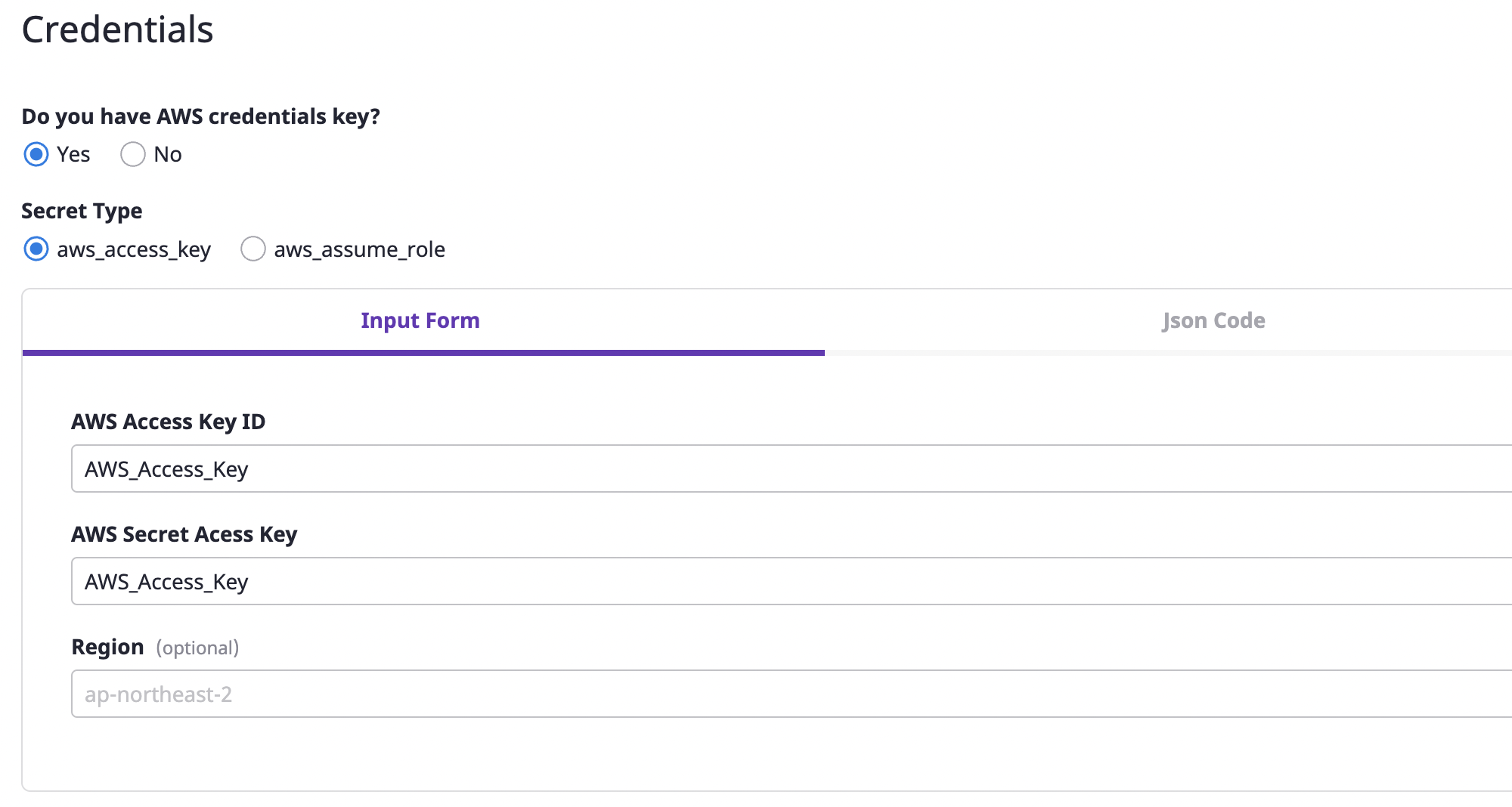

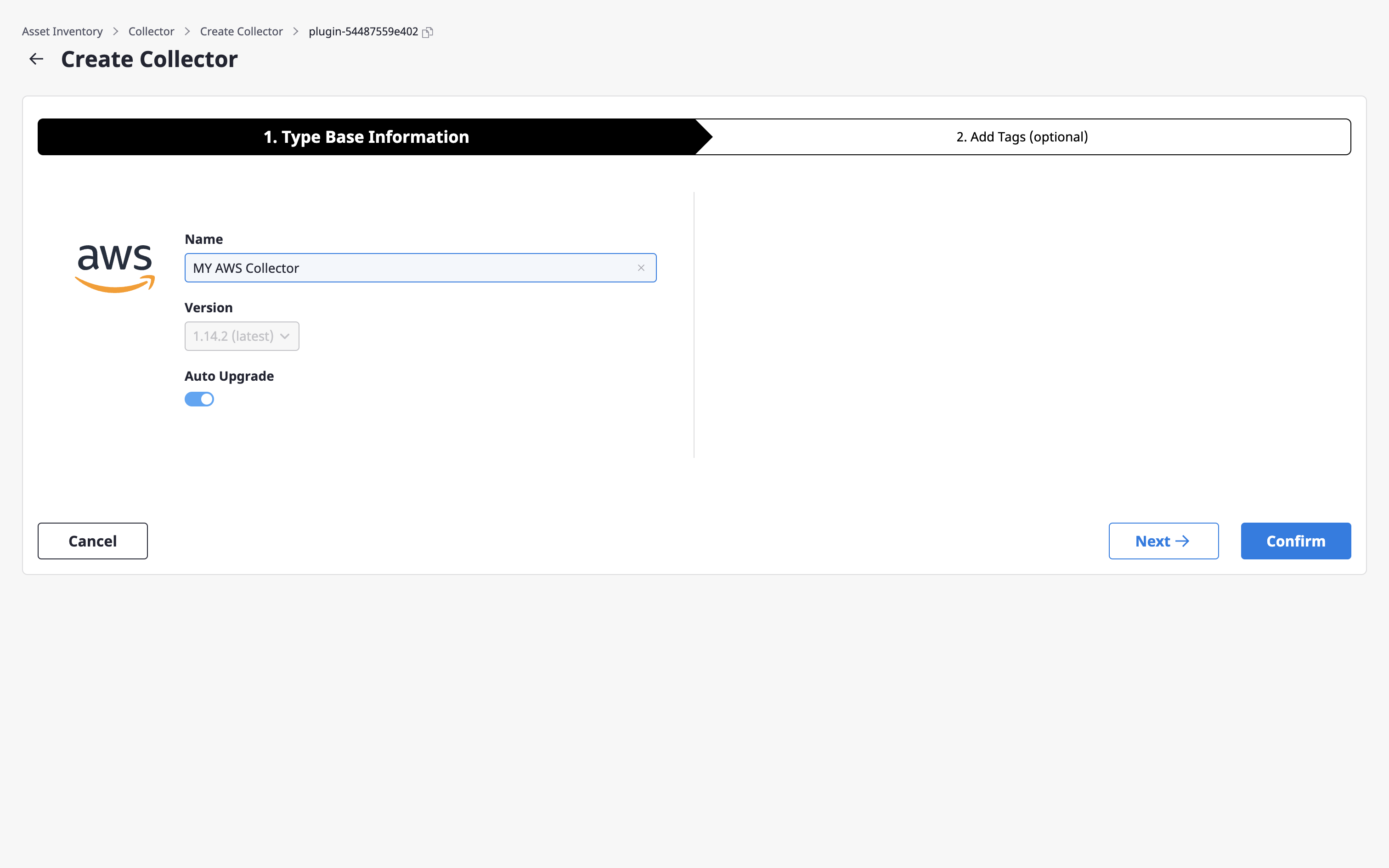

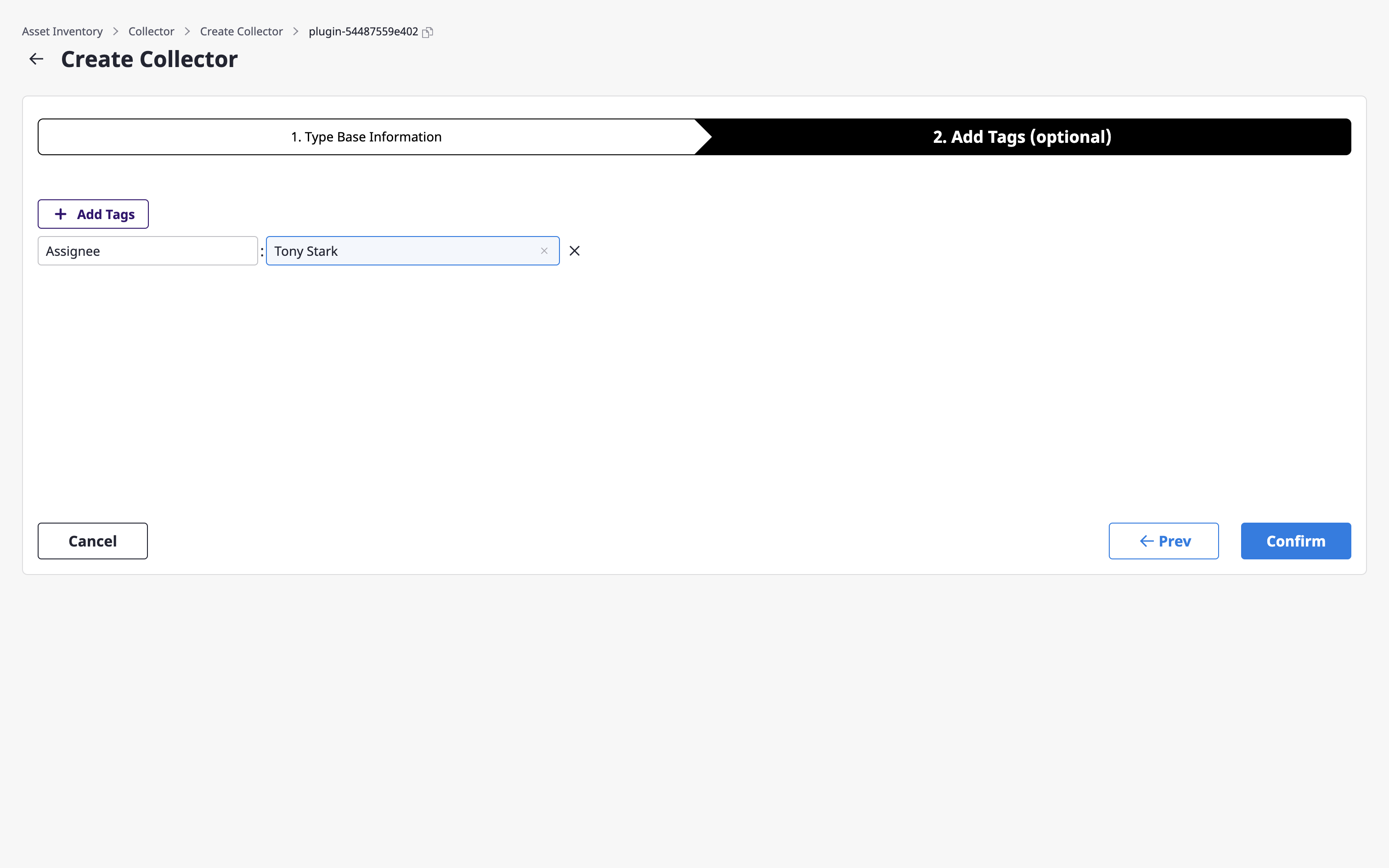

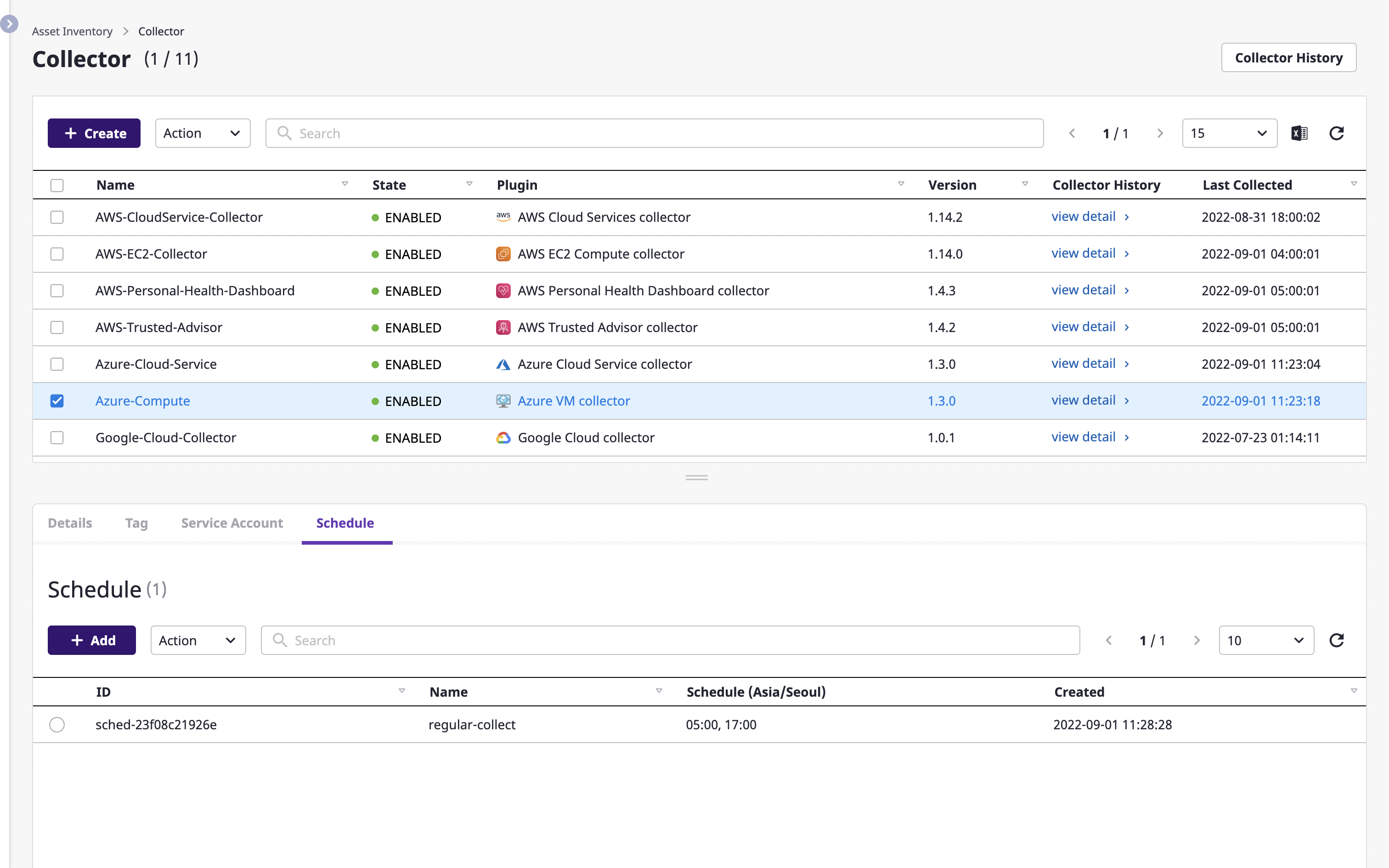

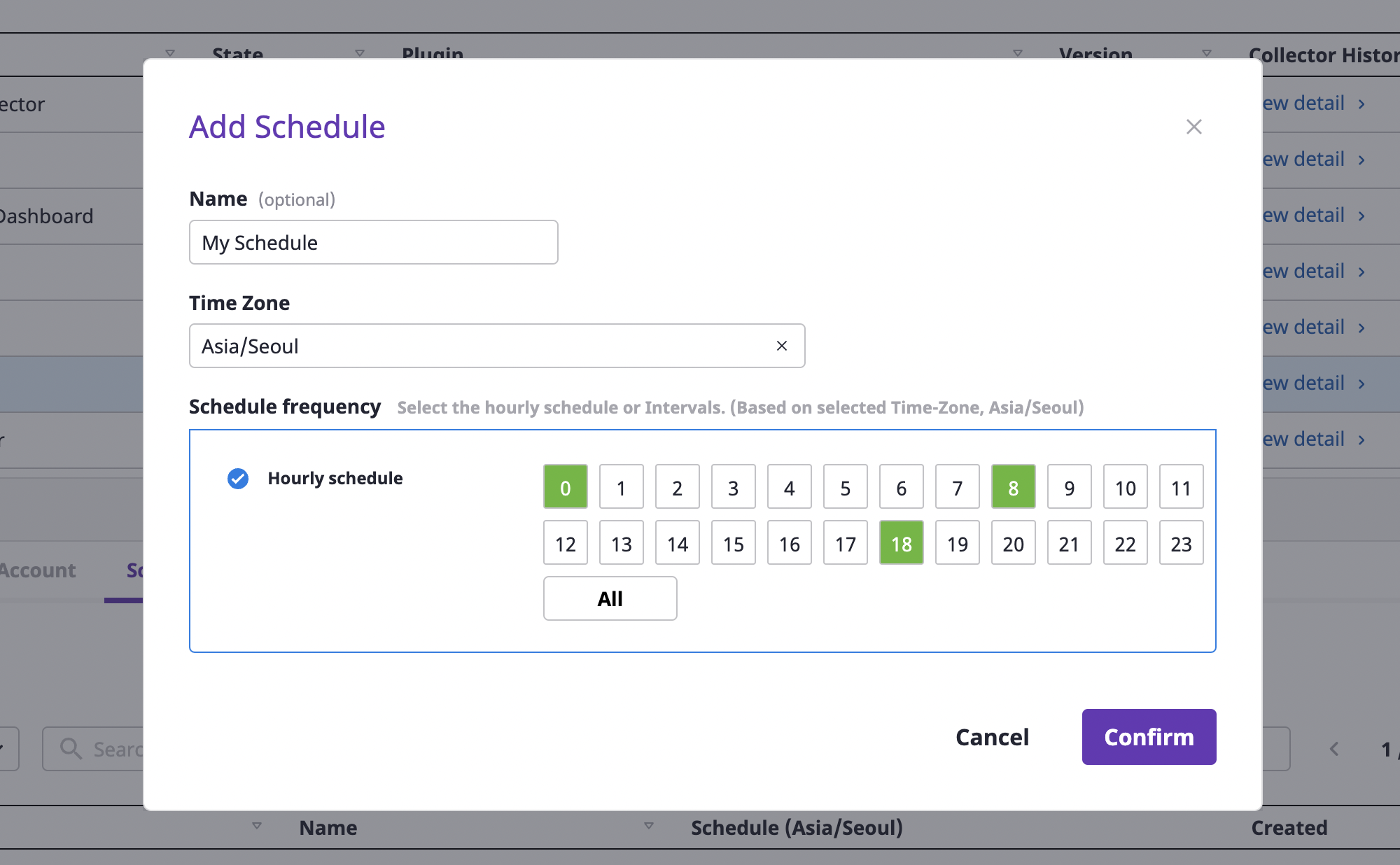

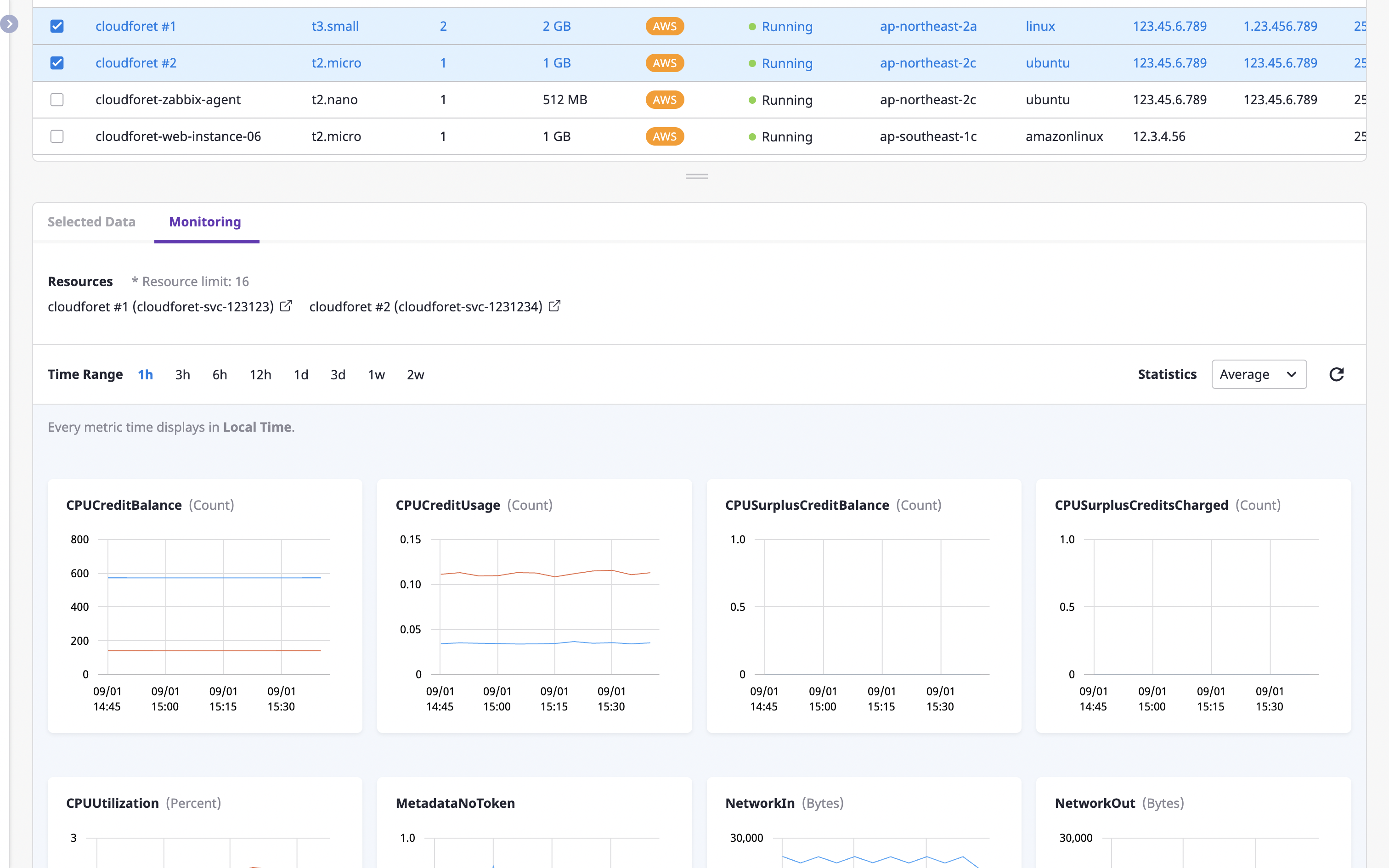

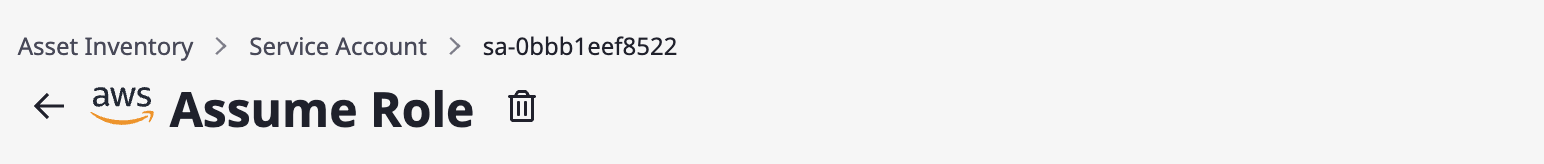

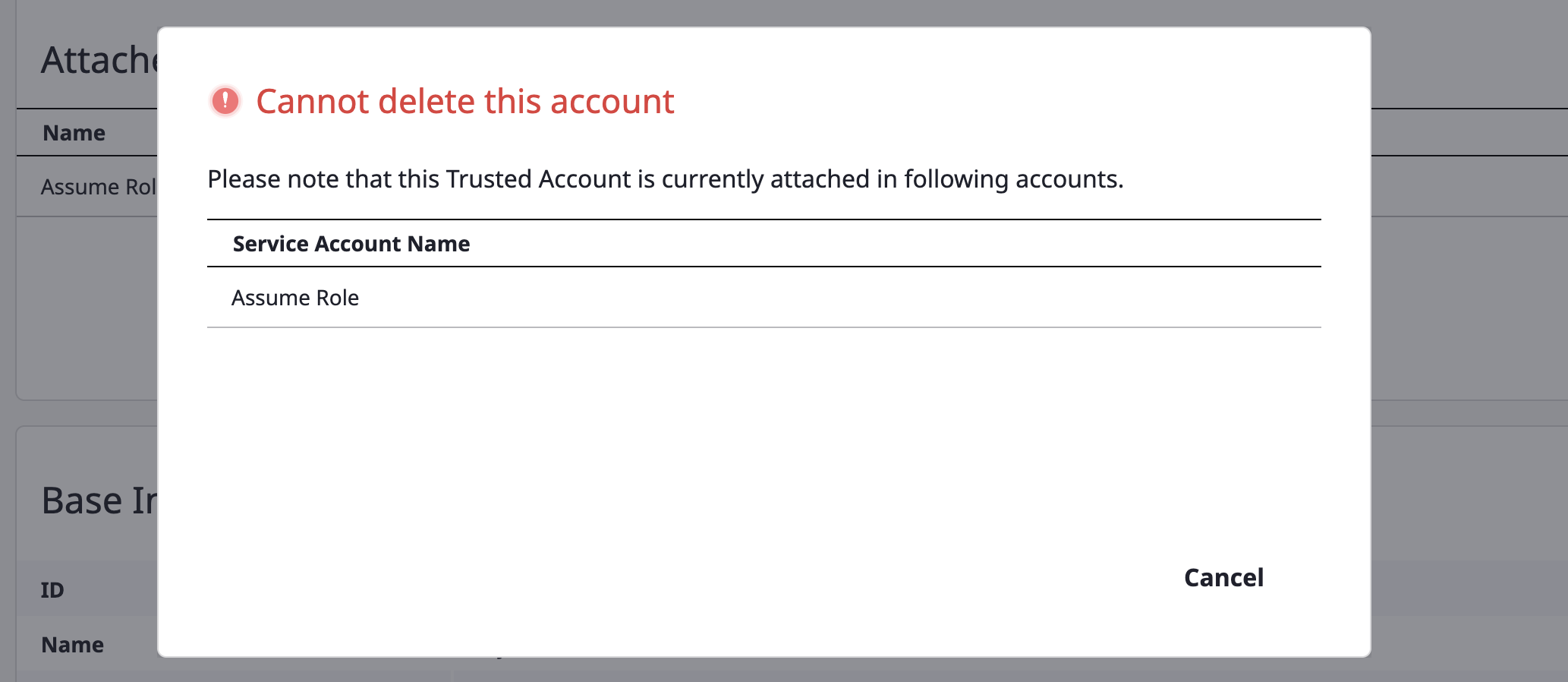

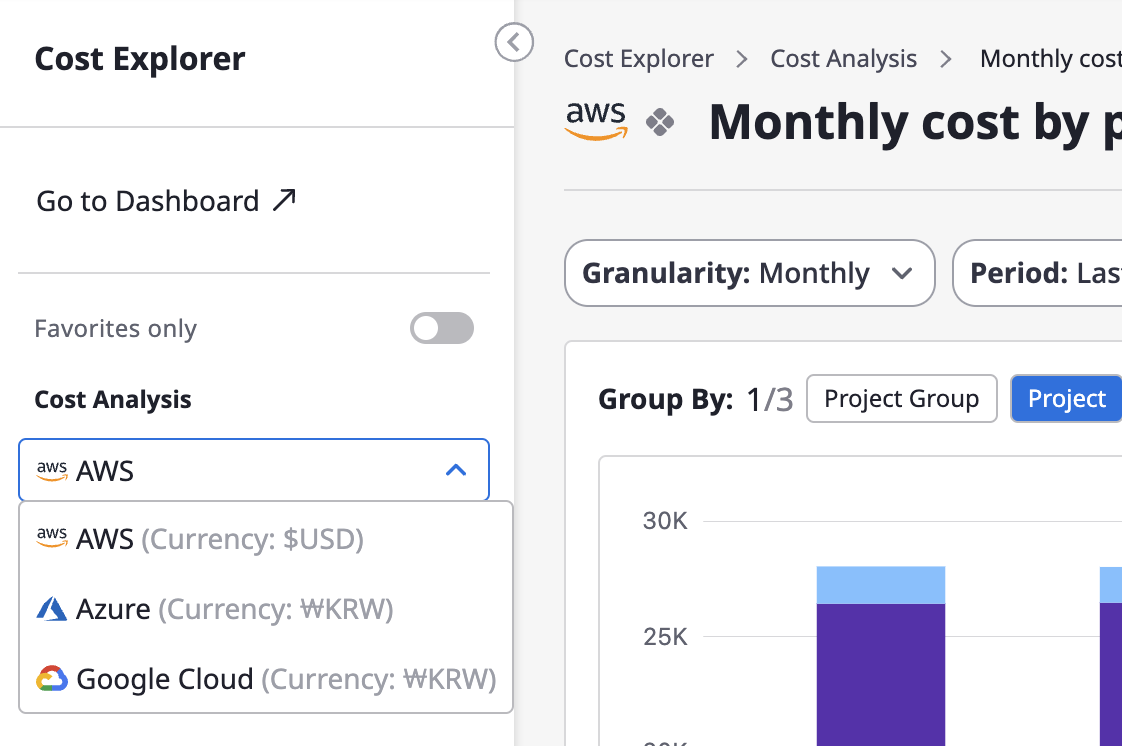

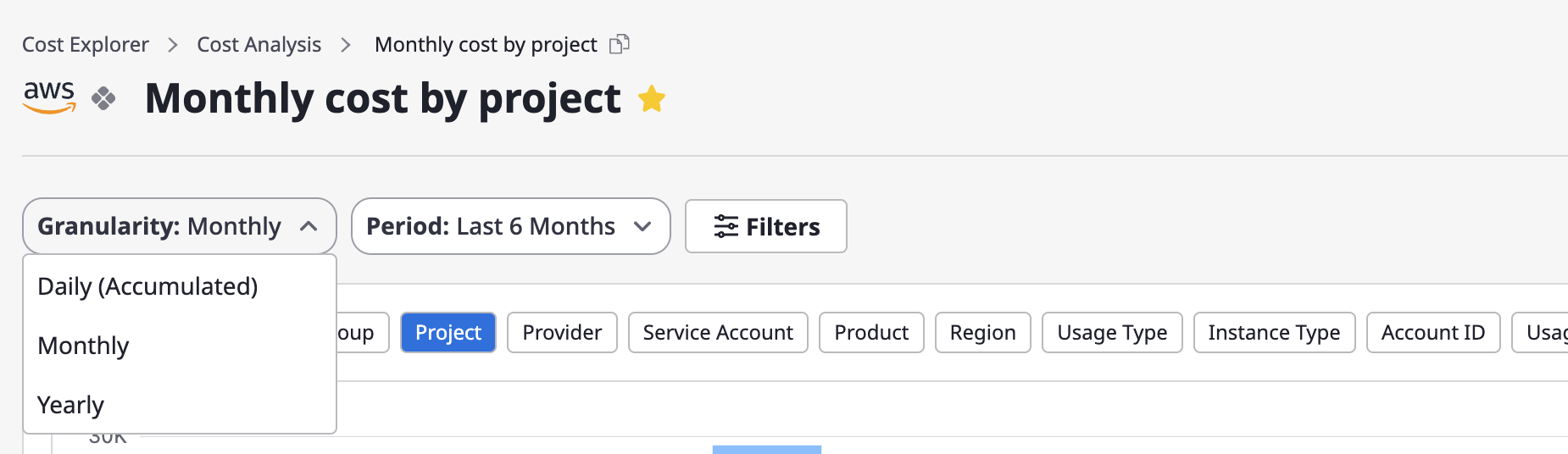

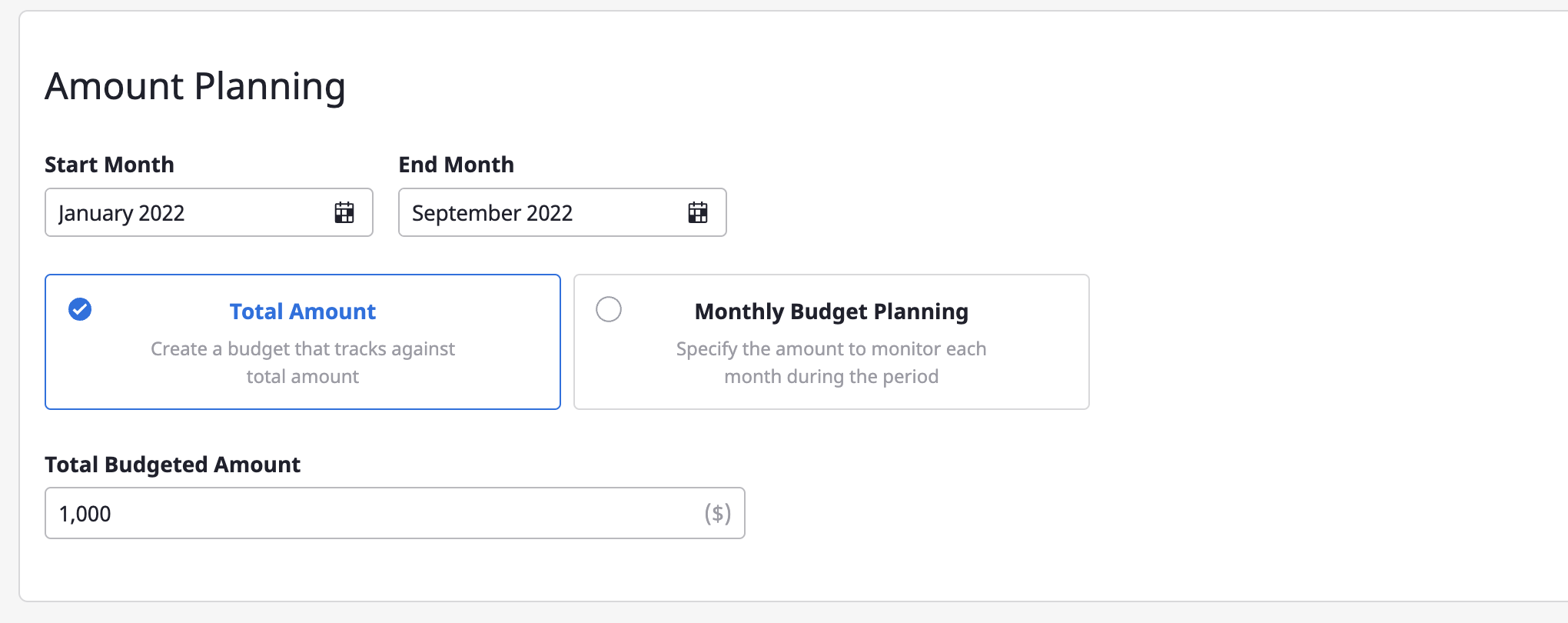

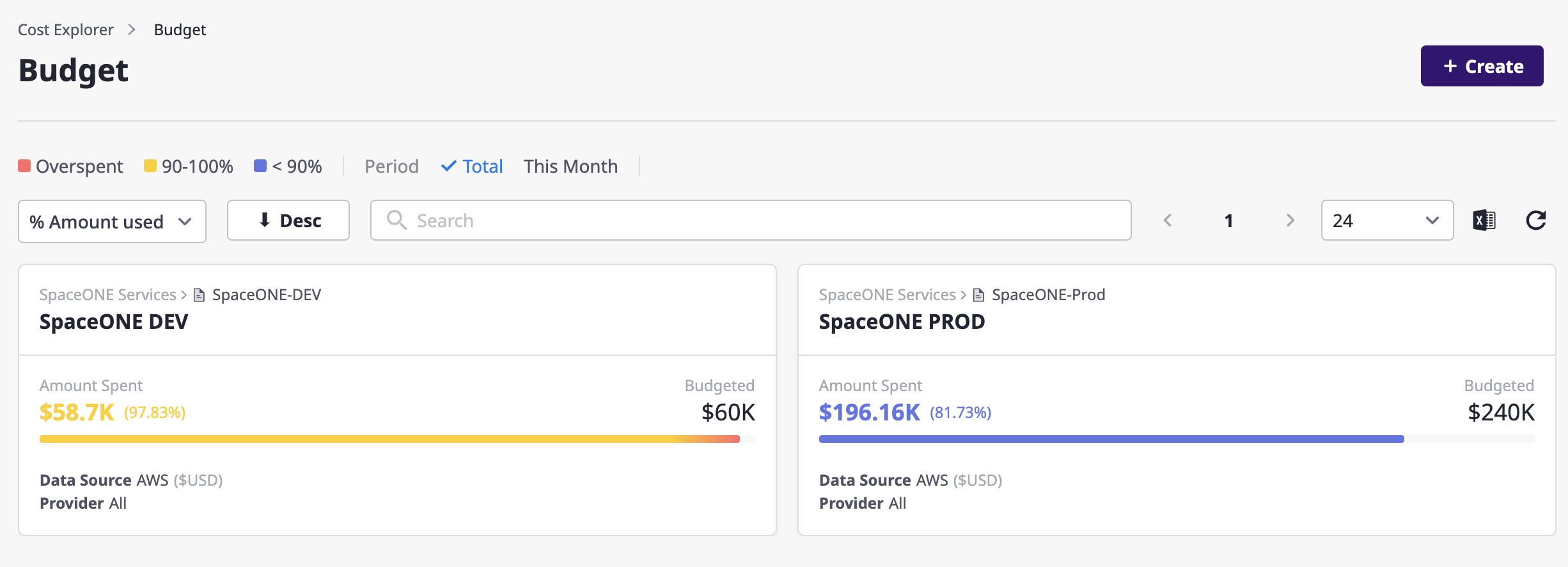

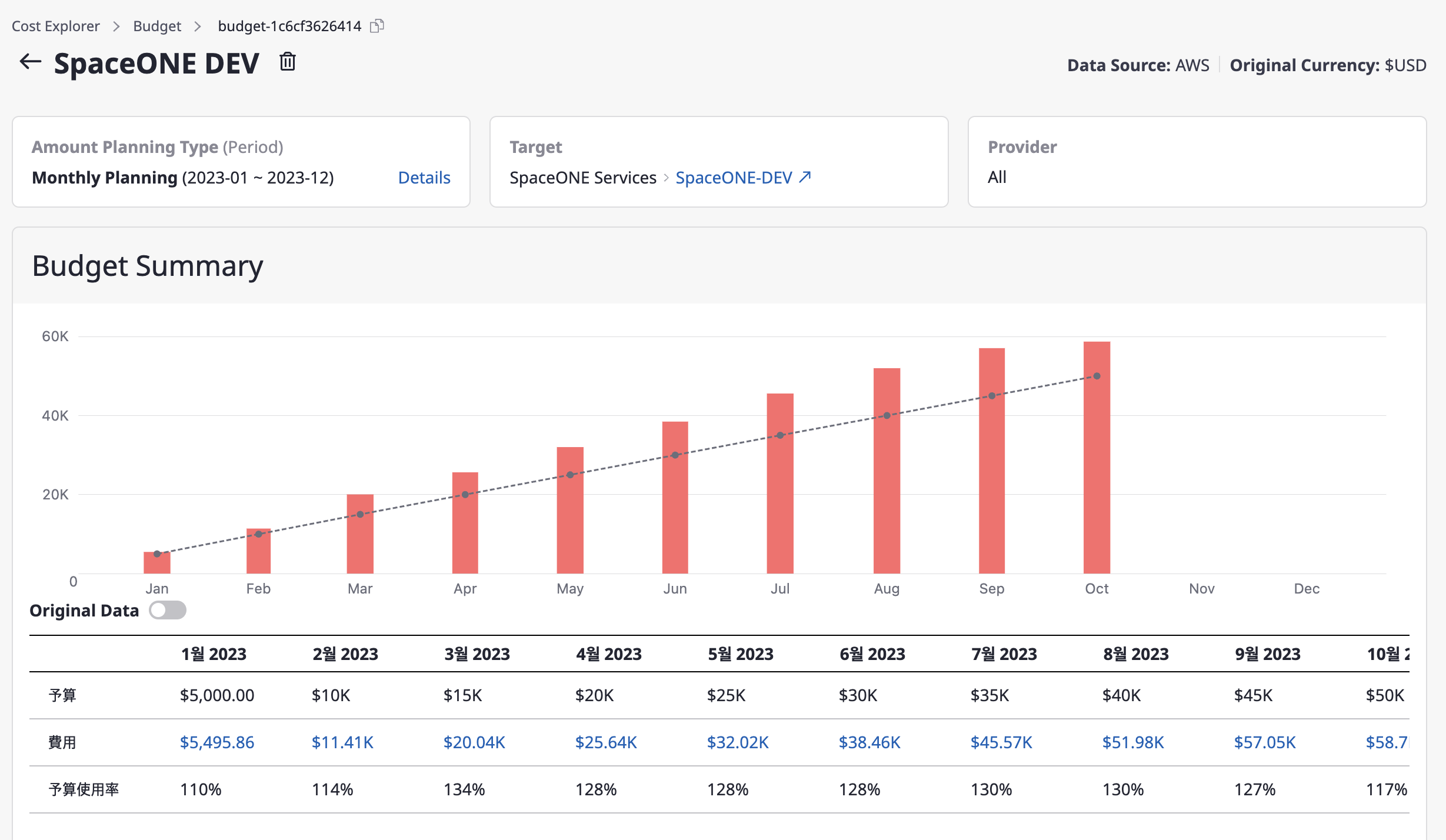

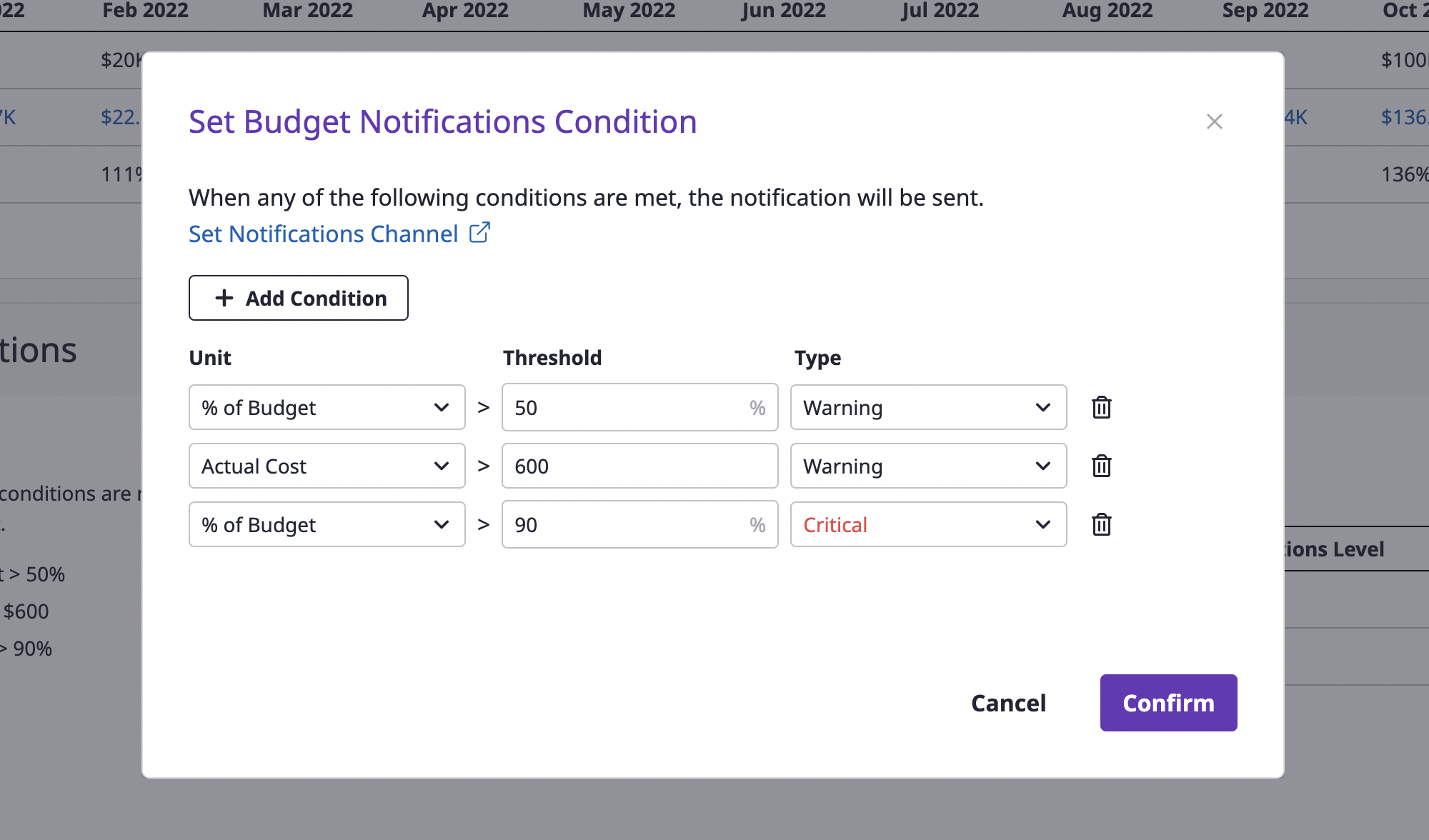

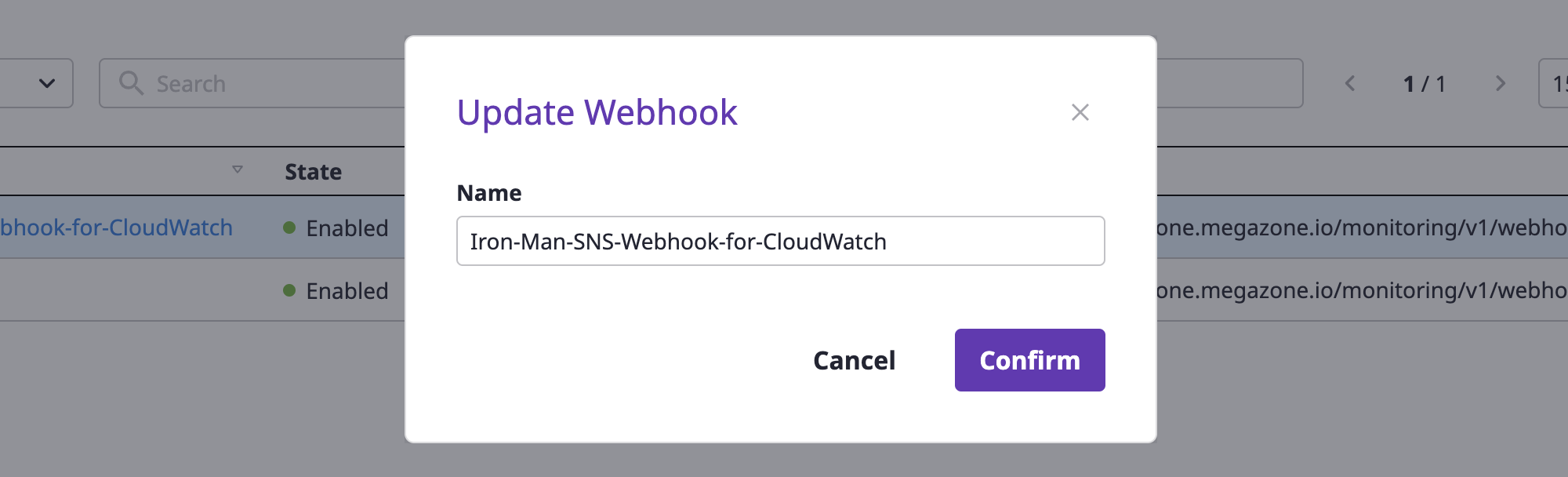

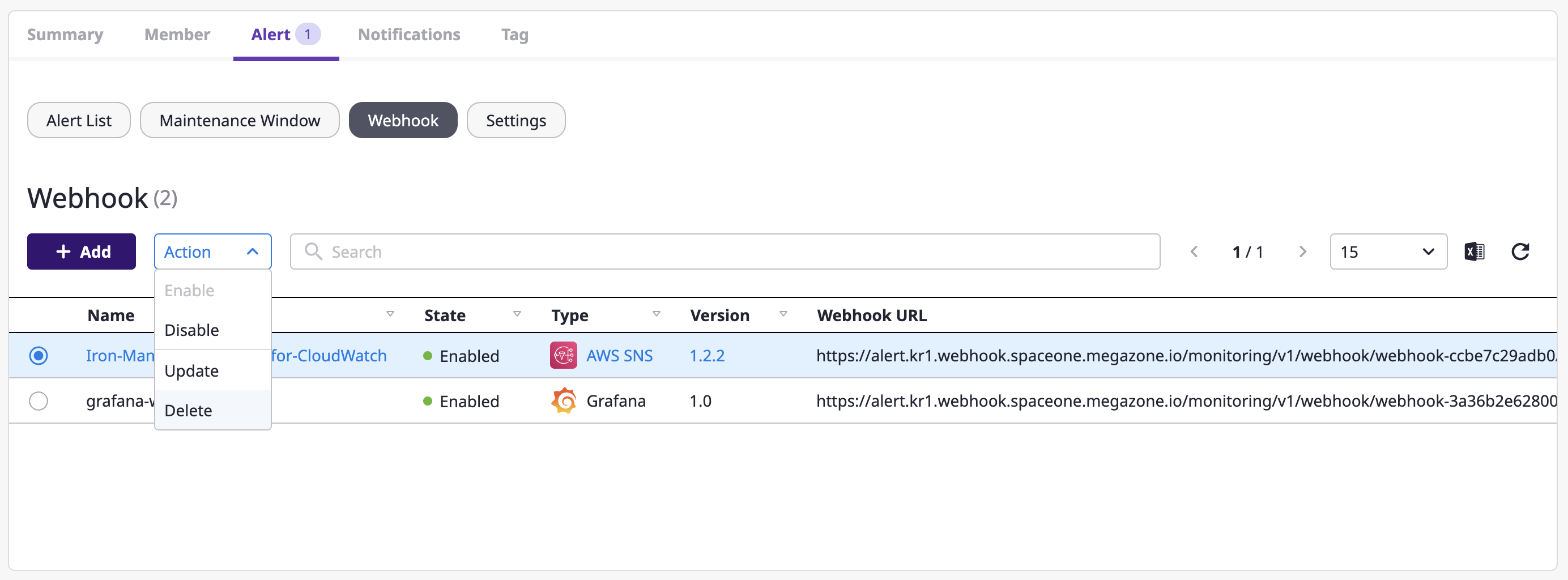

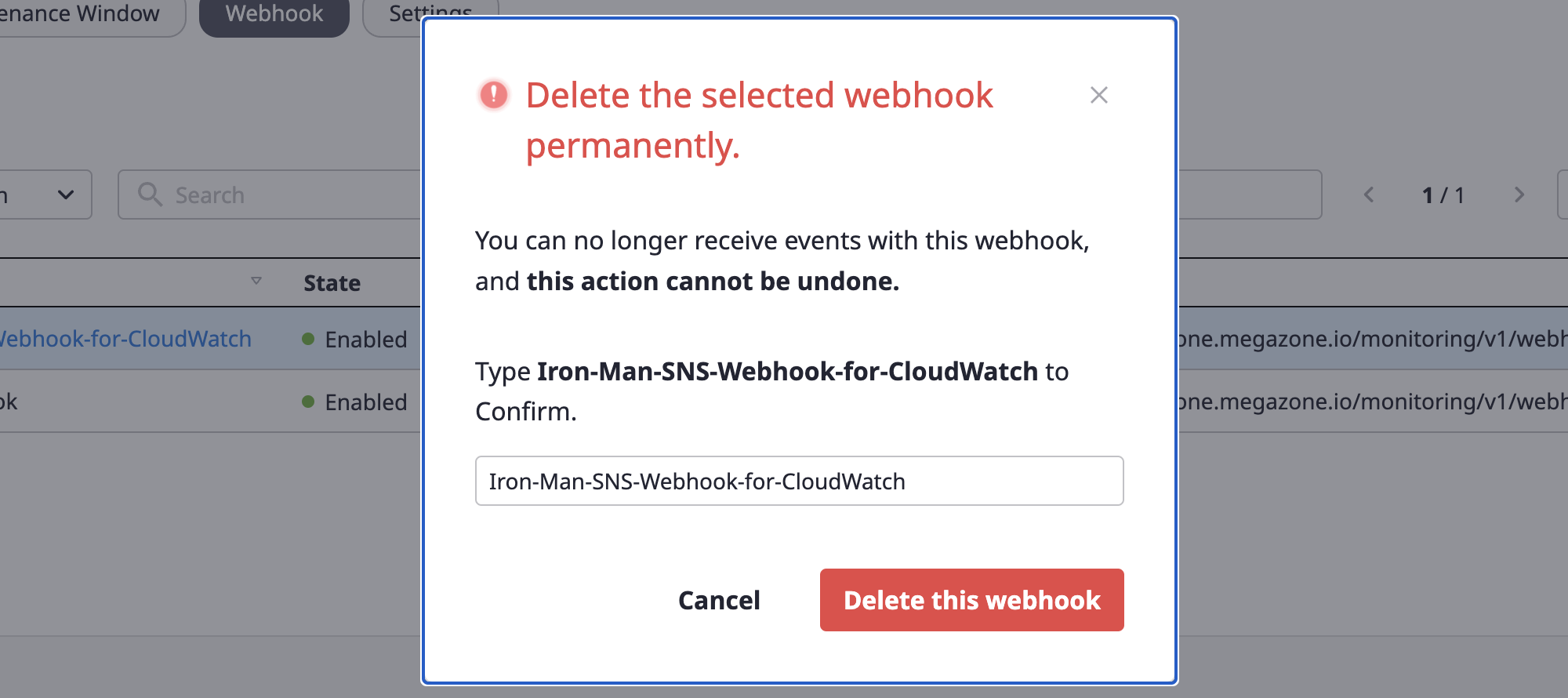

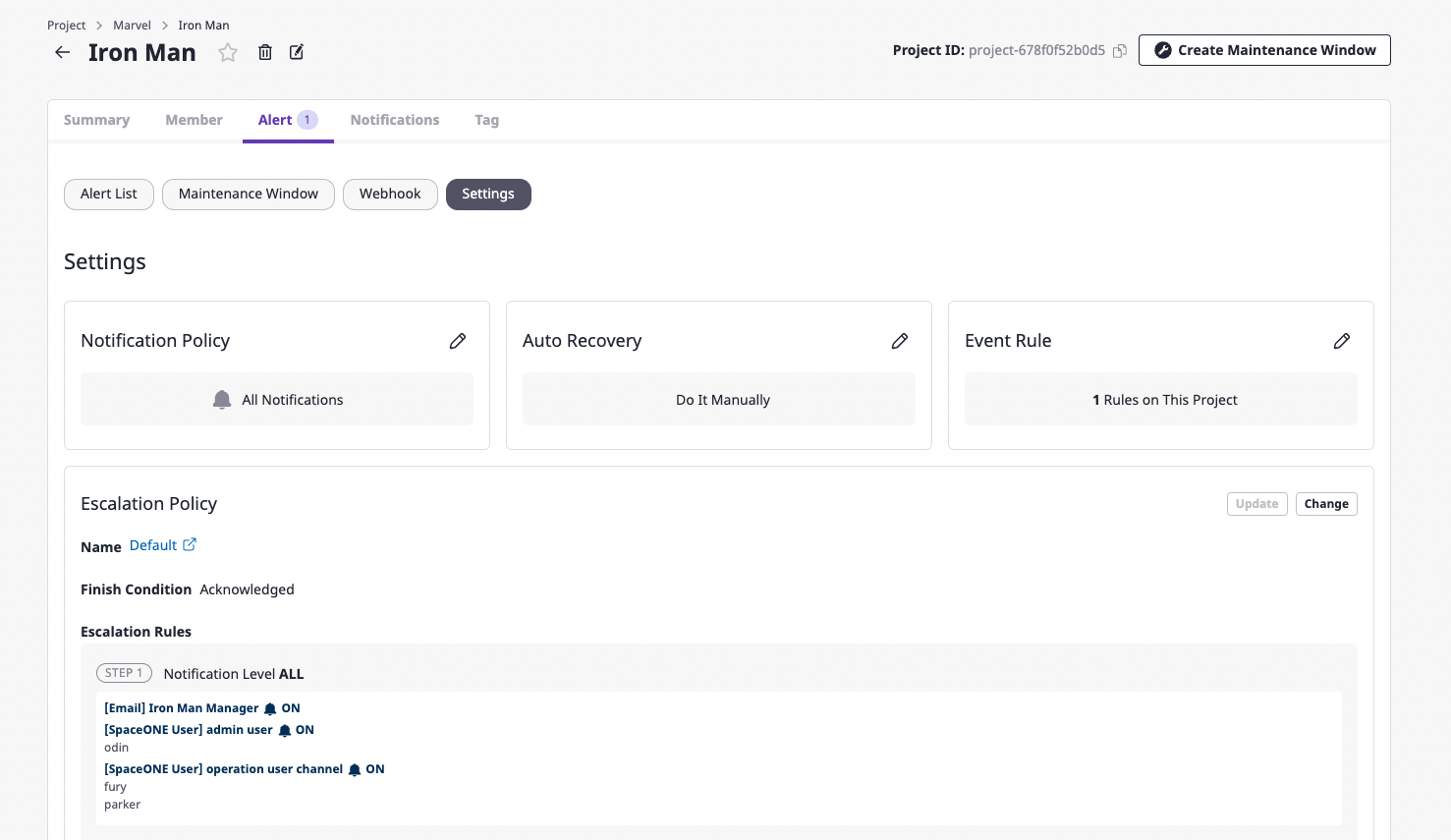

- from: spaceone/spacectl